NickF


Not sure if anyone else is experiencing this in BETA 2. I tried the first BETA and had the exact same issue, except that none of the VMs worked. I waited for BETA 2 before reporting, since some of the VMs work now, but not all. On some of my VMs I can't get to the VNC window, while on others it works fine. It's really odd behavior.

ixsystems.atlassian.net


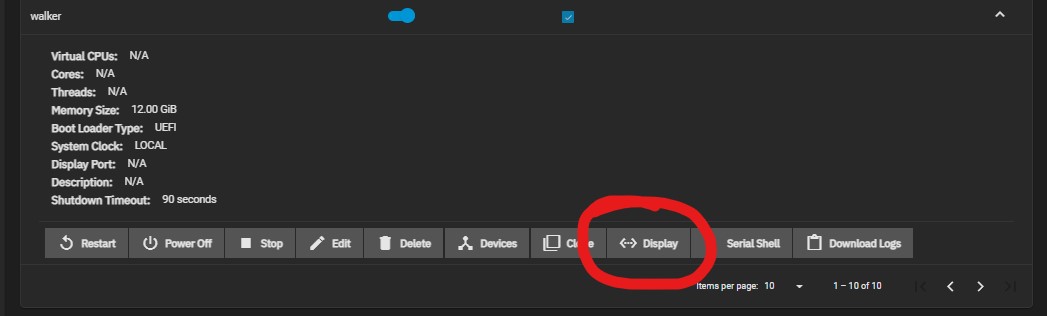

When I press this:

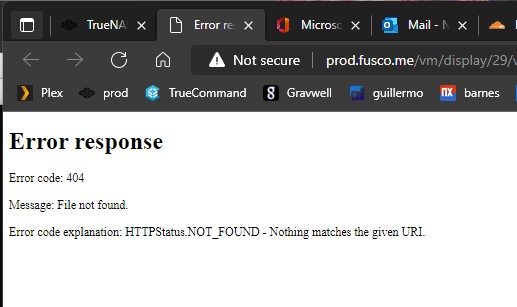

I get this:

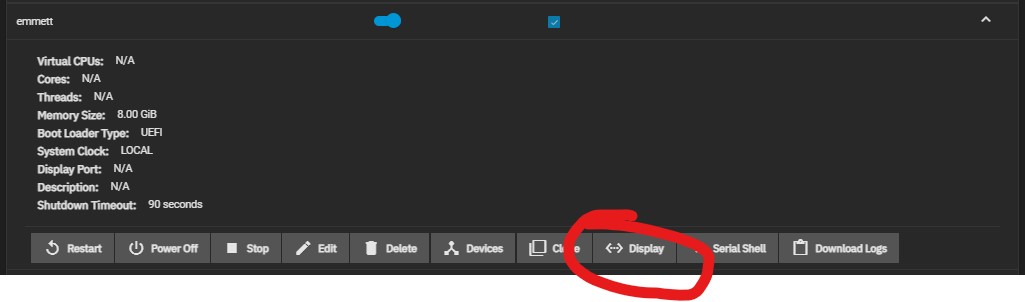

But when I press the same button on this one:

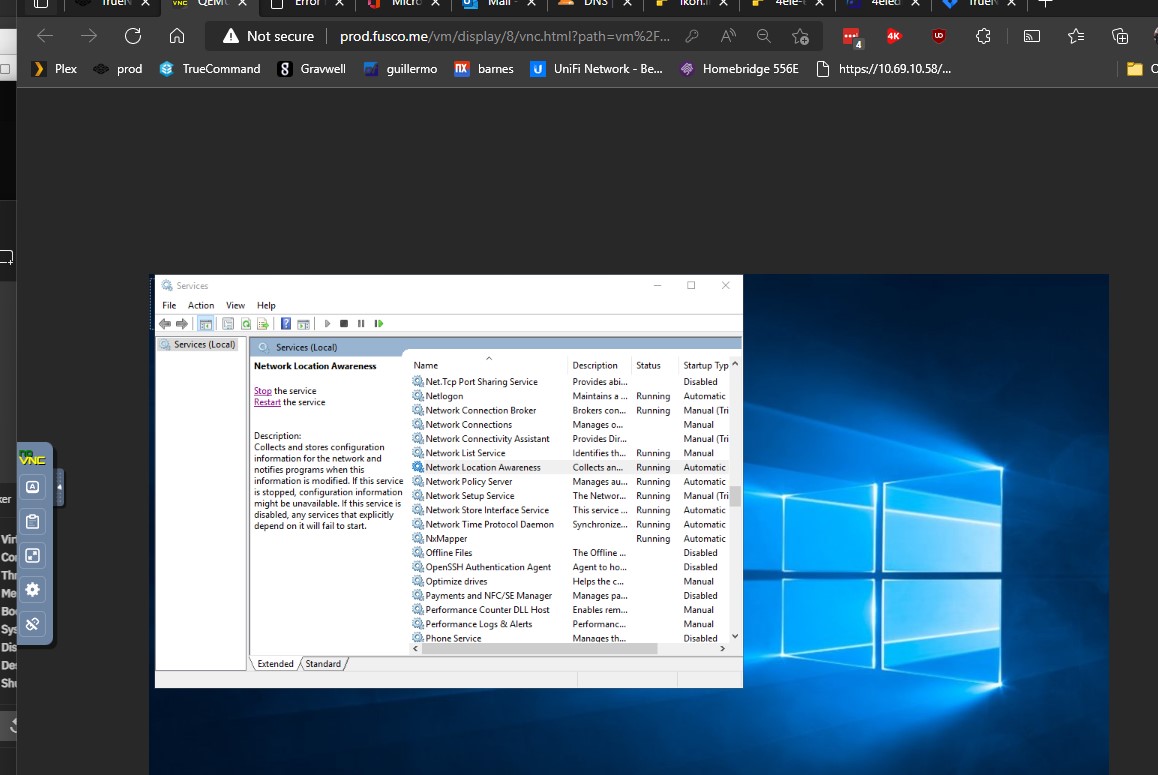

I get to the VM's console.

The problem goes away after reverting to TrueNAS-SCALE-22.02.3.

[NAS-118691] - iXsystems TrueNAS Jira

