Sysadmin#39

Cadet

- Joined

- May 29, 2022

- Messages

- 4

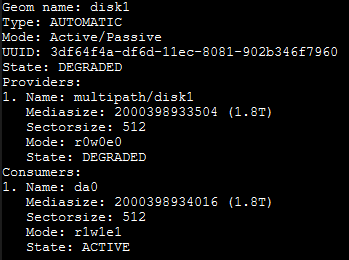

I'm rebuilding my 16-bay NAS with TrueNAS Core 12.0-U8.1, after running a previous version of FreeNAS on the same hardware. My issue is that one or more of the disks (all SATA, no SAS) are improperly detected as multipath disks and show a degraded status. Usually it's just da0, but sometimes also da1, da2, and da3. I can destroy the multipath/diskX from the CLI (while the disk is not in a pool) but it's automatically re-added after a reboot. My concern is that if I need to replace a drive one day, I won't be able to because of the multipath issue. Indeed, I already broke the pool once and wasn't able to detach and replace the disk.

Presently there is no data in the pool so I'll try anything. This is my first post so if I have neglected to include any critical information, please bear with me and help me to find any additional info needed.

Here's my setup:

SuperMicro 15-bay rackmount case

Motherboard: Gigabyte GA-970A-DS3 (rev 1)

CPU: AMD FX-6350

Memory: 16GB

Storage: 16 x 2TB on a RocketRaid 3540 HBA card with a backplane

Here's what I've learned and tried so far (multiple iterations) after referencing dozens of other posts.

- For each iteration, as necessary, I disable SMB, unconfigure Active Directory, destroy all datasets, and destroy the pool, all from the GUI.

- Clear the previous pool info from each drive with 'zpool labelclear daX'. This always results in an 'Operation not permitted' error, so if I set debugflags to 16, it succeeds.

- list and destroy any detected multipaths

Rinse and repeat for any other listed mutlipaths, but usually it's only disk1

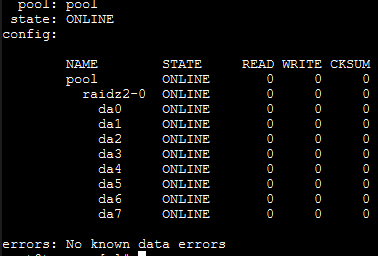

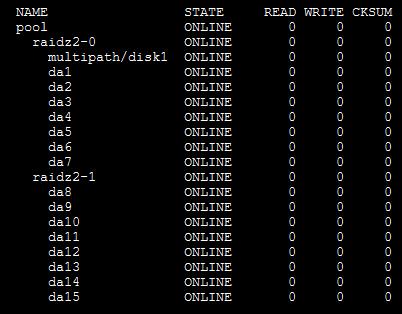

- Create the pool. I've settled on 2 x 8-disk vdevs in a raid z2 configuration.

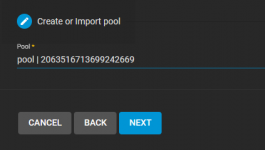

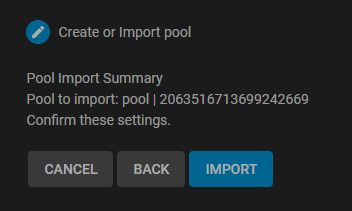

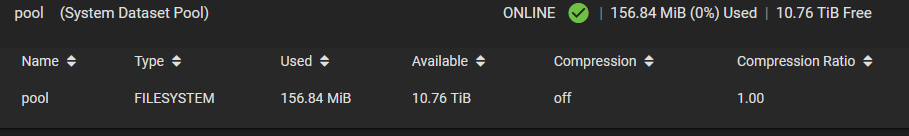

- 'zpool status' shows the pool is correctly created, with no multipaths listed. However, since I've created the pool in the CLI a reboot is needed before I can import the pool via the GUI to configure further. If you're wondering why I don't create the pool in the GUI in the first place, it's because the gui doesn't show any disks. Well, sometimes it'll show one or two disks. This is another topic for discussion that needs no attention here. For now let's just accept that I can reliably create the pool from the CLI and import it into the GUI upon the next reboot.

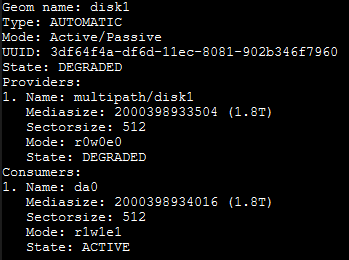

- Following a reboot and importing the pool into the GUI, da0 (and sometimes also da1-3) are listed as a multipath disk. However, I am able to configure the system further, adding it to Active Directory, configuring datasets, samba, and so on.

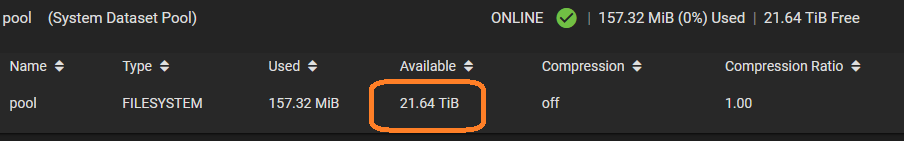

I can even expand the pool by adding the other 8 disks, though I didn't necessarily do this on each iteration.

Everything appears to be working normally save for da0 being improperly listed as a multipath disk.

If I attempt to destroy the multipath now, the array shows as Degraded, and from here I was not able to remove and reimport the disk to restore the pool to a healthy state. The only thing that works is to destroy and recreate the pool. Obviously this is fine for now but isn't viable once I have data.

One post suggested that the multipath data is saved on the disk, much like the zfs pool information, and that it needs to be cleared with dd. I've tried this between destroying and recreating the pool, but there was no change.

www.truenas.com

www.truenas.com

The gmultipath man page explained that you could load gmultipath at boot time with

I've seen posts that suggest using tunables to disable gmultipath but can't find any information that actually explains how to do it. The gmultipath man page lists two variables, kern.geom.multipath.debug and kern.geom.multipath.exclusive, but neither seems appropriate for disabling gmultipath. Reply #2 at

www.truenas.com

www.truenas.com

Here are some other posts I've referenced but they did not help my situation.

Unload gmultipath kernel module at startup

www.truenas.com

www.truenas.com

Again, this is my first post so please bear with me and provide detailed instructions to help me gather any additional info you may wish to see. Thanks in advance!

Presently there is no data in the pool so I'll try anything. This is my first post so if I have neglected to include any critical information, please bear with me and help me to find any additional info needed.

Here's my setup:

SuperMicro 15-bay rackmount case

Motherboard: Gigabyte GA-970A-DS3 (rev 1)

CPU: AMD FX-6350

Memory: 16GB

Storage: 16 x 2TB on a RocketRaid 3540 HBA card with a backplane

Here's what I've learned and tried so far (multiple iterations) after referencing dozens of other posts.

- For each iteration, as necessary, I disable SMB, unconfigure Active Directory, destroy all datasets, and destroy the pool, all from the GUI.

- Clear the previous pool info from each drive with 'zpool labelclear daX'. This always fails with an 'Operation not permitted' error unless I first set kern.geom.debugflags to 16 (0x10), after which it succeeds.

sysctl kern.geom.debugflags=0x10

zpool labelclear daX

- List and destroy any detected multipaths.

gmultipath list

gmultipath destroy disk1

Rinse and repeat for any other listed multipaths, but usually it's only disk1.
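The label-clearing and multipath-destroy steps above could be scripted. What follows is a minimal dry-run sketch, not a tested procedure: it only prints the commands so they can be reviewed before being run by hand. The function names `gen_clear_cmds` and `gen_destroy_cmds` are hypothetical, the disk count of 16 comes from the setup above, resetting `kern.geom.debugflags` to 0 afterwards assumes 0 was the original value, and the parsing assumes `gmultipath status` prints a header line followed by rows that start with `multipath/<name>`.

```shell
#!/bin/sh
# Dry run: print (do not execute) the cleanup commands described above.

# Print the label-clearing commands for da0 .. da(COUNT-1).
gen_clear_cmds() {
    count=$1
    echo "sysctl kern.geom.debugflags=0x10"
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "zpool labelclear da$i"
        i=$((i + 1))
    done
    # Assumption: 0 was the value before the change.
    echo "sysctl kern.geom.debugflags=0"
}

# Read `gmultipath status` output on stdin and print one destroy
# command per multipath device. Assumes rows look like
# "multipath/disk1  DEGRADED  da0 (ACTIVE)"; the header row is skipped
# because it does not start with "multipath/".
gen_destroy_cmds() {
    awk '$1 ~ /^multipath\// { sub(/^multipath\//, "", $1); print "gmultipath destroy " $1 }'
}

gen_clear_cmds 16
# On the NAS itself: gmultipath status | gen_destroy_cmds
```

Printing instead of executing keeps the sketch harmless on a machine that holds data; the printed lines can be pasted into a shell once they look right.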

- Create the pool. I've settled on 2 x 8-disk vdevs in a RAIDZ2 configuration.

zpool create pool raidz2 da0 da1 da2 da3 da4 da5 da6 da7

- 'zpool status' shows the pool is correctly created, with no multipaths listed. However, since I created the pool from the CLI, a reboot is needed before I can import it via the GUI to configure it further. If you're wondering why I don't create the pool in the GUI in the first place, it's because the GUI doesn't show any disks. Well, sometimes it'll show one or two disks. This is another topic for discussion that needs no attention here. For now let's just accept that I can reliably create the pool from the CLI and import it into the GUI upon the next reboot.

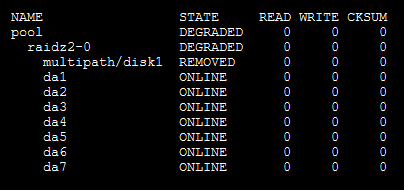

zpool status

- Following a reboot and importing the pool into the GUI, da0 (and sometimes also da1-3) is listed as a multipath disk. However, I am able to configure the system further, adding it to Active Directory, configuring datasets, Samba, and so on.

I can even expand the pool by adding the other 8 disks, though I didn't necessarily do this on each iteration.

zpool add pool raidz2 da8 da9 da10 da11 da12 da13 da14 da15

zpool status

Everything appears to be working normally save for da0 being improperly listed as a multipath disk.
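For reference, the create-then-add sequence above yields the same layout as a single `zpool create` that names two `raidz2` groups. The sketch below only prints that command and works out the approximate usable capacity. `gen_pool_cmd` is a hypothetical helper, and the capacity figure is a rough estimate that ignores ZFS metadata and padding overhead.

```shell
#!/bin/sh
# Print a zpool create command for VDEVS raidz2 vdevs of WIDTH disks each,
# using device names da0, da1, ... (dry run, nothing is executed).
gen_pool_cmd() {
    pool=$1; vdevs=$2; width=$3
    cmd="zpool create $pool"
    v=0
    while [ "$v" -lt "$vdevs" ]; do
        cmd="$cmd raidz2"
        d=0
        while [ "$d" -lt "$width" ]; do
            cmd="$cmd da$((v * width + d))"
            d=$((d + 1))
        done
        v=$((v + 1))
    done
    echo "$cmd"
}

gen_pool_cmd pool 2 8

# Rough usable capacity: each raidz2 vdev gives up two disks to parity.
# 2 vdevs x (8 - 2) data disks x 2 TB per disk
echo "$((2 * (8 - 2) * 2)) TB"
```

So the 16 x 2TB disks give roughly 24 TB of usable space in this layout, with each vdev able to lose two disks.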

gmultipath list

If I attempt to destroy the multipath now, the array shows as Degraded, and from here I was not able to remove and reimport the disk to restore the pool to a healthy state. The only thing that works is to destroy and recreate the pool. Obviously this is fine for now but isn't viable once I have data.

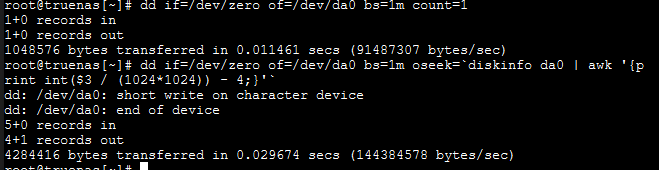

gmultipath destroy disk1

zpool status

One post suggested that the multipath data is saved on the disk, much like the ZFS pool information, and that it needs to be cleared with dd. I've tried this between destroying and recreating the pool, but there was no change.

dd if=/dev/zero of=/dev/da0 bs=1m count=1

dd if=/dev/zero of=/dev/da0 bs=1m oseek=`diskinfo da0 | awk '{print int($3 / (1024*1024)) - 4;}'`

Operation not permitted / HDD-Trouble

Hello Forum! I got going with FreeNAS some time ago. First having it tested on a Proxmox now it has its own Hardware in my server-rack. I have two 1TB Harddisks (Seagate an Hitachi). The OS is running on a 4GB SD-Card. The System is mostly going to be used for FTP (archiving Install-Images...
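To make the second dd command above easier to follow: the third field of `diskinfo da0` is the media size in bytes, so the awk expression converts it to whole MiB and subtracts 4, and dd then writes zeros from that offset to the end of the disk. Here is a sketch of the arithmetic, assuming a hypothetical 2 TB disk of 2000398934016 bytes (3907029168 sectors of 512 bytes). The sample diskinfo line is illustrative, not taken from this system, and `oseek_mib` is a made-up name.

```shell
#!/bin/sh
# Same awk expression as in the dd command, fed a sample diskinfo line
# (device, sector size, size in bytes, size in sectors).
oseek_mib() {
    awk '{print int($3 / (1024*1024)) - 4;}'
}

echo "da0 512 2000398934016 3907029168" | oseek_mib   # prints 1907725
```

With bs=1m, oseek=1907725 starts the write a little over 4 MiB before the end of such a disk. As far as I understand it, that range includes the last sector, which is where gmultipath keeps its on-disk metadata.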

The gmultipath man page explained that you could load gmultipath at boot time with geom_multipath_load="YES", so I figured I could prevent it from loading with geom_multipath_load="NO", which I added to the end of /boot/loader.conf. Again, there was no change: after the next reboot the multipath was listed again.

I've seen posts that suggest using tunables to disable gmultipath but can't find any information that actually explains how to do it. The gmultipath man page lists two variables, kern.geom.multipath.debug and kern.geom.multipath.exclusive, but neither seems appropriate for disabling gmultipath. Reply #2 at

Multipath Corrupted Multiple Disks and Possibly Destroyed Pool - Please Help

THE PROBLEM: I had a perfectly running volume for 3+ years that may be lost due to Sata drives being introduced to a brand new Supermicro SAS3 multipath, dual expander backplane environment. Any assistance that can be offered would be greatly appreciated. OLD SERVER: FreeNAS-11.1-U6 Norco 4224...

Here are some other posts I've referenced but they did not help my situation.

Unload gmultipath kernel module at startup


This should be simple, but I am trying to unload the gmultipath from bootup as I don't want to deal with multipath. I have both SAS and SATA drives and mixed support for multipath so I want to disable it. If anyone could let me know what the tunable is it would be super helpful. Thanks

Again, this is my first post so please bear with me and provide detailed instructions to help me gather any additional info you may wish to see. Thanks in advance!