dbrannon79

Dabbler

- Joined

- Oct 21, 2022

- Messages

- 32

Hello all, I just joined the forum, but I have been lurking for a while and finding answers to various things here. I recently came across an issue after upgrading from CORE to SCALE.

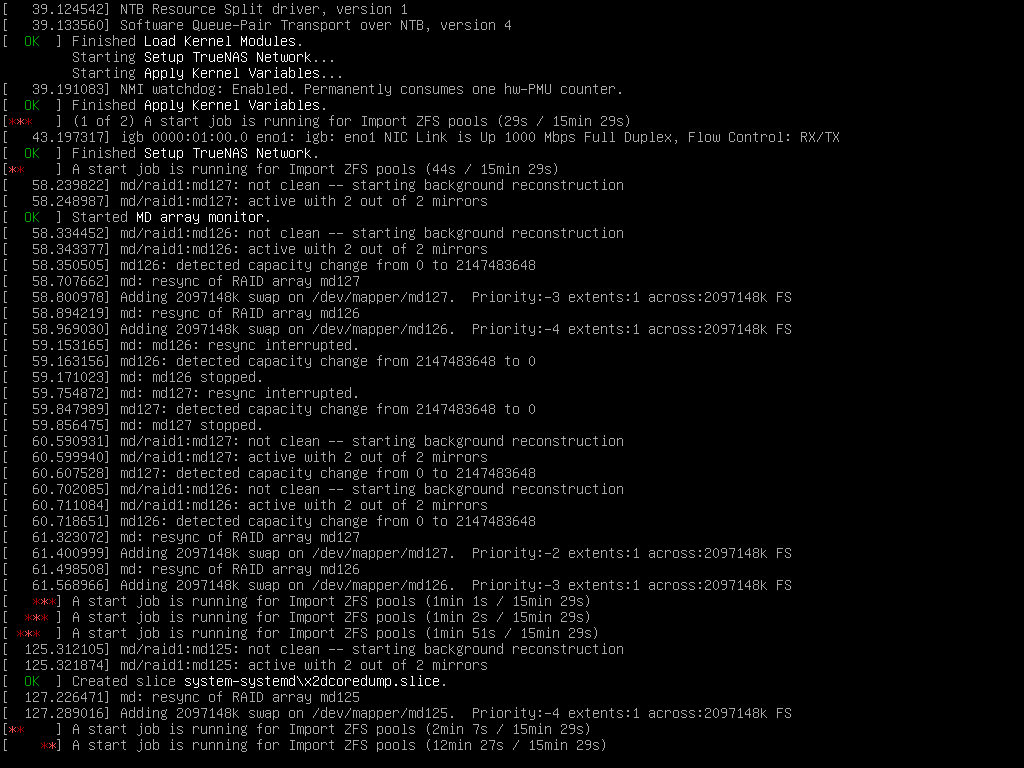

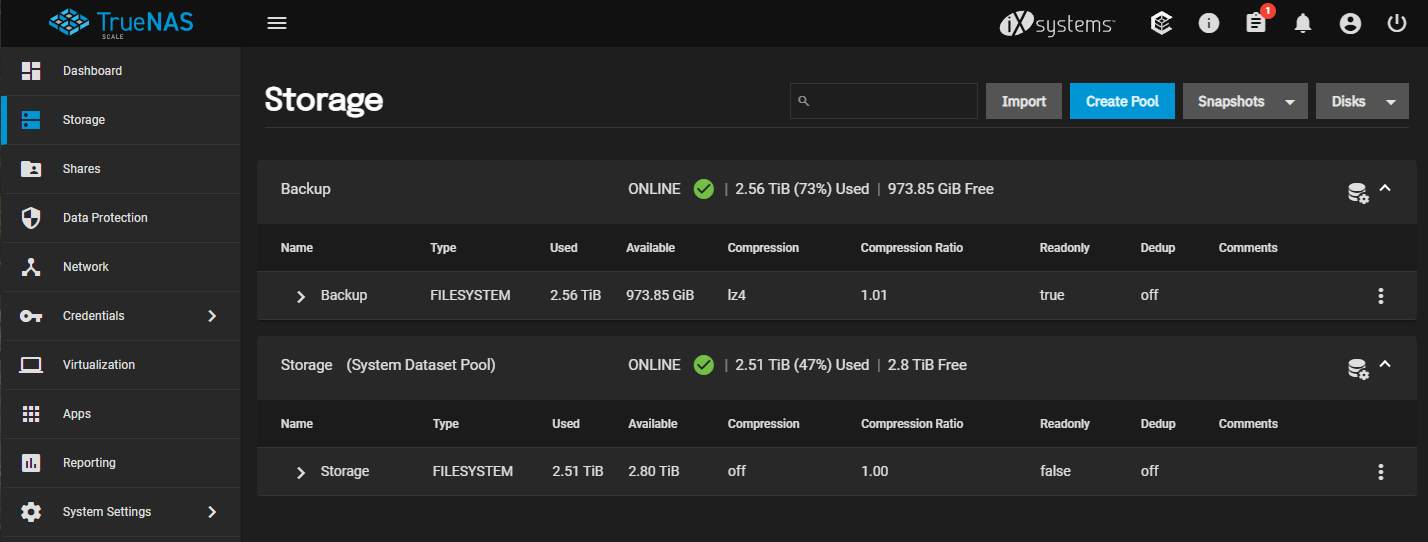

On every boot, I see messages on the server saying that my RAID is not clean and that it is starting background reconstruction, for both pools. This always runs for 15 minutes, but once the system has booted and I can access the web GUI, all pools look good and show nothing wrong. I have manually run SMART tests on each drive, and everything seems to pass with flying colors as far as I can tell.

After a few days of seeing this and searching online only to come up empty, I decided to wipe the boot SSD, do a fresh install, and then upload my saved configuration.

Upon reboot, the same messages came back. I have attached a couple of screenshots and a text file containing the boot log that I copied and pasted, in hopes that there is some kind of error in the config that I am missing.

I have two pools in my server, both set up as RAIDZ1. The pool named Storage has four 3 TB SAS drives with one hot spare; the other, named Backup, has three 2 TB SATA drives in RAIDZ1 with no spare. There is also a replication task that runs every so often from my main Storage pool to the Backup pool to help save me from losing any data.

I'm hopeful some of you can help me figure this out.
