winnielinnie

MVP | Joined: Oct 22, 2019 | Messages: 3,641

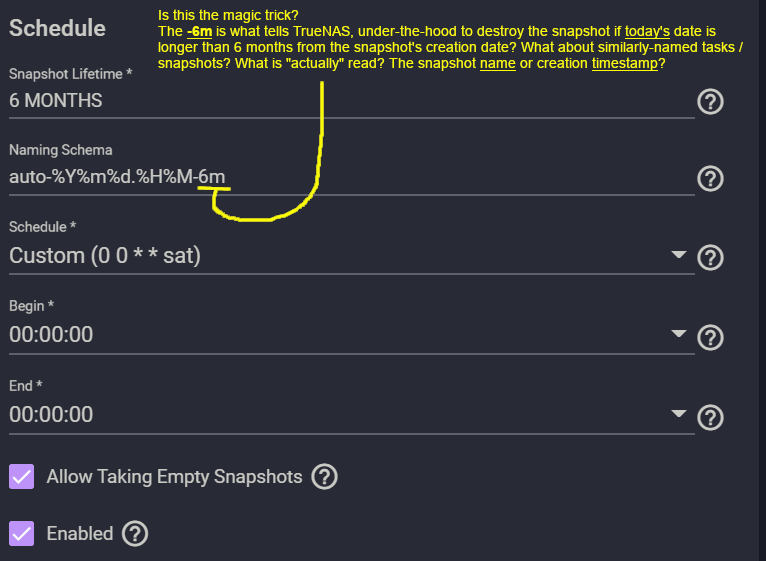

I could never find a definitive answer to this particular question. What exactly runs behind the scenes to mark and delete old snapshots?

Is there a script that searches for old snapshots based on their name? Or is it based on their creation timestamp?

Or is it more sophisticated, storing a table of snapshots and their expiration dates independently of both the snapshot's name and the Periodic Snapshot Task's name? (In other words, renaming a snapshot would not save it from certain doom once its expiration arrives, since it is "tagged" regardless of its name?)

What happens if you change the "Naming Schema" for a Periodic Snapshot Task that already exists? Will expired snapshots eventually "slip through the cracks" and live for an eternity?
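The two possibilities being asked about can be made concrete with a small sketch. This is a hypothetical illustration, not how TrueNAS actually implements retention. The function names, the strftime-style naming schema, and the two-week lifetime are all assumptions made for the example. In the name-based variant, a snapshot whose name no longer matches the current schema is simply never considered, which is exactly the "slip through the cracks" scenario. The timestamp-based variant ignores names entirely.

```python
from datetime import datetime, timedelta


def expired_by_name(snapshot_names, naming_schema, lifetime, now):
    """Hypothetical name-based retention: the snapshot's age is parsed
    from its name using a strftime-style schema (assumed format)."""
    expired = []
    for name in snapshot_names:
        try:
            taken = datetime.strptime(name, naming_schema)
        except ValueError:
            # Name does not match the current schema, so it is never
            # considered for deletion and lives on indefinitely.
            continue
        if now - taken > lifetime:
            expired.append(name)
    return expired


def expired_by_creation(snapshots, lifetime, now):
    """Hypothetical timestamp-based retention: uses a recorded creation
    time per snapshot and ignores the name completely."""
    return [name for name, created in snapshots.items() if now - created > lifetime]


if __name__ == "__main__":
    now = datetime(2021, 6, 1)
    names = ["auto-2021-05-01_00-00", "auto-2021-05-30_00-00",
             "manual-keep", "daily-2021-04-01"]
    two_weeks = timedelta(weeks=2)
    # Original schema: only the month-old "auto-" snapshot is expired.
    print(expired_by_name(names, "auto-%Y-%m-%d_%H-%M", two_weeks, now))
    # After changing the schema, the old "auto-" snapshots no longer parse
    # and are skipped, while the "daily-" one is now matched.
    print(expired_by_name(names, "daily-%Y-%m-%d", two_weeks, now))
```

With the name-based approach, renaming a snapshot so it no longer matches the schema would protect it; with the creation-timestamp approach, it would not.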

Attached is a screenshot to illustrate my confusion.
