winnielinnie · MVP · Joined: Oct 22, 2019 · Messages: 3,641

You can tell I twisted my brain into several knots trying to muster a title that can describe my question...

Is it possible to create a single periodic snapshot task that follows the same principles of "smart" backups?

For example, from a single task...

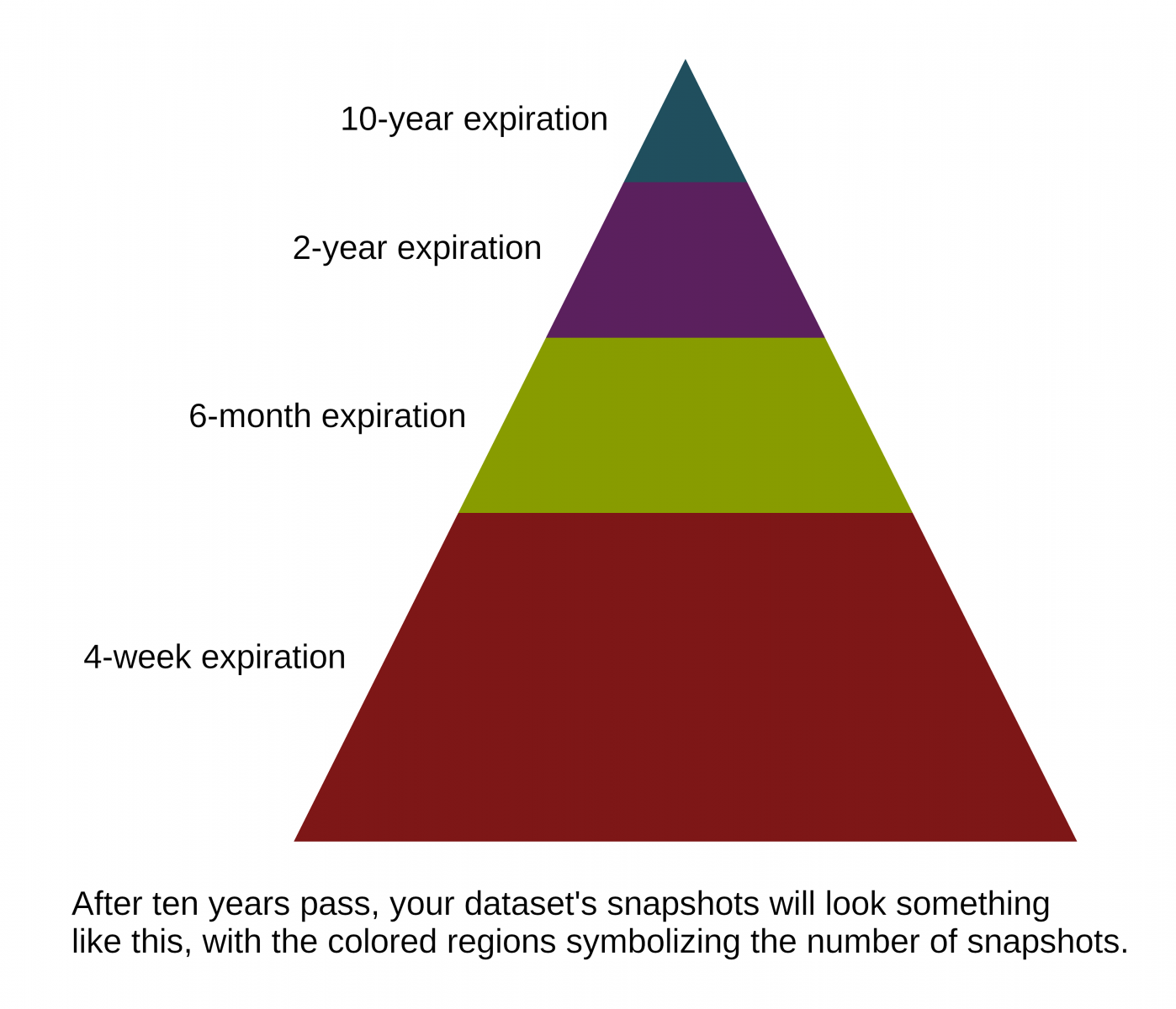

This would theoretically yield you with about a couple dozen daily snapshots that are destroyed on a 4-week conveyor belt, while a couple of them "survive" for another 6 months. Of the eventual handful that survive for another six months, some will be destroyed, while a few will survive for another two years. Only a couple of those from the group that survives for 2 years will be allowed to live for another 10 years. Think of it as snapshot pyramid from a single task.

I'm not sure what the terminology for this procedure is, but I recall backup and filesystem snapshot software (not TrueNAS- nor ZFS-related) using terms like "smart" or "staggered" backups.

Is this possible with TrueNAS, or must you configure a different Task for each expiration lifespan? (I'm hoping it's possible with the former, as it requires fewer overall tasks, which is more elegant.)

Is it possible to create a single periodic snapshot task that follows the same principles of "smart" backups?

For example, from a single task...

- Snapshots are created daily and marked with a 4-week expiration

- Of these, a couple are tagged for a longer expiration of 6 months

- Of those, a couple are tagged for an even longer expiration of 2 years

- And finally, of those, a couple are tagged for an even longer expiration of 10 years
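The tiered expiration in the list above can be sketched in Python. This is only a minimal sketch under assumptions that are not in the question: which daily snapshots get promoted is decided by a hypothetical calendar rule (the 1st and 15th of each month for 6 months, January 1 and July 1 for 2 years, January 1 alone for 10 years), and months and years are approximated as fixed day counts. The names `retention_for` and `expires_on` are illustrative and have nothing to do with TrueNAS itself.

```python
from datetime import date, timedelta

# Assumed lifespans; months and years are approximated as fixed day counts.
FOUR_WEEKS = timedelta(weeks=4)
SIX_MONTHS = timedelta(days=183)
TWO_YEARS = timedelta(days=730)
TEN_YEARS = timedelta(days=3652)


def retention_for(taken: date) -> timedelta:
    """Lifespan of the daily snapshot taken on `taken`.

    Hypothetical promotion rule: each tier is a subset of the dates in the
    tier below it, so a single daily schedule feeds all four lifespans.
    """
    if taken.month == 1 and taken.day == 1:
        return TEN_YEARS   # Jan 1 only: kept 10 years
    if taken.month == 7 and taken.day == 1:
        return TWO_YEARS   # Jul 1: kept 2 years (Jan 1 was promoted further above)
    if taken.day in (1, 15):
        return SIX_MONTHS  # 1st and 15th of each month: kept 6 months
    return FOUR_WEEKS      # every other day: the 4-week conveyor belt


def expires_on(taken: date) -> date:
    """Date on which the snapshot taken on `taken` would be destroyed."""
    return taken + retention_for(taken)
```

Because the decision depends only on the snapshot's creation date, one daily task could in principle stamp each snapshot with its expiration at creation time, rather than needing one task per lifespan.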

In theory, such a scheme would leave you with about a couple dozen daily snapshots that are destroyed on a 4-week conveyor belt, while a couple of them "survive" for another 6 months. Of the eventual handful that survive for another 6 months, some will be destroyed, while a few will survive for another 2 years. Only a couple of those from the group that survives for 2 years will be allowed to live for another 10 years. Think of it as a snapshot pyramid from a single task.
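To see how many snapshots such a pyramid holds at any moment, here is a small simulation. It is a sketch under a hypothetical calendar rule (1st and 15th of each month kept 6 months, July 1 kept 2 years, January 1 kept 10 years, with months and years approximated in days): take one snapshot per day, treat any snapshot whose lifespan has run out as destroyed, and count the survivors per tier. `tier_for` and `surviving` are illustrative names, not anything TrueNAS provides.

```python
from collections import Counter
from datetime import date, timedelta


def tier_for(taken: date) -> tuple[str, int]:
    """Hypothetical rule: (tier label, lifespan in days) for a daily snapshot."""
    if taken.month == 1 and taken.day == 1:
        return "10 years", 3652
    if taken.month == 7 and taken.day == 1:
        return "2 years", 730
    if taken.day in (1, 15):
        return "6 months", 183
    return "4 weeks", 28


def surviving(start: date, today: date) -> Counter:
    """Take one snapshot per day from `start` through `today`, then count,
    per tier, the snapshots whose lifespan has not yet run out on `today`."""
    counts = Counter()
    day = start
    while day <= today:
        tier, lifespan = tier_for(day)
        if day + timedelta(days=lifespan) > today:  # not yet destroyed
            counts[tier] += 1
        day += timedelta(days=1)
    return counts
```

Calling `surviving(date(2015, 1, 1), date(2025, 6, 30))` counts 27 snapshots in the 4-week tier, 11 in the 6-month tier, 1 in the 2-year tier and 10 in the 10-year tier: 49 snapshots retained out of roughly ten and a half years of dailies.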

I'm not sure what the terminology for this procedure is, but I recall backup and filesystem snapshot software (neither TrueNAS- nor ZFS-related) using terms like "smart" or "staggered" backups.

Is this possible with TrueNAS, or must you configure a different Task for each expiration lifespan? (I'm hoping for the former, since it requires fewer tasks overall, which is more elegant.)