willtruenas111

Dabbler

- Joined: Aug 16, 2022

- Messages: 17

Hi

I'm seeing consistently high iowait, as shown in the CPU chart below. I wanted to check 1) whether this is normal, and 2) how I could debug it further.

Any help would be appreciated, as it's impacting the performance of an Ubuntu VM I have running. All my disks are fairly new and are listed below as well.

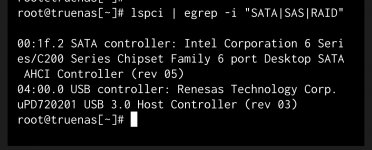
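For anyone who wants to cross-check the chart from a shell, here is a minimal sketch of how an iowait percentage can be derived from two samples of the aggregate `cpu` line in `/proc/stat`. I'm assuming the chart's iowait corresponds to that kernel counter; I haven't verified how the TrueNAS UI computes it. The function names (`parse_cpu_line`, `iowait_percent`, `measure_iowait`) are made up for illustration.

```python
import time


def parse_cpu_line(line):
    """Return the integer counters from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in fields[1:]]


def iowait_percent(before, after):
    """Percentage of CPU time spent in iowait between two 'cpu' line samples."""
    b = parse_cpu_line(before)
    a = parse_cpu_line(after)
    deltas = [y - x for x, y in zip(b, a)]
    # Counter order: user nice system idle iowait irq softirq steal guest guest_nice.
    # guest and guest_nice are already counted inside user and nice,
    # so only the first eight counters go into the total.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total


def read_cpu_line(path="/proc/stat"):
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()


def measure_iowait(interval=5.0):
    """Sample /proc/stat twice, `interval` seconds apart (Linux only)."""
    first = read_cpu_line()
    time.sleep(interval)
    second = read_cpu_line()
    return iowait_percent(first, second)
```

Calling `measure_iowait(5)` on the host would give the average iowait share over five seconds. It is a system-wide figure, so it does not say which pool or disk is responsible.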

I've read that HP Gen8 MicroServers have a terrible RAID controller. I don't know exactly what a RAID controller does, or whether that could be the problem in my setup.

OS Version: TrueNAS-SCALE-23.10.1.3

Product: ProLiant MicroServer Gen8

Model: Intel(R) Xeon(R) CPU E31265L @ 2.40GHz

Memory: 16 GiB

2x SSDs (1 mirrored ZFS pool) - a couple of VMs have zvols here.

2x HDDs (1 mirrored ZFS pool) - media storage, not used much.

USB boot-pool (not ideal, but I don't have a spare drive to dedicate to the boot-pool, or room for any additional drives. I don't know enough about partitioning to carve out part of the HDD pool, if that's even possible).
