Hi e1, I have been using FreeNAS a while and finally joined the forums so I could complain. I find this free software does not live up to expectations, and as a millennial I expect, Nay!, demand better free stuff ...I am entitled to free stuff! I deserve everything for free!

Ok, ok, just kidding. Thank you for your hard work, and for making your product freely available; it has been a big help even though it isn't perfect, and I am grateful to all who have contributed. I have an odd problem with processes idling that I cannot seem to find an answer for. Actually, I have not even been able to identify what exactly the cause of the problem is, just a set of symptoms, which include slow IO, server crashes, disconnects, data corruption, and some other misc. stuff.

First, my specs:

OS: FreeNAS-11.1-U5

Server: HP ProLiant DL380 G5

CPU: Intel(R) Xeon(R) CPU X5450 @ 3.00GHz

RAM: 32728MB

Peripherals:

(1) NetApp 111-00341+B0 X2065A-R6 4-Port Copper 3/6GB PCI-E Host Bus Adapter

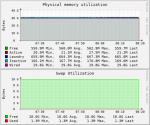

(2) LSI Logic MegaRAID SAS 9285CV-8e Storage Controller LSI00298 (not in use)

DAS: DS2246 NETAPP 24-BAY STORAGE ARRAY

Drives:

1TB HGST Travelstar (0S03563)[7200,32MB,6Gb/s,2.5"] x 5

1TB HGST Travelstar (0J22423)[7200,32MB,6Gb/s,2.5"] x 5

2TB WD Blue (WD20SPZX)[5400,6Gb/s 128MB,2.5"] x 5

1) Turns out part of the cause was the lagg interface. The server has two 1Gbps ports, so I thought it would be nice to utilize both. I was able to successfully combine both 1Gbps links into a single 10Mbps aggregate. After dismantling that, some of the slowness cleared up.

2) Some of the instability cleared up with the 11.1 upgrade, and another part when I replaced the HBA card that was struck by lightning.

3) The data corruption was probably caused by the instability which turned my SSD L2ARC into an unidentified root level device on the zpool; it wouldn't have been as critical an issue if I had not foolishly overestimated the ZFS filesystem and added all of the drives to the same zpool (in separate raided vdevs). I was able to prevent any significant data loss by immediately blocking writes to it, rebooting (which caused it to roll back to the last stable point), and migrating the data.

4) There is not really anything I can do to fix the fragile nature of the ZFS filesystem caused by its projecting raid level limitations up to the filesystem layer, and compounding it with the inability to remove from the zpool. However, I can at least mitigate it by keeping the VDEV-to-zpool ratio at 1-to-1 (never adding more than one raid set to a zpool).

5) The IO slowness really became obvious when I connected an external USB drive to the server in order to back up the data. I cannot bring the corrupting zpool online as shared, which is why I decided to try using an external USB drive. I successfully mounted the USB drive as ntfs-3g (eventually), but when I tried to transfer the data it performed astonishingly slowly. After the 6th day of the attempted 700GB transfer, I decided to abort it and try it from a different angle.
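For a sense of scale, the figures in point 5 put a ceiling on the average rate of the ntfs-3g attempt. Here is a rough back-of-the-envelope sketch, assuming decimal gigabytes (1GB = 1000MB); the function name is only for illustration:

```python
def average_rate_mb_per_s(total_gb: float, days: float) -> float:
    """Average throughput in MB/s for total_gb (decimal GB) moved in `days` days."""
    seconds = days * 24 * 60 * 60
    return total_gb * 1000 / seconds


# The 700GB copy had not finished after 6 days, so its real average
# rate was somewhere below this ceiling.
ntfs_ceiling = average_rate_mb_per_s(700, 6)
print(f"ntfs-3g attempt: under {ntfs_ceiling:.2f} MB/s on average")
```

In other words, the first attempt averaged less than roughly 1.35 MB/s.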

6) I reformatted the USB drive as ext4, then ext3, and finally ext2 (because ext4 and ext3 would not mount), mounted it and attempted the transfer again. I had previously thought my NetApp HBA+DAS was responsible for the IO slowness, but after monitoring the ntfs-3g and then the ext2 transfer using "iostat -x", I noticed that the DAS and HBA were working fine. The transfer in both cases was occurring in halting bursts: (i) huge parallel reads from zpool drives, (ii) sudden huge write to the USB HDD, (iii) followed by the USB HDD write tapering off to 0Bps, (iv) then a prolonged period of nothing other than sporadic writes to the System Dataset; these periods of IO-lessness lengthened as the data transfer neared completion. Even so, the 700GB transfer took about one day for the ext2.
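The burst-then-silence pattern in point 6 could be quantified rather than eyeballed. Below is a minimal sketch, assuming you have already collected one write-rate sample per interval for the USB drive (for example, copied by hand from the per-interval figures that "iostat -x" reports). The function names, the threshold, and the sample numbers are all made up for illustration; they are not real measurements from this server.

```python
from typing import List, Tuple


def find_stalls(samples_kbps: List[float], threshold_kbps: float = 1.0) -> List[Tuple[int, int]]:
    """Return (start_index, length) for each run of samples below the threshold."""
    stalls = []
    run_start = None
    for i, rate in enumerate(samples_kbps):
        if rate < threshold_kbps:
            if run_start is None:
                run_start = i  # a new idle run begins here
        elif run_start is not None:
            stalls.append((run_start, i - run_start))
            run_start = None
    if run_start is not None:  # the series ended while still idle
        stalls.append((run_start, len(samples_kbps) - run_start))
    return stalls


def stalls_lengthen(stalls: List[Tuple[int, int]]) -> bool:
    """True if idle runs never get shorter and the last is longer than the first."""
    lengths = [length for _, length in stalls]
    if len(lengths) < 2:
        return False
    never_shorter = all(b >= a for a, b in zip(lengths, lengths[1:]))
    return never_shorter and lengths[-1] > lengths[0]


# Hypothetical write-rate samples (kB/s), one per interval: a burst, then silence.
samples = [50000, 0, 0, 42000, 0, 0, 0, 38000, 0, 0, 0, 0, 0]
stalls = find_stalls(samples)
print(stalls)
print(stalls_lengthen(stalls))
```

With real samples, a steadily growing list of stall lengths would back up the observation that the idle periods lengthened toward the end of the transfer.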

7) After getting the backed-up data transferred to a new un-corrupted zpool, and bringing that online, I began a data verification and another file-sync over the network. This is when the last form of the IO issue presented clearly. Every atomic data transfer request that took less than 5-10 minutes completed without any issue. Every transfer that took some extended period of time ran into issues. The extended data transfers that I had done before dealing with the stability issues always froze and eventually failed. The extended data transfers that I now perform after fixing the stability issue do not fail, but they all go into a server-initiated sleep/hibernate; they only recover when I make a new data request to the server.

8) I thought I had found the issue when I checked the drive idle stats, and found that two of my 1TB drives were boned (way over the spin-up estimate). But after monitoring, I realized it was an old issue and none of the drives seemed to currently be affected by it (although most of them did show a recently high level of spin-up). None of them were set up for standby idle, or power-saving, but just in case I went through and explicitly turned off standby and power-save for all of them again from the command shell.

9) The slowness behavior is still happening, but I think it has nothing to do with the IO. I think something in the OS is throttling or sleeping the thread(s) that perform the transfer whenever they have to wait for IO. I think this is what then allows the drives to reach a point where they would spin down.

At this point, I'm not sure what to try next. Any help would be appreciated, thanks.
