This will be my first go-round with FreeNAS. Yes, I have read through the Noobs PowerPoint (which is awesome; I wish all technical communities did something like that). This build will be for a graduate research lab whose data consists of a lot of images and video with minimal or no compression (they think AVI is a good container, but I digress).

I'm one of the IT guys here, so they came to me asking about a better way to store data long term. Their data is tied to NIH grants, so they need to keep their research data for 7 years. They have had one or two USB hard drives drop, hit the floor, and lose data, so they are finally getting serious about data storage. I think their budget is around $3000.

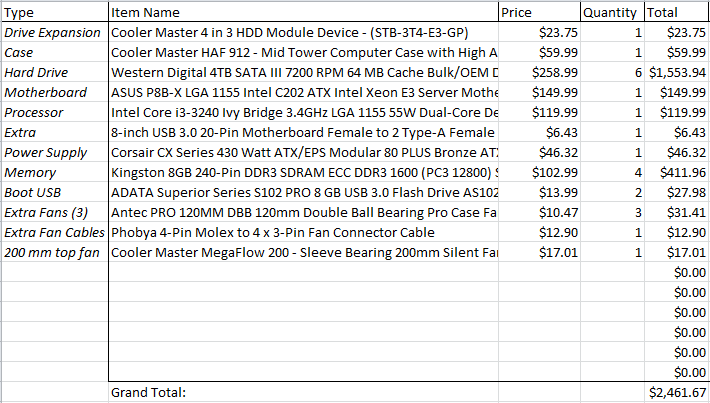

They were looking for a build with 12-16TB of storage out of the box, with the potential to expand later (I'm thinking RAIDZ2 for the first vdev, then adding another vdev to the pool later on). Because our campus has virtually no firewall, and because cryptoware / cryptoviruses scare the hell out of me, they will connect via SFTP. I've come up with the following hardware list and am looking for suggestions / critiques. Any advice is welcome.

- Processor: Intel Core i3-3240 Ivy Bridge 3.4GHz

- Motherboard: ASUS P8B-X LGA 1155 Intel C202

- Memory (ECC RAM) 4x: Kingston 8GB 240-Pin DDR3 SDRAM ECC DDR3 1600 VR16LE11/8EF

- Hard Drives (6x): Western Digital (Black) 4TB SATA III 7200 RPM WD4003FZEX

- Power Supply: Corsair CX Series 430 Watt ATX/EPS Modular 80 PLUS Bronze

- USB Flash Drives (Boot) 2x: ADATA Superior Series S102 PRO 8 GB USB 3.0 Flash Drive AS102P-8G-RGY

- USB 3 Header Adapter (For Boot): 8-inch USB 3.0 20-Pin Motherboard Female to 2 Type-A Female Connectors Y-Cable

- Case: Cooler Master HAF 912

- Hard Drive Adapter for 5.25" Bay: Cooler Master 4 in 3 HDD Module Device - (STB-3T4-E3-GP)

- Extra 120mm fans for case (3x): Antec PRO 120MM DBB 120mm Double Ball Bearing Pro Case Fan with 3-Pin & 4-Pin Connector

- 200mm top fan: Cooler Master MegaFlow 200 - Sleeve Bearing 200mm Silent Fan for Computer Cases (Black)

- Extra fan cables: Phobya 4-Pin Molex to 4 x 3-Pin Fan Connector Cable
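As a rough sanity check on whether six 4TB drives in one RAIDZ2 vdev meet the 12-16TB target, here is a small capacity sketch. It assumes RAIDZ2 simply costs two drives' worth of space, ignores ZFS metadata, padding and reserved space, and treats `fill_limit` (keep the pool below about 80% full) as a hypothetical rule of thumb rather than a hard number. The function names are illustrative only.

```python
# Rough usable-capacity estimate for a single RAIDZ vdev.
# Assumptions: ZFS metadata, padding and reserved space are ignored;
# fill_limit is a hypothetical "don't fill the pool past this" guideline.

TB = 10**12   # drive vendors sell capacity in decimal terabytes
TiB = 2**40   # ZFS tools such as `zfs list` report 1024-based units


def raidz_usable_bytes(disks: int, disk_tb: float, parity: int) -> float:
    """Space left after parity: (disks - parity) data disks' worth."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_tb * TB


def report(disks: int, disk_tb: float, parity: int = 2,
           fill_limit: float = 0.8) -> dict:
    """Summarize raw, usable and 'comfortable' capacity for one vdev."""
    usable = raidz_usable_bytes(disks, disk_tb, parity)
    return {
        "raw_tb": disks * disk_tb,
        "usable_tb": usable / TB,
        "usable_tib": usable / TiB,
        "comfortable_tib": usable * fill_limit / TiB,
    }


if __name__ == "__main__":
    # 6x WD Black 4TB in RAIDZ2, as in the parts list
    for key, value in report(6, 4).items():
        print(f"{key}: {value:.2f}")
```

Under these assumptions the six drives come out to about 16TB usable (roughly 14.55 TiB in binary units) before ZFS overhead, which is the top of the 12-16TB range; a second identical vdev added to the pool later would roughly double that.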

When and if they need to expand capacity, it sounds like the IBM ServeRAID M1015 is the way to go, but for the time being, I think it is out of scope for their intentions.

I see that Supermicro boards appear to be the front runner, and I'm not opposed to using one, but I am more familiar with ASUS boards. If there are any big advantages I'd be missing out on (I doubt I'll be able to make use of IPMI in this environment), please point them out. Thanks in advance to anyone who reads this far!