I am reconfiguring one of our SuperMicro boxes, which connects via 4x 1GbE to ESXi 6.0u2. We had a slow/crappy SSD SLOG for an 8-disk raid10, and decided to replace it as well as add 2 Intel 750 PCIe NVMe cards. Each card has its own full x8 PCIe slot, even though they only require x4.

If I put one of them in as SLOG for our 8x platter raid10 and run a 5-VM write test using the VMware I/O Analyzer fling (IOmeter with an interface), I am able to completely saturate all 4 NICs. The graph is one solid line at 500MBps.

However, if I make a mirror pool with both 750s (and no SLOG, since they're so fast, as per this thread I started: https://forums.freenas.org/index.ph...rc-recommended-with-very-fast-ssd-zpool.47382), the pool suffers hugely and my log fills with CTL_DATAMOVE errors (ctl_datamove: tag 0x6336669 on (9:3:6) aborted) on the FreeNAS side. Peak transfer rates are around 375MBps, but what is very worrying is that when the CTL_DATAMOVE errors hit, throughput freezes completely and drops to nothing. My ESXi graphs show bursts followed by complete pauses, and using a VM on the datastore is full of major hiccups. During these periods of CTL errors and pauses, gstat shows the devices 100% busy at 500 IOPS (rock steady, does not fluctuate) with 0 on all other counters, and zpool iostat shows no activity. For the few moments that things are going well, gstat shows 4000+ IOPS and has typical (actual) values in the other fields.
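To put a number on how often the aborts happen, something like the following could be run over the system log. This is only a minimal sketch: it assumes every abort line has the same shape as the one quoted above, the function name `count_aborts` is made up for illustration, and it does not interpret what the three numbers in parentheses mean, it just groups by them.

```python
import re
from collections import Counter

# Matches lines shaped like the one quoted in the post:
#   ctl_datamove: tag 0x6336669 on (9:3:6) aborted
# Assumption: all abort messages follow this exact layout.
ABORT_RE = re.compile(
    r"ctl_datamove: tag (0x[0-9a-fA-F]+) on \((\d+):(\d+):(\d+)\) aborted"
)


def count_aborts(lines):
    """Count aborted ctl_datamove messages, grouped by the (a:b:c) triple."""
    counts = Counter()
    for line in lines:
        match = ABORT_RE.search(line)
        if match:
            triple = tuple(int(x) for x in match.group(2, 3, 4))
            counts[triple] += 1
    return counts


if __name__ == "__main__":
    # Sample input built from the single log line quoted in the post.
    sample = [
        "ctl_datamove: tag 0x6336669 on (9:3:6) aborted",
        "some unrelated log line",
    ]
    for triple, n in count_aborts(sample).most_common():
        print(triple, n)
```

Feeding it the real log lines (for example by iterating over an open log file) would show whether the aborts cluster on one triple or are spread evenly.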

For testing, I added a Crucial MX550 as a SLOG to that 750 pool, and performance was great. The MX550 is only capable of about 150MBps, but it's rock steady with 0 hiccups and no CTL_DATAMOVE errors.

Is there anything I can do or provide to help troubleshoot? This is the latest 9.10 U4. I started with autotune disabled, then enabled it (plus 2 reboots to be positive the settings were in effect). No change.

We are past the return window for the 750s, and I would hate to have a blazing fast pool fronted by a crappy MX550 just to keep it up. Has anyone had these issues before with this drive, or with SSDs in general? I am planning on trying a 1-SSD pool with no SLOG, a 1-SSD pool with 1 SSD SLOG, and then the same configurations with the MX550 as a pool alone and then with a 750 SLOG. Unfortunately, we have a very slow vMotion environment, and moving 500GB of test VMs around multiple pools takes me hours. I am really hoping someone can help me out before I spend all that time testing.

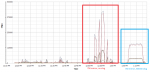

I have attached a picture of the pure 750 pool (no SLOG) and then the 750s with the MX550 SLOG, performing the same test. I don't have a capture of my 8x raid10 with a 750 SLOG, but it was as solid as the MX550 SLOG run, except at a steady 525MBps.
