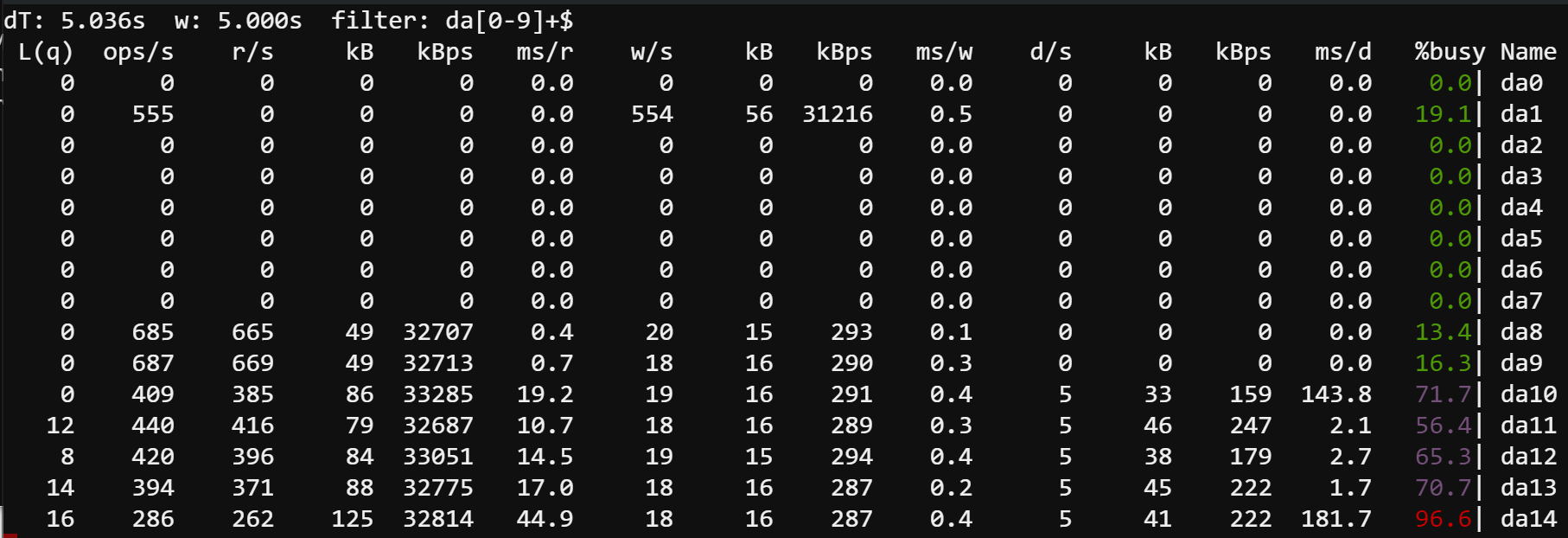

I've been unwinding the mistake I made buying a pile of 6TB WD Blues, and noticed this interesting snapshot of just how badly these drives perform, even on read operations.

In the gstat output shown, I'm replacing da14 with da1 (a 7200 RPM NL-SAS drive). Some points of note:

- da10-da14 are SMR drives. da8 and da9 are PMR SATA drives.

- Only the SMR drives show "delete" operations. Delete ops (TRIM, in ATA terms) are issued by the OS to tell the drive that certain blocks are no longer in use. I don't know why this matters for an HDD, or why only the SMR drives are getting these ops. Might be a good question for the ZFS devs? Judging by the latency associated with them, I assume the drive is actually doing writes to service these ops, which seems unnecessary.

- Because of these slow delete ops (and the destaging of cached writes, see below), read latency is awful. Ops stack up in the queue and %busy stays very high (in gstat these columns are typically all in the red). Compare this with %busy on the PMR drives, which don't have to do any of this housekeeping.

- As far as the OS is concerned, write latency is negligible because everything goes to the drive's cache. But the extra work of destaging those writes later slows down reads, so the real write latency is shifted into the read latency.

- This is a resilvering operation, so these are all basically large, sequential ops, mostly 64K and 128K. Even so, the unpredictable behavior of the SMR drives keeps them throttled.

- This is the first disk replacement resilver I've seen where the bottleneck is on the read side. Usually the rate is limited by the disk being written to. You can see that in this case, da1 can easily handle what's being thrown at it, and is spending most of its time starving on an empty queue, while the SMR disks struggle to read data fast enough. The PMR drives aren't even breaking a sweat.
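The queue stacking described above is what Little's law predicts: the average number of requests in flight is roughly throughput multiplied by average latency. Here is a minimal Python sketch, assuming gstat's L(q), ops/s and ms/op columns are averaged over roughly the same interval, so the relation is only approximate. The function name and the figures in the usage lines are hypothetical, not read from the screenshot.

```python
def outstanding_ops(ops_per_sec: float, latency_ms: float) -> float:
    """Little's law: mean requests in flight = arrival rate x mean time in system."""
    return ops_per_sec * latency_ms / 1000.0  # latency converted from ms to seconds


# Hypothetical numbers: same throughput, ten times the latency.
print(outstanding_ops(150, 50))  # 7.5 requests queued on average
print(outstanding_ops(150, 5))   # 0.75
```

At the same throughput, a drive whose average latency is ten times higher carries about ten times as many requests in its queue, which is consistent with %busy staying pinned near the top.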
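To see how housekeeping work can end up counted as read latency, here is a toy single-queue model in Python. It is a sketch under stated assumptions, not a model of WD firmware: reads arrive at a fixed interval and are served strictly first-in, first-out (real drives reorder with NCQ), and every Nth read finds a "chore" op queued ahead of it, standing in for a delete or a cache destage. The function name, parameters, and timings are all hypothetical.

```python
from statistics import mean


def simulate_drive(n_reads: int, read_gap_ms: float, read_ms: float,
                   chore_every: int = 0, chore_ms: float = 0.0) -> tuple[float, float]:
    """Return (mean read latency in ms, fraction of time busy) for one FIFO drive.

    Reads arrive every read_gap_ms and each takes read_ms to service. If
    chore_every > 0, a hypothetical housekeeping op taking chore_ms is queued
    just ahead of every chore_every-th read.
    """
    free_at = 0.0  # time at which the drive finishes its current backlog
    busy_ms = 0.0  # total time spent servicing any op
    latencies = []
    for i in range(n_reads):
        arrival = i * read_gap_ms
        start = max(free_at, arrival)  # wait for whatever is queued ahead
        if chore_every and i % chore_every == 0:
            start += chore_ms          # the chore is serviced before this read
            busy_ms += chore_ms
        free_at = start + read_ms
        busy_ms += read_ms
        latencies.append(free_at - arrival)  # queue wait + service, as the host sees it
    return mean(latencies), busy_ms / free_at


pmr = simulate_drive(1000, read_gap_ms=10.0, read_ms=6.0)
smr = simulate_drive(1000, read_gap_ms=10.0, read_ms=6.0, chore_every=4, chore_ms=12.0)
print(f"no chores:   {pmr[0]:.1f} ms/read, {pmr[1]:.0%} busy")  # 6.0 ms/read, 60% busy
print(f"with chores: {smr[0]:.1f} ms/read, {smr[1]:.0%} busy")  # 12.0 ms/read, 90% busy
```

With these made-up numbers, both drives take the same 6 ms to service each read, but the one doing chores reports twice the mean read latency and is busy about 90% of the time instead of 60%: the cost of the hidden work shows up in the read column.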
