EB the FreeNAS Noob

Dabbler

- Joined: May 19, 2017
- Messages: 11

Hello All,

Long time lurker, first time poster. These forums have been extremely helpful when I encounter issues with FreeNAS in the past.

However, I'm running into an issue where I feel I need to ask a specific question. I did find some old threads similar to my question, but my situation feels just enough different that I need to ask for help.

A couple days ago i started getting error emails sent to me with a variation of these messages:

In one of the other threads I read, they requested the following output:

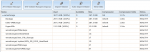

I see that a ton of the file system is reporting 100% full with extremely small capacities, but I have no idea how/why that would happen!

Nothing has physically changed. I enabled snapshoting a couple of days ago, but it has only done a few snapshots and the webgui doesn't show that it has used much space yet.

There should be many TBs of space available on the system. Everything still seems to be working, except I am having issues getting CIFS to start. However, I am afraid that issues may start to arise.

I have also included screenshots of the webgui that displays our volumes and disks. One strange thing that I've noticed is that wmsstorage/VMStorage and wmsstorage/XenVMStorage are missing from the output above. Not sure if that means anything, but it is an observation.

If anybody has any ideas, I am willing to try them. Please let me know if you need any other information from my system.

Thank you in advance! EB

Long time lurker, first time poster. These forums have been extremely helpful whenever I have run into issues with FreeNAS in the past.

However, I'm now running into an issue where I feel I need to ask a specific question. I did find some old threads similar to my question, but my situation feels just different enough that I need to ask for help.

A couple of days ago I started getting error emails with variations of these messages:

Code:

    newsyslog: chmod(/var/log/maillog.6.bz2) in change_attrs: No space left on device
    freenas changes in mounted filesystems:
    11d10
    < wmsstorage/.system/samba4 /mnt/wmsstorage/.system/samba4 zfs rw,nfsv4acls 0 0
    12a12
    > wmsstorage/Backups /mnt/wmsstorage/Backups zfs rw,nfsv4acls 0 0
    mv: rename /var/log/mount.today to /var/log/mount.yesterday: No space left on device
    mv: /var/log/mount.today: No space left on device
    bzip2: Can't create output file /var/log/messages.0.bz2: No space left on device.
    newsyslog: `bzip2 -f /var/log/messages.0' terminated with a non-zero status (1)

In one of the other threads I read, they requested the following output:

Code:

    [root@freenas] ~# du /mnt | sort -nr |head
    14534422  /mnt
    14534420  /mnt/wmsstorage
    14528738  /mnt/wmsstorage/HyperVMs
    5616      /mnt/wmsstorage/.system
    5398      /mnt/wmsstorage/.system/syslog
    5381      /mnt/wmsstorage/.system/syslog/log
    4599      /mnt/wmsstorage/.system/syslog/log/samba4
    169       /mnt/wmsstorage/.system/cores
    17        /mnt/wmsstorage/ESXI-VMStorage
    17        /mnt/wmsstorage/Backups
    [root@freenas] ~# df -ah
    Filesystem                                 Size   Used  Avail  Capacity  Mounted on
    /dev/ufs/FreeNASs1a                        926M   826M    26M       97%  /
    devfs                                      1.0k   1.0k     0B      100%  /dev
    /dev/md0                                   4.6M   3.3M   902k       79%  /etc
    /dev/md1                                   823k   2.0k   756k        0%  /mnt
    /dev/md2                                   149M    33M   103M       25%  /var
    /dev/ufs/FreeNASs4                          19M   3.3M    15M       18%  /data
    wmsstorage                                 367k   367k     0B      100%  /mnt/wmsstorage
    wmsstorage/.system                         351k   351k     0B      100%  /mnt/wmsstorage/.system
    wmsstorage/.system/XEN-GR-R510-Heartbeat   1.0G   287k   1.0G        0%  /mnt/wmsstorage/.system/XEN-GR-R510-Heartbeat
    wmsstorage/.system/cores                   439k   439k     0B      100%  /mnt/wmsstorage/.system/cores
    wmsstorage/.system/syslog                  5.7M   5.7M     0B      100%  /mnt/wmsstorage/.system/syslog
    wmsstorage/HyperVMs                         13G    13G     0B      100%  /mnt/wmsstorage/HyperVMs
    wmsstorage/ESXI-VMStorage                  287k   287k     0B      100%  /mnt/wmsstorage/ESXI-VMStorage
    wmsstorage/Backups                         287k   287k     0B      100%  /mnt/wmsstorage/Backups
    [root@freenas] ~# mount
    /dev/ufs/FreeNASs1a on / (ufs, local, read-only)
    devfs on /dev (devfs, local, multilabel)
    /dev/md0 on /etc (ufs, local)
    /dev/md1 on /mnt (ufs, local)
    /dev/md2 on /var (ufs, local)
    /dev/ufs/FreeNASs4 on /data (ufs, local, noatime, soft-updates)
    wmsstorage on /mnt/wmsstorage (zfs, local, nfsv4acls)
    wmsstorage/.system on /mnt/wmsstorage/.system (zfs, local, nfsv4acls)
    wmsstorage/.system/XEN-GR-R510-Heartbeat on /mnt/wmsstorage/.system/XEN-GR-R510-Heartbeat (zfs, local, nfsv4acls)
    wmsstorage/.system/cores on /mnt/wmsstorage/.system/cores (zfs, local, nfsv4acls)
    wmsstorage/.system/syslog on /mnt/wmsstorage/.system/syslog (zfs, local, nfsv4acls)
    wmsstorage/HyperVMs on /mnt/wmsstorage/HyperVMs (zfs, local, nfsv4acls)
    wmsstorage/ESXI-VMStorage on /mnt/wmsstorage/ESXI-VMStorage (zfs, local, nfsv4acls)
    wmsstorage/Backups on /mnt/wmsstorage/Backups (zfs, local, nfsv4acls)
    [root@freenas] ~#

I see that most of the ZFS filesystems are reporting 100% full with extremely small sizes, but I have no idea how or why that would happen!
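To make that pattern easier to see, here is a minimal Python sketch that picks out the rows of a `df -ah` dump whose Avail column is `0B`. The sample rows are copied from the output in this post; the function name `full_filesystems` and the whitespace-splitting approach are only illustrative assumptions (it would break on mount points that contain spaces). On this data, every row it returns has Size equal to Used.

```python
# Rows copied from the df -ah output in this post.
# Columns: Filesystem Size Used Avail Capacity Mounted on
DF_SAMPLE = """\
Filesystem Size Used Avail Capacity Mounted on
/dev/md2 149M 33M 103M 25% /var
wmsstorage 367k 367k 0B 100% /mnt/wmsstorage
wmsstorage/.system/XEN-GR-R510-Heartbeat 1.0G 287k 1.0G 0% /mnt/wmsstorage/.system/XEN-GR-R510-Heartbeat
wmsstorage/HyperVMs 13G 13G 0B 100% /mnt/wmsstorage/HyperVMs
wmsstorage/Backups 287k 287k 0B 100% /mnt/wmsstorage/Backups
"""


def full_filesystems(df_text):
    """Return (filesystem, size, used) for every row whose Avail column is 0B.

    Hypothetical helper: assumes whitespace-separated columns in the order
    shown above and no spaces inside filesystem names or mount points.
    """
    rows = []
    for line in df_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 6:
            continue  # ignore blank or truncated lines
        name, size, used, avail = fields[:4]
        if avail == "0B":
            rows.append((name, size, used))
    return rows


if __name__ == "__main__":
    for name, size, used in full_filesystems(DF_SAMPLE):
        print(f"{name}: size={size} used={used}")
```

The sketch only reports what the table already shows; it does not say where the space went.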

Nothing has physically changed. I enabled snapshotting a couple of days ago, but it has only taken a few snapshots and the webgui doesn't show that they have used much space yet.

There should be many TBs of space available on the system. Everything still seems to be working, except that I am having issues getting CIFS to start. However, I am afraid that more issues may start to arise.

I have also included screenshots of the webgui that displays our volumes and disks. One strange thing that I've noticed is that wmsstorage/VMStorage and wmsstorage/XenVMStorage are missing from the output above. Not sure if that means anything, but it is an observation.
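The comparison behind that observation can be sketched in Python as a simple difference between the names expected from the webgui and the ZFS filesystems listed by `mount`. The `EXPECTED` list is typed in by hand from the names mentioned in this post, and both function names are hypothetical. A name can be absent for more than one reason (for example, ZFS volumes are block devices and are never listed by `mount`), so this only reports the difference, not the cause.

```python
# ZFS lines copied from the mount output in this post (abridged).
MOUNT_SAMPLE = """\
/dev/md2 on /var (ufs, local)
wmsstorage on /mnt/wmsstorage (zfs, local, nfsv4acls)
wmsstorage/.system on /mnt/wmsstorage/.system (zfs, local, nfsv4acls)
wmsstorage/HyperVMs on /mnt/wmsstorage/HyperVMs (zfs, local, nfsv4acls)
wmsstorage/ESXI-VMStorage on /mnt/wmsstorage/ESXI-VMStorage (zfs, local, nfsv4acls)
wmsstorage/Backups on /mnt/wmsstorage/Backups (zfs, local, nfsv4acls)
"""

# Assumption: names as described in this post, entered by hand.
EXPECTED = [
    "wmsstorage/HyperVMs",
    "wmsstorage/ESXI-VMStorage",
    "wmsstorage/Backups",
    "wmsstorage/VMStorage",
    "wmsstorage/XenVMStorage",
]


def mounted_zfs_datasets(mount_text):
    """Collect the source names of all lines of the form 'X on Y (zfs, ...)'."""
    datasets = set()
    for line in mount_text.splitlines():
        source, sep, rest = line.partition(" on ")
        if sep and "(zfs" in rest:
            datasets.add(source)
    return datasets


def missing_datasets(expected, mount_text):
    """Return the expected names that are not mounted, in their original order."""
    mounted = mounted_zfs_datasets(mount_text)
    return [name for name in expected if name not in mounted]


if __name__ == "__main__":
    for name in missing_datasets(EXPECTED, MOUNT_SAMPLE):
        print("not mounted:", name)
```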

If anybody has any ideas, I am willing to try them. Please let me know if you need any other information from my system.

Thank you in advance!

EB