Hello!

Before I get started, here's my system:

45 Drives Storinator

TrueNAS: TrueNAS-13.0-U5.3

MB: Supermicro X11DPL-i

HBA: LSI 9305 x 3

RAM: 128GB ECC

HDDs: Mostly Seagate Exos, some Seagate IronWolf, all 8TB

Zpool is 4 vdevs of 10 drives each as raidz3, with 5 drives as hot spares (I know, lol). When I built this system, I was having multiple drive failures with the old system and decided to be overly cautious.

Here's the problem:

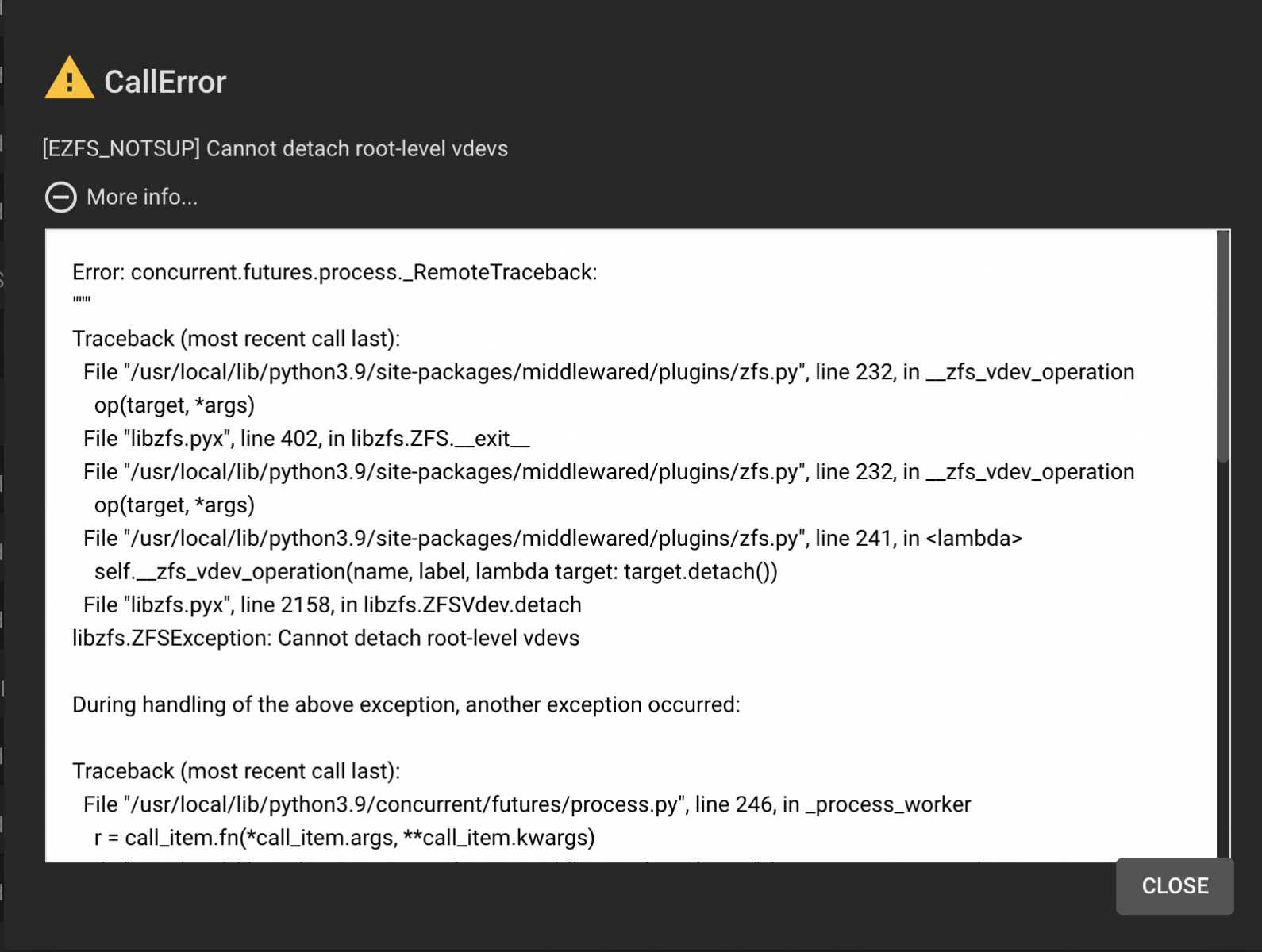

I recently had a drive fail (da16), and one of my hot spares (da40) jumped in. I got the notification, so I went to the UI, took the failed drive offline, and shut down to replace da16. I put the new drive in, started back up, and let it resilver. The resilver completed without problems and both drives were online, but the spare did not automatically return to the spare pool. Instead of detaching the spare, I took it offline (doh!). It still did not return to the spare set, so I tried to detach it, and the UI gave this error:

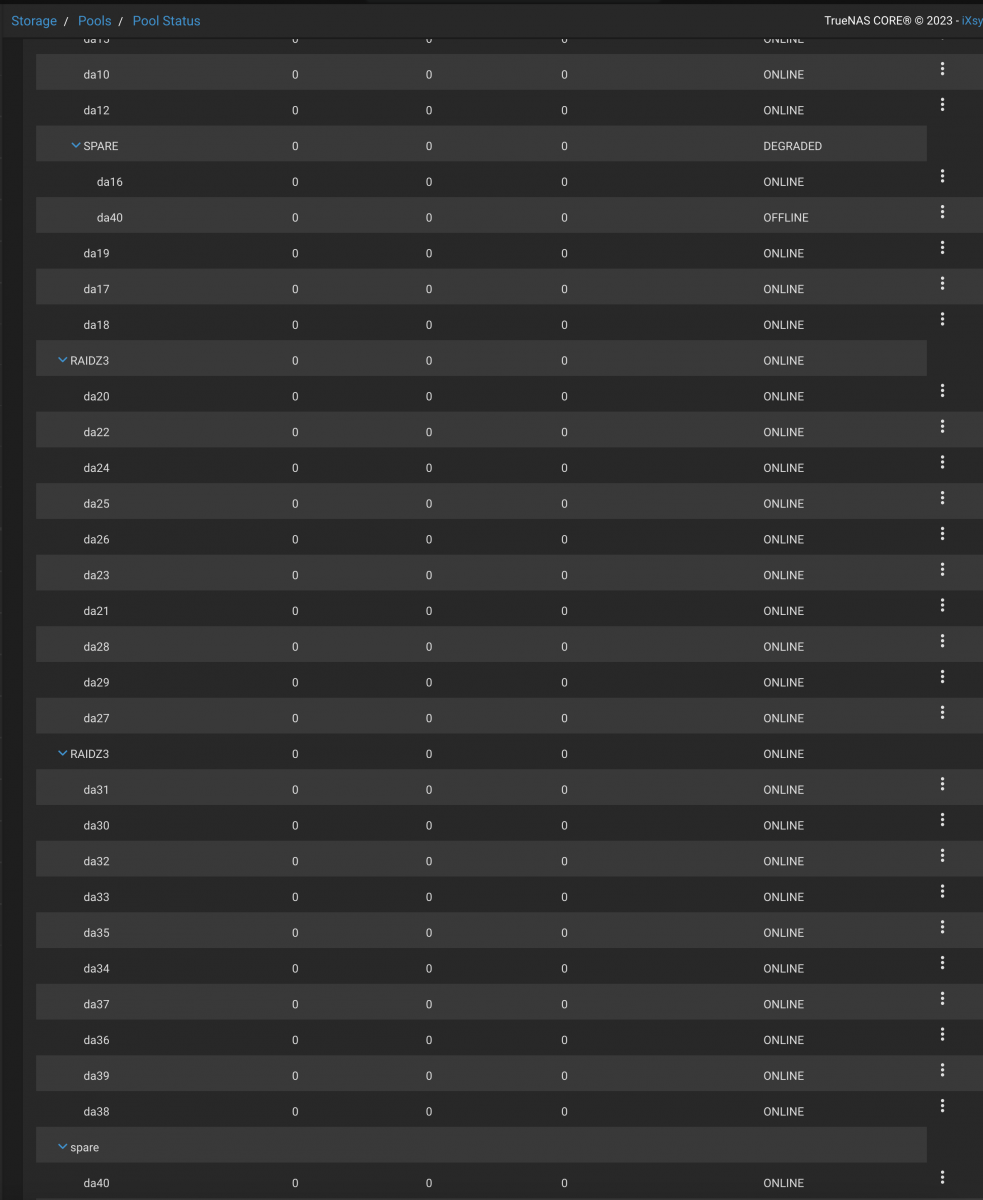

Even though the operation was unsuccessful, I do now see da40 back in the list of spares and ONLINE. However, it is also still in the pool where it originally jumped in as a spare:

I did some searching on the forums and the recommendation was to detach the drive from the CLI. Here's the pool:

Code:

    root@goliath[~]# zpool status GOLIATH -f
    cannot open '-f': name must begin with a letter
      pool: GOLIATH
     state: DEGRADED
    status: One or more devices has been taken offline by the administrator.
            Sufficient replicas exist for the pool to continue functioning in a
            degraded state.
    action: Online the device using 'zpool online' or replace the device with
            'zpool replace'.
      scan: resilvered 4.69T in 13:04:00 with 0 errors on Sat Aug 26 00:34:41 2023
    config:

            NAME                                                  STATE     READ WRITE CKSUM
            GOLIATH                                               DEGRADED     0     0     0
              raidz3-0                                            ONLINE       0     0     0
                gptid/955cf876-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/a23ef856-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/96793d87-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/9cf24cd8-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/986b2ff2-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/a5bbaa71-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/7e3f0319-4375-11ee-aa23-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/add0e87d-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/b23ffb56-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/b1a2259c-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
              raidz3-1                                            DEGRADED     0     0     0
                gptid/9f55db7e-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/a3cb392f-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/a098b204-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/9ebdebc0-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/a4904562-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/5b63b7a9-4d49-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                spare-6                                           DEGRADED     0     0     0
                  gptid/f1d1d7af-d59c-11ed-a862-3cecef6e76ba.eli  ONLINE       0     0     0
                  gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba.eli  OFFLINE      0     0     0
                gptid/d0f5e63f-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/d1f02e0f-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/dd660045-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
              raidz3-2                                            ONLINE       0     0     0
                gptid/d7875504-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/dc3d4213-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e092bb98-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e27dd2db-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e456ac7b-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/da30a26a-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/de1d2773-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e8b14a04-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e8165f17-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e084d613-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
              raidz3-3                                            ONLINE       0     0     0
                gptid/e68ae0f8-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/e7662f77-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/ed566e44-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/fa460ef2-4c56-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/05452e50-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/074b1868-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/0a02dac7-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/0c65792b-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/1349e22f-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
                gptid/1445f15a-4c57-11eb-b083-3cecef6e76ba.eli    ONLINE       0     0     0
            spares
              gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba.eli      AVAIL
              gptid/0ffba24a-4c57-11eb-b083-3cecef6e76ba.eli      AVAIL
              gptid/1430a462-4c57-11eb-b083-3cecef6e76ba.eli      AVAIL
              gptid/162bb3a8-4c57-11eb-b083-3cecef6e76ba.eli      AVAIL
              gptid/16a0022d-4c57-11eb-b083-3cecef6e76ba.eli      AVAIL

    errors: No known data errors
    root@goliath[~]#

The next thing I tried was to online the drive with 'zpool online':

Code:

    root@goliath[~]# zpool online GOLIATH gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba
    cannot online gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba: no such device in pool
    root@goliath[~]#

I got the same result when I tried to detach it:

Code:

    root@goliath[~]# zpool detach GOLIATH gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba
    cannot detach gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba: no such device in pool
    root@goliath[~]#

So I'm a little stuck. da40 (gptid/0d93ec08-4c57-11eb-b083-3cecef6e76ba) is no longer part of the pool and is back in the list of spares, but zpool status still shows it in the output.

Do you guys have any ideas?

Thanks!