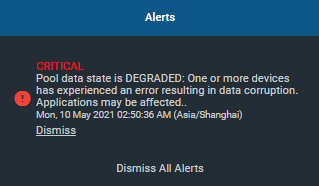

Possibly because of a power outage, my FreeNAS pool status is now DEGRADED, and I got this alert:

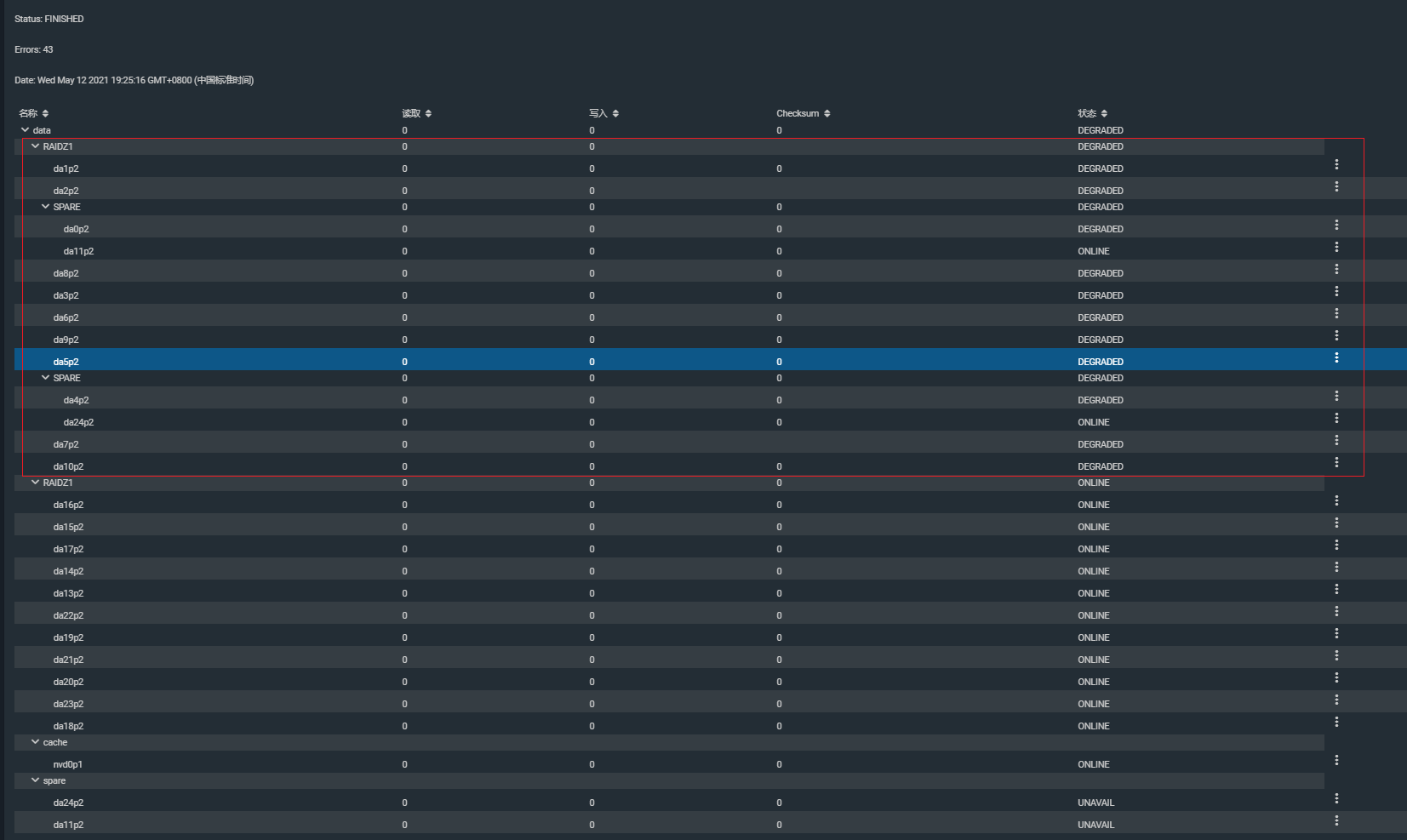

I used 24 WD HC320 8 TB hard disks to build two RAIDZ1 vdevs of 11 disks each; the remaining two disks are hot spares.
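A quick arithmetic check, as a sketch, that this layout is consistent with the `zpool list` output further down (which reports SIZE 160T for `data`). It assumes every drive is exactly 8 TB in decimal units and that ZFS reports binary units (T = TiB); the variable names are illustrative only.

```python
# Sketch: check that 2 x 11-disk RAIDZ1 vdevs plus hot spares account for 24 drives
# and roughly match the pool sizes ZFS reports.
# Assumption: each WD HC320 holds 8 TB (decimal); ZFS prints binary units (TiB).
TB = 10**12
TIB = 2**40

DRIVE_TB = 8
TOTAL_DRIVES = 24
VDEVS = 2
DISKS_PER_VDEV = 11
PARITY_PER_VDEV = 1  # RAIDZ1 = one disk's worth of parity per vdev

# Drives that are not members of a vdev are the hot spares.
spares = TOTAL_DRIVES - VDEVS * DISKS_PER_VDEV

# For RAIDZ, `zpool list` SIZE counts raw space, parity included.
raw_tib = VDEVS * DISKS_PER_VDEV * DRIVE_TB * TB / TIB

# Space left after parity, before ZFS metadata, padding and reserved space.
data_tib = VDEVS * (DISKS_PER_VDEV - PARITY_PER_VDEV) * DRIVE_TB * TB / TIB

print(spares, round(raw_tib, 1), round(data_tib, 1))  # 2 160.1 145.5
```

The raw figure matches the 160T that `zpool list` shows. `zfs list` reports USED + AVAIL of about 137.8T (63.8T + 74.0T), somewhat below the 145.5 TiB estimate; a gap like that is expected from ZFS overhead, which this sketch does not model.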

My storage pool status now looks like this (`zpool status -v`):


```
Last login: Thu May 13 16:35:29 on pts/10
FreeBSD 11.3-RELEASE-p5 (FreeNAS.amd64) #0 r325575+8ed1cd24b60(HEAD): Mon Jan 27 18:07:23 UTC 2020

FreeNAS (c) 2009-2020, The FreeNAS Development Team
All rights reserved.
FreeNAS is released under the modified BSD license.

For more information, documentation, help or support, go here:
http://freenas.org
Welcome to FreeNAS

Warning: settings changed through the CLI are not written to
the configuration database and will be reset on reboot.

root@freenas[~]# zpool status -v
  pool: data
 state: DEGRADED
status: One or more devices has experienced an error resulting in data
        corruption. Applications may be affected.
action: Restore the file in question if possible. Otherwise restore the
        entire pool from backup.
   see: http://illumos.org/msg/ZFS-8000-8A
  scan: scrub repaired 0 in 0 days 16:33:56 with 43 errors on Thu May 13 11:59:12 2021
config:

        NAME                                              STATE     READ WRITE CKSUM
        data                                              DEGRADED     0     0   646
          raidz1-0                                        DEGRADED     0     0 1.26K
            gptid/9b62fdd0-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            gptid/9cd7d634-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0    10  too many errors
            spare-2                                       DEGRADED     0     0     0
              gptid/9e632a1f-809e-11eb-b94b-b8ca3a662088  DEGRADED     0     0     0  too many errors
              gptid/a598f8d2-809e-11eb-b94b-b8ca3a662088  ONLINE       0     0     0
            gptid/9c9af145-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            gptid/9ddfcc73-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            gptid/9c0290be-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            gptid/9e9a5805-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            gptid/9f1dcff4-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
            spare-8                                       DEGRADED     0     0     0
              gptid/9e983fc6-809e-11eb-b94b-b8ca3a662088  DEGRADED     0     0     0  too many errors
              gptid/a4ee4817-809e-11eb-b94b-b8ca3a662088  ONLINE       0     0     0
            gptid/9c34855b-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     1  too many errors
            gptid/9d8816a7-809e-11eb-b94b-b8ca3a662088    DEGRADED     0     0     0  too many errors
          raidz1-1                                        ONLINE       0     0     0
            gptid/9c30b969-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/9bb77da2-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/9c7c15a5-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/9e31bff1-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/9e0ced9f-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a4f449e2-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a4f14cc5-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a4cec044-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a43f7953-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a553a780-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
            gptid/a57982de-809e-11eb-b94b-b8ca3a662088    ONLINE       0     0     0
        cache
          gptid/a3b16d04-809e-11eb-b94b-b8ca3a662088      ONLINE       0     0     0
        spares
          15162080852368633587                            INUSE     was /dev/gptid/a4ee4817-809e-11eb-b94b-b8ca3a662088
          8425619802607042845                             INUSE     was /dev/gptid/a598f8d2-809e-11eb-b94b-b8ca3a662088

errors: Permanent errors have been detected in the following files:

        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da11/disk_io_time.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da11/disk_ops.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da11/disk_time.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da13/disk_io_time.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da13/disk_time.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da15/disk_octets.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da15/disk_ops.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da15/disk_time.rrd
        /var/db/system/rrd-116db32ddb2646ea8241a0246a497227/localhost/disk-da8/disk_io_time.rrd
        /mnt/data/projects/.zfs/snapshot/projects-auto-2021-05-12_00-00/g37dg/Production/Department/CFX/shots/Ep003/sc002/sh038/movies/g37dg_Ep003_sc002_sh038_cfx_v048_Qcloth_v001.avi
        /mnt/data/projects/.zfs/snapshot/projects-auto-2021-05-12_00-00/g37dg/Production/Department/CFX/shots/Ep003/sc002/sh038/cache/QUANZIA.0242

  pool: freenas-boot
 state: ONLINE
  scan: scrub repaired 0 in 0 days 00:00:03 with 0 errors on Thu May 6 18:45:03 2021
config:
```

```
root@freenas[~]# zpool list
NAME          SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG   CAP  DEDUP  HEALTH    ALTROOT
data          160T  71.8T  88.2T        -         -    5%   44%  1.00x  DEGRADED  /mnt
freenas-boot  232G  1.00G   231G        -         -    0%    0%  1.00x  ONLINE    -
root@freenas[~]# zfs list
NAME                                                    USED  AVAIL  REFER  MOUNTPOINT
data                                                   63.8T  74.0T   156K  /mnt/data
data/.system                                            770M  74.0T   650M  legacy
data/.system/configs-116db32ddb2646ea8241a0246a497227  10.7M  74.0T  10.7M  legacy
data/.system/configs-5aa37175611b480aa1ee1aee411ae28f   313K  74.0T   313K  legacy
data/.system/cores                                     1.23M  74.0T  1.23M  legacy
data/.system/rrd-116db32ddb2646ea8241a0246a497227      75.3M  74.0T  75.3M  legacy
data/.system/rrd-5aa37175611b480aa1ee1aee411ae28f      27.8M  74.0T  27.8M  legacy
data/.system/samba4                                    2.18M  74.0T  2.18M  legacy
data/.system/syslog-116db32ddb2646ea8241a0246a497227   2.20M  74.0T  2.20M  legacy
data/.system/syslog-5aa37175611b480aa1ee1aee411ae28f    405K  74.0T   405K  legacy
data/.system/webui                                      156K  74.0T   156K  legacy
data/cache                                              142G  74.0T   142G  /mnt/data/cache
data/manager                                           3.30T  74.0T  3.07T  /mnt/data/manager
data/projects                                          55.1T  74.0T  54.9T  /mnt/data/projects
data/share                                             5.21T  74.0T  4.83T  /mnt/data/share
freenas-boot
```

My only guess is that there is a problem with the MD1200 disk backplane or the pass-through card.

There is also a problem with the FreeNAS system itself. Will I run into trouble if I rebuild the system?

Please help me...