PLAN

I'm trying to create a storage cluster using the release versions of TrueNAS SCALE and TrueCommand, all hosted at Hetzner. I currently have 3 storage servers that I need to migrate to SCALE, so the plan was to:

1. Buy 1 new storage server plus 2 small temporary servers, just to reach the minimum of 3 servers for the initial cluster (as stated, clustering needs 3 servers).

2. Move the files off the older servers, then add those servers to the cluster.

3. Remove the 2 temporary servers (I know TrueCommand doesn't allow removing servers yet, but hopefully it will soon?)
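As a quick sanity check on the plan above, here is a minimal Python sketch that only counts nodes through each phase and flags any step that would drop the cluster below the 3-server minimum. The node names are hypothetical, and it assumes all 3 old servers eventually join. It does not model the data migration, wiping the old pools, or how cluster volume bricks would be redistributed when a node leaves.

```python
# Hypothetical node names; the counts follow the 3-step plan above.
MIN_CLUSTER_NODES = 3  # minimum number of servers stated for clustering


def simulate_migration(initial, to_join, to_remove):
    """Return the final node list and the node count after every step.

    Raises ValueError if the cluster would ever have fewer than
    MIN_CLUSTER_NODES members.
    """
    nodes = list(initial)
    if len(nodes) < MIN_CLUSTER_NODES:
        raise ValueError("initial cluster needs at least 3 nodes")
    counts = [len(nodes)]
    # Step 2: old servers join once their files have been moved off.
    for node in to_join:
        nodes.append(node)
        counts.append(len(nodes))
    # Step 3: temporary servers leave, one at a time.
    for node in to_remove:
        nodes.remove(node)
        if len(nodes) < MIN_CLUSTER_NODES:
            raise ValueError(f"removing {node} leaves {len(nodes)} nodes")
        counts.append(len(nodes))
    return nodes, counts


final, counts = simulate_migration(
    initial=["new-storage", "temp-1", "temp-2"],
    to_join=["old-storage-1", "old-storage-2", "old-storage-3"],
    to_remove=["temp-1", "temp-2"],
)
print("final nodes:", final)
print("node count per step:", counts)
```

With all 3 old servers joining before the temporary ones are removed, the count never goes below 3, ending at 4 storage servers.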

SETUP

The server specs are:

New storage server (the old servers have the same specs):

Intel Xeon W-2145, 128 GB RAM, 2 x NVMe, 10 x 16 TB HDD, 1 x 10 Gbps NIC

Temporary servers:

Intel Core i7-4770, 32 GB RAM, 2 x 240 GB SSD

As mentioned, the plan is to remove them once all the storage servers have joined the cluster.

The network setup is as follows:

The main interface uses the IPv4 address included with each server.

A VLAN is set up through Hetzner's vSwitch and attached to the main interface. Each server has one private IP on it, of the form 10.0.1.XXX/24.

The TrueCommand node runs on a Hetzner Cloud server (2 x AMD vCPU, 2 GB RAM, 40 GB NVMe) and is connected to the same vSwitch private network as the dedicated servers. It runs TrueCommand v2.1.
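To double-check the private addressing described above, a small Python sketch like this (standard library `ipaddress` only) can confirm that every node, including the TrueCommand server, sits inside the same /24 and that no address is reused. The addresses are hypothetical placeholders, since all I know is the range 10.0.1.XXX/24; swap in the real ones. It only checks the addressing, not whether the vSwitch VLAN actually passes traffic between the nodes.

```python
import ipaddress

# Hypothetical addresses: the real ones are only known to be 10.0.1.XXX/24.
VSWITCH_NET = ipaddress.ip_network("10.0.1.0/24")

HOSTS = {
    "new-storage": "10.0.1.11",
    "temp-1": "10.0.1.12",
    "temp-2": "10.0.1.13",
    "truecommand": "10.0.1.20",
}


def outside_subnet(hosts, net):
    """Return the hosts whose address is not inside the vSwitch subnet."""
    return {name: ip for name, ip in hosts.items()
            if ipaddress.ip_address(ip) not in net}


def duplicate_addresses(hosts):
    """Return (first_host, second_host, ip) for every reused address."""
    seen = {}
    dups = []
    for name, ip in hosts.items():
        if ip in seen:
            dups.append((seen[ip], name, ip))
        else:
            seen[ip] = name
    return dups


print("outside subnet:", outside_subnet(HOSTS, VSWITCH_NET))
print("duplicates:", duplicate_addresses(HOSTS))
```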

ISSUES:

I followed the guide posted here:

TrueNAS SCALE - Clustered SMB HOWTO (www.truenas.com)

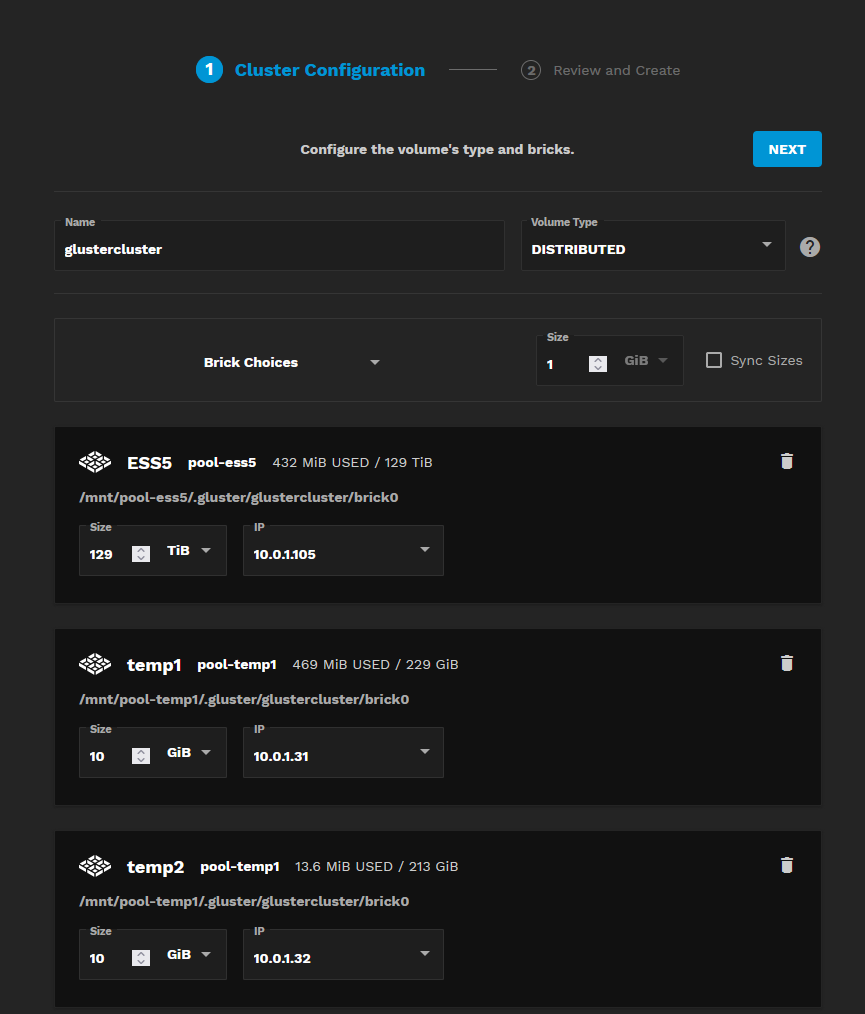

I got as far as adding all 3 servers and starting to create the cluster, using the configuration below:

After I clicked Create, it reported "Cluster volume xxxxxxx created successfully.", but the Cluster Volume page in TrueCommand stayed blank, as if nothing had happened.

I hope someone can point me in the right direction to get this working.
