Running TrueNAS-SCALE-23.10.0.1

PowerEdge R740xd

2x Intel Xeon Silver 4216 CPU @ 2.1 GHz

384 GB RAM

Boot Drives:

2x Dell 480 GB SSD (MZ7LH480HBHQ0D3)

Storage Drives:

9x WD Gold 12 TB HD (WD121KRYZ-01)

Read Cache:

2x WD Black 1TB SN750 NVMe SSD

SLOG:

3x Intel OPTANE SSD P1600X Series 118GB M.2

Hard Drive Controller:

Dell HBA330 Firmware 16.17.01.00

Storage Configuration:

4x Mirror, 2 Wide (12 TB WD Gold drives)

Log VDEVs 1x 118 GB Mirror, 3 wide (Optane)

Cache VDEVs 2x 1 TB (WD Black SSD)

Spare VDEVs 1x 12 TB (WD Gold)

Network Cards:

Onboard NIC (Intel X550 4 Port 10 GbE)

PCIe Card (Intel X710-T 4 Port 10 GbE)

The system is used as an iSCSI target for VMware. Two VMware ESXi hosts are connected via 10 Gb iSCSI (each host has two links, with MPIO and jumbo frames enabled). Two Zvols have been set up (Sparse). Both Zvols are set to "Sync always".

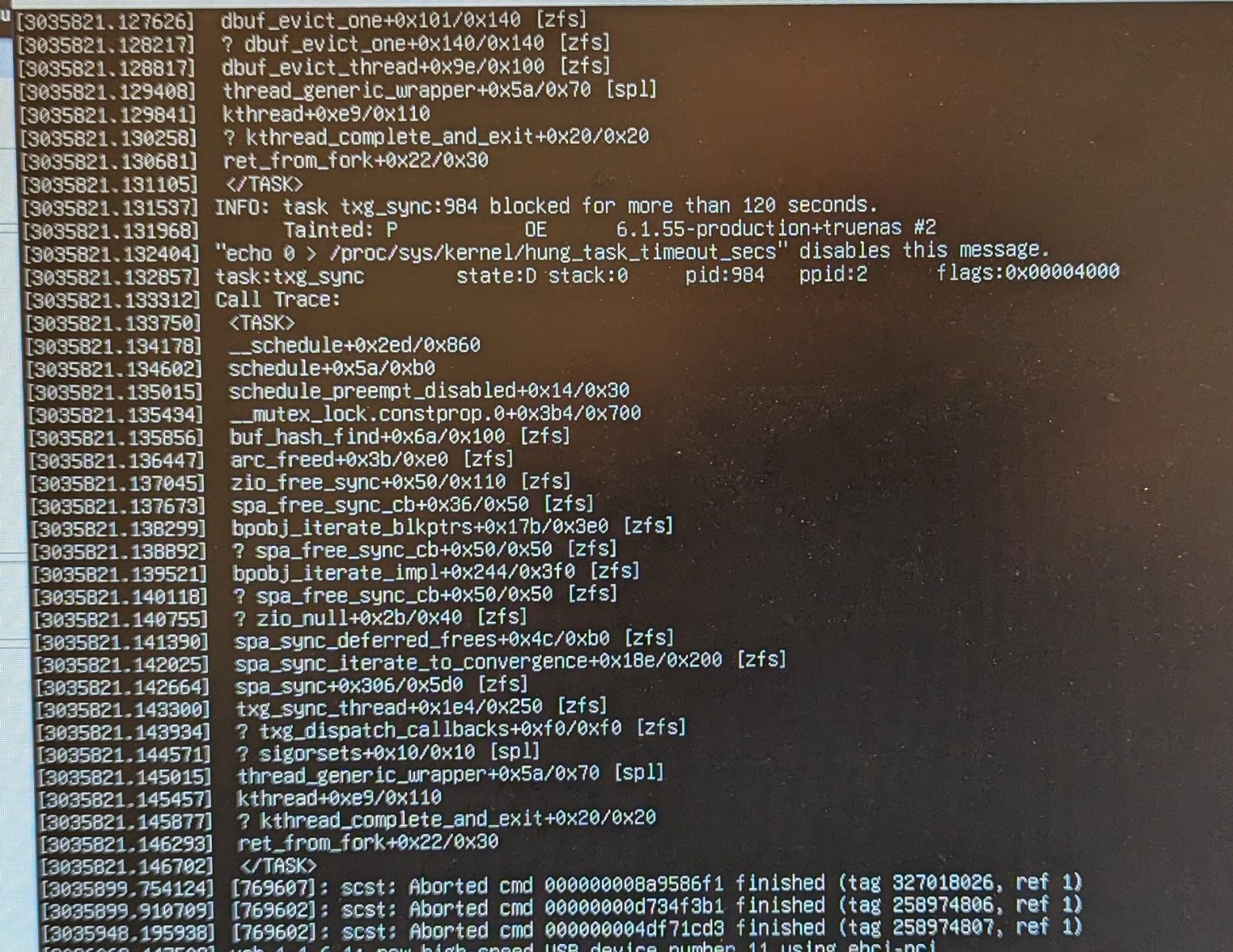

I've had this particular issue happen 3 times now across two different TrueNAS SCALE systems configured as listed above. Under heavy write load (a large Storage vMotion), the iSCSI target stops functioning, along with the web interface. I ended up needing to reboot the TrueNAS server to restore iSCSI connectivity.

The image above is what shows on the console.

Utilization on the storage array is around 15% when this happens.

We are running ZFS Encryption on this volume.

Any suggestions?

Thank you for your time!
