NickF (Guru) · Joined Jun 12, 2014 · Messages: 763

Hi All,

I just wanted to circle back to this since I haven't seen any update on this topic in a while. Is there an actual ticket number I can reference, @morganL?

Tom Lawrence (@LawrenceSystems on here) brought this up in his video on Bluefin today (and in previous videos):

TrueNAS SCALE 22.12.0 Bluefin Is a Whale of an Update! (www.youtube.com)

And Wendell from Level1Techs brought this up about four months ago:

TrueNAS: Full Setup Guide for Setting Up Portainer, Containers and Tailscale #Ultimatehomeserver (www.youtube.com)

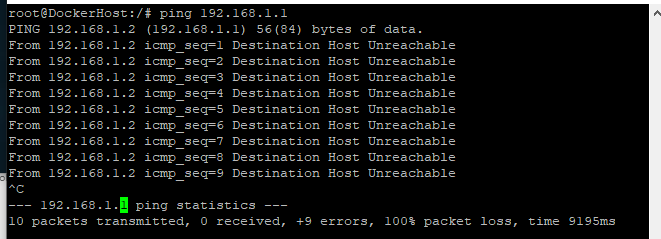

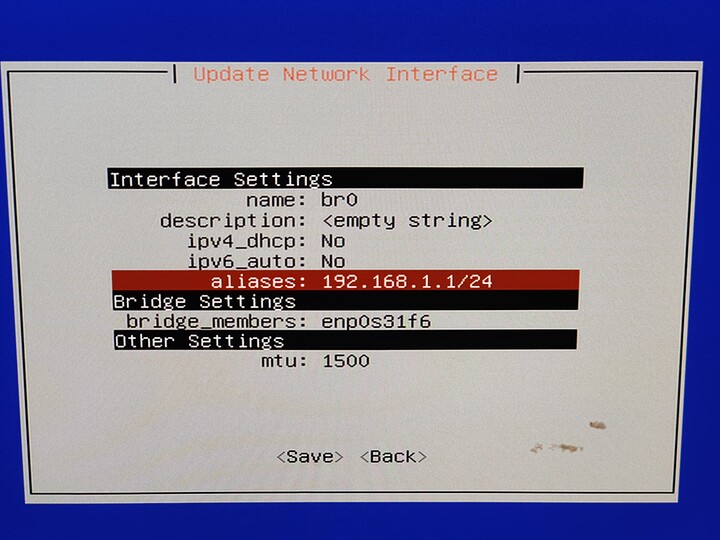

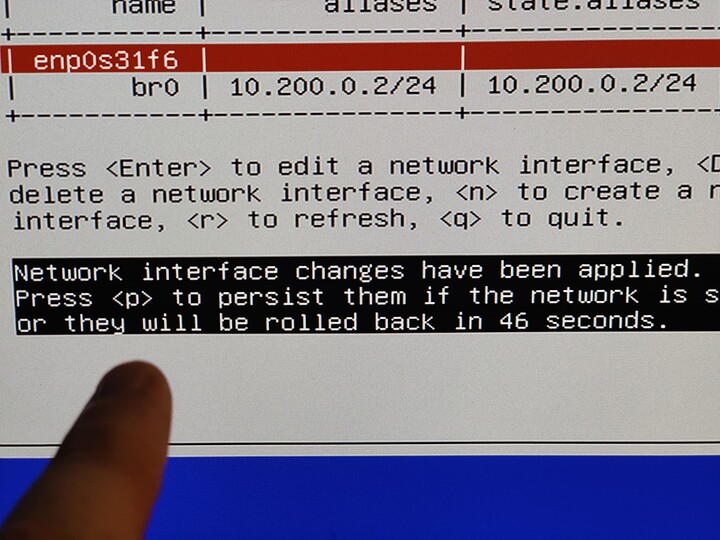

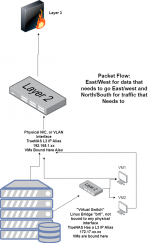

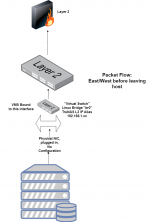

Basically, I am just complaining that VMs can't access the NAS they are running on unless a network bridge is created manually (most folks followed this: https://www.truenas.com/docs/scale/scaletutorials/virtualization/accessingnasfromvm/) or their traffic goes out to the switch and back over a different VLAN.

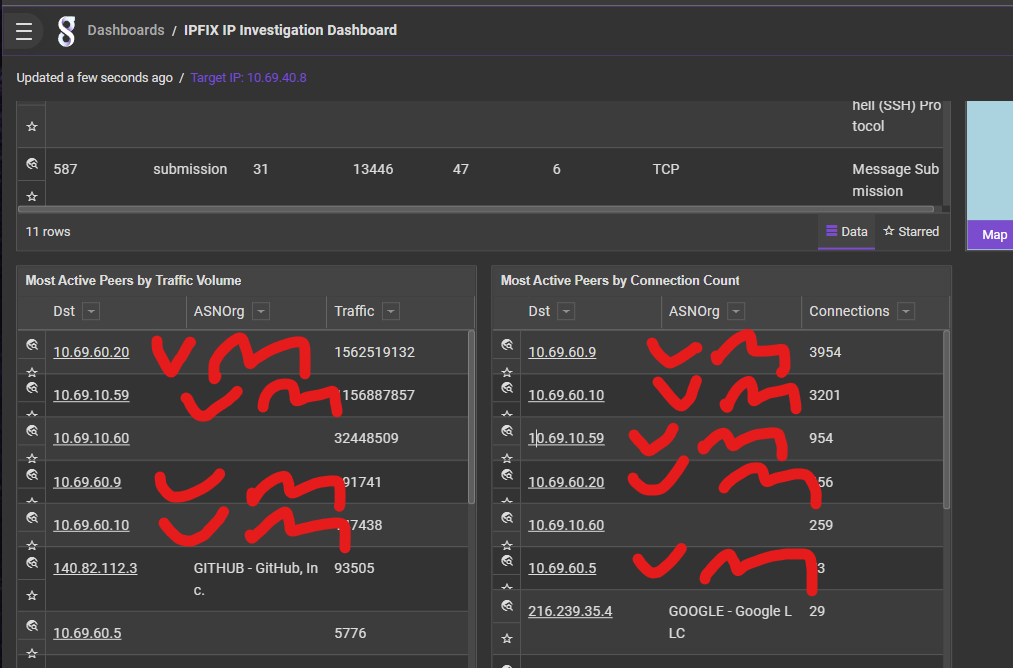

In my configuration I am doing the latter currently, which I think is totally silly. Looking at the past 24 hours, 5 out of 6 of the top addresses talking THROUGH my switch back to my NAS are VMs running on my NAS.

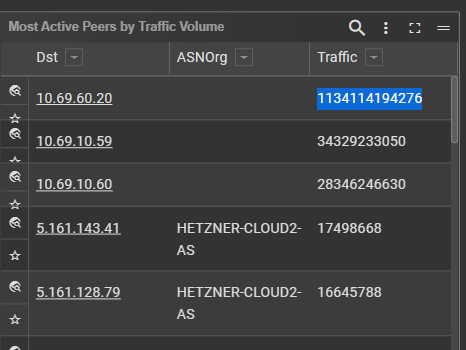

If I expand the time range out to a month, my Plex server literally used a terabyte of network bandwidth to talk to the server it lives on.
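For a rough sense of scale, here is a quick back-of-the-envelope sketch of what a terabyte per month works out to as an average rate. It assumes a decimal terabyte (10^12 bytes) and a 30-day month, neither of which comes from my switch stats, and the function name is just made up for illustration. Real traffic is bursty, so peaks will be far higher than this average.

```python
def average_rate_mbps(total_bytes: float, days: float) -> float:
    """Average throughput in megabits per second for a transfer total over a period."""
    seconds = days * 24 * 60 * 60          # length of the period in seconds
    bits = total_bytes * 8                 # bytes -> bits
    return bits / seconds / 1_000_000      # bits per second -> Mbit/s

# Assumption: 1 TB = 1e12 bytes, month = 30 days.
rate = average_rate_mbps(1e12, 30)
print(f"{rate:.2f} Mbit/s")  # prints "3.09 Mbit/s"
```

The average looks small, but every one of those bits crossed the switch twice (out and back) to reach a host the VM is already sitting on.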

What's going on? Is this really not something folks other than me and a few tech YouTubers care about?

I just wanted to circle back to this since I haven't seen any update on this topic in a while. Is there an actual ticket number I can reference @morganL ?

Tom Lawrence (@LawrenceSystems on here) Brought this up in his video on Bluefin today (and in previous videos):

TrueNAS SCALE 22.12.0 Bluefin Is a Whale of an Update!

TrueNAS SCALE Beta vs TrueNAS CORE Performance Comparisonhttps://youtu.be/uFJaYXUWEb8Release noteshttps://www.truenas.com/docs/scale/scale22.12/Adding admin ...

And Wendell from Level1Techs brought this up back like 4 months ago:

TrueNAS: Full Setup Guide for Setting Up Portainer, Containers and Tailscale #Ultimatehomeserver

Thank you to Fractal for sponsoring this video! Check out the Fractal Meshify 2 Lite here: https://www.fractal-design.com/products/cases/meshify/meshify-2-li...

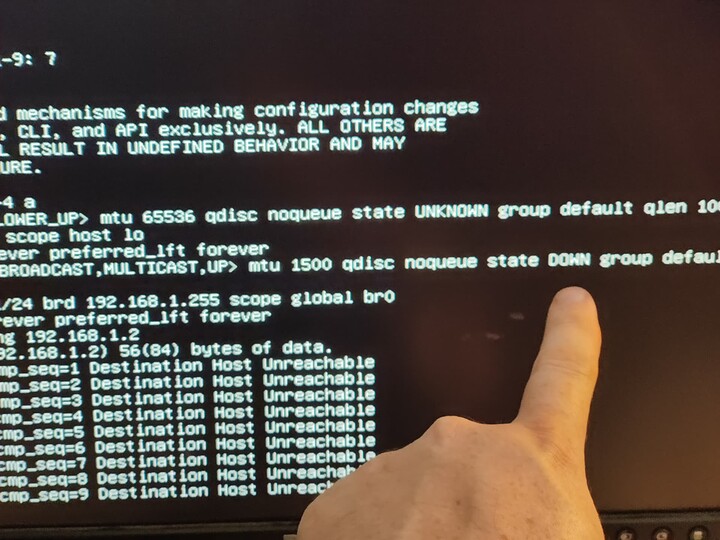

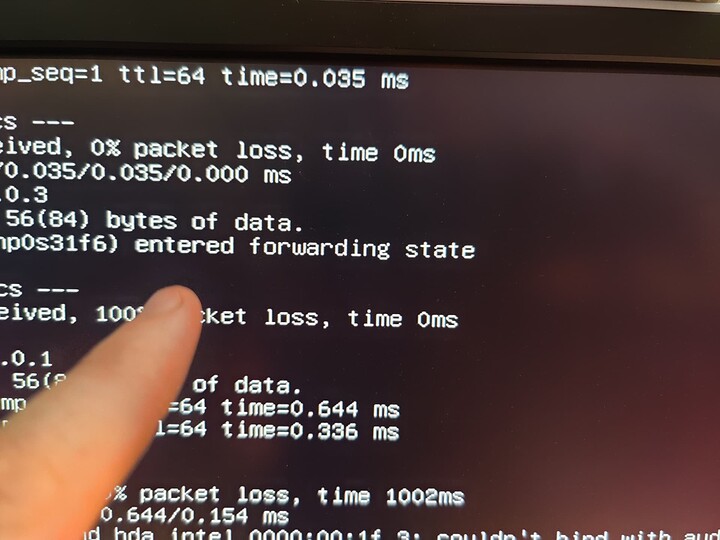

Basically, I am just complaining that VMs can't access the NAS they are running on without a network bridge (most folks followed this: https://www.truenas.com/docs/scale/scaletutorials/virtualization/accessingnasfromvm/) being manually created, or unless they go out to the switch and back over a different VLAN.

In my configuration I am doing the latter currently, which I think is totally silly. Looking at the past 24 hours, 5 out of 6 of the top addresses talking THROUGH my switch back to my NAS are VMs running on my NAS.

If I expand the resolution out to a month, my Plex server literally used a Terrabyte of network bandwidth to talk to the server it lives on.....

What's going on? Is this really not something folks other than me and a few tech YouTubers care about?