danb35

Hall of Famer

- Joined: Aug 16, 2011
- Messages: 15,504

I've started having some trouble with da0 in my system--a few bad sectors, messages in the daily security report below, etc.

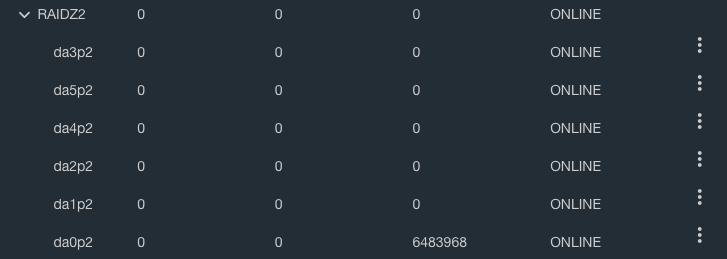


Code:

```
(da0:mps0:0:8:0): READ(10). CDB: 28 00 d5 46 1a e8 00 00 10 00
(da0:mps0:0:8:0): CAM status: SCSI Status Error
(da0:mps0:0:8:0): SCSI status: Check Condition
(da0:mps0:0:8:0): SCSI sense: MEDIUM ERROR asc:11,0 (Unrecovered read error)
(da0:mps0:0:8:0): Info: 0xd5461ae8
(da0:mps0:0:8:0): Error 5, Unretryable error
```
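The CDB in the first log line can be decoded to check that the sector in the `Info:` field is the one the failed read was aimed at. This is a minimal Python sketch, assuming the standard SCSI READ(10) layout (opcode 0x28, bytes 2-5 a big-endian LBA, bytes 7-8 the transfer length in blocks). The `decode_read10` helper is hypothetical and not part of any FreeBSD tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Read10:
    lba: int     # starting logical block address (CDB bytes 2-5, big-endian)
    blocks: int  # transfer length in logical blocks (CDB bytes 7-8, big-endian)


def decode_read10(cdb_hex: str) -> Read10:
    """Decode a READ(10) CDB written as space-separated hex bytes."""
    cdb = bytes.fromhex(cdb_hex)  # fromhex tolerates the spaces between bytes
    if len(cdb) != 10 or cdb[0] != 0x28:  # READ(10) is 10 bytes, opcode 0x28
        raise ValueError("not a READ(10) CDB")
    return Read10(
        lba=int.from_bytes(cdb[2:6], "big"),
        blocks=int.from_bytes(cdb[7:9], "big"),
    )


if __name__ == "__main__":
    cmd = decode_read10("28 00 d5 46 1a e8 00 00 10 00")
    print(hex(cmd.lba), cmd.blocks)  # prints: 0xd5461ae8 16
```

The decoded LBA matches the `Info:` value in the log, so the unrecovered read error is reported against the first block of that 16-block read.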

I have a replacement drive on the way and will swap it in. What's confusing me, though, is the pool status. If I check at the shell, I get this:

Code:

```
root@freenas2:~ # zpool status tank
  pool: tank
 state: ONLINE
  scan: scrub repaired 5.98M in 1 days 00:17:22 with 0 errors on Tue Dec 18 00:19:04 2018
config:

        NAME                                            STATE     READ WRITE CKSUM
        tank                                            ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/9a85d15f-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/9afa89ae-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/9b6cc00b-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/9c501d57-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/9cc41939-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/9d39e31d-8d5c-11e4-8732-0cc47a01304d  ONLINE       0     0     0
          raidz2-1                                      ONLINE       0     0     0
            gptid/f5b737a6-8e41-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/7e2d9269-8a4e-11e5-bec2-002590de8695  ONLINE       0     0     0
            gptid/f68f4fa9-8e41-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/f722e509-8e41-11e4-8732-0cc47a01304d  ONLINE       0     0     0
            gptid/56c2074c-657f-11e6-877d-002590caf340  ONLINE       0     0     0
            gptid/82d5cbf5-2a41-11e6-a151-002590caf340  ONLINE       0     0     0
          raidz2-2                                      ONLINE       0     0     0
            gptid/2c854638-212c-11e6-881c-002590caf340  ONLINE       0     0     0
            gptid/2dc4f155-212c-11e6-881c-002590caf340  ONLINE       0     0     0
            gptid/b7625ade-6980-11e6-877d-002590caf340  ONLINE       0     0     0
            gptid/1abefcac-acb9-11e6-8df3-002590caf340  ONLINE       0     0     0
            gptid/3d317e50-67dd-11e6-877d-002590caf340  ONLINE       0     0     0
            gptid/192328aa-fc8e-11e6-aef3-002590caf340  ONLINE       0     0     0

errors: No known data errors
```
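To compare the CLI counters with what the GUI shows, the config table of `zpool status` can be pulled apart mechanically. A minimal Python sketch follows; `parse_config` and `parse_count` are hypothetical helpers. It assumes that large counters may be abbreviated with K/M/G/T suffixes and that those suffixes are powers of 1024, as in OpenZFS's number formatting; treat that as an assumption, and note that abbreviated values are approximate anyway.

```python
from __future__ import annotations

import re
from typing import NamedTuple

# Assumed 1024-based suffixes for abbreviated counters such as "6.4M".
_SUFFIX = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


class VdevRow(NamedTuple):
    name: str
    state: str
    read: int
    write: int
    cksum: int


def parse_count(text: str) -> int:
    """Turn a counter such as '0', '12' or '6.4M' into an (approximate) int."""
    m = re.fullmatch(r"(\d+(?:\.\d+)?)([KMGT]?)", text)
    if m is None:
        raise ValueError(f"unrecognized counter: {text!r}")
    return int(float(m.group(1)) * _SUFFIX[m.group(2)])


def parse_config(status: str) -> list[VdevRow]:
    """Extract NAME/STATE/READ/WRITE/CKSUM rows from `zpool status` text."""
    rows: list[VdevRow] = []
    in_config = False
    for line in status.splitlines():
        fields = line.split()  # indentation does not matter
        if fields[:2] == ["NAME", "STATE"]:  # table header starts the section
            in_config = True
            continue
        if in_config and line.startswith("errors:"):  # table is over
            break
        if in_config and len(fields) >= 5:  # skip blanks and section titles
            name, state, r, w, c = fields[:5]
            rows.append(VdevRow(name, state, parse_count(r), parse_count(w), parse_count(c)))
    return rows
```

Fed the `zpool status tank` output above, every row comes back with zero READ, WRITE and CKSUM counts, which is the discrepancy in question.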

In the GUI, though, I get this from the relevant vdev:

The columns are, of course, the same as in the CLI output, so the 6.4M figure is the checksum error count for da0.
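The CLI lists pool members by `gptid` label, while the GUI and the kernel log refer to `da0`, so matching the GUI row to a line of `zpool status` means mapping labels to devices. On FreeBSD, `glabel status` prints that mapping; the sketch below assumes its usual three-column `Name Status Components` layout and simply inverts it. Both helper names are hypothetical.

```python
from __future__ import annotations

import re


def gptid_to_device(glabel_output: str) -> dict[str, str]:
    """Map gptid labels to partitions (e.g. 'da0p2') from `glabel status` text.

    Assumes the usual three-column layout: Name, Status, Components.
    """
    mapping: dict[str, str] = {}
    for line in glabel_output.splitlines():
        fields = line.split()
        # Skip the header line and any labels that are not gptid labels.
        if len(fields) == 3 and fields[0].startswith("gptid/"):
            mapping[fields[0]] = fields[2]
    return mapping


def labels_on_disk(mapping: dict[str, str], disk: str) -> list[str]:
    """Return the gptid labels whose partition sits on `disk` (e.g. 'da0')."""
    # GPT partitions are named <disk>p<N> (da0p1, da0p2, ...); matching the
    # whole name keeps 'da1' from also picking up 'da10p2'.
    pattern = re.compile(rf"{re.escape(disk)}p\d+")
    return sorted(label for label, part in mapping.items() if pattern.fullmatch(part))
```

Passing the text of `glabel status` through these helpers with `disk="da0"` should give the label to look for in the `zpool status` listing; that is the row the GUI is charging with the checksum errors.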

I'm kind of confused by the apparent discrepancy. SMART errors not being reflected in `zpool status` is nothing new, of course, but one pool status output showing no errors and a different one showing 6.4 million seems like a significant issue. Thoughts?

Edit: I don't think hardware is really relevant here, but in case it is and you can't see my sig, here it is:

FreeNAS 11.2

SuperMicro SuperStorage Server 6047R-E1R36L (Motherboard: X9DRD-7LN4F-JBOD, Chassis: SuperChassis 847E16-R1K28LPB)

2 x Xeon E5-2670, 128 GB RAM, Chelsio T420E-CR

Pool: 6 x 6 TB RAIDZ2, 6 x 4 TB RAIDZ2, (2 x 2 TB + 4 x 3 TB) RAIDZ2

All the spinners are connected via a SAS2 expander backplane to the onboard LSI 2308 controller.