Hi all,

I'm fairly new to FreeNAS and inherited a system that started throwing Pool Degraded warnings last week. FreeNAS began resilvering the failed disk onto the hot spare in the system, but that resilver failed, with the spare drive reporting a number of write errors.

After the resilver failed, I took the bad spare drive offline and put in a brand-new replacement spare, which resilvered successfully over the weekend. However, I'm still seeing a pool degraded error.

The original drive that failed had a gptid of gptid/f1c8aca9-3990-11e6-804c-0cc47a204d1c and used multipath/disk19p2.

The serial number of the original failed drive was identified as 1EH7Z0TC, and that is the drive that was replaced with the brand-new spare.

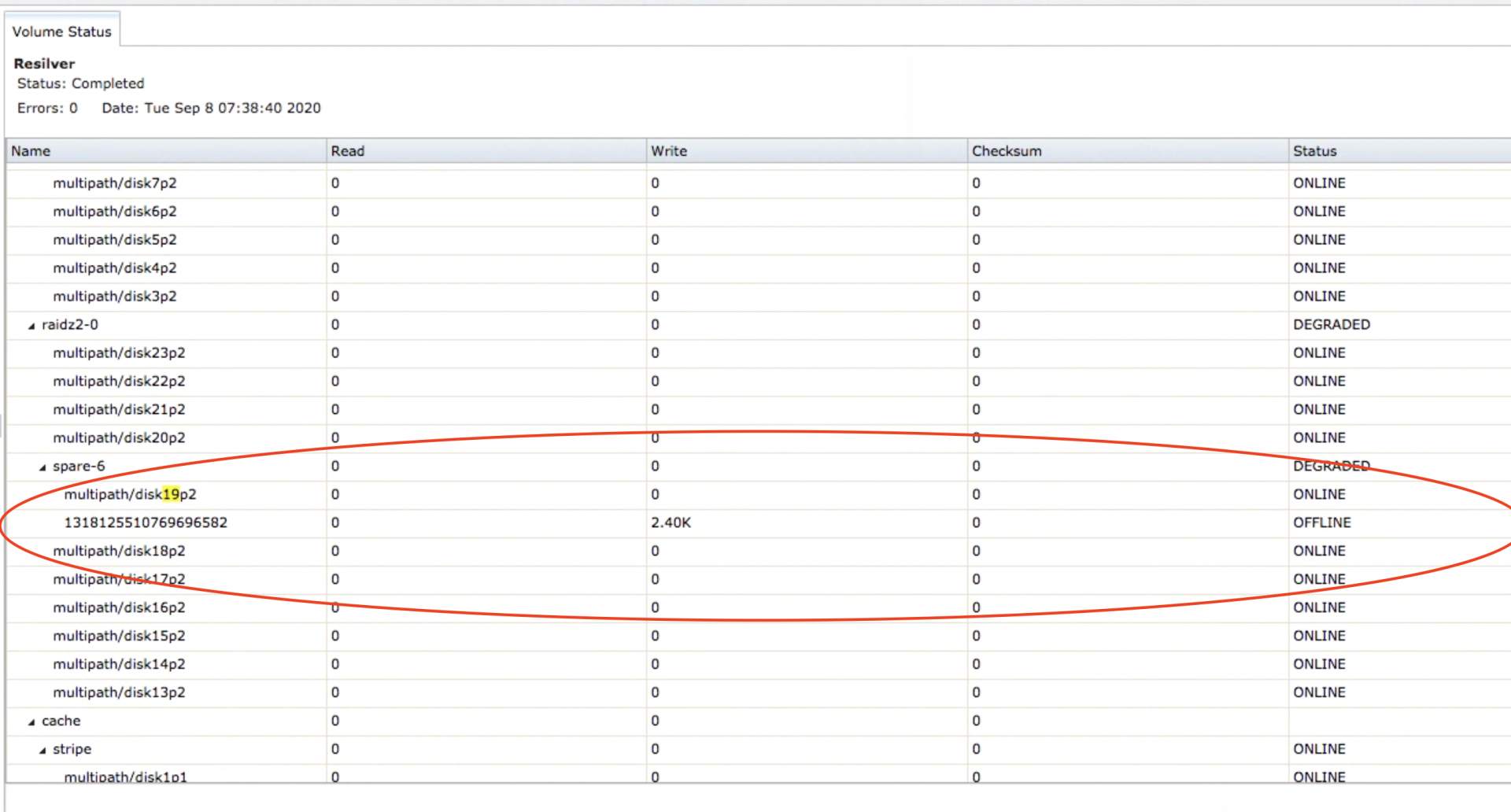

As you can see in the zpool status below, raidz2-0 is showing a "spare-6 DEGRADED" line with a number representing an offline disk, 1318125510769696582, with a former gptid of f1c8aca9-3990-11e6-804c-0cc47a204d1c, which was the original bad disk that was removed. This is also shown under "Volume Status" in the GUI, with the new, successfully resilvered disk taking on multipath/disk19p2 and that same offline disk number 1318125510769696582 listed as a member of spare-6 (image attached).

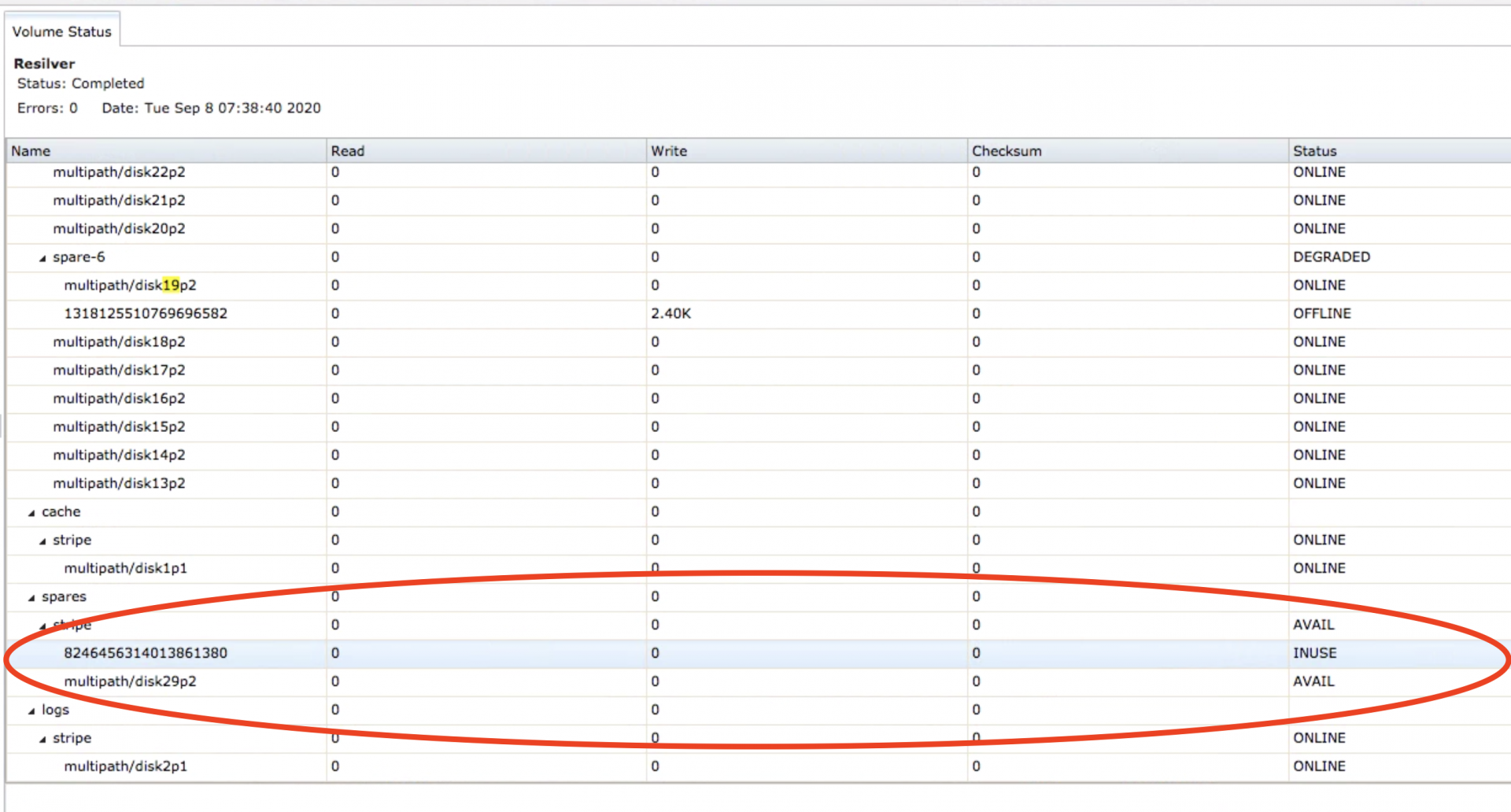

The other strange thing is that under "spares" in my zpool status I'm seeing another number representing a disk, 8246456314013861380, that is marked INUSE.

I'm a bit confused as to what is going on here. How can I safely remove the offline drive 1318125510769696582 and return the pool to a non-degraded status? What is that IN USE spare 8246456314013861380?

Thanks for the assistance!

zpool status:

Code:

pool: Tank

state: DEGRADED

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: http://illumos.org/msg/ZFS-8000-9P

scan: resilvered 3.63T in 3 days 21:04:28 with 0 errors on Tue Sep 8 07:38:40 2020

config:

NAME STATE READ WRITE CKSUM

Tank DEGRADED 0 0 0

raidz2-0 DEGRADED 0 0 0

gptid/ede678b9-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/ee8b136c-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/ef34ca56-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/efd9e177-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f07b5c6a-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f121e271-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

spare-6 DEGRADED 0 0 0

1318125510769696582 OFFLINE 0 2.40K 0 was /dev/gptid/f1c8aca9-3990-11e6-804c-0cc47a204d1c

gptid/13137e2b-eed4-11ea-9178-0cc47a204d1c ONLINE 0 0 0 block size: 512B configured, 4096B native

gptid/f2725ab1-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f31c7aab-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f3c86f37-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f46fcd47-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0

raidz2-1 ONLINE 0 0 0

gptid/24c3de4a-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/7fe3ea2a-c43a-11e9-9fa2-0cc47a204d1c ONLINE 0 0 0 block size: 512B configured, 4096B native

gptid/2617b858-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/26c2dd04-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/276eafc6-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/281dacf3-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/28cb6c3b-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/29741f68-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/2a2398d8-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/2acfd601-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/2b7e2ef4-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

raidz2-2 ONLINE 0 0 0

gptid/f1b884d7-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f265f262-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f31fb68d-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f3d28ab5-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/41e92778-3992-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f530fd26-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f5dea36f-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f696d1e4-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f752f395-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f8052677-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

gptid/f8b4df4b-3991-11e6-804c-0cc47a204d1c ONLINE 0 0 0

logs

gptid/bc393478-399c-11e6-804c-0cc47a204d1c ONLINE 0 0 0

cache

gptid/84d9db25-3992-11e6-804c-0cc47a204d1c ONLINE 0 0 0

spares

gptid/9caa3663-c9eb-11e9-9fa2-0cc47a204d1c AVAIL

8246456314013861380 INUSE was /dev/gptid/13137e2b-eed4-11ea-9178-0cc47a204d1c

errors: No known data errors

pool: freenas-boot

state: ONLINE

scan: scrub repaired 0 in 0 days 00:00:20 with 0 errors on Tue Sep 8 03:45:20 2020

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ada0p2 ONLINE 0 0 0

ada1p2 ONLINE 0 0 0

errors: No known data errors
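
In case it helps anyone reading along, here is a minimal sketch of how the non-healthy rows could be pulled out of the config section above. It is only an illustration: the function name `unhealthy_devices`, the list of states it recognizes, and the trimmed sample text are my own assumptions, not anything FreeNAS or ZFS provides. It simply treats any row whose state is not ONLINE or AVAIL as worth a look and picks up the "was ..." path when there is one.

```python
import re

# Trimmed sample of the config section posted above. Assumption: columns are
# whitespace-separated, with the device name first and the state second.
SAMPLE = """\
NAME STATE READ WRITE CKSUM
Tank DEGRADED 0 0 0
raidz2-0 DEGRADED 0 0 0
gptid/ede678b9-3990-11e6-804c-0cc47a204d1c ONLINE 0 0 0
spare-6 DEGRADED 0 0 0
1318125510769696582 OFFLINE 0 2.40K 0 was /dev/gptid/f1c8aca9-3990-11e6-804c-0cc47a204d1c
gptid/13137e2b-eed4-11ea-9178-0cc47a204d1c ONLINE 0 0 0 block size: 512B configured, 4096B native
spares
gptid/9caa3663-c9eb-11e9-9fa2-0cc47a204d1c AVAIL
8246456314013861380 INUSE was /dev/gptid/13137e2b-eed4-11ea-9178-0cc47a204d1c
"""

# States this sketch recognizes (an illustrative list, not exhaustive).
KNOWN_STATES = {"ONLINE", "AVAIL", "DEGRADED", "OFFLINE", "INUSE",
                "FAULTED", "UNAVAIL", "REMOVED"}
HEALTHY = {"ONLINE", "AVAIL"}


def unhealthy_devices(status_text):
    """Return (name, state, former_path) for every row that is not healthy."""
    rows = []
    for line in status_text.splitlines():
        fields = line.split()
        # Skip blank lines, the column header, and section labels like "spares".
        if len(fields) < 2 or fields[1] not in KNOWN_STATES:
            continue
        name, state = fields[0], fields[1]
        if state in HEALTHY:
            continue
        # Rows for missing devices carry a trailing "was <old path>" note.
        match = re.search(r"was (\S+)", line)
        rows.append((name, state, match.group(1) if match else None))
    return rows


if __name__ == "__main__":
    for name, state, former in unhealthy_devices(SAMPLE):
        print(name, state, former or "")
```

Run against the sample, this keeps the three DEGRADED rows plus the OFFLINE and INUSE entries. It also makes it easier to see that the "was" path on the INUSE spare is the same gptid as the disk now sitting under spare-6.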