Borja Marcos

Hi,

I posted this to freebsd-fs but I imagine it's of interest here as well.

On one of my systems I noticed a severe performance hit for scrubs since the sequential scrub/resilver was introduced. Digging into the issue, I found that the system was stressing the l2arc severely during the scrub. The symptom was a scrub that kept running for several days with negligible progress. There were no hardware problems and none of the disks was misbehaving (I graph the response times and they were uniform).

I am not 100% sure it's related to the new scrub code because I have just started a scrub using the legacy scrub mode and it seems to be happening as well.

Did I somehow hit a worst case, or should vfs.zfs.no_scrub_prefetch default to 1 (prefetch disabled)? For now I am setting it to 1 on my systems.
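For anyone who wants to try the same workaround, here is a minimal sketch of applying the setting at runtime and persisting it on plain FreeBSD. The function names and the `CONF` variable are illustrative and not part of any standard tool. On FreeNAS the persistent setting would normally be added as a sysctl-type tunable in the web UI rather than by editing the file directly.

```shell
#!/bin/sh
# Sketch: disable ZFS scrub prefetch and keep it disabled across reboots.
# Function names and CONF are hypothetical helpers, not standard commands.
TUNABLE=vfs.zfs.no_scrub_prefetch
CONF=${CONF:-/etc/sysctl.conf}

# Apply at runtime (needs root); no reboot required.
disable_scrub_prefetch_now() {
    sysctl "${TUNABLE}=1"
}

# Rewrite CONF so it contains exactly one "TUNABLE=1" line.
persist_scrub_prefetch_off() {
    touch "$CONF" || return 1
    # Keep every line that does not assign this tunable, then append ours.
    awk -F= -v name="$TUNABLE" '$1 != name' "$CONF" > "$CONF.new" &&
        printf '%s=1\n' "$TUNABLE" >> "$CONF.new" &&
        mv "$CONF.new" "$CONF"
}
```

Run `disable_scrub_prefetch_now` as root to change the running system, then `persist_scrub_prefetch_off` to record the setting. Calling the second function repeatedly is safe because it replaces any earlier assignment instead of appending duplicates.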

The server is running FreeBSD 12 but this happened with FreeBSD 11 as well (hence I think it's pertinent to FreeNAS). I just hadn't investigated it before I updated to 12.

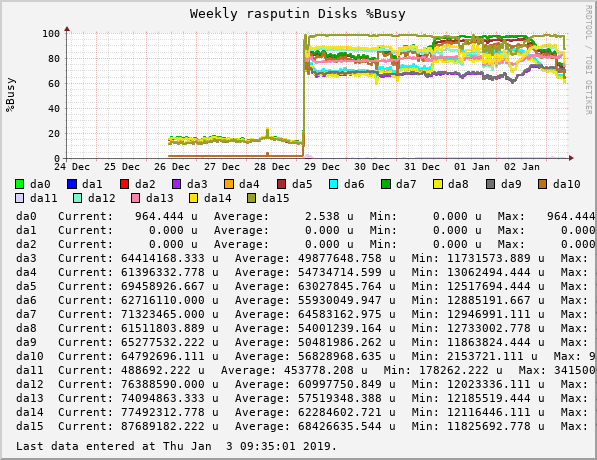

Exhibit one: disk bandwidth.

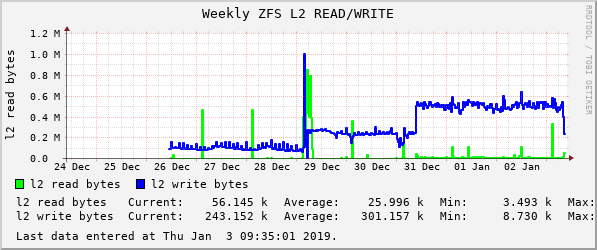

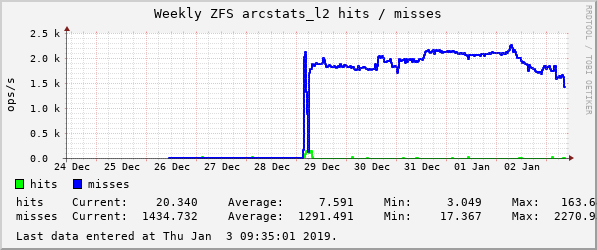

Despite the long running time, by Jan 3rd the scrub had completed less than 20%. Looking at other ZFS stats, I noticed stress on the l2arc: both l2arc writes and l2arc misses had increased.
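One way to watch the same counters without a graphing setup is to sample them with `sysctl`. The sketch below assumes the FreeBSD `kstat.zfs.misc.arcstats` sysctl tree and its `l2_misses` and `l2_write_bytes` counters. The function names and the default 10-second interval are arbitrary choices for illustration.

```shell
#!/bin/sh
# Sketch: print per-second deltas of two L2ARC counters while a scrub runs.
# Assumes FreeBSD's kstat.zfs.misc.arcstats sysctl tree; helper names are
# hypothetical.
read_counter() {
    sysctl -n "kstat.zfs.misc.arcstats.$1"
}

# per_second PREV CUR SECONDS -> integer rate over the interval
per_second() {
    echo $(( ($2 - $1) / $3 ))
}

watch_l2arc() {
    interval=${1:-10}
    prev_miss=$(read_counter l2_misses)
    prev_wr=$(read_counter l2_write_bytes)
    while sleep "$interval"; do
        miss=$(read_counter l2_misses)
        wr=$(read_counter l2_write_bytes)
        printf 'l2_misses/s=%s l2_write_bytes/s=%s\n' \
            "$(per_second "$prev_miss" "$miss" "$interval")" \
            "$(per_second "$prev_wr" "$wr" "$interval")"
        prev_miss=$miss
        prev_wr=$wr
    done
}
```

Running `watch_l2arc 10` once with scrub prefetch enabled and once with it disabled should show the difference in l2arc pressure directly.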

Suspecting something fishy with the scrub prefetches, I tried disabling scrub prefetch, and the effect couldn't have been more dramatic. After I set vfs.zfs.no_scrub_prefetch to 1, the scrub began progressing healthily. The busy percentage of the disks went down and performance improved as well.

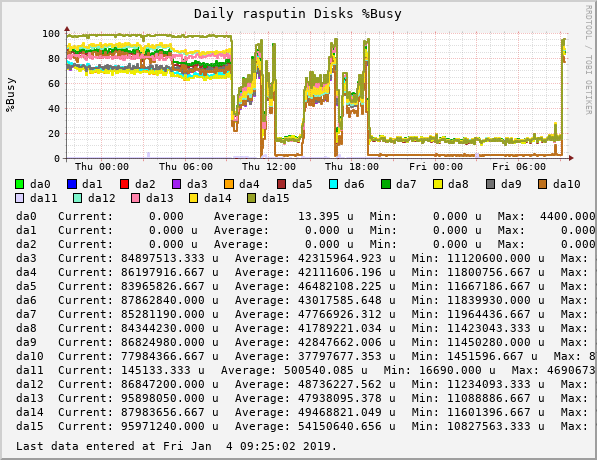

The busy% dropped from almost 100% to 40% when I disabled scrub prefetch. At the same time the scrub began progressing normally, and it actually finished in about three hours. The two peaks after around 13:30 are another scrub I started with scrub prefetch disabled. As you can see, it worked normally.

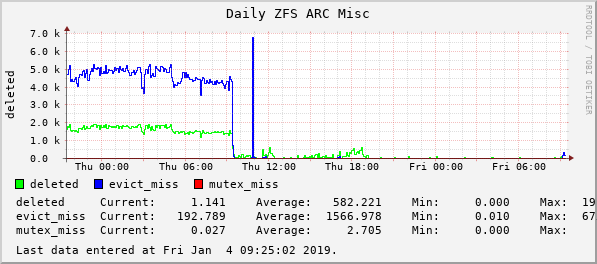

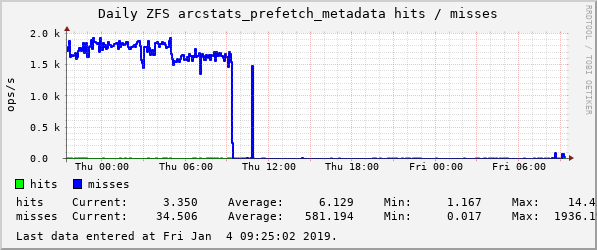

Other stats show that the l2arc was really relieved when I disabled scrub prefetch.

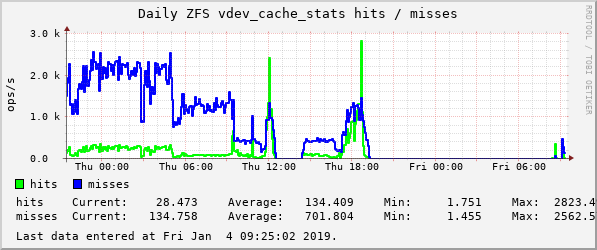

And the prefetch also hurt the vdev cache. It behaved much better during the second scrub with prefetch disabled.

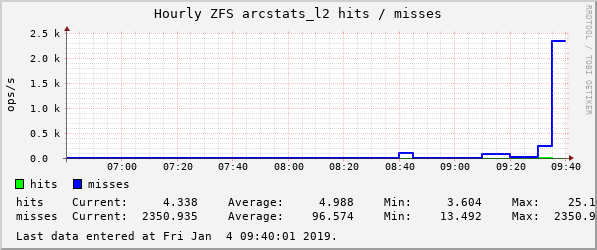

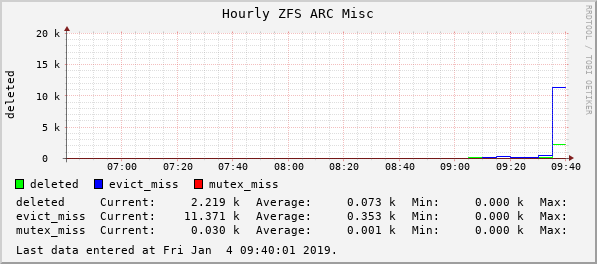

I started another scrub today with scrub prefetch enabled but using the legacy scrub code, and it has stalled again. Looking at the cache stats, I see that it's suffering as well. I include just a couple of graphs.

Now, the system configuration. It's a Sun X4240 (yep, old kit!). I replaced the RAID card with an LSI2008. It's running the IR firmware (I didn't bother to cross-flash it), but of course I am using it just as a plain HBA.

The pool was created in 2012. I know, raidz2 would have been better! And yes, putting both l2arc and zil on a single SSD is not the best choice, but given that the latency is so low it still helps a lot.

    # zpool status
      pool: pool
     state: ONLINE
      scan: scrub in progress since Fri Jan 4 09:10:06 2019
            46.5G scanned at 15.5M/s, 46.5G issued at 15.5M/s, 537G total
            0 repaired, 8.66% done, 0 days 09:01:09 to go
    config:

            NAME        STATE     READ WRITE CKSUM
            pool        ONLINE       0     0     0
              raidz1-0  ONLINE       0     0     0
                da12    ONLINE       0     0     0
                da13    ONLINE       0     0     0
                da14    ONLINE       0     0     0
                da9     ONLINE       0     0     0
                da15    ONLINE       0     0     0
                da3     ONLINE       0     0     0
              raidz1-1  ONLINE       0     0     0
                da10    ONLINE       0     0     0
                da4     ONLINE       0     0     0
                da5     ONLINE       0     0     0
                da6     ONLINE       0     0     0
                da7     ONLINE       0     0     0
                da8     ONLINE       0     0     0
            logs
              da11p2    ONLINE       0     0     0
            cache
              da11p3    ONLINE       0     0     0

    errors: No known data errors
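As a rough sanity check on the figures in this output, the remaining time can be estimated from the total size, the amount issued, and the issue rate. This is only a sketch: it assumes the `G` and `M` suffixes are binary units (GiB and MiB/s), and `scrub_eta` is a hypothetical helper, not a ZFS command.

```shell
#!/bin/sh
# Sketch: estimate scrub time remaining from the figures zpool status prints.
# scrub_eta TOTAL_GIB ISSUED_GIB RATE_MIB_PER_S -> HH:MM:SS
scrub_eta() {
    awk -v total="$1" -v issued="$2" -v rate="$3" 'BEGIN {
        # Remaining data in MiB divided by the rate in MiB/s gives seconds.
        secs = int((total - issued) * 1024 / rate)
        printf "%02d:%02d:%02d\n", int(secs / 3600), int(secs % 3600 / 60), secs % 60
    }'
}
```

`scrub_eta 537 46.5 15.5` prints `09:00:04`, close to the `09:01:09 to go` reported above. The small difference is expected, since the displayed sizes and rate are rounded.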
