Erik Carlson (Dabbler; joined Aug 2, 2012; 19 messages)

Hello All,

I have an HP N40L microserver, now with 16GB of RAM and 3 hard disks: 2 x 1TB drives, which are mirrored, and 1 x 3TB drive, which is on its own.

I have run the system on 8.0.4 without issue, for most of that time on only 8GB of RAM. However, I have wanted some of the features provided in later releases. I previously tried to upgrade to FreeNAS 8.2.0, but when I did, my physical memory would fill up, then swap would fill up, and then the machine would die. It was discussed briefly in another thread, but I rolled back to 8.0.4 before we could spend time figuring out the problem.

I recently discovered that I could upgrade my machine to 16GB of RAM, so I did that and decided to give the upgrade another try, this time with 8.3.0.

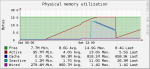

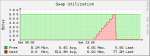

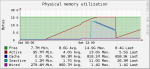

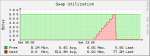

Unfortunately I am seeing similar behaviour, only this time the system seems to get close to falling over (all physical memory and swap is full) and something then happens to reset memory usage before it rises again. Behold the graphs below for physical memory and swap usage:

At the time of the graph snapshot, top shows:

last pid: 24559; load averages: 0.30, 0.27, 0.28 up 1+11:40:41 22:55:31

33 processes: 2 running, 31 sleeping

CPU: 3.9% user, 0.0% nice, 6.2% system, 0.0% interrupt, 89.8% idle

Mem: 5800M Active, 31M Inact, 1467M Wired, 620K Cache, 172M Buf, 8445M Free

Swap: 6144M Total, 51M Used, 6092M Free

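| PID | USERNAME | THR | PRI | NICE | SIZE | RES | STATE | C | TIME | WCPU | COMMAND |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 21371 | root | 1 | 52 | 0 | 5743M | 5712M | select | 0 | 46:08 | 15.19% | smbd |
| 17606 | root | 2 | 44 | 0 | 54432K | 7116K | select | 1 | 11:29 | 0.10% | python |
| 2091 | root | 6 | 44 | 0 | 191M | 87700K | CPU1 | 1 | 10:49 | 0.00% | python |
| 2295 | root | 7 | 44 | 0 | 70304K | 6672K | ucond | 1 | 1:11 | 0.00% | collectd |
| 17230 | root | 1 | 44 | 0 | 37696K | 2940K | select | 0 | 0:08 | 0.00% | afpd |
| 1836 | root | 1 | 44 | 0 | 11672K | 1880K | select | 1 | 0:06 | 0.00% | ntpd |
| 2774 | root | 1 | 76 | 0 | 90868K | 16748K | ttyin | 1 | 0:02 | 0.00% | python |
| 23810 | www | 1 | 44 | 0 | 14400K | 2568K | kqread | 1 | 0:02 | 0.00% | nginx |
| 2046 | root | 1 | 44 | 0 | 40340K | 3904K | select | 1 | 0:01 | 0.00% | nmbd |
| 2206 | avahi | 1 | 44 | 0 | 16804K | 2104K | select | 1 | 0:01 | 0.00% | avahi-daem |
| 2217 | root | 2 | 76 | 0 | 25208K | 2784K | select | 0 | 0:01 | 0.00% | afpd |
| 1622 | root | 1 | 44 | 0 | 6784K | 1044K | select | 1 | 0:01 | 0.00% | syslogd |
| 2048 | root | 1 | 44 | 0 | 47920K | 5496K | select | 1 | 0:01 | 0.00% | smbd |
| 2415 | root | 1 | 76 | 0 | 7840K | 492K | nanslp | 1 | 0:01 | 0.00% | cron |
| 17231 | root | 1 | 44 | 0 | 32416K | 2992K | select | 1 | 0:00 | 0.00% | cnid_dbd |
| 2643 | root | 1 | 44 | 0 | 7844K | 1008K | select | 0 | 0:00 | 0.00% | rpcbind |
| 2054 | root | 1 | 44 | 0 | 13292K | 732K | nanslp | 1 | 0:00 | 0.00% | smartd |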

If I were to sample again later, you would see smbd's memory usage spiral.
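To compare samples taken at different times, a rough Python sketch along these lines could total the resident memory (RES) per command from saved top output. The function names `parse_size` and `resident_by_command` are hypothetical, the sample rows are copied from the listing above, and it assumes top's K/M/G suffixes are powers of 1024 and that each process line has the 12 columns shown.

```python
import re

# Multipliers for the size suffixes top prints (assumed to be powers of 1024).
_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3}


def parse_size(text):
    """Convert a top size such as '5712M' or '87700K' to bytes."""
    match = re.fullmatch(r"(\d+)([KMG]?)", text)
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]


def resident_by_command(top_lines):
    """Sum the RES column per command across top process lines."""
    totals = {}
    for line in top_lines:
        fields = line.split()
        # Process lines have 12 columns and start with a numeric PID;
        # anything else (column header, summary lines) is skipped.
        if len(fields) != 12 or not fields[0].isdigit():
            continue
        command, res = fields[11], fields[6]
        totals[command] = totals.get(command, 0) + parse_size(res)
    return totals


# A few rows copied from the top output in this post.
sample = [
    "PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND",
    "21371 root 1 52 0 5743M 5712M select 0 46:08 15.19% smbd",
    "2091 root 6 44 0 191M 87700K CPU1 1 10:49 0.00% python",
    "2048 root 1 44 0 47920K 5496K select 1 0:01 0.00% smbd",
]

totals = resident_by_command(sample)
for command, size in sorted(totals.items(), key=lambda item: -item[1]):
    print(f"{command}: {size / 1024**2:.0f} MiB")
```

Running this against output saved at intervals would show whether smbd is the only command whose total keeps climbing.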

The system doesn't do anything clever; it just serves media around the house for me and my family, who are away (hence my having time to try the upgrade).

While it's good that the system doesn't fall over completely, I need to get this fixed or I will have to roll back to 8.0.4 and restore my old config.

I have not upgraded my ZFS pools to the new version, and I am not using autotune, dedup, or anything clever (as far as I know). The configuration is pretty much untouched since the upgrade, apart from a minor tweak discussed in another thread to restore 2GB of swap that wasn't being picked up.

Does anyone have any ideas, please?

Thanks

Erik
