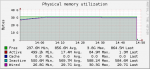

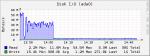

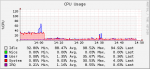

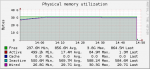

Hi. I just bought and built my first FreeNAS box, but I've run into a problem: when the RAM fills up, the disks' read speed gets hard-limited (if that's the right term).

At the moment a scrub is running in the background.

MOBO: AsRock C2550D4I

RAM: ECC 4X8GB

Disks: 10X3TB WD Red (WD30EFRX)

Storage: 1 pool in RAID-Z2

Boot: SanDisk Cruzer Fit 16G