I can't get the Windows ISO to boot when I set up my VM to use a GPU.

If I configure it to use only VNC, the ISO boots and I can install and use Windows, but as soon as I remove the VNC monitor and set up my GPU, it doesn't boot.

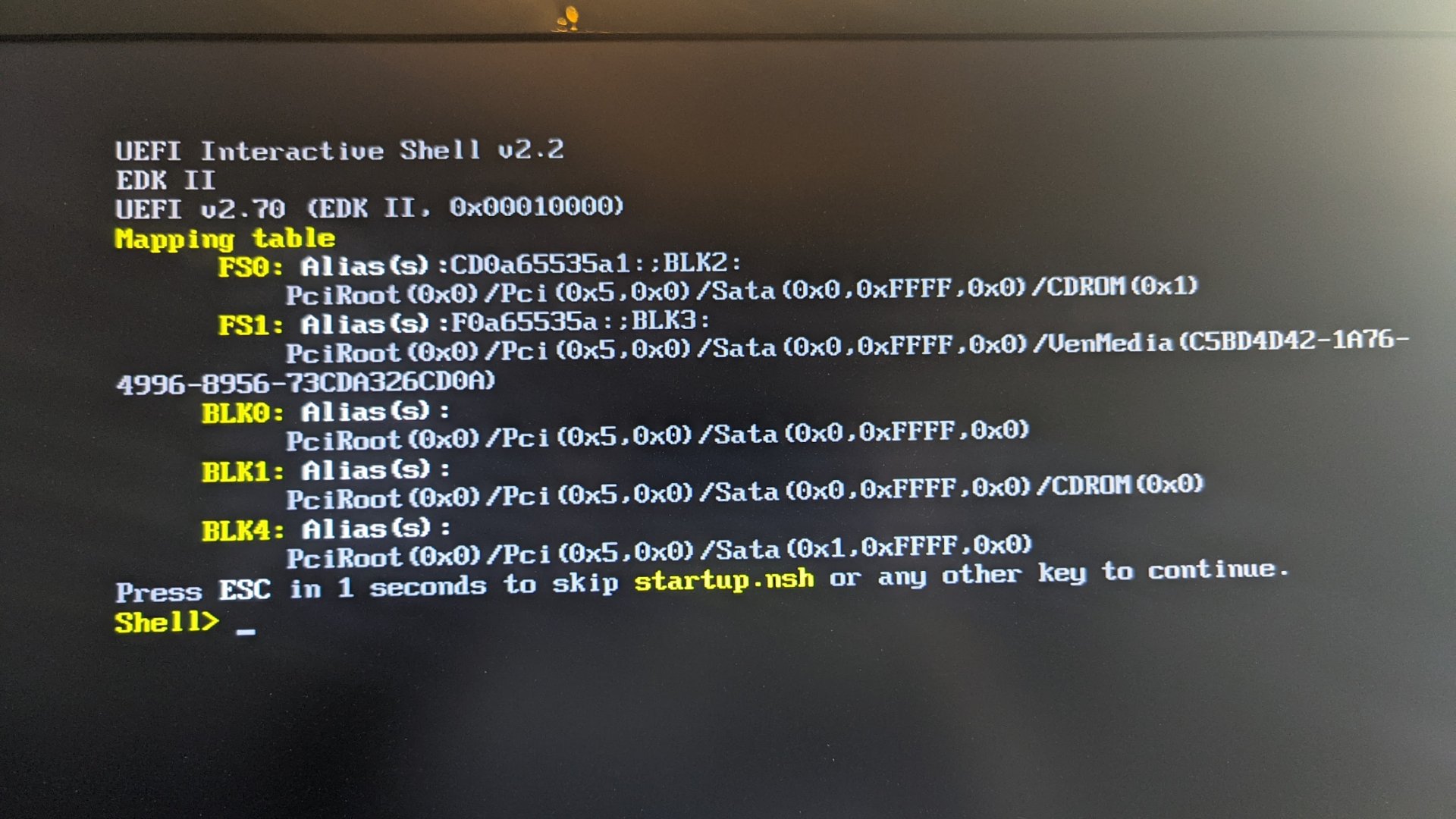

I see a UEFI shell. When I exit it and try to boot from the CD, the screen goes black and then returns to the UEFI.

The same thing happens after I install through VNC: if I exit the UEFI shell and try to boot from the virtual disk, the screen goes black and then returns to the UEFI.

I have tried various Windows ISOs (10 and 11, both x64).

System details:

Version: TrueNAS-SCALE-22.12.3.3

CPU: Intel Core i7-13700K

Motherboard: MSI MAG Z690 (DDR5)

RAM: Corsair VENGEANCE DDR5 RAM 64GB (2x32GB) 5200MHz

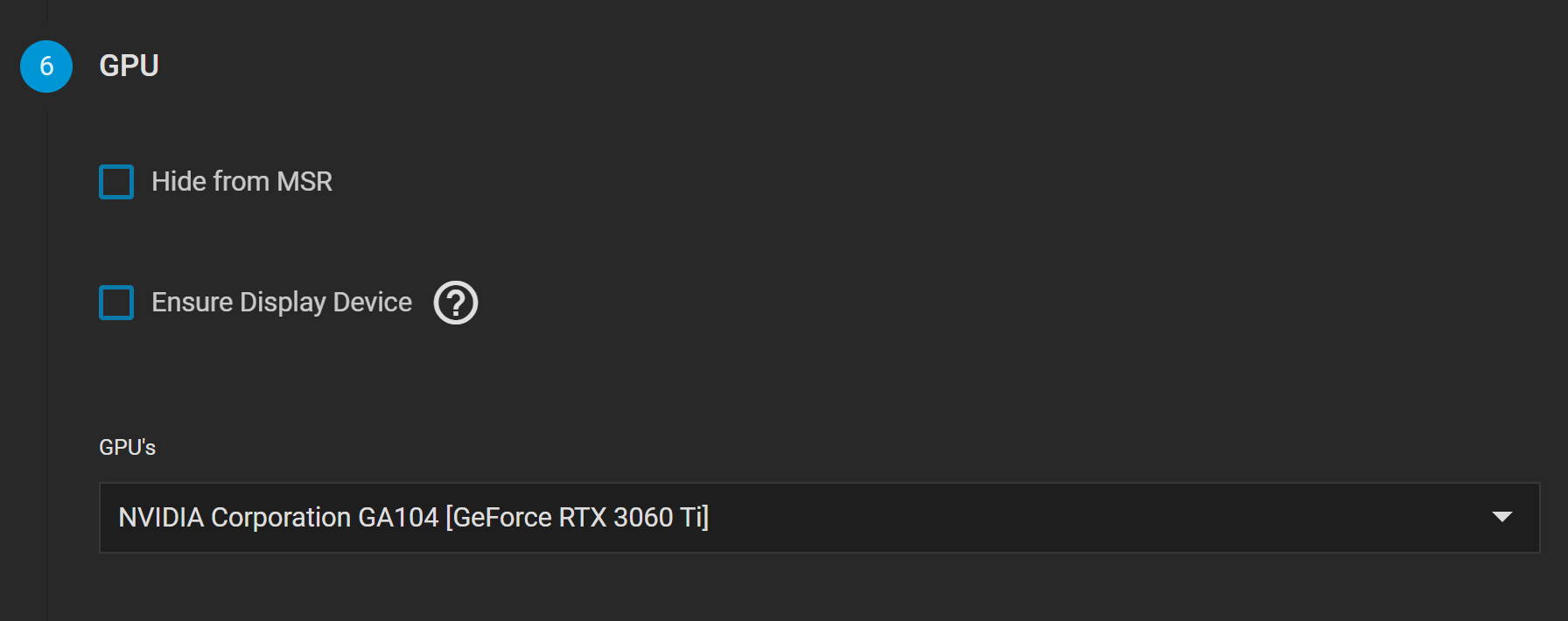

GPU: GeForce RTX 3060 Ti

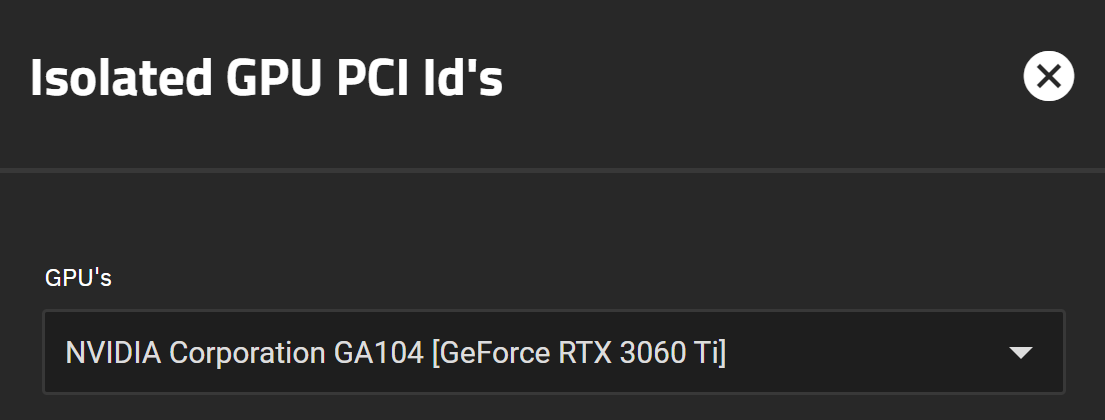

The GPU is set up as isolated.

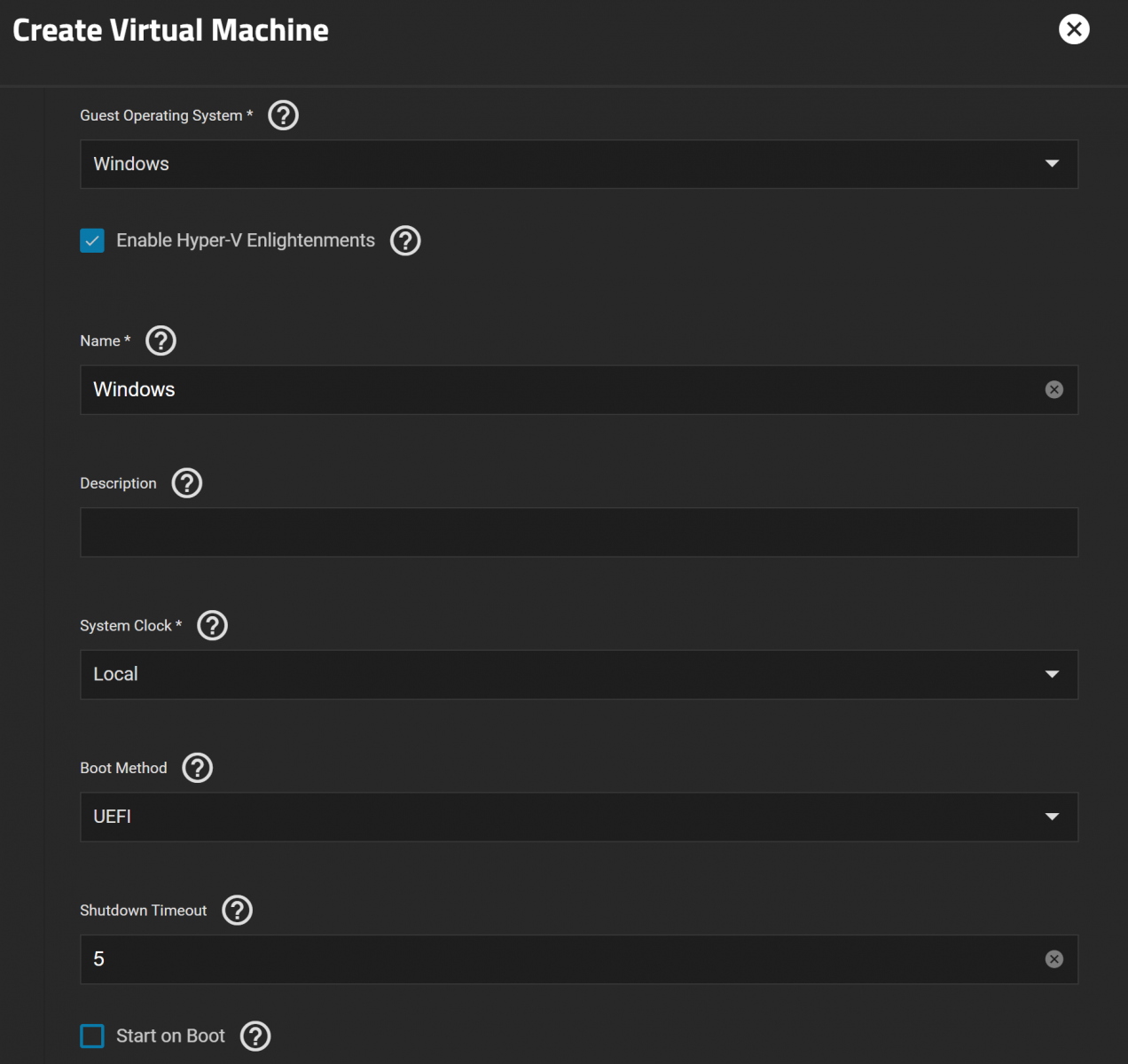

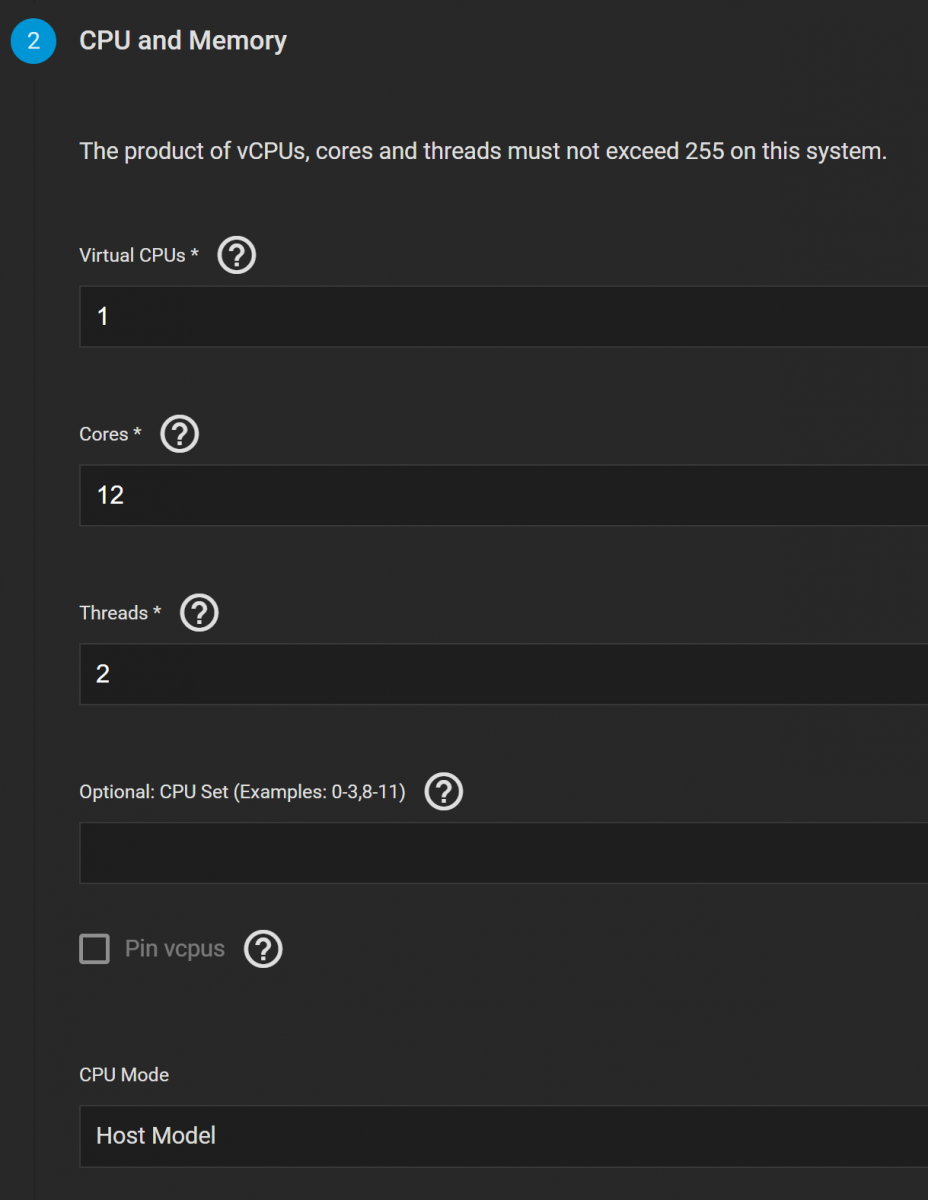

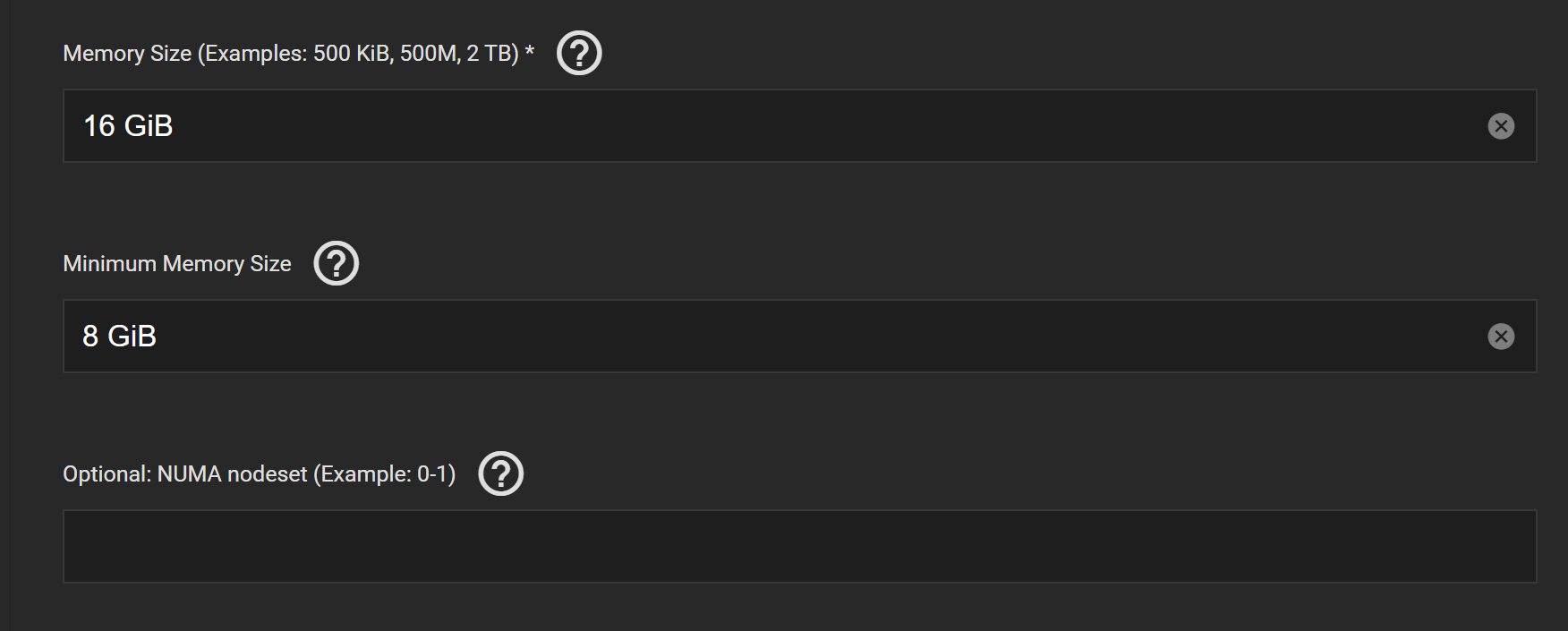

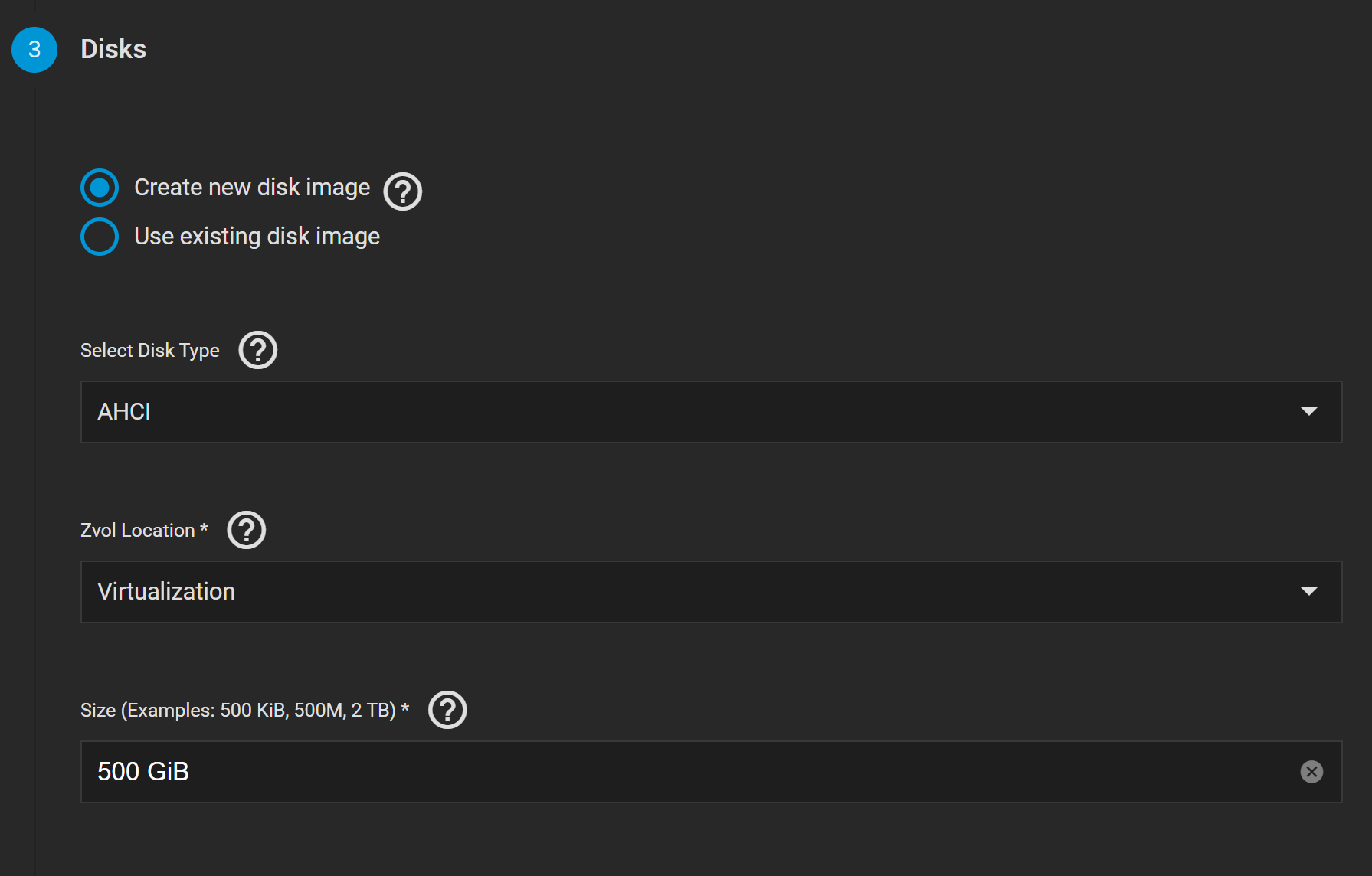

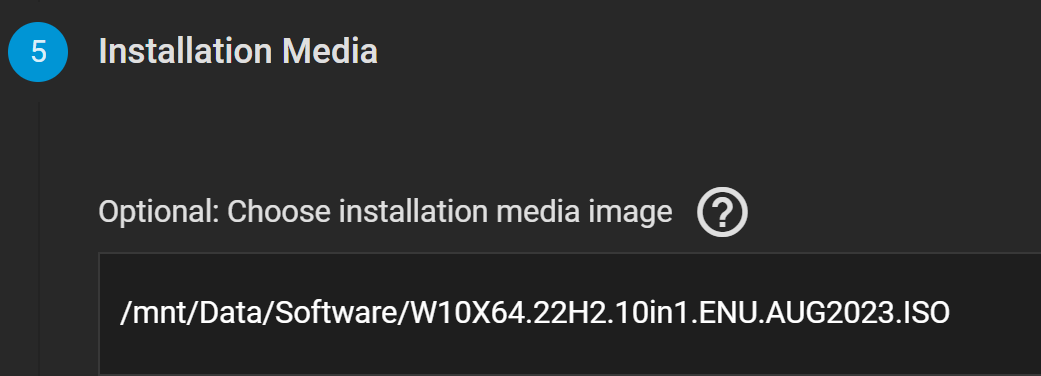

And these are the VM settings:

This is what I get when I boot the machine:

With the last ISO I tried, it seems to start but gets stuck on "Press any key to boot from CD or DVD....".

Am I missing something? What else could I try?