I was happy to see that TN Scale was listing my SAS HBA, an HP SAS9207-8e H3-25280-01c which i want to use to connect my HP Tape Library.

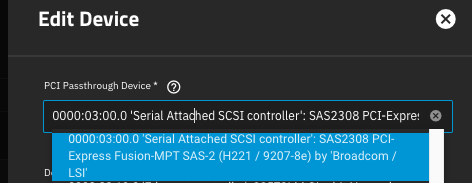

So i reconfigured a VM with a PCI passthrough device.

The VM won't start anymore. I tried to reconfigure the device order.

My Problem is, i don't really understand the error message:

In detail:

Error: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 172, in start if self.domain.create() < 0: File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create raise libvirtError('virDomainCreate() failed') libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2024-01-20T23:15:38.141317Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141321Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:38.141805Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-register [bit 8] 2024-01-20T23:15:38.141810Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141814Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:39.132668Z qemu-system-x86_64: -device vfio-pci,host=0000:03:00.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:00.0: group 1 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver. During handling of the above exception, another exception occurred: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/main.py", line 184, in call_method result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1317, in _call return await methodobj(*prepared_call.args) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1379, in nf return await func(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1247, in nf res = await f(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 46, in start await self.middleware.run_in_thread(self._start, vm['name']) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1234, in run_in_thread return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1231, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run result = self.fn(*self.args, **self.kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 68, in _start self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name)) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 181, in start raise CallError('\n'.join(errors)) middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2024-01-20T23:15:38.141317Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141321Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:38.141805Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-register [bit 8] 2024-01-20T23:15:38.141810Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141814Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:39.132668Z qemu-system-x86_64: -device vfio-pci,host=0000:03:00.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:00.0: group 1 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver.

Could somebody please help me in what to do to get this functional?

Thank you.

So i reconfigured a VM with a PCI passthrough device.

The VM won't start anymore. I tried to reconfigure the device order.

My Problem is, i don't really understand the error message:

Error: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 172, in start if self.domain.create() < 0: File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create raise libvirtError('virDomainCreate() failed') libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2024-01-20T23:15:38.141317Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141321Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:38.141805Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-register [bit 8] 2024-01-20T23:15:38.141810Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141814Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:39.132668Z qemu-system-x86_64: -device vfio-pci,host=0000:03:00.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:00.0: group 1 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver. During handling of the above exception, another exception occurred: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/main.py", line 184, in call_method result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1317, in _call return await methodobj(*prepared_call.args) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1379, in nf return await func(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1247, in nf res = await f(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 46, in start await self.middleware.run_in_thread(self._start, vm['name']) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1234, in run_in_thread return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1231, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run result = self.fn(*self.args, **self.kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 68, in _start self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name)) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 181, in start raise CallError('\n'.join(errors)) middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2024-01-20T23:15:38.141317Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141321Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:38.141805Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-register [bit 8] 2024-01-20T23:15:38.141810Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48BH).vmx-apicv-vid [bit 9] 2024-01-20T23:15:38.141814Z qemu-system-x86_64: warning: host doesn't support requested feature: MSR(48DH).vmx-posted-intr [bit 7] 2024-01-20T23:15:39.132668Z qemu-system-x86_64: -device vfio-pci,host=0000:03:00.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:00.0: group 1 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver.

Could somebody please help me in what to do to get this functional?

Thank you.