u-watanabe (Cadet) · Joined: Aug 15, 2023 · Messages: 1

TrueNAS-SCALE-22.12.3.3

ASUS H170-PRO / Core i5-6500 CPU / 16GB RAM

PCI-Express Slot 1 : DELL PERC H310 SAS adapter

PCI Slot 1 : Earthsoft PT2 (TV tuner board)

03:01.0 Multimedia controller: Xilinx Corporation Device 222a (rev 01)

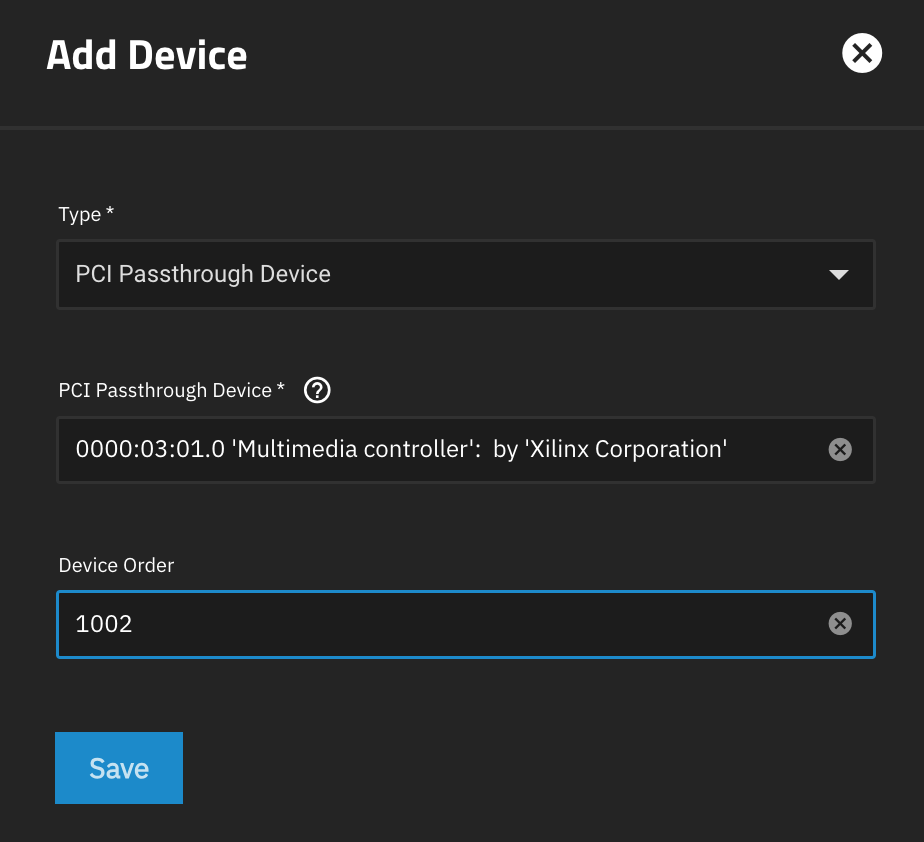

I want to pass this device through to an Ubuntu Desktop 22.04.2 virtual machine.

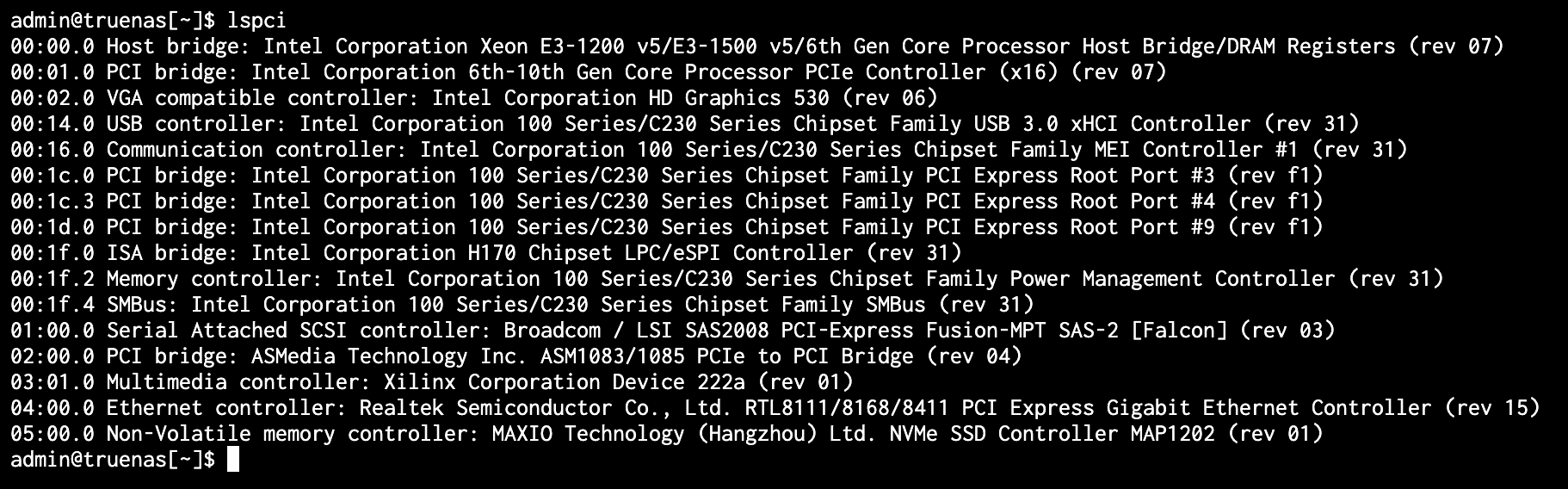

lspci result

When I add this device to the virtual machine and boot it, I get the following error message:

[EFAULT] internal error: qemu unexpectedly closed the monitor: 2023-08-15T14:11:28.613474Z

qemu-system-x86_64: -device vfio-pci,host=0000:03:01.0,id=hostdev0,bus=pci.0,addr=0x7:

vfio 0000:03:01.0: Failed to set up TRIGGER eventfd signaling for interrupt INTX-0: VFIO_DEVICE_SET_IRQS failure: Invalid argument
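To make the important parts of that message easier to see, here is a minimal Python sketch that pulls them out of the QEMU error line. The function name `parse_vfio_irq_error` and the regular expression are illustrative assumptions, not part of TrueNAS or QEMU; the error text is the one quoted above, joined onto a single line.

```python
import re

# Error text copied from this post, joined onto one line.
ERROR = (
    "qemu-system-x86_64: -device vfio-pci,host=0000:03:01.0,id=hostdev0,"
    "bus=pci.0,addr=0x7: vfio 0000:03:01.0: Failed to set up TRIGGER eventfd "
    "signaling for interrupt INTX-0: VFIO_DEVICE_SET_IRQS failure: Invalid argument"
)

# Hypothetical pattern, written only to match the message shape seen above.
PATTERN = re.compile(
    r"vfio (?P<host>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]): "
    r"Failed to set up (?P<action>\w+) eventfd signaling for interrupt "
    r"(?P<irq>[A-Z]+)-(?P<index>\d+): (?P<ioctl>\w+) failure:\s*(?P<reason>.+)$"
)


def parse_vfio_irq_error(message):
    """Return the host PCI address, interrupt type, ioctl and reason, or None."""
    match = PATTERN.search(message)
    return match.groupdict() if match else None


if __name__ == "__main__":
    for key, value in parse_vfio_irq_error(ERROR).items():
        print(f"{key}: {value}")
```

Read this way, the failure is in setting up the legacy INTx interrupt (`INTX-0`) for host device `0000:03:01.0`, and the `VFIO_DEVICE_SET_IRQS` call is rejected with "Invalid argument".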

More info...

Error: Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 172, in start

if self.domain.create() < 0:

File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create

raise libvirtError('virDomainCreate() failed')

libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2023-08-15T14:11:28.613474Z qemu-system-x86_64: -device vfio-pci,host=0000:03:01.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:01.0: Failed to set up TRIGGER eventfd signaling for interrupt INTX-0: VFIO_DEVICE_SET_IRQS failure: Invalid argument

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 204, in call_method

result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self)

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1344, in _call

return await methodobj(*prepared_call.args)

File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1378, in nf

return await func(*args, **kwargs)

File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1246, in nf

res = await f(*args, **kwargs)

File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 46, in start

await self.middleware.run_in_thread(self._start, vm['name'])

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1261, in run_in_thread

return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs)

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1258, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run

result = self.fn(*self.args, **self.kwargs)

File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 68, in _start

self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name))

File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 181, in start

raise CallError('\n'.join(errors))

middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2023-08-15T14:11:28.613474Z qemu-system-x86_64: -device vfio-pci,host=0000:03:01.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:03:01.0: Failed to set up TRIGGER eventfd signaling for interrupt INTX-0: VFIO_DEVICE_SET_IRQS failure: Invalid argument
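In case it helps with diagnosis, the sketch below reads the standard Linux sysfs entries for the device to show which driver it is bound to and which other devices share its IOMMU group. VFIO assigns devices per IOMMU group, so this is commonly checked for passthrough problems. Whether it is related to this particular INTx error is an assumption on my part. The function name `describe_pci_device` and its `sysfs_root` parameter are hypothetical names chosen for illustration.

```python
from pathlib import Path


def describe_pci_device(address, sysfs_root="/sys/bus/pci/devices"):
    """Collect the bound driver and IOMMU group members for one PCI address."""
    dev = Path(sysfs_root) / address
    info = {
        "address": address,
        "driver": None,
        "iommu_group": None,
        "group_members": [],
    }

    # "driver" is a symlink to the bound driver's directory, if any.
    driver = dev / "driver"
    if driver.exists():
        info["driver"] = driver.resolve().name

    # "iommu_group" is a symlink to the group directory, named by group number.
    group = dev / "iommu_group"
    if group.exists():
        info["iommu_group"] = group.resolve().name
        info["group_members"] = sorted(
            entry.name for entry in (group / "devices").iterdir()
        )
    return info


if __name__ == "__main__":
    # 0000:03:01.0 is the tuner's address from the error message above.
    print(describe_pci_device("0000:03:01.0"))
```

If `group_members` lists more than the tuner itself, those other devices are in the same IOMMU group as the card.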

Do you have any tips on how to solve the problem? Best regards.
