It looks like you accepted the default LVM disk layout when you installed your Ubuntu VM from the live server ISO. Here is an example with a 20GB virtual disk device:

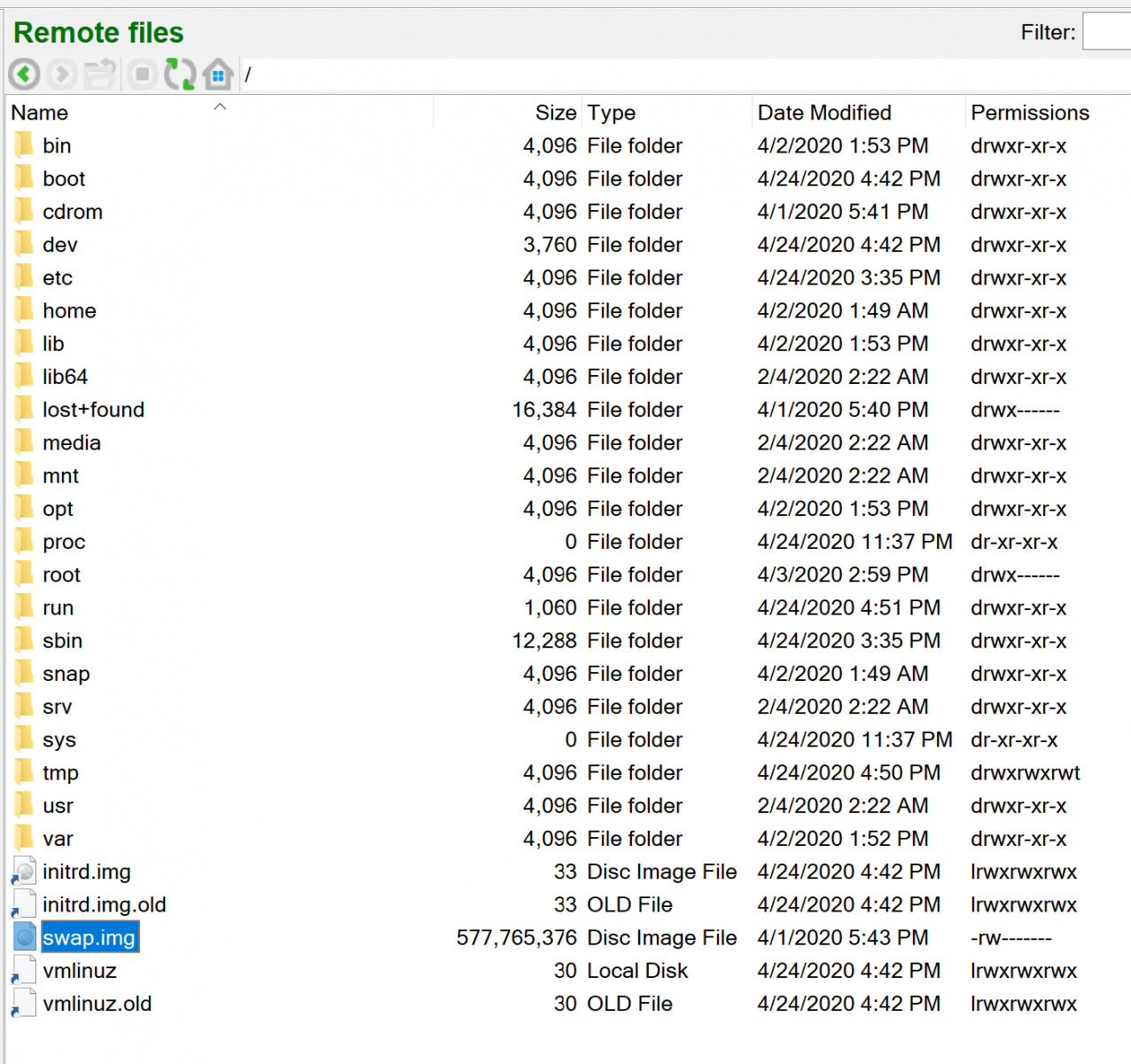


Notice that partition /dev/vda3 is an LVM2 member backing a volume group of matching size (98GB in your case). The root logical volume, however, has only been given 4GB, leaving most of the volume group unallocated.

Post-install inside my example VM:

Code:

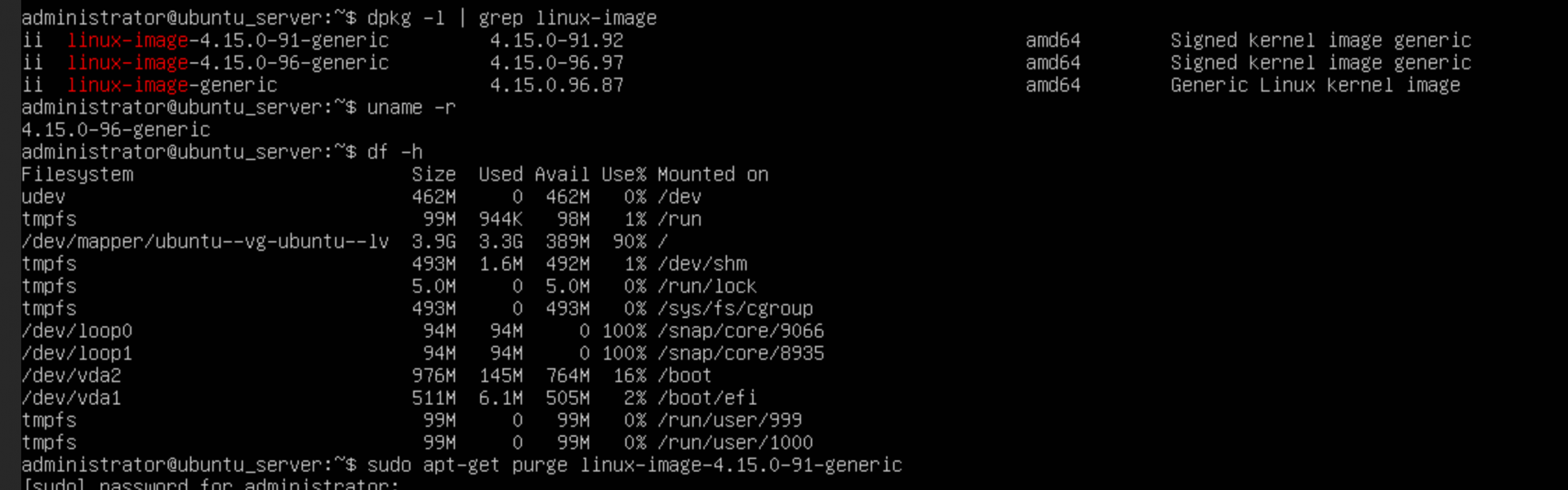

root@u18vm:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 966M 0 966M 0% /dev

tmpfs 200M 1.1M 199M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 3.9G 1.8G 1.9G 49% /

tmpfs 997M 0 997M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 997M 0 997M 0% /sys/fs/cgroup

/dev/loop0 90M 90M 0 100% /snap/core/8268

/dev/vda2 976M 77M 832M 9% /boot

tmpfs 200M 0 200M 0% /run/user/1000

root@u18vm:~# vgdisplay

--- Volume group ---

VG Name ubuntu-vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size <19.00 GiB

PE Size 4.00 MiB

Total PE 4863

Alloc PE / Size 1024 / 4.00 GiB

Free PE / Size 3839 / <15.00 GiB

VG UUID y33HEq-VweL-VAfK-H4ja-xQAh-W55d-cULkny

root@u18vm:~# lvdisplay

--- Logical volume ---

LV Path /dev/ubuntu-vg/ubuntu-lv

LV Name ubuntu-lv

VG Name ubuntu-vg

LV UUID aaV1Ez-UOq1-rjZs-vtj4-1NTE-Comz-ewzYEF

LV Write Access read/write

LV Creation host, time ubuntu-server, 2020-04-26 07:44:39 +0000

LV Status available

# open 1

LV Size 4.00 GiB

Current LE 1024

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

root@u18vm:~#
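As a quick sanity check on the vgdisplay numbers above, an extent count converts to a size by multiplying by the PE size (4 MiB here). A minimal sketch, where `extents_to_mib` is just an illustrative helper name and not an LVM tool:

```shell
# Illustrative helper (not part of LVM): extent count * PE size in MiB.
extents_to_mib() {
    echo $(( $1 * $2 ))
}

extents_to_mib 3839 4   # free extents  -> 15356 (MiB), just under 15 GiB
extents_to_mib 4863 4   # total extents -> 19452 (MiB), just under 19 GiB
```

That is why vgdisplay reports the sizes as "<15.00 GiB" and "<19.00 GiB" rather than round figures.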

To expand the logical volume ubuntu-lv so that it uses all the free space on the volume group ubuntu-vg, add the free extents (3839 in my example) to it and resize the filesystem on ubuntu-lv. Both steps can be done in one command, "lvresize -l +3839 --resizefs ubuntu-vg/ubuntu-lv", e.g.:

Code:

root@u18vm:~# lvresize -l +3839 --resizefs ubuntu-vg/ubuntu-lv

Size of logical volume ubuntu-vg/ubuntu-lv changed from 4.00 GiB (1024 extents) to <19.00 GiB (4863 extents).

Logical volume ubuntu-vg/ubuntu-lv successfully resized.

resize2fs 1.44.1 (24-Mar-2018)

Filesystem at /dev/mapper/ubuntu--vg-ubuntu--lv is mounted on /; on-line resizing required

old_desc_blocks = 1, new_desc_blocks = 3

The filesystem on /dev/mapper/ubuntu--vg-ubuntu--lv is now 4979712 (4k) blocks long.

root@u18vm:~# lvdisplay

--- Logical volume ---

LV Path /dev/ubuntu-vg/ubuntu-lv

LV Name ubuntu-lv

VG Name ubuntu-vg

LV UUID aaV1Ez-UOq1-rjZs-vtj4-1NTE-Comz-ewzYEF

LV Write Access read/write

LV Creation host, time ubuntu-server, 2020-04-26 07:44:39 +0000

LV Status available

# open 1

LV Size <19.00 GiB

Current LE 4863

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

root@u18vm:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 966M 0 966M 0% /dev

tmpfs 200M 1.1M 199M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 19G 1.8G 17G 11% /

tmpfs 997M 0 997M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 997M 0 997M 0% /sys/fs/cgroup

/dev/loop0 90M 90M 0 100% /snap/core/8268

/dev/vda2 976M 77M 832M 9% /boot

tmpfs 200M 0 200M 0% /run/user/1000

root@u18vm:~#

Replace 3839 with the "Free PE" count that vgdisplay reports for your own volume group.
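If you would rather not copy the number by hand, something along these lines should work. It is only a sketch: `free_extents` is a hypothetical helper I made up for illustration, and it assumes the "Free PE / Size" line is laid out as in the vgdisplay output above. The real commands are left as comments so the snippet runs without root or LVM installed.

```shell
# Hypothetical helper (not part of LVM): reads `vgdisplay` output on stdin
# and prints the number of free physical extents.
free_extents() {
    # The relevant line looks like: "Free  PE / Size       3839 / <15.00 GiB"
    awk '/Free +PE/ { print $5 }'
}

# Demo against the line from the example VM:
printf '  Free  PE / Size       3839 / <15.00 GiB\n' | free_extents

# On a real system (as root) you would do something like:
#   n=$(vgdisplay ubuntu-vg | free_extents)
#   lvresize -l "+${n}" --resizefs ubuntu-vg/ubuntu-lv
# or let LVM work out the number itself:
#   lvresize -l +100%FREE --resizefs ubuntu-vg/ubuntu-lv
```

The `-l +100%FREE` form is usually the simplest choice, since it asks for all remaining free extents in the volume group without you having to look the count up.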

A couple of useful refs (both on www.digitalocean.com):

LVM, or Logical Volume Management, is a storage device management technology that gives users the power to pool and abstract the physical layout of component…