So I have a bit of a weird issue. I updated my Plex VM (sudo apt update/upgrade), which runs Ubuntu Server 18.04.3 LTS. After the updates completed and the VM rebooted, I started having trouble logging in over the VNC connection: messages keep popping up and interrupting the login process. Plex no longer loads and I can't access the system. I have since tried installing Ubuntu Server 18.04 LTS, 18.04.4 LTS, and 19.10, and they all throw the same or similar error messages before I can even log in. At this point I can't log in to even a completely fresh install. However, I have other VMs running 18.04.3 LTS that are fully operational and aren't throwing any errors, I assume because I haven't updated them. It looks to me like there is some kind of incompatibility between newer versions of Ubuntu and running in a FreeNAS VM. I also went ahead and updated FreeNAS from 11.2 U8 to 11.3 U2 and upgraded both of my pools. The pool in question is 22 x 4TB drives in a RAID10 setup, and the VM images are stored on it. I have checked for errors under the status tab and it shows zero errors. I am currently running a scrub as well.


Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums


Ubuntu Virtual Machine No Longer Works

- Thread starter kaamady


Basil Hendroff

Wizard

- Joined

- Jan 4, 2014

- Messages

- 1,644

FYI, I had Ubuntu Desktop 18.04.4 running successfully in a FreeNAS 11.3-U1 VM. Today, I updated FreeNAS to 11.3-U2. Everything is still working as it should be. Could there be an issue with the zvol the VM uses? Do you have an earlier snapshot of the zvol you could test against?

Sadly I'm dumb and didn't create any snapshots. Not only does this issue show up on the updated 18.04.3 zvol VM, it also shows up on fresh installs of the same version and newer. I have 3 other VMs running 18.04.3 that are working completely fine, as I haven't run sudo apt update on them yet.

Stage5-F100

Dabbler

- Joined

- Feb 29, 2020

- Messages

- 26

Same issue on my end! All Ubuntu VMs are broken. I either get the same errors as OP, or the mouse cursor won't respond and the machine won't get past the login/greeter page.

Edit:

I can verify that CentOS 8 and Windows 10 work.

Manjaro, and *all* versions of Ubuntu (18.04, 19.10, 20.04-Beta) do not work. These problems started this morning, when I noticed that nearly all of my VMs were down.


Stage5-F100

Dabbler

- Joined

- Feb 29, 2020

- Messages

- 26

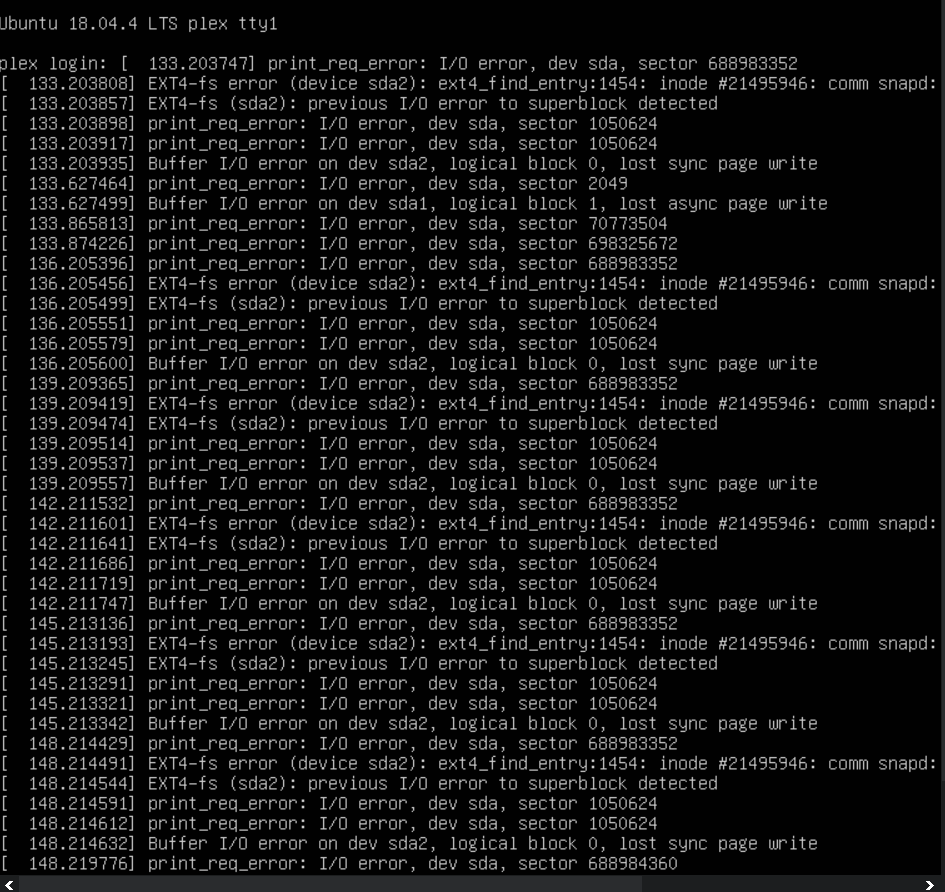

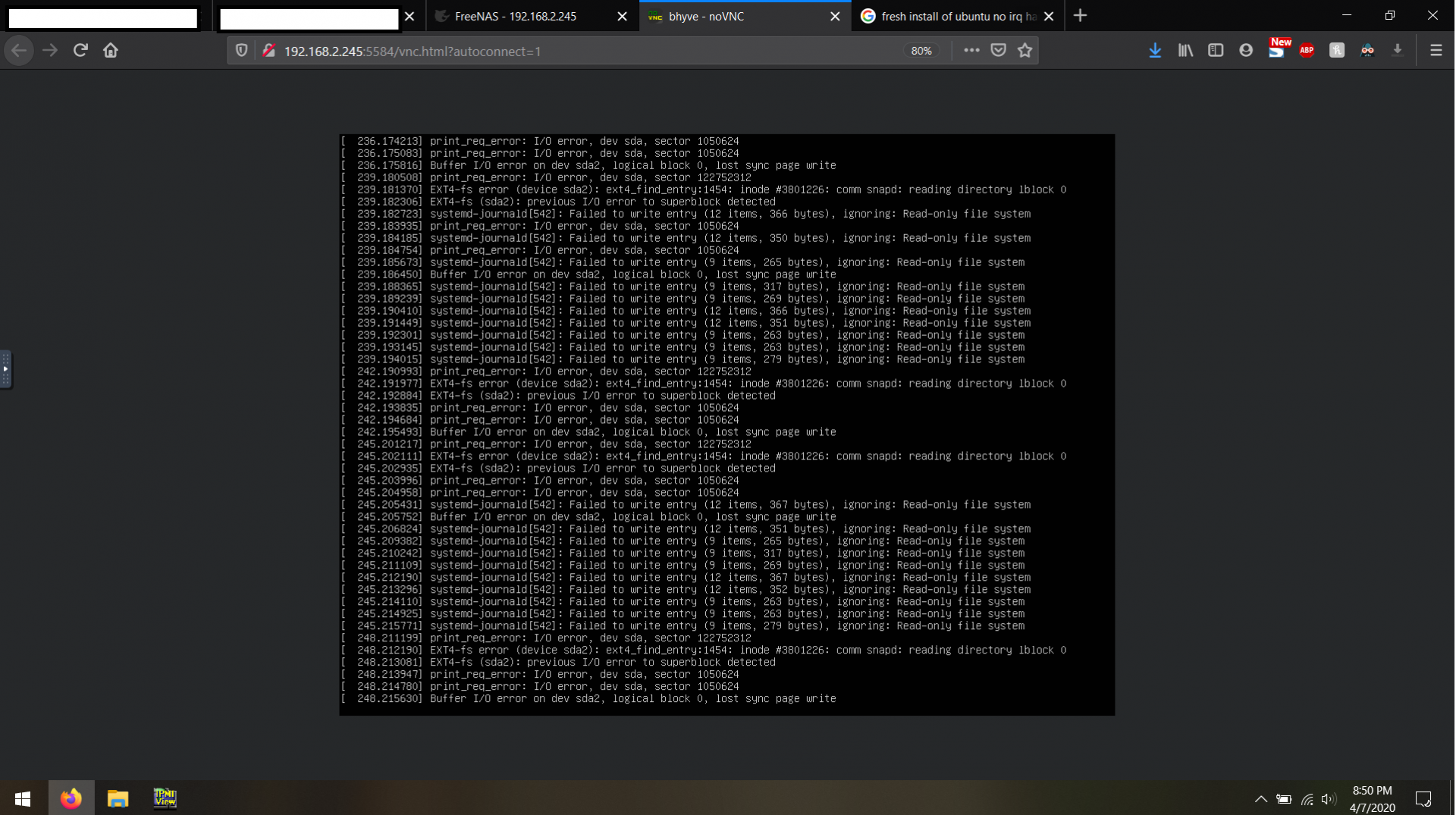

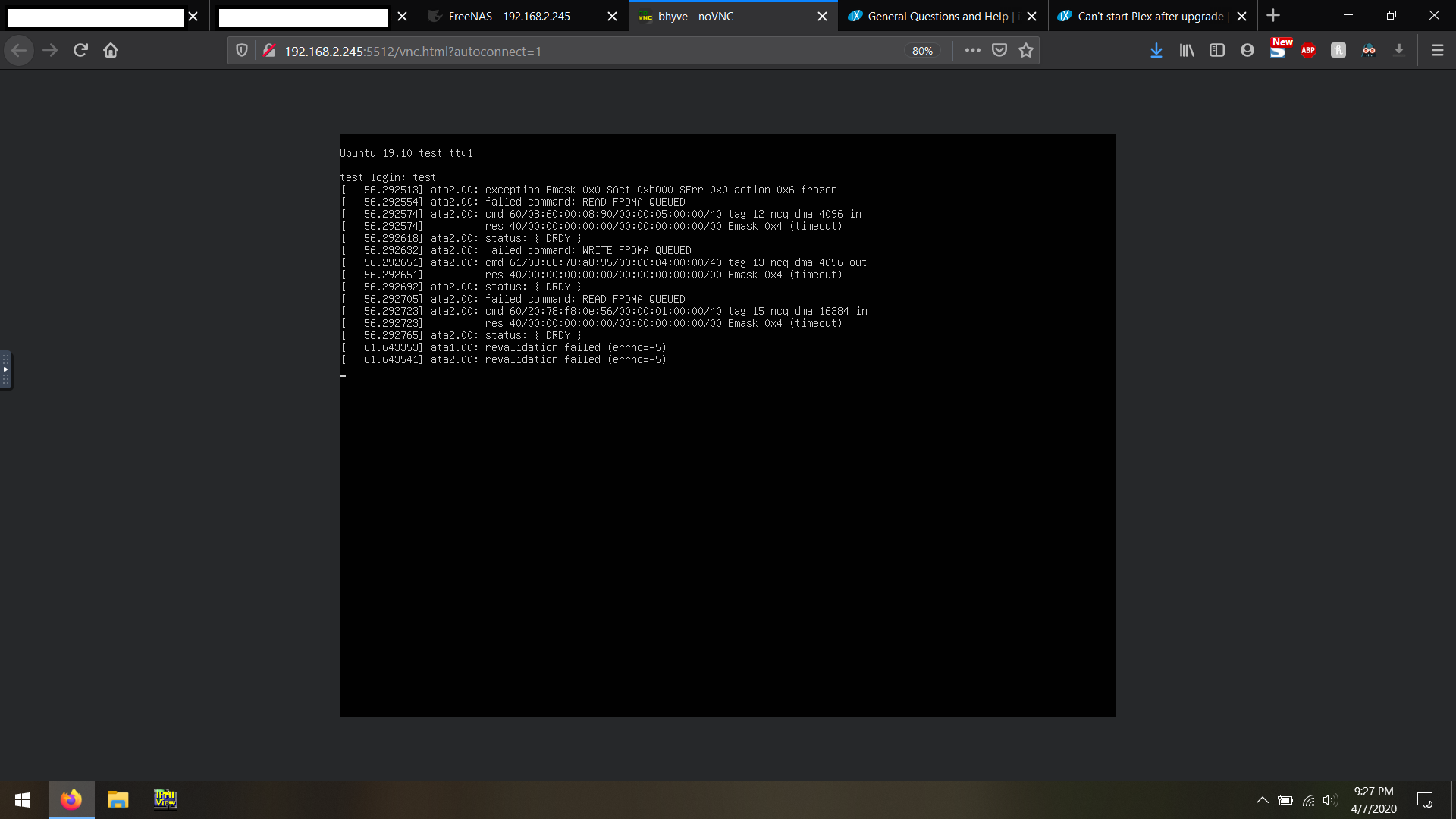

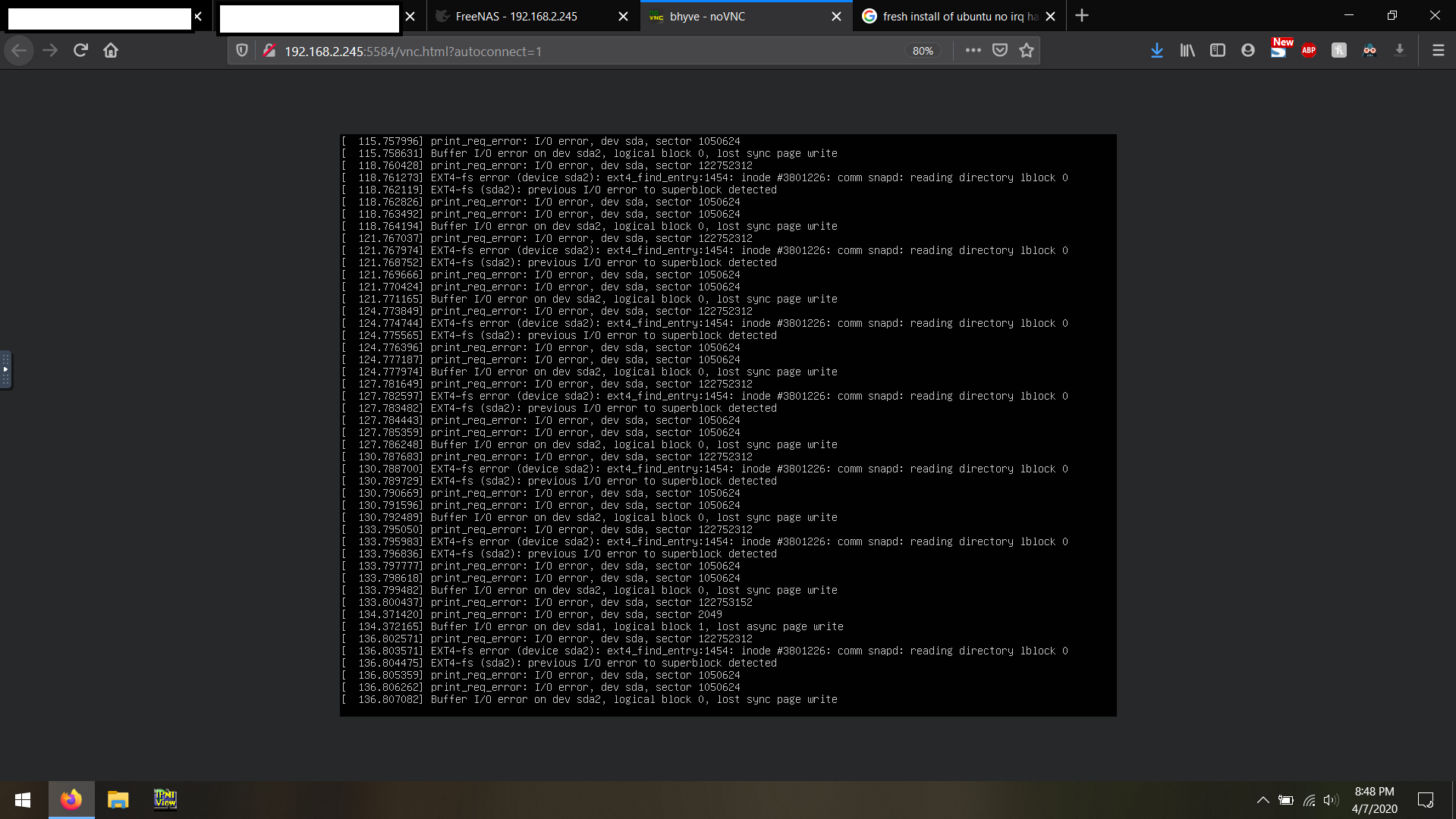

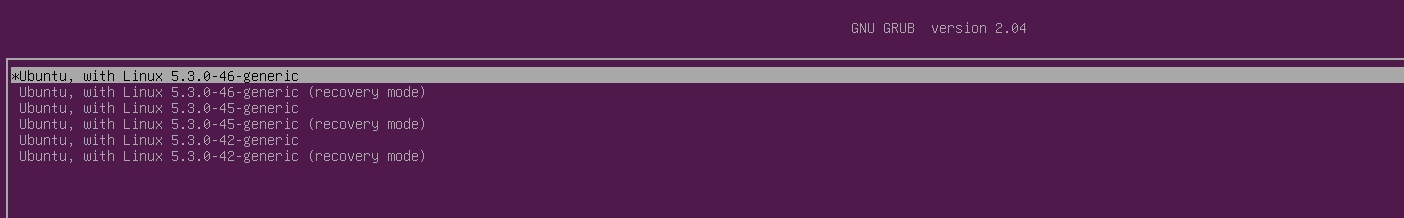

Managed to grab some screenshots from my FreeNAS-based Ubuntu VMs.

This is what all of my Ubuntu VMs do, ~30 seconds after powering-up (some screencaps I grabbed immediately, some I grabbed after some time, just in case the difference in time/messages helps)

Just to be clear, this is on the same dataset that my Windows 10 test VM is on, and that my CentOS VMs are on. All of those are working fine with no hiccups.

EDIT: Shortly before these errors (from the attached screencaps) are seen, around half the time the VM will boot to the desktop, at which point everything is frozen and the mouse pointer does not respond.


Basil Hendroff

Wizard

- Joined

- Jan 4, 2014

- Messages

- 1,644

What changed for you overnight?

Stage5-F100

Dabbler

- Joined

- Feb 29, 2020

- Messages

- 26

I updated the Ubuntu VMs last night (every Monday/Tuesday, depending on when I get to it) before bed. Everything seemed fine, on a quick glance at least. When I woke up, I went to check a few things and noticed everything was just... gone.

~ ~ ~ ~ ~ ~

Some more background:

In my first round of troubleshooting, I noticed I had no cursor control in any noVNC session I started (where "any" just meant all my Ubuntu-based VMs, a pure coincidence until I created some CentOS and Windows VMs to check), no matter which browser or system I used, and even across reboots. Then I also noticed that I had no way of interacting with the VMs at all, and if I let them sit for ~30 seconds, they would all crash out and show the same errors as in my and OP's screenshots.

Also this week, I went from 11.3-U1 to 11.3-U2. The FreeNAS box rebooted a couple of times during the update (judging from the POST beeps), which I didn't expect, but 5 minutes later the update was done. (I was expecting just a single reboot.)

Some extra things worth noting, in case they help with troubleshooting the errors that OP and I are seeing:

- This is a relatively clean installation of FreeNAS. Only a month old, one zpool of 5 drives in a RAIDz2, and 6TB of 25TB used. Boot drive is a 970 Pro 256GB. The machine is a bog-standard Z800 with 2x X5650 and 24GB ECC.

- Only 7 VMs ever used, 5 of which are Ubuntu (MATE and server mostly), one Windows 10, and one CentOS

- No jails. No custom config. Just a stock install of 11.3-U1, with an encrypted pool set up, a few datasets made, a few SMB shares made, and the above VMs spun up.

- Machine is on a robust live UPS. BIOS reports good vitals. Hardware check good. I don't think it's a power issue.

- Just did a full pool scrub (took most of the day!) over the weekend, no errors on any of the drives

- EDIT: Live-boots of Ubuntu install media (18.04, 19.10, 20.04-beta) all work fine. It's when I reboot the VM at the post-install prompt that everything immediately crashes and burns

- EDIT: Just to emphasize, Windows 10 and CentOS still work beautifully. All Ubuntu versions and their derivatives don't.


My FreeNAS box also rebooted twice which kinda surprised me as well, but everything seems to be fine on the FreeNAS side.

1. My installation is about a year old now, along with both pools of drives (In signature.) My boot drive is a little sketchy but has been running strong. I don't see any errors under it either.

2. I only have 4 VMs that are all running Ubuntu Server 18.04.3 and 2 jails still running on 11.2.

3. I'm on 11.3 U2, which I updated to from 11.2 U8 AFTER this issue started on my Plex VM and temporary test installs. I've got a few SMB share datasets as well.

4. I'm also on a UPS and all of my hardware also seems to pass.

5. I ran a scrub yesterday after all of these issues started and I have no errors on any drives.

6. Any new installation of Ubuntu Server immediately has issues AFTER rebooting from the installer.

7. Ditto. Haven't tried Windows or CentOS.

I also notice that the server is now using A LOT more RAM than it has in the past.

- Joined

- Nov 25, 2013

- Messages

- 7,776

Does this happen with VirtIO disks as well as AHCI?

tr0ubl3

Cadet

- Joined

- Jul 7, 2017

- Messages

- 2

I had the same issue with 2 Ubuntu 18.04 LTS VMs and an Ubuntu desktop VM.

The issue happened after the upgrade to 11.3 and after I upgraded the servers to GNU/Linux 4.15.0-96-generic x86_64.

I managed to get the servers back up by using an older image. They are now running GNU/Linux 4.15.0-91-generic x86_64.

The zpool where they are running doesn't have any issues; I think it's more of a bhyve problem.

All VMs were using AHCI disks. Don't know if VirtIO is affected.


- Joined

- Nov 25, 2013

- Messages

- 7,776

Code:

root@truecommand:~# uname -a
Linux truecommand 4.15.0-96-generic #97-Ubuntu SMP Wed Apr 1 03:25:46 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

Absolutely no problem here - all disks VirtIO.

If I am not mistaken, you can change the setting without a reinstall. Ubuntu uses UUIDs in /etc/fstab, so the disks should be found regardless of device naming. Why would you use AHCI, anyway?

Kind regards,

Patrick
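Before switching the disk type, it may be worth confirming the UUID point inside the guest. The following is a minimal sketch, not something posted in the thread: the function name `fstab_device_names` is made up for illustration, and it assumes the usual Linux naming where AHCI disks show up as /dev/sdX and VirtIO disks as /dev/vdX. It prints any fstab entries that mount by kernel device name, which are the ones that would stop matching after the switch.

```shell
#!/bin/sh
# Hypothetical helper (not from the thread): print fstab entries that mount
# by kernel device name (/dev/sdX or /dev/vdX). Those entries would no longer
# match if the disk type is switched between AHCI and VirtIO. Entries that
# use UUID= or LABEL= are not printed, and commented-out lines are ignored.
# Argument: path to an fstab-format file (defaults to /etc/fstab).
fstab_device_names() {
    grep -E '^[[:space:]]*/dev/(sd|vd)[a-z]+[0-9]*[[:space:]]' "${1:-/etc/fstab}"
}

# Usage inside the guest (grep exits non-zero when nothing matches):
#   fstab_device_names /etc/fstab || echo "no device-name entries found"
```

If this prints nothing, fstab should not care whether the disk is presented as AHCI or VirtIO. It only looks at fstab, so other places that might reference a device name are not covered.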

Stage5-F100

Dabbler

- Joined

- Feb 29, 2020

- Messages

- 26

To make a long story short, because it's the default setting and I've no idea *why* to select the other option.

Googling (...DuckDuckGoing?) this seems to tell me that AHCI emulates a traditional disk interface, and VirtIO... doesn't work with a lot of things, but emulates a... network? Lets the kernel talk directly to storage? IDK, I'll have to find somewhere where this is broken-down Barney-style.

TLDR: all my VMs are AHCI because it's the default and I trust iX

~ ~ ~ ~ ~ ~ ~ ~ ~ ~ ~

EDIT: work is a bit crazy today, but when I get a break I'll make a new Ubuntu VM on AHCI, confirm it's completely broken, switch to VirtIO, and see what happens. I will also create a new VM with VirtIO from the start and see how that responds.

Will this also fix my issue of having no control over noVNC? Doubtful, but will report back on this too. :)


tr0ubl3

Cadet

- Joined

- Jul 7, 2017

- Messages

- 2

Hi Patrick, thanks for your reply!

I installed those VMs a long time ago and I think I went with what was default in the drive selector so that's why it's AHCI.

In my investigation of the issue I did try to change the drive type from AHCI to VirtIO for a desktop, but I was just pushing buttons to see if I got any response from the VM, and obviously that's not how it works.

Initially I thought something was wrong with the zvol/VM config and I tried a reinstall. I had backups of what was running in that VM, so it wasn't a big deal. However, I noticed the freshly installed VM was doing the same thing, so I then tried to investigate the root cause. On the second machine I had Grafana stuff that wasn't backed up, and although it's nothing special I didn't want to lose everything if I could help it, so I dug deeper and found out that when booting an older version there were no issues. This is from the desktop VM and none work correctly. They all work in recovery mode and I was even able to start networking from there and ssh some files out.

The drive is OK; it's just the new kernel that has issues with the storage for some reason.


- Joined

- Nov 25, 2013

- Messages

- 7,776

OK ...

With AHCI the hypervisor painstakingly emulates a hardware device that is not there while the guest OS drives a HW interface that is not there.

So instead of saying "please give me block N from disk X", the guest OS has to fill in HW registers (that are not there) with e.g. a block address, trigger an interrupt, all this stuff that OSes do. The hypervisor then takes this data, reconstructs what the guest OS really wanted (read block N) and carries out the request. The hypervisor then triggers a request completion interrupt to wake up the part of the guest OS kernel that is waiting on the I/O ...

It's like you and me talking but instead of a direct connection in English you give your speech to an interpreter who translates it into Mandarin, gives it to another interpreter who translates it back and finally tells me.

VirtIO is an API specifically created for hypervisor environments where the guest OS can simply say "please give me block N". Similar for networking and a couple of other devices.

Long story short: if you run VMs you almost always want "paravirtualization" if available. All current open source guest OSes support it, so there is really no reason not to use it. Try changing the setting and tell me how that goes ...

HTH,

Patrick
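To see which of the two paths a given Linux guest is actually using, the block device names are a quick hint. This is only a rough sketch under stated assumptions: the function name `disk_interface` is made up for illustration, and it relies on the common Linux convention that VirtIO block devices are named vdX while AHCI-emulated disks (along with other SCSI-style devices such as USB storage) are named sdX.

```shell
#!/bin/sh
# Hypothetical helper (not from the thread): guess the disk interface from a
# Linux block device name. The mapping follows the usual naming convention
# and is a hint, not a guarantee.
disk_interface() {
    case "$1" in
        vd*) echo "virtio" ;;                      # paravirtualized block device
        sd*) echo "emulated (AHCI/SCSI-style)" ;;  # emulated hardware interface
        *)   echo "unknown" ;;
    esac
}

# Usage inside the guest: classify every whole disk
#   for d in $(lsblk -dn -o NAME); do echo "$d: $(disk_interface "$d")"; done
```

A guest that still reports sda after the setting was changed is most likely still on the emulated AHCI path.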

