pixelwave


This is a follow-up to my NVMe TrueNAS Scale storage build. If you want to read about my journey so far, start here:

AMD Ryzen with ECC and 6x M.2 NVMe build (www.truenas.com)

———

I am posting my journey and learnings about 40GbE QSFP in this separate thread because they might be of general use. Disclaimer: I am no expert and I learn as I go, so take everything with a grain of salt. Input welcome!

In general I chose to go with Mellanox ConnectX-3 PCIe cards because they are dirt cheap on eBay and other platforms (I purchased two for 35€ each). For homelab / server use they offer amazing value for money.

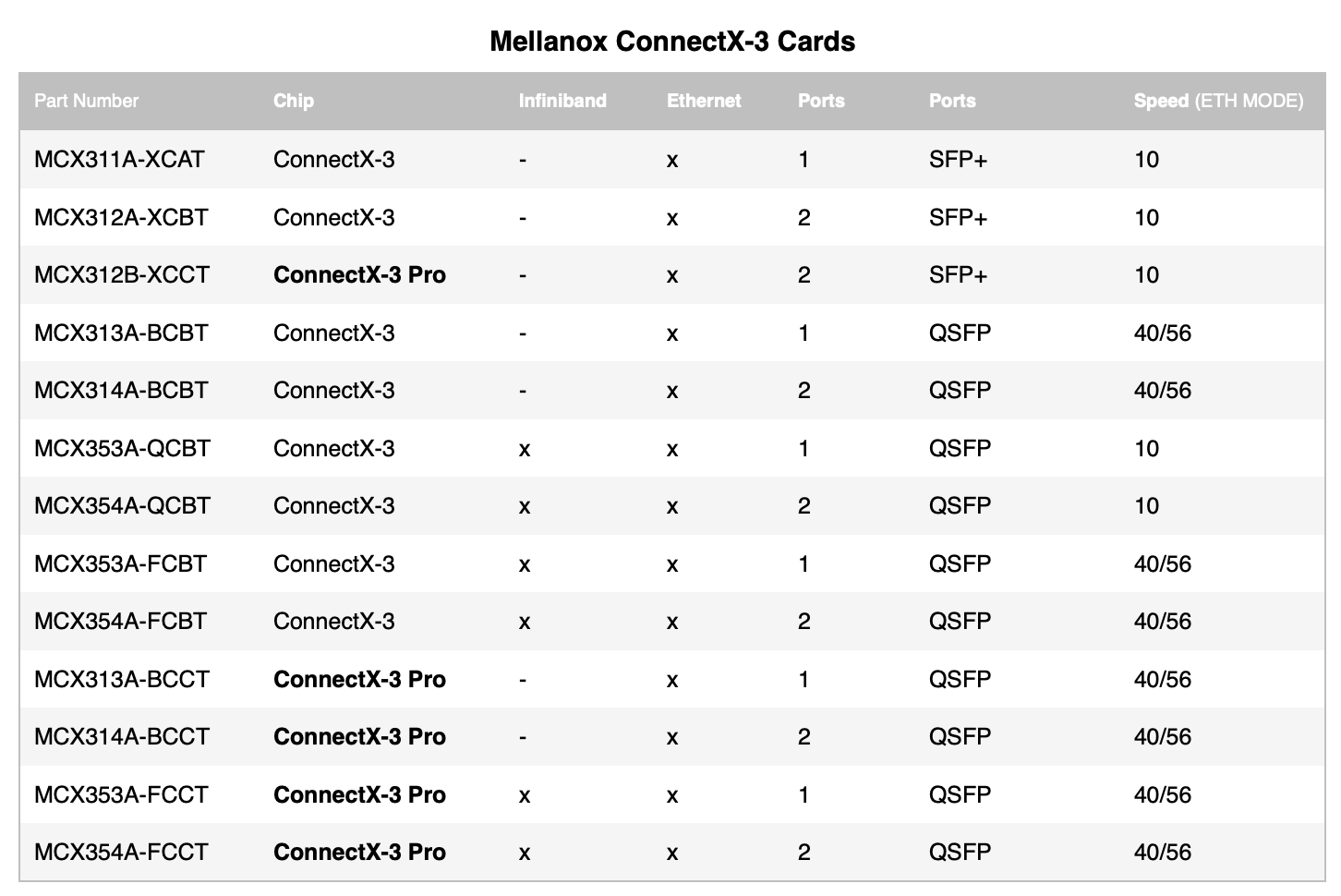

There are a ton of different variants out there (not counting individual OEM variants from HP, Lenovo, ...), so I browsed through the official tech specs and tried to assemble an overview for myself:

According to most guides and forums, compatibility is best when these cards are used in ETH (Ethernet) mode instead of IB (InfiniBand) mode. So this is what I am going to try first.

Another important finding is that it does not seem to matter which card you get, because all have pretty much the same hardware and there is a way to flash each card with the latest 40GbE firmware, even cards from different vendors (IBM, HP, ...). Only the "Pro" cards have some special hardware that offers a few more features (RDMA/RoCE v2), and of course you cannot flash a physical SFP+ port into a QSFP port. ;) Keeping that in mind, I purchased two "MCX354A-FCBT" cards on eBay.

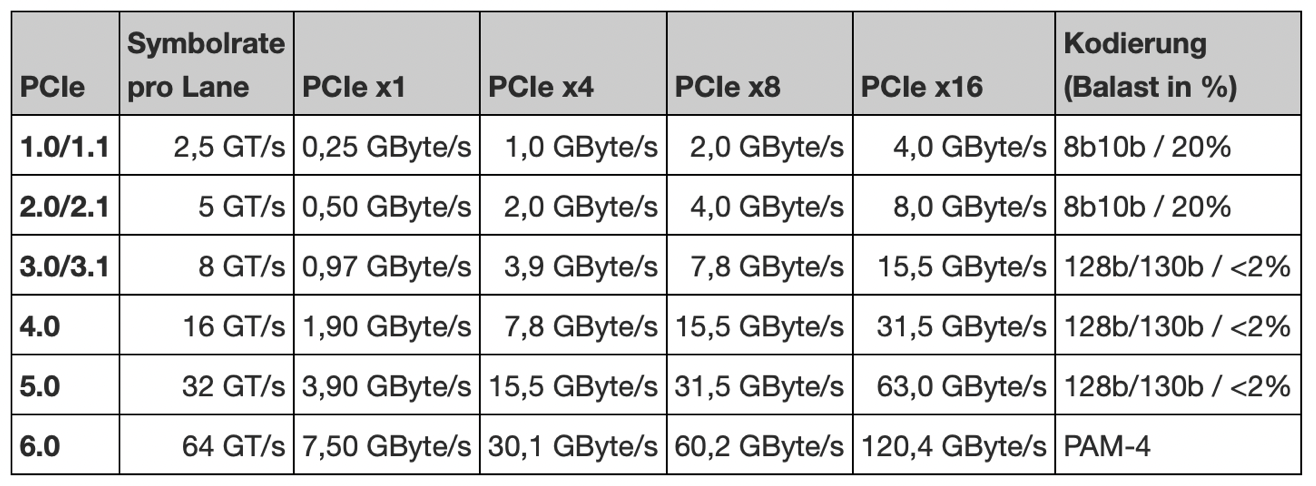

All ConnectX-3 cards support auto-negotiation and are backwards compatible. For "full speed" they are advertised as PCIe 3.0 x8. They also work in PCIe 2.0 slots and with fewer lanes (x1, x2, x4), but they require at least a mechanical x8 slot. In my case I use one card in my TrueNAS Scale machine in a PCIe 3.0 x16 (mechanical) / x4 (electrical) slot and one card in my Windows machine, also in an electrically x4 slot. Quick recap about (maximum) PCIe speeds:
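As a rough recap of what those slot widths mean for a 40GbE link, here is a minimal Python sketch that computes the theoretical per-direction PCIe bandwidth from the per-lane transfer rate and the line encoding. The figures used (5 GT/s with 8b/10b for PCIe 2.0, 8 GT/s with 128b/130b for PCIe 3.0) are the published raw link rates. The function and table names are made up for illustration, and real-world throughput will be lower because of protocol overhead.

```python
# Theoretical PCIe bandwidth per direction, before protocol overhead.
# Per generation: (transfer rate in GT/s, payload bits, total bits on the wire).
PCIE_GENERATIONS = {
    "2.0": (5.0, 8, 10),      # 8b/10b encoding
    "3.0": (8.0, 128, 130),   # 128b/130b encoding
}


def pcie_gbit_per_s(generation: str, lanes: int) -> float:
    """Usable line rate in Gbit/s for one direction of a PCIe link."""
    rate_gt, payload_bits, total_bits = PCIE_GENERATIONS[generation]
    return rate_gt * payload_bits / total_bits * lanes


def can_saturate(generation: str, lanes: int, link_gbit: float = 40.0) -> bool:
    """True if the slot's theoretical bandwidth covers the network link speed."""
    return pcie_gbit_per_s(generation, lanes) >= link_gbit


if __name__ == "__main__":
    for gen in ("2.0", "3.0"):
        for lanes in (1, 2, 4, 8):
            gbit = pcie_gbit_per_s(gen, lanes)
            print(f"PCIe {gen} x{lanes}: {gbit:6.2f} Gbit/s "
                  f"({gbit / 8:5.2f} GB/s), enough for 40GbE: {can_saturate(gen, lanes)}")
```

By this estimate only a PCIe 3.0 x8 link has headroom for a full 40GbE port. An electrically x4 PCIe 3.0 slot, as used in both machines here, tops out at roughly 31.5 Gbit/s in theory, so the slot rather than the network link would be the ceiling.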

I flashed both cards in my Windows 11 Pro machine and afterwards put one in the TrueNAS system. The process is quite straightforward:

1. Download MFT (Mellanox Firmware Tools) 4.22.0 for your system (Windows x64 in my case)

NVIDIA Firmware Tools (MFT), network.nvidia.com

2. Download newest Firmware (MCX354A-FCBT) 2.42.5000

Firmware for ConnectX®-3 IB/VPI, network.nvidia.com

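3. Run CMD as administrator. The following commands are useful:

# show the list of installed Mellanox devices

mst status

# show detailed firmware info

mlxfwmanager --query

# show the current card configuration
# (use the device name exactly as listed by mst status; on Windows it is usually just mt4099_pci_cr0, without the /dev/mst/ prefix)

mlxconfig -d /dev/mst/mt4099_pci_cr0 query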

4. Flash the firmware

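# flash the firmware (replace firmwarefile.bin with the downloaded image; -allow_psid_change is what permits flashing firmware with a different PSID, e.g. onto an OEM card)

flint -d mt4099_pci_cr0 -i "firmwarefile.bin" -allow_psid_change burn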

5. Change port mode from IB to ETH

# for instance, switch both ports from VPI/Auto to Ethernet only (the new setting takes effect after a reboot):

mlxconfig -d /dev/mst/mt4099_pci_cr0 set LINK_TYPE_P1=2 LINK_TYPE_P2=2
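To make the LINK_TYPE values less cryptic, here is a small Python sketch that builds the mlxconfig arguments from a readable mode name. The mapping 1 = IB, 2 = ETH, 3 = VPI (auto-sensing) is how mlxconfig labels these values for ConnectX-3 as far as I know, so treat it as an assumption and compare it with your own query output. The helper name is purely illustrative, and the script only prints the command instead of running it.

```python
import shlex

# LINK_TYPE values as mlxconfig labels them for ConnectX-3 (assumed mapping).
LINK_TYPES = {"IB": 1, "ETH": 2, "VPI": 3}


def build_set_link_type(device: str, port_modes: dict[int, str]) -> list[str]:
    """Return the mlxconfig argument list that sets LINK_TYPE_P<n> for each port."""
    args = ["mlxconfig", "-d", device, "set"]
    for port in sorted(port_modes):
        mode = port_modes[port].upper()
        if mode not in LINK_TYPES:
            raise ValueError(f"unknown link type {port_modes[port]!r}")
        args.append(f"LINK_TYPE_P{port}={LINK_TYPES[mode]}")
    return args


if __name__ == "__main__":
    # Device name as shown by `mst status`; adjust it to your system.
    cmd = build_set_link_type("/dev/mst/mt4099_pci_cr0", {1: "eth", 2: "eth"})
    print(shlex.join(cmd))
```

For the two-port Ethernet example this should print the same mlxconfig command as shown above. Passing "vpi" or "ib" for a port swaps in the corresponding value.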

If you use Windows 11, also download the WinOF 5.50.54000 driver package (the version for Windows Server 2019).

And then it should work!

Next I will post updates on speed, benchmarks and potential tuning!
