BloodyIron (Contributor, joined Feb 28, 2013, 133 messages)

Oh, also, I just noticed this is in the SCALE section, not CORE... I'm aiming to do mine with CORE.

> @Glitch01 are your ConnectX-4 cards in IB or ETH mode? I for one would like to know a lot more about your configuration and set-up specific to the IB cards please! Also, why does your iperf only go up to 24 Gbps? That seems low... (from my armchair, since I'm starting the IB adventure myself hehe).

@Glitch01 is using ConnectX-3 cards (QSFP) in ETH mode. I am using ConnectX-4 cards (SFP28/SFP+), also in ETH mode.

> I am wondering how you achieve 4000 MB/s read and write to the TrueNAS system? Especially since they are mostly HDDs?

I have TrueNAS on ZFS, which buffers data in RAM before the actual reads/writes to the HDDs. Since the NAS has 32 GB of RAM, it takes incoming data into RAM as fast as possible and only then writes it out to the HDDs. Similarly, I run a RAM-caching app on Windows (4 GB allocated) for reads/writes to the local drives, which works much like ZFS. MTU for the testing was set to 9000 over a direct connection from the client to TrueNAS, but I'm finding that Windows doesn't like 9000 on this card: it frequently resets the connection when running through a switch. I have MTU 9k on a 10 GbE RJ45 connection that works fine on Windows, but it just seems to hate it on the Mellanox. 9k seems to be fine from TrueNAS to the switch, which is also set to 9k.
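On the MTU question, a rough sketch of what jumbo frames buy in theory: the fraction of the line rate left for TCP payload at MTU 1500 versus 9000. It assumes IPv4 and TCP headers without options (40 bytes) and 38 bytes of per-frame Ethernet overhead on the wire (header, FCS, preamble and inter-frame gap); TCP timestamps or VLAN tags would lower both figures slightly. The function names are illustrative, not from any tool.

```python
# Per-frame overhead on the wire, in bytes (assumption: untagged Ethernet).
ETH_HEADER = 14       # destination MAC, source MAC, EtherType
ETH_FCS = 4           # frame check sequence
PREAMBLE_SFD = 8      # preamble + start-of-frame delimiter
INTER_FRAME_GAP = 12  # minimum idle time between frames, expressed in bytes
WIRE_OVERHEAD = ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTER_FRAME_GAP  # 38

IP_TCP_HEADERS = 40   # IPv4 (20) + TCP (20), assuming no options


def tcp_goodput_fraction(mtu: int) -> float:
    """Fraction of the raw link rate that carries TCP payload at a given MTU."""
    payload = mtu - IP_TCP_HEADERS   # maximum segment size
    on_wire = mtu + WIRE_OVERHEAD    # bytes the frame occupies on the link
    return payload / on_wire


def max_goodput_gbps(link_gbps: float, mtu: int) -> float:
    """Upper bound on TCP payload throughput, ignoring ACKs, loss and CPU limits."""
    return link_gbps * tcp_goodput_fraction(mtu)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_goodput_fraction(mtu):.1%} of line rate, "
              f"~{max_goodput_gbps(10, mtu):.2f} Gbps on 10 GbE")
```

On paper, 9000 raises the ceiling by only about 4% (roughly 9.49 vs 9.91 Gbps on 10 GbE); the larger practical benefit is roughly six times fewer frames per second to process. So if 9000 causes resets on the Mellanox under Windows, leaving that link at 1500 should cost comparatively little headline bandwidth.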

I'm currently having issues with my ConnectX-4 cards; oddly, they don't reach full 10GbE download/upload speed:

Linked thread on www.truenas.com: "Upgrade to 25GbE (Mellanox ConnectX-4)"

> I am in the market to upgrade my network infrastructure to 25GbE. Therefore I would like to purchase three PCIe cards (Workstation, Server, Backup Node) and after reading the STH article (https://www.servethehome.com/mellanox-connectx-4-lx-mini-review-ubiquitous-25gbe/) I guess price-wise the...

> @Glitch01 are your ConnectX-4 cards in IB or ETH mode? I for one would like to know a lot more about your configuration and set-up specific to the IB cards please! Also, why does your iperf only go up to 24 Gbps? That seems low... (from my armchair, since I'm starting the IB adventure myself hehe).

Ran some testing on 10 GbE from the Windows box to the router and from the NAS to the router. I'm thinking the throughput limitation is related to Windows: Windows-to-router reaches almost half the bitrate of TrueNAS-to-router.
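To compare runs like these without reading screenshots, iperf3 can print its report as JSON with `-J`. A minimal sketch, assuming a TCP test, where the summary sits under `end.sum_received`; the server name, stream count and helper names are placeholders, not taken from the thread, and the server side must already be running `iperf3 -s`.

```python
import json
import subprocess


def run_iperf3(server: str, seconds: int = 10, streams: int = 1, reverse: bool = False) -> str:
    """Run an iperf3 TCP client against `server` and return the JSON report text.

    Requires the iperf3 binary on PATH; `server` is a placeholder hostname.
    """
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: the server sends, the client receives
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def received_gbps(report: str) -> float:
    """Receiver-side throughput of a TCP test, in Gbit/s."""
    data = json.loads(report)
    return data["end"]["sum_received"]["bits_per_second"] / 1e9
```

Comparing `received_gbps(run_iperf3(host, streams=1))` with `streams=4` against the same host can hint at whether a single TCP stream (often bound to one CPU core) is the limit rather than the NIC, and `reverse=True` measures the opposite direction without swapping client and server.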

> Ran some testing on 10 GbE from Windows box to router and NAS to router. I'm thinking the throughput limitation is related to Windows. Windows to router is almost half the bitrate of TrueNAS to the router.
>
> From TrueNAS to router, iperf3: [attachment 68230]
>
> From Windows to router, iperf3: [attachment 68231]

I just ran into some super weird behaviour ... testing Win11 and Mac machines connected to my 10GbE switch and accessing the TrueNAS machine:

> Mac it is the Write to TrueNAS Server

Is macOS issuing sync writes? It's known to do that over SMB, unlike Windows, which issues basically none.
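To show why sync writes matter, a local sketch (not SMB or ZFS itself): one mode lets the OS batch writes, the other forces every chunk to stable storage with `os.fsync` before issuing the next, which is roughly what a client demanding synchronous writes imposes on the server. On ZFS, sync writes must additionally be committed to the ZIL before they are acknowledged. The file and chunk sizes are arbitrary choices, and `timed_write` is a hypothetical helper.

```python
import os
import tempfile
import time


def timed_write(path: str, total_bytes: int, chunk_size: int, sync_each_chunk: bool) -> tuple[int, float]:
    """Write `total_bytes` to `path`; return (bytes written, elapsed seconds).

    With sync_each_chunk=True every chunk is flushed to stable storage before
    the next one is issued, mimicking a client that requests synchronous writes.
    """
    chunk = b"\0" * chunk_size
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_size, total_bytes - written)
            f.write(chunk[:n])
            written += n
            if sync_each_chunk:
                f.flush()             # hand Python's buffer to the OS
                os.fsync(f.fileno())  # block until the OS reports it is on disk
        f.flush()
        os.fsync(f.fileno())          # one final flush so both modes end durable
    return written, time.perf_counter() - start


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "test.bin")
        for sync in (False, True):
            n, secs = timed_write(target, 64 * 1024 * 1024, 128 * 1024, sync)
            print(f"sync_each_chunk={sync}: {n / secs / 1e6:.0f} MB/s")
```

On most storage the per-chunk fsync run is noticeably slower; how much depends heavily on the device, so treat the two numbers as a relative comparison only.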

Not sure if this applies to your situation. Depending on how your proxmox is configured and how many devices are sharing the connection may also have an impact.I just ran into a super weird behaviour ... testing win11 and mac machines connected to my 10GbE switch accessing the TrueNAS machine:

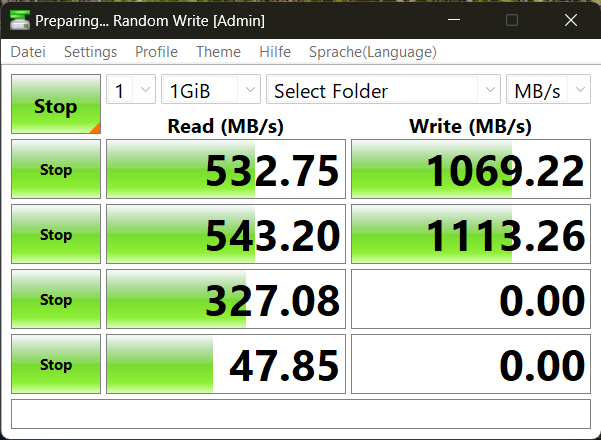

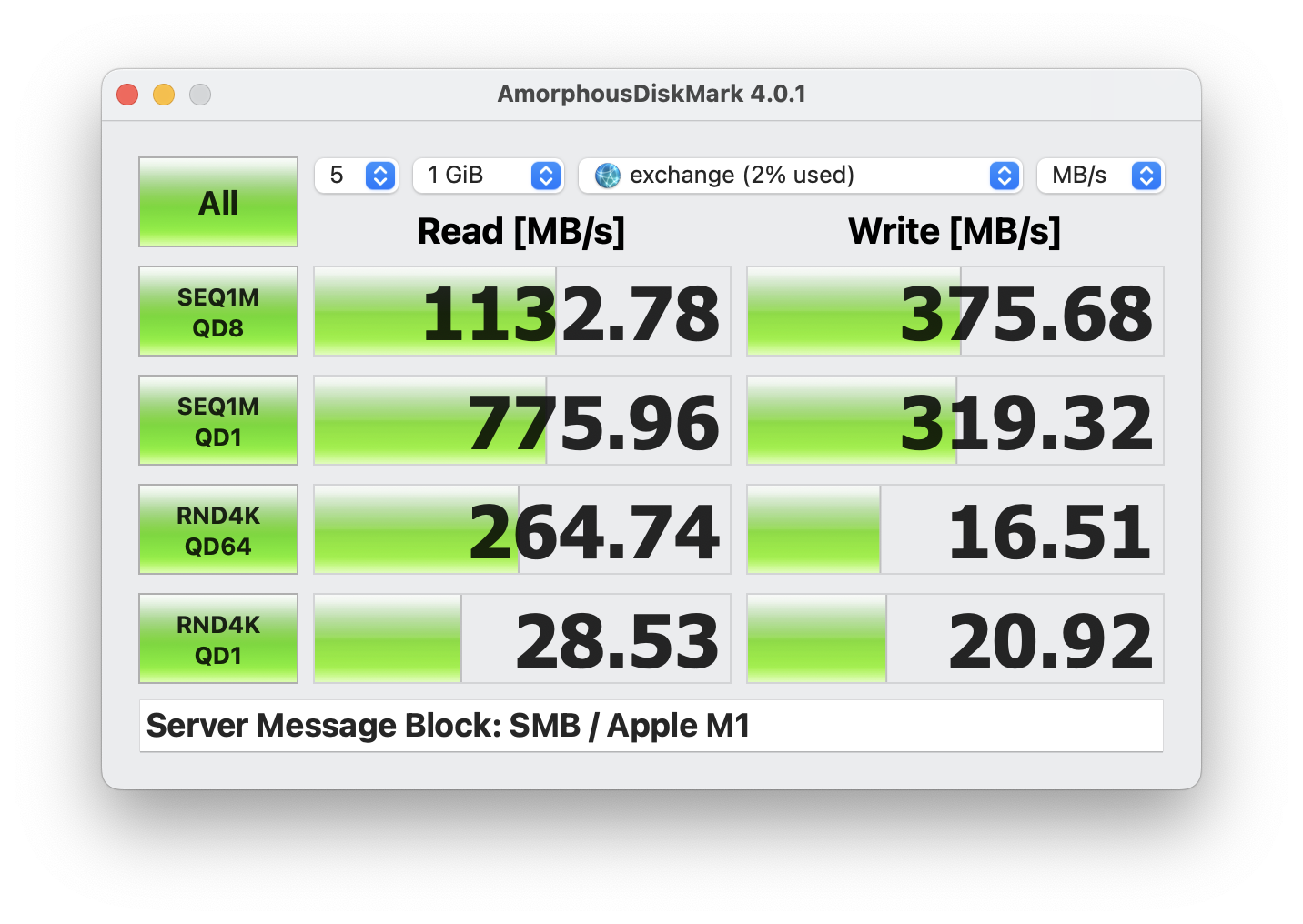

In Windows the Read from TrueNAS is limited and for Mac it is the Write to TrueNAS Server. Same SMB target folder on NAS (NVMe drive), connected to same router?!?

Windows to Proxmox

Mac to Proxmox

> Not sure if this applies to your situation. Depending on how your proxmox is configured and how many devices are sharing the connection may also have an impact.

I am on 13.4, the newest update.

SMB with Mac

Between OS X 10.11.5 and macOS 10.13.3, Apple enforced packet signing when using SMB. This security feature was designed to prevent "man in the middle" attacks, but it turned out to have major performance implications for 10Gb SMB connections.

The policy was lifted in 10.13.4, and should not apply to subsequent releases.

More information about the packet signing policy can be found in Apple's knowledge base: https://support.apple.com/en-us/HT205926
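A small helper capturing the version window described above, treating both ends as inclusive (an assumption about how "between" is meant). If the "13.4" mentioned earlier in the thread refers to macOS 13.4, it falls outside the window. Function and constant names are hypothetical, and this only reflects the default policy: a connection can still end up signed if the server requires signing.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '10.13.4' into (10, 13, 4), padding short versions like '13.4' with zeros."""
    parts = tuple(int(p) for p in version.split("."))
    return parts + (0,) * (3 - len(parts))


# Window described above; both ends treated as inclusive (assumption).
FIRST_ENFORCED = (10, 11, 5)  # OS X 10.11.5
LAST_ENFORCED = (10, 13, 3)   # macOS 10.13.3; the policy was lifted in 10.13.4


def smb_signing_enforced(version: str) -> bool:
    """True if this macOS version falls inside the enforced-signing window."""
    return FIRST_ENFORCED <= parse_version(version) <= LAST_ENFORCED


if __name__ == "__main__":
    for v in ("10.11.4", "10.12.6", "10.13.4", "13.4"):
        print(v, smb_signing_enforced(v))
```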

> Additional testing from Linux on my main PC. The Mellanox adapter reached 36 Gbps in Linux, while 10 GbE reached 5.7 Gbps. The 10 GbE NIC was originally on a PCIe 4.0 x4 bus, but I moved it to a PCIe 3.0 x8 bus with no noticeable difference. Both 40 GbE and 10 GbE performed better under Linux, though I'm still scratching my head about the 10 GbE bottleneck. I guess there's something in the motherboard BIOS I'm missing that is preventing the NIC from reaching max performance.
>
> From TrueNAS to Linux, iperf3 over Mellanox to Mellanox: [attachment 68266]
>
> From TrueNAS to Linux, iperf3 over 10 GbE to 10 GbE: [attachment 68267]

Are TrueNAS and Linux directly connected or via a switch? If via a switch, which one?
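On the slot move in the Linux test: a back-of-the-envelope sketch of raw PCIe bandwidth per direction, using each generation's transfer rate and 128b/130b encoding and ignoring packet (TLP/DLLP) overhead, so real throughput is lower. It suggests why moving the card made no difference: a Gen4 x4 slot and a Gen3 x8 slot offer about the same bandwidth, both far above 10 Gbps. It assumes the card actually links at the slot's width and generation (a Gen3 card in a Gen4 slot runs at Gen3), which the LnkSta line of `lspci -vv` shows on Linux. `pcie_gbps` is an illustrative name.

```python
# Raw transfer rate per lane (GT/s) and line-code efficiency (128b/130b for Gen3+).
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def pcie_gbps(generation: int, lanes: int) -> float:
    """Approximate usable bit rate per direction, before protocol overhead."""
    gt_per_s, encoding = PCIE_GENERATIONS[generation]
    return gt_per_s * encoding * lanes


if __name__ == "__main__":
    for gen, lanes in ((4, 4), (3, 8), (3, 4)):
        print(f"PCIe {gen}.0 x{lanes}: ~{pcie_gbps(gen, lanes):.1f} Gbps per direction")
```

Even the narrowest case listed (Gen3 x4, about 31.5 Gbps) leaves ample headroom for a 10 GbE port, which points away from the slot as the 10 GbE bottleneck.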

> TrueNAS and Linux are directly connected or via a Switch? If so what Switch...?

The 10 GbE connection goes through a Zyxel semi-managed switch. Both the TrueNAS box and the client machine connect through 10GBase-T SFP+ to RJ45 connections. A direct connection from the client machine to TrueNAS fared a bit better for 10 GbE: Windows gets about 6 Gbps over the direct connection in iperf, so I'm guessing Linux would get about 7-8 Gbps. But I digress: the Mellanox connection is working well over a direct connection right now and well exceeds the NVMe cache write speed to the pool (~2.8 GB/s). I'm currently looking into a custom-built switch that will connect the NAS over 40 GbE to the rest of the 10 GbE clients. This is mostly future-proofing, as the current NAS hardware can host up to 26 SATA drives. I'll probably cap the system at 14x 14 TB HDDs in a single pool as its end-of-life configuration.
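The comparison with the pool speed is easier with everything in bytes. A small sketch converting the link rates from this thread to GB/s (decimal units, 8 bits per byte, protocol overhead ignored) and estimating how long a RAM write buffer could absorb the difference when the network outpaces the pool. The 4 GB buffer is a hypothetical figure for illustration, not a ZFS or TrueNAS default, and the helper names are illustrative.

```python
def gbps_to_gb_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (decimal units)."""
    return gbps / 8


def seconds_until_buffer_full(buffer_gb: float, ingress_gb_s: float, drain_gb_s: float) -> float:
    """How long a write buffer lasts when data arrives faster than it is flushed.

    Returns infinity when the pool keeps up, i.e. the buffer never fills.
    """
    surplus = ingress_gb_s - drain_gb_s
    return float("inf") if surplus <= 0 else buffer_gb / surplus


if __name__ == "__main__":
    pool_gb_s = 2.8    # pool write speed quoted in the post
    buffer_gb = 4.0    # hypothetical buffer size
    for label, gbps in (("40 GbE link at 36 Gbps", 36.0), ("10 GbE link", 10.0)):
        ingress = gbps_to_gb_per_s(gbps)
        secs = seconds_until_buffer_full(buffer_gb, ingress, pool_gb_s)
        print(f"{label}: {ingress:.2f} GB/s in, buffer full after {secs:.1f} s")
```

Under these assumptions a 10 GbE client (about 1.25 GB/s) never outruns a 2.8 GB/s pool, while a 36 Gbps stream (4.5 GB/s) would fill the assumed buffer in a couple of seconds and then settle toward the pool's rate.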

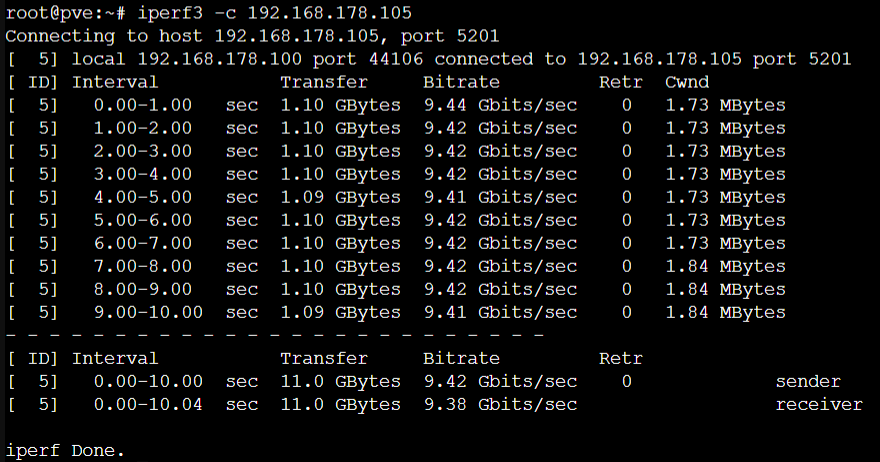

When I speed-test my two Proxmox systems against each other (via the switch) directly from the CLI, they show full speed; I tested both devices in server and client mode:

> Hi. Did you make any progress on this? We have two servers (1 CORE, 1 SCALE) with 10G and 40G NICs and gave up on the 40G connections, as the transfer speed from our clients (mostly Mac) to the TrueNAS servers is horrible (less than 200 MB/s). Read speeds are fine. At the same time, the 10G connections work as expected with almost full bandwidth.
>
> We were recently in contact with a server vendor about purchasing a prebuilt and configured TrueNAS system, and they were also telling us that 40G is broken in general on TrueNAS.

The direct 40G connection works fine, and the 10G connection works fine on its own on the same box. I'm only having an issue when bridging the two connections, and bridging requires a lot of CPU power to deal with the overhead. My plan is to just have the 40G on TrueNAS and a dedicated switch to handle the bridging for the rest of the network.
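To put "a lot of CPU power" into numbers: a software bridge handles every frame individually, so the useful figures are frames per second and the time budget per frame. A rough sketch, assuming full-size frames and 38 bytes of per-frame Ethernet overhead (header, FCS, preamble, inter-frame gap); traffic with smaller frames is harder still, and the per-frame budget assumes a single core forwarding everything. The function names are illustrative.

```python
WIRE_OVERHEAD = 38  # bytes per frame beyond the MTU: header 14, FCS 4, preamble 8, gap 12


def frames_per_second(link_gbps: float, mtu: int) -> float:
    """Maximum frames per second at line rate with full-size frames."""
    return link_gbps * 1e9 / ((mtu + WIRE_OVERHEAD) * 8)


def ns_per_frame(link_gbps: float, mtu: int) -> float:
    """Time budget per frame in nanoseconds if one core forwards every frame."""
    return 1e9 / frames_per_second(link_gbps, mtu)


if __name__ == "__main__":
    for gbps in (10, 40):
        for mtu in (1500, 9000):
            print(f"{gbps} Gbps, MTU {mtu}: {frames_per_second(gbps, mtu) / 1e6:.2f} M frames/s, "
                  f"{ns_per_frame(gbps, mtu):.0f} ns per frame")
```

Four times the link speed leaves a quarter of the per-frame budget (about 300 ns at 40 Gbps with MTU 1500), which would be consistent with a software bridge keeping up at 10 GbE but not at 40 GbE.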

One more thing I wanted to share. I decided to bridge 1 GbE, 2x 10 GbE, and the two Mellanox 40 GbE ports for testing. The 1 GbE port performed at 1 GbE speed, the 10 GbE ports at 10 GbE speed, and the 40 GbE ports only at 10 GbE speed. Not sure if there is a software cap in TrueNAS or something, but when the 40 GbE ports are bridged with the rest of the ports, they will not operate at 40 GbE speeds.