joebb1017

Hi,

I have some questions and am looking for advice on SSD pool performance and optimization.

The setup

I am running TrueNAS SCALE 22.02-RC.2 as a VM on VMware. Two LSI SAS2308 HBA cards are passed through to the VM, which has 48 GB of RAM and 4 vCPUs assigned. Everything works well except for read/write transfer speeds. The host has Intel X520 10GbE cards installed, and the VM uses a VMXNET3 adapter for networking.

The pools are as follows:

2 x RAIDZ2 vdevs in the main pool using Intel DC SSDs. Each vdev is 6 drives.

1 x mirror of 2 Samsung EVO consumer drives.

I have scrubs set up, and the pools have been running for almost 2 years now. Drive life is somewhere around 98%-100% across everything.

Everything networking-wise is 10GbE with Intel X520 cards, from server to client.

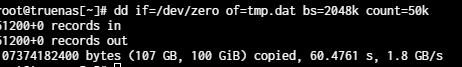

DD Performance results

SMB/IPerf Questions

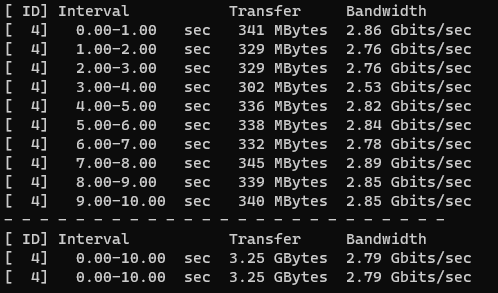

I have a Windows 11 client that only gets 300 MB/s read and write to the system. I get slightly better performance on Windows Server deployments, where I can get about 400-450 MB/s read/write. It seems something in Windows is causing this to run slower than expected.
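To put those numbers in context, here is a rough sketch that converts the SMB speeds above into a share of the 10GbE line rate. It assumes the figures are decimal megabytes per second and ignores protocol overhead, so treat the percentages as approximate.

```python
# Rough conversion of the SMB speeds quoted above into 10GbE link utilisation.
# Assumptions: speeds are decimal MB/s, and the link is a nominal 10 Gbit/s.
LINK_GBIT = 10.0


def mb_per_s_to_gbit(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


def link_utilisation(mb_per_s: float, link_gbit: float = LINK_GBIT) -> float:
    """Fraction of the nominal link rate used by a given transfer speed."""
    return mb_per_s_to_gbit(mb_per_s) / link_gbit


# Speeds taken from the post; the labels are only for display.
observed = {
    "Windows 11": 300,
    "Windows Server (low)": 400,
    "Windows Server (high)": 450,
}

for name, speed in observed.items():
    print(f"{name}: {speed} MB/s = {mb_per_s_to_gbit(speed):.1f} Gbit/s "
          f"({link_utilisation(speed):.0%} of 10GbE)")
```

In other words, the Windows 11 client is using roughly a quarter of the link, and the Windows Server clients a bit over a third.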

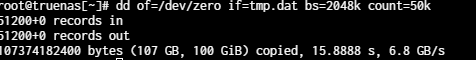

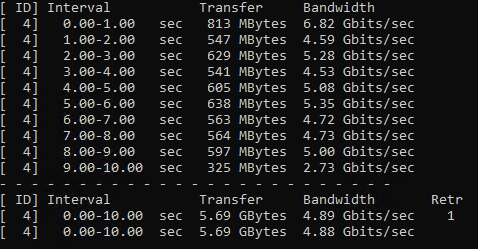

iperf from Windows 11

Read from TrueNAS to Win 11

Write from Win 11 to TrueNAS

iperf from Windows Server

Write from TrueNAS to Win Server

Write from Win Server to TrueNAS

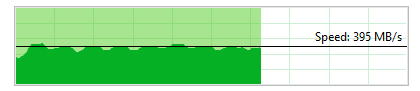

As you can see, I get full-speed reads from TrueNAS to Win Server until I hit the cache limits of the drives.

However, the write speed to TrueNAS seems to be limited somehow. There also seems to be some kind of "write caching", in that an SMB file transfer shows peaks and valleys during the transfer.

Optimization Questions

Are there any optimizations for SMB, TrueNAS, or Windows itself that I should look into? Right now the only tunable I have set is vfs.zfs.metaslab.lba_weighting_enabled = 0
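For anyone who wants to check whether that tunable is actually in effect, below is a small sketch for reading it back. The name above is the FreeBSD-style sysctl; I am assuming that on a Linux-based system like SCALE the equivalent is the OpenZFS module parameter `metaslab_lba_weighting_enabled` under `/sys/module/zfs/parameters`. The helper `read_zfs_param` is just an illustrative name, not part of any TrueNAS tooling.

```python
from pathlib import Path
from typing import Optional

# Assumption: on Linux, OpenZFS exposes its module parameters in this directory.
ZFS_PARAMS = Path("/sys/module/zfs/parameters")


def read_zfs_param(name: str, base: Path = ZFS_PARAMS) -> Optional[str]:
    """Return the current value of a ZFS module parameter, or None if it is absent."""
    try:
        return (base / name).read_text().strip()
    except OSError:
        # Missing file, wrong platform, or no permission to read it.
        return None


if __name__ == "__main__":
    # Assumed Linux name for the FreeBSD-style sysctl quoted in the post.
    param = "metaslab_lba_weighting_enabled"
    value = read_zfs_param(param)
    print(f"{param} = {value if value is not None else 'not found'}")
```

If this prints "not found", the setting may simply not be applied under that name on this platform.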

I have tested with both jumbo frames and regular-size frames; jumbo frames do not make any difference.
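As a sanity check on the jumbo frame result, here is a back-of-the-envelope sketch of how much bandwidth jumbo frames can save in theory. It assumes standard Ethernet framing with no VLAN tag and IPv4 + TCP headers without options, and it only models wire overhead, not the per-packet CPU cost that jumbo frames also reduce.

```python
# Per-frame overhead on the wire, in bytes (standard Ethernet, no VLAN tag).
ETH_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + header + FCS + inter-frame gap
IP_TCP_HEADERS = 20 + 20        # IPv4 + TCP headers without options (assumption)

LINK_MB_PER_S = 10_000 / 8      # 10 Gbit/s expressed in decimal MB/s


def tcp_efficiency(mtu: int) -> float:
    """Fraction of on-wire bytes that carry TCP payload for a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_OVERHEAD
    return payload / on_wire


for mtu in (1500, 9000):
    eff = tcp_efficiency(mtu)
    print(f"MTU {mtu}: {eff:.1%} payload efficiency, "
          f"about {eff * LINK_MB_PER_S:.0f} MB/s of payload at 10 Gbit/s")
```

Under these assumptions the difference is only a few percent (roughly 95% versus 99% efficiency), so wire overhead alone would not explain transfers sitting at 300-450 MB/s.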

Conclusion

Is this normal performance, meaning I should adjust my expectations, or is there something I can tune to get better performance?
