Server:

TrueNAS-12.0-U4.1

HP DL380e Gen8

2x Xeon E5-2450L

96GB ECC DDR3

HP H240 HBA controller

8x 10TB WD Ultrastar in RAIDZ2

Intel X520-DA2 network card with 10Gb SFP

Jumbo frames enabled

Client:

Windows 10

i7-4790

32GB DDR3

6x 256GB SSD in RAID0

Intel X520-DA2 network card with 10Gb SFP

Jumbo frames enabled

Network:

Mikrotik CRS317 switch with 10Gb SFPs

Jumbo frames enabled

iPerf:

Running iPerf gives me around 5.5Gb/s across the network.

root@zfs[/mnt/tank/void]# iperf -s -w 128k

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 125 KByte

------------------------------------------------------------

[ 4] local 10.1.2.1 port 5001 connected with 10.1.3.1 port 55291

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 6.44 GBytes 5.53 Gbits/sec
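As a sanity check, the iperf line can be recomputed, and the result compared with the ceiling a single TCP stream hits, which is roughly window size divided by round-trip time. This is a minimal sketch. It assumes iperf's "GBytes" means 2**30 bytes, its "Gbits/sec" means 10**9 bits/s, and the effective window is about 128 KiB, which is what `-w 128k` requests (the OS may adjust it). The helper names are hypothetical.

```python
# Sketch: sanity-check the iperf result and estimate a single-stream TCP ceiling.
# Assumptions: iperf's "GBytes" is 2**30 bytes, its "Gbits/sec" is 10**9 bits/s, and the
# effective TCP window is about 128 KiB (what -w 128k requests; the OS may adjust it).

WINDOW_BYTES = 128 * 1024  # assumed effective window


def iperf_gbits_per_s(transfer_gbytes: float, seconds: float) -> float:
    """Convert an iperf transfer (binary GBytes) over an interval to decimal Gbit/s."""
    return transfer_gbytes * 2**30 * 8 / seconds / 1e9


def window_ceiling_gbits_per_s(window_bytes: int, rtt_s: float) -> float:
    """A single TCP stream has at most one window in flight per round trip."""
    return window_bytes * 8 / rtt_s / 1e9


def rtt_for_ceiling_s(window_bytes: int, gbits_per_s: float) -> float:
    """Round-trip time at which window_bytes alone would cap a stream at gbits_per_s."""
    return window_bytes * 8 / (gbits_per_s * 1e9)


measured = iperf_gbits_per_s(6.44, 10.0)
rtt_ms = rtt_for_ceiling_s(WINDOW_BYTES, measured) * 1e3
print(f"iperf transfer implies {measured:.2f} Gbit/s")
print(f"a {WINDOW_BYTES}-byte window caps one stream at that rate when RTT is {rtt_ms:.2f} ms")
```

A round trip of about 0.19 ms is plausible on a switched LAN. If that holds here, the 5.5Gb/s figure may reflect the window setting rather than the link. Rerunning the client with a larger `-w`, or with several parallel streams (`-P`), would show whether that is the case.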

Running dd locally:

When testing with an uncompressed dataset, I can write at around 230MB/s and read at around 500MB/s locally on the server using dd.

root@zfs[/mnt/tank/void]# dd if=/dev/zero of=testfile bs=4M count=2500 status=progress

10427039744 bytes (10 GB, 9944 MiB) transferred 46.006s, 227 MB/s

2500+0 records in

2500+0 records out

10485760000 bytes transferred in 46.355416 secs (226203557 bytes/sec)

root@zfs[/mnt/tank/void]# dd if=largearchive.7z of=/dev/null bs=1M status=progress

27142389760 bytes (27 GB, 25 GiB) transferred 54.010s, 503 MB/s

25949+1 records in

25949+1 records out

27210067918 bytes transferred in 54.114394 secs (502824961 bytes/sec)
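dd's summary figures can be recomputed from the byte counts and elapsed times it printed. The sketch below also shows the decimal/binary gap, because dd reports decimal MB/s. Windows Explorer is generally understood to display 1024-based units labelled "MB/s" (an assumption worth keeping in mind when comparing against the SMB screenshots), so the same rate reads about 5% lower there.

```python
# Recompute dd's throughput from the byte counts and times it printed above,
# in decimal MB/s (what dd reports) and binary MiB/s (1024-based units).

DD_RUNS = {
    "write (dd if=/dev/zero)": (10485760000, 46.355416),
    "read (largearchive.7z)": (27210067918, 54.114394),
}


def bytes_per_s(nbytes: int, seconds: float) -> float:
    """Average throughput of one dd run in bytes per second."""
    return nbytes / seconds


for name, (nbytes, seconds) in DD_RUNS.items():
    rate = bytes_per_s(nbytes, seconds)
    print(f"{name}: {rate / 1e6:.0f} MB/s = {rate / 2**20:.0f} MiB/s")
```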

scp across the network from server to client:

I only get around 135MB/s through scp. However, scp appears to max out one CPU core on the server during the transfer, so this may be normal for scp, with single-threaded CPU performance as the limit. Any comments on this are appreciated.

SMB across the network from server to client:

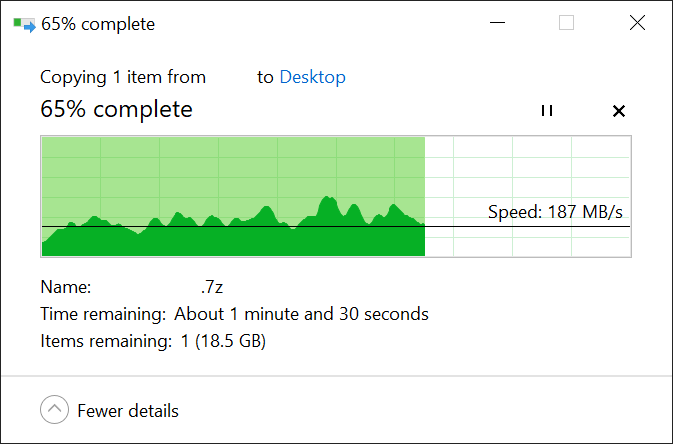

Copying a large file via SMB gives me an average of around 150-200MB/s, with the speed fluctuating widely throughout the transfer. Even if that average is normal, the inconsistency doesn't seem right. The CPU stays fairly idle during the process.
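Reading the same kind of file locally and logging throughput per interval, rather than as a single average, is one way to tell whether the fluctuation comes from the pool or from SMB. This is a minimal sketch that assumes a Python 3 interpreter on the server and a large file that is not already cached. The function name is hypothetical.

```python
# Sketch: read one file sequentially and report MB/s per interval instead of one average,
# to see whether local reads from the pool fluctuate the way the SMB copy does.
# Assumptions: a Python 3 interpreter on the server, and a large file not already cached.
import sys
import time


def read_rates(path: str, chunk_size: int = 1 << 20, interval: float = 1.0):
    """Return (total_bytes, per-interval rates in decimal MB/s) for one sequential read."""
    rates = []
    total = 0
    window_bytes = 0
    window_start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # unbuffered: each read() goes to the OS
        while True:
            block = f.read(chunk_size)
            if not block:  # empty bytes means end of file
                break
            total += len(block)
            window_bytes += len(block)
            now = time.perf_counter()
            elapsed = now - window_start
            if elapsed >= interval and elapsed > 0:
                rates.append(window_bytes / elapsed / 1e6)
                window_bytes = 0
                window_start = now
    # A final partial interval is left out so it cannot skew the per-interval figures.
    return total, rates


if __name__ == "__main__" and len(sys.argv) > 1:
    total, rates = read_rates(sys.argv[1])
    for i, mb_s in enumerate(rates):
        print(f"{i:4d} {mb_s:8.1f} MB/s")
    if rates:
        print(f"min {min(rates):.1f}, max {max(rates):.1f} MB/s over {total} bytes")
```

If the local per-interval rates stay close to the dd average while SMB swings between extremes, the variation is more likely on the SMB side than in the pool.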

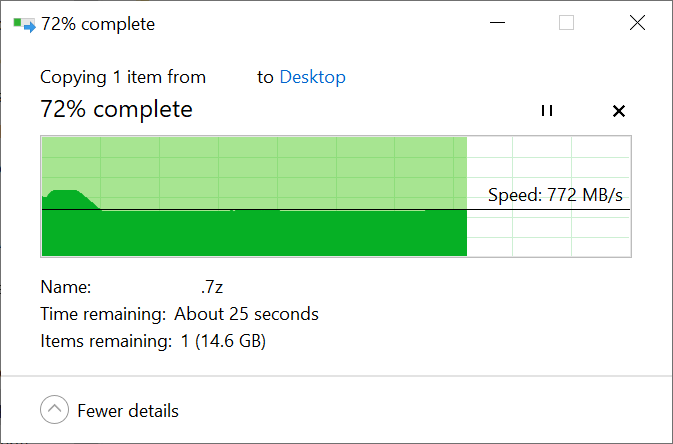

If I then copy the exact same file again (now that it's in the cache), I get full 10Gb line speed. The transfer runs at 1.05GB/s for several seconds until the client's SSD starts throttling itself, which is the actual bottleneck in this screenshot. This suggests to me that there's no problem with my network configuration.
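The 1.05GB/s figure can be expressed as a share of the 10 Gbit/s line rate. The sketch assumes Explorer's "GB/s" is 1024-based (2**30 bytes), and it shows the decimal reading as well because the label is ambiguous.

```python
# Express the cached SMB copy rate as a share of the 10 Gbit/s line rate.
# Assumption: Explorer's "GB/s" is 1024-based (2**30 bytes); the decimal reading is shown too.

LINE_RATE_GBITS = 10.0


def to_gbits_per_s(rate: float, unit_bytes: int) -> float:
    """Convert a rate given in units of unit_bytes per second to decimal Gbit/s."""
    return rate * unit_bytes * 8 / 1e9


for label, unit in (("binary", 2**30), ("decimal", 10**9)):
    gbits = to_gbits_per_s(1.05, unit)
    print(f"1.05 GB/s ({label}) = {gbits:.2f} Gbit/s, {gbits / LINE_RATE_GBITS:.0%} of line rate")
```

Under either reading, the cached copy runs well above iperf's single-stream 5.53 Gbit/s, which is consistent with the link itself not being the limit.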

Given that the server can read from disk at around 500MB/s, and my network can carry more than 500MB/s according to both iPerf and the cached transfer above, why do I only see 150-200MB/s when the file has to be read from disk and sent across the network?
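The reasoning in this question amounts to treating "read from pool, then send" as a pipeline whose ceiling is its slowest stage. This is a back-of-envelope sketch using only the figures quoted above, in decimal MB/s. If Explorer's units are 1024-based (an assumption), the observed SMB range would shift by about 5%.

```python
# Back-of-envelope: reading from the pool and sending over the network is a pipeline,
# so its ceiling is the slowest stage. Figures are the measurements quoted above (decimal MB/s).

stages_mb_per_s = {
    "pool sequential read (dd)": 503.0,
    "network, single iperf stream": 5.53e9 / 8 / 1e6,  # 5.53 Gbit/s converted to MB/s
}

bottleneck = min(stages_mb_per_s, key=stages_mb_per_s.get)
ceiling = stages_mb_per_s[bottleneck]
print(f"expected ceiling: about {ceiling:.0f} MB/s, set by {bottleneck}")
for observed in (150, 200):  # reported SMB range when reading from disk
    print(f"{observed} MB/s is {observed / ceiling:.0%} of that ceiling")
```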

Does anyone have any suggestions for things I can look at?
