My guess is that you're bumping up against the limits of the SAS2008/SAS2308 chipset and/or the 6Gb/s SATA/SAS2 I/O system.

TL;DR: SAS3 gives ~56.8k IOPS and ~7450MB/s, while SAS2/SATA gives ~13.6k IOPS and ~1786MB/s.

My conclusion? The SATA/SAS2 I/O system limits performance, even when using SSDs.
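To put the TL;DR in proportion, here is a quick sketch that divides the SAS3 figures by the SAS2 figures. The numbers are the read-side results copied from the two fio summaries in this post; the dictionary and function names are just illustrative. Because both runs use the same 128k block size, the IOPS ratio and the bandwidth ratio come out nearly identical (roughly 4.2x).

```python
# Read-side figures copied from the fio summaries in this post.
SAS2_BANDIT = {"iops": 13.6e3, "mb_per_s": 1786.0}
SAS3_BACON = {"iops": 56.8e3, "mb_per_s": 7447.0}


def speedup(fast: dict, slow: dict) -> dict:
    """Return the fast/slow ratio for every metric present in both dicts."""
    return {k: fast[k] / slow[k] for k in fast.keys() & slow.keys()}


if __name__ == "__main__":
    for metric, ratio in sorted(speedup(SAS3_BACON, SAS2_BANDIT).items()):
        print(f"{metric}: {ratio:.2f}x")  # prints iops: 4.18x, then mb_per_s: 4.17x
```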

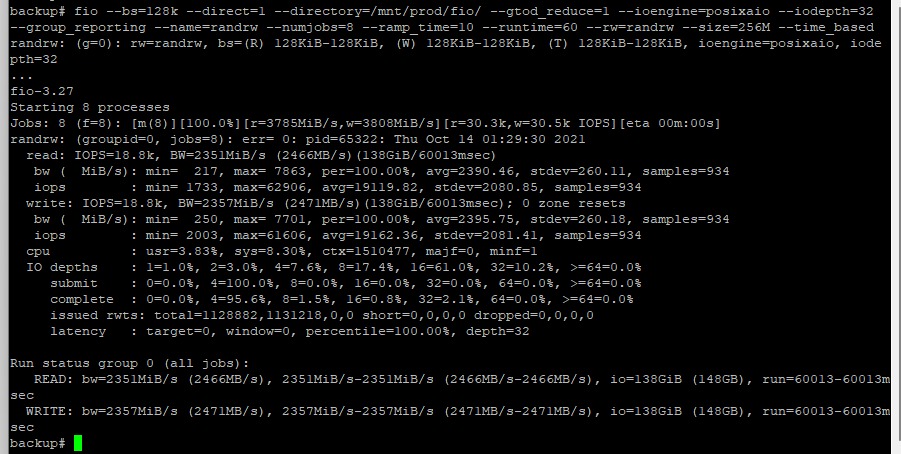

Background: I ran (nearly) the same `fio` benchmark as yours on two of my systems. I say 'nearly' because both servers run FreeNAS 11.2-U8, whose version of `fio` doesn't support the `--gtod_reduce` option you used. The pools on both systems are made of spinning rust, not SSDs.

I get results very similar to yours on the server with 6Gb/s LSI SAS9207-8i HBAs (LSI SAS2308 chipset), and spectacularly better results on the system with a 12Gb/s LSI SAS9300-8i HBA (LSI SAS3008 chipset).

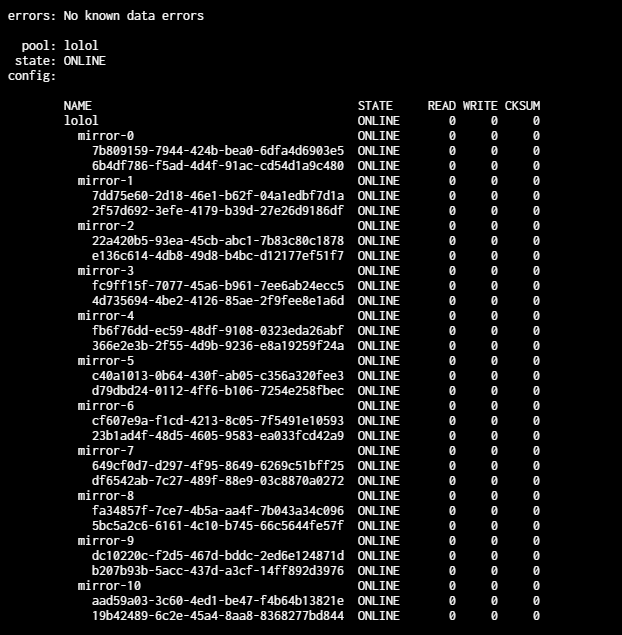

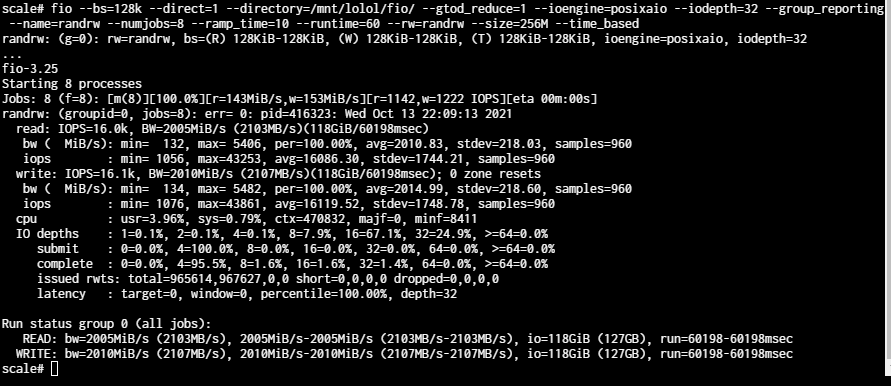

The SATA/SAS2 system ('BANDIT') is a Supermicro X9DRi-LN4F with 3 x LSI SAS9207-8i, a direct-attached SAS2 backplane, and 16 x 4TB SATA disks configured as mirrors. Results are ~13.6k IOPS and ~1786MB/s, much the same as yours:

```
randrw: (groupid=0, jobs=12): err= 0: pid=82115: Sat Oct 16 23:33:04 2021
  read: IOPS=13.6k, BW=1703MiB/s (1786MB/s)(99.8GiB/60015msec)
    slat (nsec): min=524, max=1157.3M, avg=420280.39, stdev=7927301.30
    clat (usec): min=5, max=1940.9k, avg=7019.84, stdev=36687.99
     lat (usec): min=66, max=1941.2k, avg=7440.54, stdev=37766.61
    clat percentiles (usec):
     | 1.00th=[ 55], 5.00th=[ 355], 10.00th=[ 709],
     | 20.00th=[ 1450], 30.00th=[ 2212], 40.00th=[ 3032],
     | 50.00th=[ 3884], 60.00th=[ 4752], 70.00th=[ 5735],
     | 80.00th=[ 6783], 90.00th=[ 8455], 95.00th=[ 10290],
     | 99.00th=[ 34341], 99.50th=[ 206570], 99.90th=[ 608175],
     | 99.95th=[ 767558], 99.99th=[1069548]
   bw ( KiB/s): min= 1303, max=498671, per=8.50%, avg=148189.67, stdev=90632.25, samples=1351
   iops : min= 10, max= 3895, avg=1157.24, stdev=708.06, samples=1351
  write: IOPS=13.6k, BW=1704MiB/s (1787MB/s)(99.9GiB/60015msec)
    slat (usec): min=2, max=1301.2k, avg=410.30, stdev=7885.67
    clat (usec): min=30, max=1941.2k, avg=7442.47, stdev=39351.72
     lat (usec): min=103, max=1941.4k, avg=7853.22, stdev=40445.87
    clat percentiles (usec):
     | 1.00th=[ 57], 5.00th=[ 392], 10.00th=[ 758],
     | 20.00th=[ 1500], 30.00th=[ 2278], 40.00th=[ 3097],
     | 50.00th=[ 3916], 60.00th=[ 4817], 70.00th=[ 5735],
     | 80.00th=[ 6849], 90.00th=[ 8455], 95.00th=[ 10421],
     | 99.00th=[ 42730], 99.50th=[ 235930], 99.90th=[ 650118],
     | 99.95th=[ 801113], 99.99th=[1069548]
   bw ( KiB/s): min= 751, max=486098, per=8.50%, avg=148295.44, stdev=90556.52, samples=1351
   iops : min= 5, max= 3797, avg=1158.05, stdev=707.47, samples=1351
  lat (usec) : 10=0.01%, 50=0.25%, 100=1.52%, 250=1.55%, 500=3.43%
  lat (usec) : 750=3.46%, 1000=3.44%
  lat (msec) : 2=13.29%, 4=24.23%, 10=43.32%, 20=4.05%, 50=0.56%
  lat (msec) : 100=0.18%, 250=0.28%, 500=0.28%, 750=0.11%, 1000=0.04%
  cpu : usr=2.27%, sys=2.02%, ctx=2400591, majf=0, minf=0
  IO depths : 1=2.8%, 2=7.1%, 4=14.8%, 8=29.8%, 16=59.7%, 32=3.7%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=96.9%, 8=0.1%, 16=0.1%, 32=3.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=817461,818140,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
   READ: bw=1703MiB/s (1786MB/s), 1703MiB/s-1703MiB/s (1786MB/s-1786MB/s), io=99.8GiB (107GB), run=60015-60015msec
  WRITE: bw=1704MiB/s (1787MB/s), 1704MiB/s-1704MiB/s (1787MB/s-1787MB/s), io=99.9GiB (107GB), run=60015-60015msec
```
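As a sanity check on the BANDIT numbers, the reported bandwidth should equal IOPS times the block size, and it should also match the `io=` total divided by the `run=` time. This sketch assumes fio's default `kb_base=1024`, so `--bs=128k` means 131072 bytes; the helper names are illustrative only. Both routes land at about 1700 MiB/s, agreeing with the `BW=1703MiB/s` line (the small gap is because fio prints IOPS rounded to "13.6k").

```python
KIB = 1024
MIB = 1024 * KIB


def bandwidth_mib_s(iops: float, block_size_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed block size, in MiB/s."""
    return iops * block_size_bytes / MIB


def bandwidth_from_total(io_gib: float, runtime_ms: int) -> float:
    """Average throughput from fio's io= total (GiB) and run= time (ms), in MiB/s."""
    return io_gib * 1024 / (runtime_ms / 1000)


if __name__ == "__main__":
    bs = 128 * KIB  # --bs=128k from the fio script, assuming kb_base=1024
    print(f"from IOPS:     {bandwidth_mib_s(13.6e3, bs):.0f} MiB/s")  # 1700
    print(f"from io=/run=: {bandwidth_from_total(99.8, 60015):.0f} MiB/s")  # 1703
```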

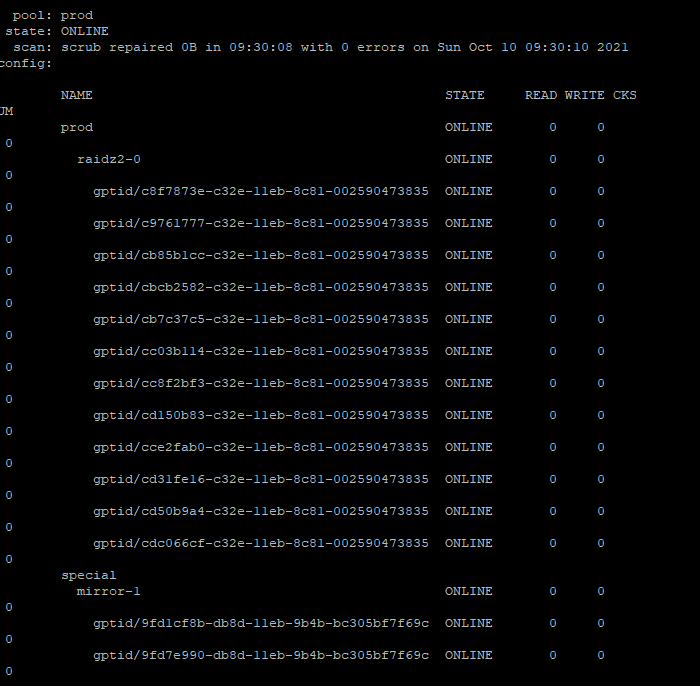

The SAS3 system ('BACON') is a Supermicro X10SRL-F with an LSI SAS9300-8i, a SAS3 expander backplane, and 10 x 4TB SAS3 disks configured as 2 x 5-disk RAIDZ2 vdevs. Results are ~56.8k IOPS and ~7450MB/s (details below):

```
randrw: (groupid=0, jobs=12): err= 0: pid=1378: Sat Oct 16 23:38:34 2021
  read: IOPS=56.8k, BW=7102MiB/s (7447MB/s)(416GiB/60001msec)
    slat (nsec): min=478, max=605061k, avg=66475.01, stdev=1108715.40
    clat (usec): min=7, max=824734, avg=2043.60, stdev=6062.93
     lat (usec): min=39, max=825033, avg=2110.07, stdev=6183.62
    clat percentiles (usec):
     | 1.00th=[ 75], 5.00th=[ 192], 10.00th=[ 310], 20.00th=[ 537],
     | 30.00th=[ 766], 40.00th=[ 1004], 50.00th=[ 1237], 60.00th=[ 1483],
     | 70.00th=[ 1745], 80.00th=[ 2089], 90.00th=[ 3523], 95.00th=[ 7111],
     | 99.00th=[ 14353], 99.50th=[ 17433], 99.90th=[ 61080], 99.95th=[113771],
     | 99.99th=[231736]
   bw ( KiB/s): min=12288, max=1024000, per=8.33%, avg=605694.98, stdev=314143.10, samples=1436
   iops : min= 96, max= 8000, avg=4731.67, stdev=2454.25, samples=1436
  write: IOPS=56.9k, BW=7108MiB/s (7454MB/s)(417GiB/60001msec)
    slat (nsec): min=1376, max=613328k, avg=79069.72, stdev=1357027.67
    clat (usec): min=17, max=842909, avg=2283.93, stdev=6745.07
     lat (usec): min=55, max=842934, avg=2363.00, stdev=6897.94
    clat percentiles (usec):
     | 1.00th=[ 113], 5.00th=[ 233], 10.00th=[ 351], 20.00th=[ 586],
     | 30.00th=[ 816], 40.00th=[ 1057], 50.00th=[ 1287], 60.00th=[ 1532],
     | 70.00th=[ 1795], 80.00th=[ 2180], 90.00th=[ 3949], 95.00th=[ 8291],
     | 99.00th=[ 17433], 99.50th=[ 22414], 99.90th=[ 77071], 99.95th=[137364],
     | 99.99th=[246416]
   bw ( KiB/s): min=10496, max=1024512, per=8.33%, avg=606213.19, stdev=314317.66, samples=1436
   iops : min= 82, max= 8004, avg=4735.72, stdev=2455.62, samples=1436
  lat (usec) : 10=0.01%, 20=0.01%, 50=0.12%, 100=1.10%, 250=5.37%
  lat (usec) : 500=10.79%, 750=10.76%, 1000=10.68%
  lat (msec) : 2=38.12%, 4=13.58%, 10=6.23%, 20=2.74%, 50=0.37%
  lat (msec) : 100=0.08%, 250=0.06%, 500=0.01%, 750=0.01%, 1000=0.01%
  cpu : usr=8.43%, sys=9.43%, ctx=10067248, majf=0, minf=0
  IO depths : 1=1.1%, 2=5.5%, 4=13.5%, 8=28.0%, 16=63.6%, 32=5.6%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=96.6%, 8=0.3%, 16=0.2%, 32=2.9%, 64=0.0%, >=64=0.0%
     issued rwts: total=3408848,3411977,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
   READ: bw=7102MiB/s (7447MB/s), 7102MiB/s-7102MiB/s (7447MB/s-7447MB/s), io=416GiB (447GB), run=60001-60001msec
  WRITE: bw=7108MiB/s (7454MB/s), 7108MiB/s-7108MiB/s (7454MB/s-7454MB/s), io=417GiB (447GB), run=60001-60001msec
```
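One way to compare the two runs is Little's Law: the average number of I/Os in flight equals throughput times mean time in the system. The sketch below plugs in the IOPS and mean total `lat` figures from the two fio outputs above; the function and variable names are hypothetical. It estimates roughly 208 I/Os in flight on BANDIT and roughly 254 on BACON, out of a possible 12 x 32 = 384. Because queue occupancy is broadly similar, most of the ~4x throughput gap seems to show up as lower per-I/O latency on BACON. Treat this as a rough estimate, since fio reports IOPS rounded to three significant figures.

```python
def mean_outstanding(iops: float, mean_lat_us: float) -> float:
    """Little's Law: average I/Os in flight = throughput x mean latency."""
    return iops * mean_lat_us / 1e6  # convert usec to seconds


# (IOPS, mean total latency in usec) for the read and write sides,
# copied from the two fio outputs in this post.
RUNS = {
    "BANDIT (SAS2)": [(13.6e3, 7440.54), (13.6e3, 7853.22)],
    "BACON (SAS3)": [(56.8e3, 2110.07), (56.9e3, 2363.00)],
}

MAX_IN_FLIGHT = 12 * 32  # --numjobs=12 x --iodepth=32

if __name__ == "__main__":
    for name, sides in RUNS.items():
        in_flight = sum(mean_outstanding(iops, lat) for iops, lat in sides)
        print(f"{name}: ~{in_flight:.0f} of {MAX_IN_FLIGHT} possible I/Os in flight")
```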

My `fio` script:

```shell
fio --name=randrw \
    --bs=128k \
    --direct=1 \
    --directory=/mnt/tank/systems \
    --ioengine=posixaio \
    --iodepth=32 \
    --group_reporting \
    --numjobs=12 \
    --ramp_time=10 \
    --runtime=60 \
    --rw=randrw \
    --size=256M \
    --time_based
```
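If you want to rerun the fio script above from a script (for example, to sweep `--iodepth` or `--numjobs`), a minimal Python sketch might build the same argument list and hand it to `subprocess`. The function name `build_fio_cmd` is hypothetical. `--size` is left as a required parameter, and the `256M` in the usage line is an assumed per-job file size, so substitute your own. The command only runs when you pass `--run`. Otherwise it just prints the shell-quoted command.

```python
import shlex
import subprocess
import sys


def build_fio_cmd(directory: str, size: str, numjobs: int = 12, iodepth: int = 32,
                  runtime_s: int = 60, ramp_s: int = 10, bs: str = "128k") -> list[str]:
    """Assemble the randrw fio invocation from this post as an argv list."""
    return [
        "fio",
        "--name=randrw",
        f"--bs={bs}",
        "--direct=1",
        f"--directory={directory}",
        "--ioengine=posixaio",
        f"--iodepth={iodepth}",
        "--group_reporting",
        f"--numjobs={numjobs}",
        f"--ramp_time={ramp_s}",
        f"--runtime={runtime_s}",
        "--rw=randrw",
        f"--size={size}",  # per-job file size; value supplied by the caller
        "--time_based",
    ]


if __name__ == "__main__":
    cmd = build_fio_cmd("/mnt/tank/systems", size="256M")  # 256M is an assumption
    print(shlex.join(cmd))
    if "--run" in sys.argv[1:]:
        subprocess.run(cmd, check=True)  # requires fio on PATH
```

Passing the arguments as a list rather than a single string avoids shell quoting issues if the target directory ever contains spaces.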