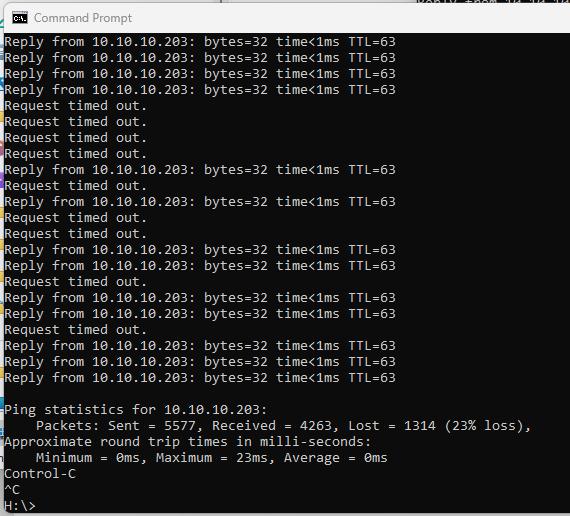

I just upgraded to TrueNAS SCALE 22.12.3.1. The upgrade completed successfully, but since then I have been seeing odd network instability. A continuous ping to the TrueNAS web interface shows about 23% packet loss. Continuous pings to other servers on the same network, subnet, and rack show a 100% success rate. Even a continuous ping to the TrueNAS server's baseboard management controller (BMC) shows 100% success. Only the TrueNAS interface is dropping packets. The main interface runs on a 4x 25 GbE bond to dual redundant top-of-rack (ToR) switches, with MLAG configured across the bonded ports.

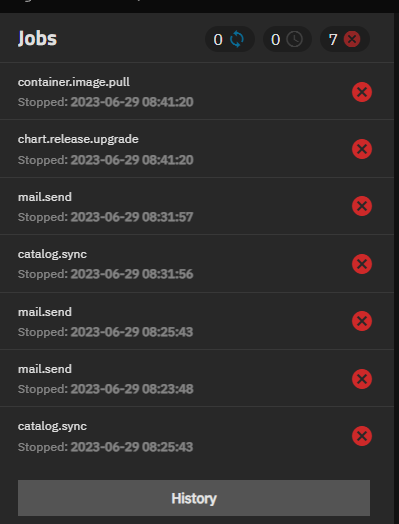

I also saw several other errors and alerts after the upgrade, but I gave the system time to settle down before getting worried. I was seeing failed jobs, as in this screencap:

There were several alerts mentioning something like "Assert" and "Processor Detected", which I stupidly dismissed without taking a screenshot.

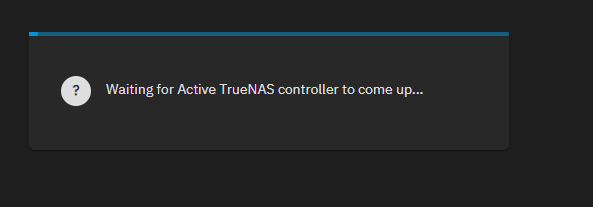

I'll also mention that the web interface is very sluggish when loading pages. Some pages (such as Virtualization) seem to crash the controller: after I click on the page, I get the message "Waiting for Active TrueNAS controller to come up...", and then it returns to the login page. After I log in, it goes to the page I originally requested. The controller also crashes when I try to import a certificate, and after the crash and re-login, the certificate is not present on the Certificates page.

This is running on an enterprise-grade Gigabyte server: AMD EPYC 7313P 16-core, 256 GB RAM, 2x SATA SSDs (boot/OS), 4x NVMe SSDs (2x L2ARC on the main data pool and 2x in a mirrored (RAID 1) data pool for VMs), 16x 18 TB Seagate Exos (15-wide RAIDZ3 plus a hot spare), and 2x 4-port 25 Gbps Broadcom NICs.