mcantkier


Hi all,

First post, I've searched the forums and so far no clues as to what's going on with my server. I did manage to pick up on the pertinent information requested for similar issues and will include them below. I'll do my best to follow the forum rules for posting, please forgive me if I miss something!

Obligatory server information:

Description of the issues I have observed:

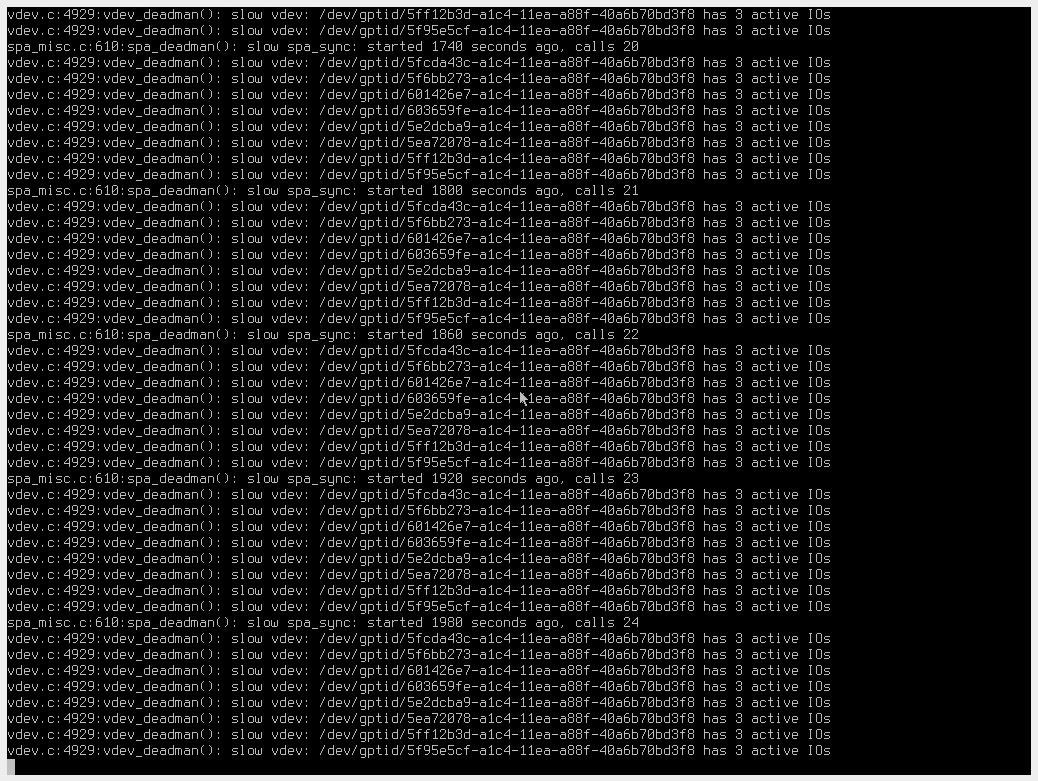

On boot the server loops and then eventually hangs on vdev_deadman(): slow vdev:

I can get past this with a 'CTRL-C' and the server will boot but the (only) pool does not mount or show in /mnt/

Here are various commands and output that may be useful:

The "Scrub in progress" seems to be the root of my issues. If I issue a zpool scrub -s VeeamPool it hangs and never completes.

Just a listing of /mnt

Not sure if it was a good idea but I tried to export/disconnect the pool while retaining all of it's data. It gets to 80% of completion the the server hangs complaining about it is out of swap space and can only be hard booted at that point.

Any ideas or help will be most appreciated.

Thanks,

Michael

First post, I've searched the forums and so far no clues as to what's going on with my server. I did manage to pick up on the pertinent information requested for similar issues and will include them below. I'll do my best to follow the forum rules for posting, please forgive me if I miss something!

Obligatory server information:

Supermicro X11SCA-F Version 1.01A

Intel(R) Core(TM) i7-9700K CPU @ 3.60GHz

64GB ECC DDR4 (16GB DIMM's)

8 x 10TB Ironwolf (2 RAIDZ1 VDEV's) [ST10000VN0008-2J]

2 x CT480BX500SSD1 SSD's (Mirrored) Boot

LSI SAS2308 HBA IT Mode

Intel 10GB 2 x SFP+

Onboard 1GB

TrueNAS-12-0-U2

Description of the issues I have observed:

On boot the server loops and then eventually hangs on vdev_deadman(): slow vdev:

I can get past this with a 'CTRL-C' and the server will boot, but the (only) pool does not mount or show up in /mnt/.

Here are various commands and their output that may be useful:

The "Scrub in progress" seems to be the root of my issues. If I issue a zpool scrub -s VeeamPool, it hangs and never completes.

Code:

zpool status -v

pool: VeeamPool

state: ONLINE

scan: scrub in progress since Sun Mar 7 00:00:02 2021

13.4T scanned at 5.01G/s, 7.57T issued at 5.07M/s, 34.4T total

0B repaired, 21.99% done, no estimated completion time

config:

NAME STATE READ WRITE CKSUM

VeeamPool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

gptid/5fcda43c-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/5f6bb273-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/601426e7-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/603659fe-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

raidz1-1 ONLINE 0 0 0

gptid/5e2dcba9-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/5ea72078-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/5ff12b3d-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

gptid/5f95e5cf-a1c4-11ea-a88f-40a6b70bd3f8 ONLINE 0 0 0

errors: No known data errors

pool: freenas-boot

state: ONLINE

status: Some supported features are not enabled on the pool. The pool can

still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done,

the pool may no longer be accessible by software that does not support

the features. See zpool-features(5) for details.

scan: scrub repaired 0B in 00:00:17 with 0 errors on Tue Mar 2 03:45:17 2021

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ada0p2 ONLINE 0 0 0

ada1p2 ONLINE 0 0 0

errors: No known data errors

Just a listing of /mnt:

Code:

MCR-DC-TrueNAS# cd /mnt

MCR-DC-TrueNAS# ls -l

total 4

-rw-r--r-- 1 root wheel 5 Feb 19 09:25 md_size
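For a sense of scale on the stuck scrub, here is a rough Python sketch that redoes the arithmetic from the scan lines of the zpool status output above. It assumes the T and M suffixes are binary units (TiB and MiB); the variable names are only for illustration.

```python
# Figures copied from the "scan:" lines of zpool status for VeeamPool.
# Assumption: T and M are binary units (TiB, MiB).
TIB = 1024 ** 4
MIB = 1024 ** 2

issued_bytes = 7.57 * TIB   # "7.57T issued"
total_bytes = 34.4 * TIB    # "34.4T total"
issue_rate = 5.07 * MIB     # "at 5.07M/s", in bytes per second

# Share of the pool the scrub has actually issued I/O for.
percent_done = 100 * issued_bytes / total_bytes

# Time left if the issue rate never improves.
remaining_seconds = (total_bytes - issued_bytes) / issue_rate
remaining_days = remaining_seconds / 86400

print(f"{percent_done:.1f}% issued")
print(f"about {remaining_days:.0f} days left at the current issue rate")
```

The percentage agrees with the roughly 22% that zpool status reports, and at 5.07M/s the remaining data would take on the order of two months, which fits the "no estimated completion time" line.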

Code:

camcontrol devlist

<ATA ST10000VN0008-2J SC60> at scbus0 target 0 lun 0 (pass0,da0)

<ATA ST10000VN0008-2J SC60> at scbus0 target 1 lun 0 (pass1,da1)

<ATA ST10000VN0008-2J SC60> at scbus0 target 2 lun 0 (pass2,da2)

<ATA ST10000VN0008-2J SC60> at scbus0 target 3 lun 0 (pass3,da3)

<ATA ST10000VN0008-2J SC60> at scbus0 target 4 lun 0 (pass4,da4)

<ATA ST10000VN0008-2J SC60> at scbus0 target 5 lun 0 (pass5,da5)

<ATA ST10000VN0008-2J SC60> at scbus0 target 6 lun 0 (pass6,da6)

<ATA ST10000VN0008-2J SC60> at scbus0 target 7 lun 0 (pass7,da7)

<HL-DT-ST DVD-ROM DTC0N 1.00> at scbus1 target 0 lun 0 (pass8,cd0)

<CT480BX500SSD1 M6CR022> at scbus2 target 0 lun 0 (pass9,ada0)

<CT480BX500SSD1 M6CR022> at scbus3 target 0 lun 0 (pass10,ada1)

<AHCI SGPIO Enclosure 2.00 0001> at scbus9 target 0 lun 0 (pass11,ses0)

Code:

glabel status

Name Status Components

gptid/8d85e603-9914-11ea-8749-40a6b70bd3f8 N/A ada0p1

gptid/8ce9b7b7-9f73-11ea-ac1d-40a6b70bd3f8 N/A ada1p1

gptid/601426e7-a1c4-11ea-a88f-40a6b70bd3f8 N/A da0p2

gptid/5f6bb273-a1c4-11ea-a88f-40a6b70bd3f8 N/A da1p2

gptid/603659fe-a1c4-11ea-a88f-40a6b70bd3f8 N/A da2p2

gptid/5fcda43c-a1c4-11ea-a88f-40a6b70bd3f8 N/A da3p2

gptid/5ea72078-a1c4-11ea-a88f-40a6b70bd3f8 N/A da4p2

gptid/5f95e5cf-a1c4-11ea-a88f-40a6b70bd3f8 N/A da5p2

gptid/5ff12b3d-a1c4-11ea-a88f-40a6b70bd3f8 N/A da6p2

gptid/5e2dcba9-a1c4-11ea-a88f-40a6b70bd3f8 N/A da7p2
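To make it easier to see which physical disk sits in which vdev, here is a small Python sketch that joins the gptid labels from zpool status with the glabel status listing above. The dictionaries are typed in by hand from those two outputs, and the helper name disks_by_vdev is made up for this example.

```python
# First field of each data-disk gptid -> partition, from glabel status.
# All eight share the suffix -a1c4-11ea-a88f-40a6b70bd3f8, so it is omitted.
GLABEL = {
    "601426e7": "da0p2",
    "5f6bb273": "da1p2",
    "603659fe": "da2p2",
    "5fcda43c": "da3p2",
    "5ea72078": "da4p2",
    "5f95e5cf": "da5p2",
    "5ff12b3d": "da6p2",
    "5e2dcba9": "da7p2",
}

# vdev membership in the order zpool status lists it.
VDEVS = {
    "raidz1-0": ["5fcda43c", "5f6bb273", "601426e7", "603659fe"],
    "raidz1-1": ["5e2dcba9", "5ea72078", "5ff12b3d", "5f95e5cf"],
}


def disks_by_vdev(vdevs, glabel):
    """Return {vdev name: sorted partition names} by joining the two listings."""
    return {
        name: sorted(glabel[gptid] for gptid in members)
        for name, members in vdevs.items()
    }


layout = disks_by_vdev(VDEVS, GLABEL)
for name, partitions in layout.items():
    print(name, " ".join(partitions))
```

With the values above, raidz1-0 works out to da0 through da3 and raidz1-1 to da4 through da7, so a slow or failing disk reported by device name can be tied back to its vdev.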

Code:

gpart show

=> 40 937703008 ada0 GPT (447G)

40 532480 1 efi (260M)

532520 33554432 3 freebsd-swap (16G)

34086952 903610368 2 freebsd-zfs (431G)

937697320 5728 - free - (2.8M)

=> 40 937703008 ada1 GPT (447G)

40 532480 1 efi (260M)

532520 33554432 3 freebsd-swap (16G)

34086952 903616096 2 freebsd-zfs (431G)

=> 40 19532873648 da0 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da1 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da2 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da3 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da4 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da5 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da6 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)

=> 40 19532873648 da7 GPT (9.1T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 19528679256 2 freebsd-zfs (9.1T)
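Since the hang mentions swap, here is a quick Python sketch that converts the sector counts from the gpart show output above into sizes. It assumes gpart is counting 512-byte sectors, which matches the sizes printed in parentheses. The total is only what is partitioned as swap; how much of it the system actually activates is not shown here (swapinfo on the server would tell).

```python
SECTOR_BYTES = 512  # assumption: gpart show is counting 512-byte sectors
GIB = 1024 ** 3
TIB = 1024 ** 4


def size_bytes(sectors):
    """Convert a gpart sector count to bytes."""
    return sectors * SECTOR_BYTES


data_swap = size_bytes(4194304)      # freebsd-swap on each of da0..da7
boot_swap = size_bytes(33554432)     # freebsd-swap on each of ada0, ada1
data_zfs = size_bytes(19528679256)   # freebsd-zfs on each of da0..da7

# Swap space that exists on disk across 8 data disks and 2 boot SSDs.
total_swap_gib = (8 * data_swap + 2 * boot_swap) / GIB

print(f"swap per data disk: {data_swap / GIB:.1f} GiB")
print(f"swap per boot SSD:  {boot_swap / GIB:.1f} GiB")
print(f"zfs per data disk:  {data_zfs / TIB:.2f} TiB")
print(f"swap partitioned in total: {total_swap_gib:.0f} GiB")
```

The per-partition results line up with the 2.0G, 16G and 9.1T figures gpart prints, which supports the 512-byte sector assumption.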

These services depend on pool VeeamPool and will be disrupted if the pool is detached:

SMB Share:

- MCR-DR

iSCSI Extent:

- Veeam

Error: Traceback (most recent call last):

File "/usr/local/lib/python3.8/site-packages/middlewared/job.py", line 367, in run

await self.future

File "/usr/local/lib/python3.8/site-packages/middlewared/job.py", line 403, in __run_body

rv = await self.method(*([self] + args))

File "/usr/local/lib/python3.8/site-packages/middlewared/schema.py", line 973, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/pool.py", line 1572, in export

raise CallError(sysds_job.error)

middlewared.service_exception.CallError: [EFAULT] [EPERM] sysdataset_update.pool: System dataset location may not be moved while the Active Directory service is enabled.

Not sure if it was a good idea, but I tried to export/disconnect the pool while retaining all of its data. It gets to 80% completion, then the server hangs, complaining that it is out of swap space, and at that point it can only be recovered with a hard reboot.

Any ideas or help will be most appreciated.

Thanks,

Michael