Hey folks! I wanted to give all our TrueNAS fans a quick sneak peek at the current status of cluster creation for SCALE. Everything here was done with the latest TrueNAS SCALE and TrueCommand nightly images as of 4/8/2021. Since a picture (or in this case, a series of moving pictures) is worth a thousand words, without further ado, here's what the setup procedure looks like at this stage:

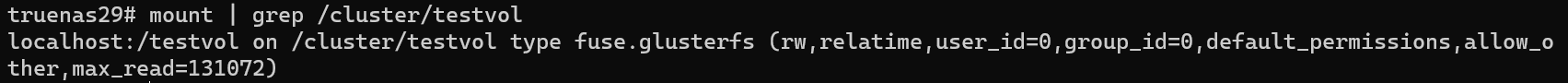

In this example I was able to create a quick dispersed (erasure-coded) cluster with minimal effort, and it is now ready to accept network client connections. If you dig further into each member node of the cluster, you will find that a '/cluster/testvol' mountpoint was also created, providing local access to the cluster volume from any of the member hosts.
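
If you want to confirm that local mountpoint on a node yourself, here is a minimal sketch in Python. It only assumes the '/cluster/testvol' path from the example above; the helper name `cluster_mount_status` is made up for illustration and is not part of TrueNAS or TrueCommand.

```python
import os


def cluster_mount_status(path="/cluster/testvol"):
    """Return a short status string for a cluster mountpoint.

    The default path is the one from the example in this post; pass
    your own volume path if you named the cluster volume differently.
    """
    if not os.path.exists(path):
        # Nothing at this path, so the volume was not set up on this node.
        return "missing"
    if os.path.ismount(path):
        # A filesystem is mounted here, so the volume is reachable locally.
        return "mounted"
    # The directory exists, but no filesystem is mounted on it.
    return "present but not mounted"


if __name__ == "__main__":
    print(cluster_mount_status())
```

Running it on each member node should be enough to tell whether the volume is mounted locally; it says nothing about the health of the cluster itself.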

This is all nightly image code, but it has made good progress in recent months, so we felt it was worth sharing more publicly now. We're looking to have more of this officially documented by the time TrueNAS SCALE 21.06 and TrueCommand 2.0-BETA arrive in the coming months. For the adventurous among you, feel free to kick the tires; as always, bug reports and other feedback are welcome.

