Papulatus (Cadet, joined Jul 9, 2019, 1 message)

Hello everyone, this is my first post in this community. I recently built a NAS server with FreeNAS; this is my server.

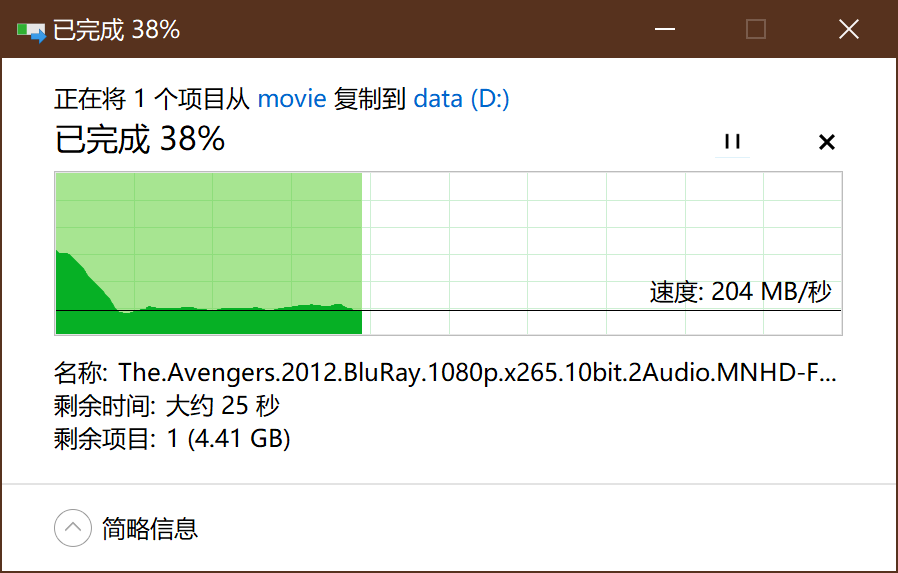

But I found that the cold data(without cache) read speed over samba like this, it's slow, even seq read, it's only 200MB/s,

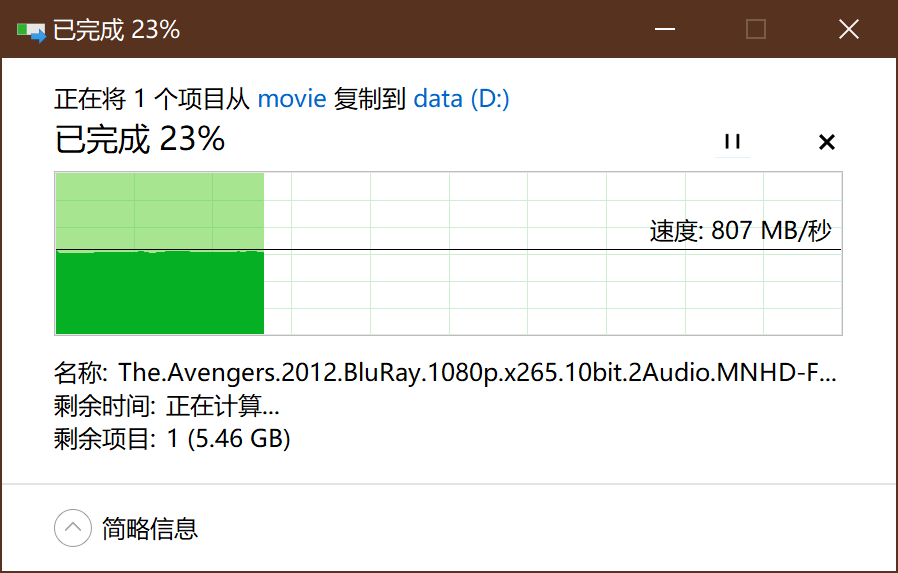

but the second time I read it, the speed was great cause of the amazing arc, it's 800MB/s

- Motherboard: SuperMicro X11SSH-LN4F

- CPU: Intel Xeon E3 1260Lv5

- RAM: 4x 16 GB Samsung DDR4 ECC 2400T

- Boot: 2x Samsung FIT Plus 32GB Mirror

- Hard drives: 8x Seagate IronWolf 4TB RAIDZ2

- HBA card: none; the drives connect directly to the SATA3 ports on the motherboard

- ZIL: Intel Optane 800p 58GB

- L2ARC: Samsung SM961 256GB

- NIC: Mellanox ConnectX-3 MCX312B-XCBT active-backup bond

- PSU: Seasonic 350M1U

- Case: UNAS NSC-810A

I suspected that my Mellanox NIC was not compatible with FreeNAS, so I used iperf to test the network speed. The results:

Server side (FreeNAS):

    [  5]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [  8]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [ 10]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [ 12]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [ 14]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [ 16]   7.00-8.00   sec   140 MBytes  1.17 Gbits/sec
    [ 18]   7.00-8.00   sec   140 MBytes  1.18 Gbits/sec
    [ 20]   7.00-8.00   sec   139 MBytes  1.17 Gbits/sec
    [SUM]   7.00-8.00   sec  1.09 GBytes  9.40 Gbits/sec

Client side (Windows 10):

    [  4]   8.00-8.72   sec   101 MBytes  1.18 Gbits/sec
    [  6]   8.00-8.72   sec   101 MBytes  1.18 Gbits/sec
    [  8]   8.00-8.72   sec   101 MBytes  1.18 Gbits/sec
    [ 10]   8.00-8.72   sec   100 MBytes  1.17 Gbits/sec
    [ 12]   8.00-8.72   sec   100 MBytes  1.17 Gbits/sec
    [ 14]   8.00-8.72   sec   100 MBytes  1.17 Gbits/sec
    [ 16]   8.00-8.72   sec   100 MBytes  1.17 Gbits/sec
    [ 18]   8.00-8.72   sec  99.6 MBytes  1.16 Gbits/sec
    [SUM]   8.00-8.72   sec   804 MBytes  9.37 Gbits/sec
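To compare the iperf total with the Samba transfer speeds in the same units, here is a rough back-of-the-envelope sketch. It assumes the "MB/s" shown for the file copies are decimal megabytes and that iperf's Gbits/sec are decimal gigabits; the function and constant names are only illustrative.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert Gbit/s (10^9 bits) to MB/s (10^6 bytes)."""
    return gbit_per_s * 1e9 / 8 / 1e6


# Numbers taken from this post.
IPERF_SUM_GBIT = 9.40   # server-side [SUM] line
SMB_COLD_MB_S = 200.0   # first (cold) read over Samba
SMB_WARM_MB_S = 800.0   # second read, served from ARC

link_mb_s = gbit_to_mbyte_per_s(IPERF_SUM_GBIT)
cold_fraction = SMB_COLD_MB_S / link_mb_s
warm_fraction = SMB_WARM_MB_S / link_mb_s

print(f"measured link throughput: {link_mb_s:.0f} MB/s")
print(f"cold read uses {cold_fraction:.0%} of the link")
print(f"warm read uses {warm_fraction:.0%} of the link")
```

Under those assumptions the link can carry roughly 1175 MB/s, so the cold read uses about 17% of it and the cached read about 68%.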

So the NIC does not seem to be the bottleneck. I then used fio to do a sequential read of another cold file that was not in cache. The result:

    fio --filename=3.mkv --sync=1 --rw=read --bs=128k --numjobs=1 --iodepth=32 --group_reporting --name=test --runtime=300

    Run status group 0 (all jobs):
       READ: bw=330MiB/s (346MB/s), 330MiB/s-330MiB/s (346MB/s-346MB/s), io=6962MiB (7300MB), run=21099-21099msec

That's about 330 MiB/s (346 MB/s). I'm confused by this speed. Is there a problem with my test, or is this speed normal for a zpool with 8 disks?
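As a sanity check on the fio summary, the bandwidth can be recomputed from the reported `io` and `run` values. The per-disk figure at the end is only a rough estimate and rests on an assumption of mine: that in an 8-disk RAIDZ2 roughly 6 disks' worth of each stripe is data, and that sequential reads spread evenly over them.

```python
MIB = 1024 * 1024  # bytes in one MiB
MB = 1000 * 1000   # bytes in one MB

# Values from the fio "Run status" line in this post.
io_mib = 6962
runtime_s = 21099 / 1000  # run=21099msec

bw_mib_s = io_mib / runtime_s
bw_mb_s = io_mib * MIB / MB / runtime_s

# Assumption: 8 disks in RAIDZ2, so about 6 disks' worth of data per stripe.
DATA_DISKS = 8 - 2
per_disk_mib_s = bw_mib_s / DATA_DISKS

print(f"fio bandwidth: {bw_mib_s:.0f} MiB/s ({bw_mb_s:.0f} MB/s)")
print(f"rough share per data disk: {per_disk_mib_s:.0f} MiB/s")
```

This reproduces fio's 330 MiB/s (346 MB/s) and, under the assumption above, works out to roughly 55 MiB/s per data disk.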

In most cases the speed is fine, and my ARC hit ratio with L2ARC is nearly 100%. The problem is reading cold data. For example, I use a zvol and an iSCSI target to store my games, and every time I want to play World of Warcraft I need to copy the whole game data to my local disk first to warm the cache. If I don't, I get a very long blue loading bar in the game.

Can anyone help me with this? I've searched the forum but didn't find any similar post.

Thanks