ClimbingKId

Cadet

- Joined

- Aug 25, 2021

- Messages

- 6

A long-standing TrueNAS customer here, running 2x10TB WD Gold drives in a mirror for over two years. The system is TrueNAS Core 12.08.1 under ESXi 7, with an LSI 3008 HBA controller passed through and 32GB RAM. It had been running flawlessly and fast across a 10Gb network.

I recently bought two more drives to double the available space. Having taken suitable backups onto a backup TrueNAS machine, I set about building a new pool with the 4 pretested drives arranged as two mirrored vdevs (a stripe of two 2-way mirrors), expecting this to give the best read performance with adequate redundancy.

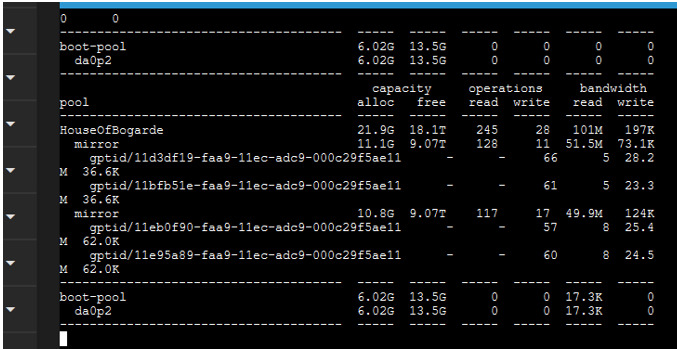

However, read performance was terrible, and slower than my previous single-vdev mirror. After much head scratching I looked at zpool iostat and saw the pool reading only 101M in total, with no drive hitting more than 25M.

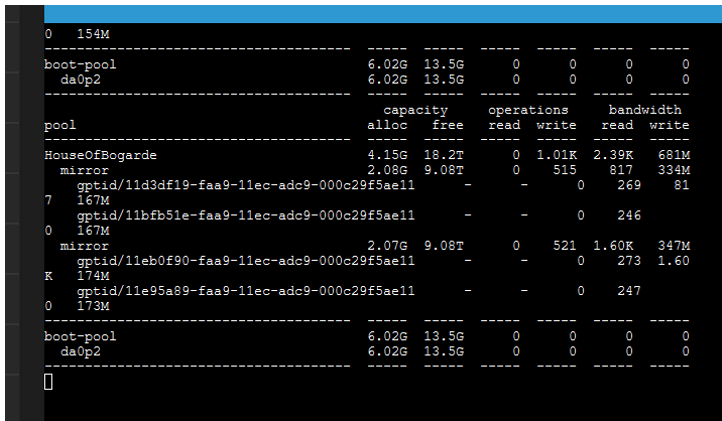
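For anyone wanting to compare numbers, here is a minimal sketch of how per-disk figures like these can be watched while a copy is running. The pool name `tank` is a placeholder (an assumption, not my actual pool name), and the 5-second interval and 3 samples are arbitrary choices.

```shell
#!/bin/sh
# Placeholder pool name (assumption); override with POOL=yourpool.
POOL="${POOL:-tank}"

# -v breaks the bandwidth down per vdev and per disk.
# The trailing "5 3" means: sample every 5 seconds, 3 samples in total.
# The first sample is an average since boot, so read the later ones.
if command -v zpool >/dev/null 2>&1; then
    zpool iostat -v "$POOL" 5 3
else
    echo "zpool not found; run this on the TrueNAS host" >&2
fi
```

Running it during a large sequential read from a client should show whether all four disks are being read from, and how evenly.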

Writing, on the other hand, was very fast, hitting 681M, with each drive hitting 160M+.

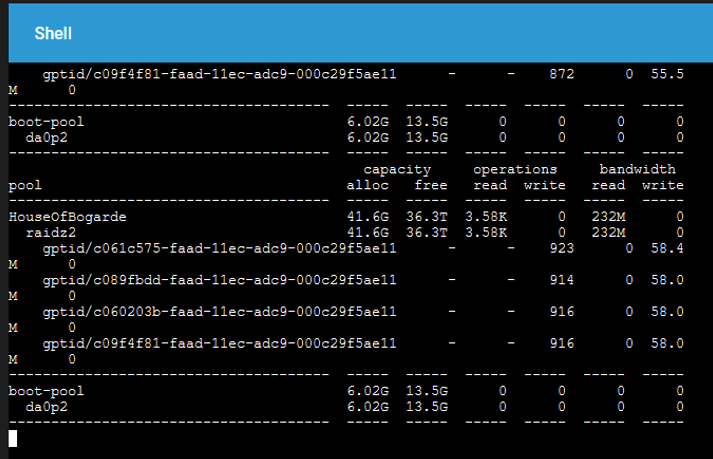

So I burned the mirror and set up a RAIDZ2 array. This time I got much faster reads of about 230-250M.

I tried the setup a few times, but never really got satisfactory read speeds from the dual-vdev mirror. I know the system is capable of more, given the write bandwidth and the RAIDZ2 read performance, but file copies across the network show only around 100M/sec from the mirrors, versus RAIDZ2 doubling or even tripling that.

Cached reads hit 10Gb speeds across the network, and the only thing that changed was the addition of two more WD Gold drives. Even my old single mirror vdev was faster.

Any thoughts or suggestions?

Thanks

CC
