Greetings, y'all. I'm hoping to get some help wrapping my head around the issue I'm seeing. I have long had issues similar to those described in the following post:

www.truenas.com: "SOLVED - Transfer speed drops after the first trasfer"

I had attributed this to hardware or some other inadequate configuration, but performance was tolerable: I could burst to 10GB writes now and again and sustain ~6GB/s until the speed crashed. Since updating to 12.0-U8, performance has gotten worse, and I would like some assistance with it.

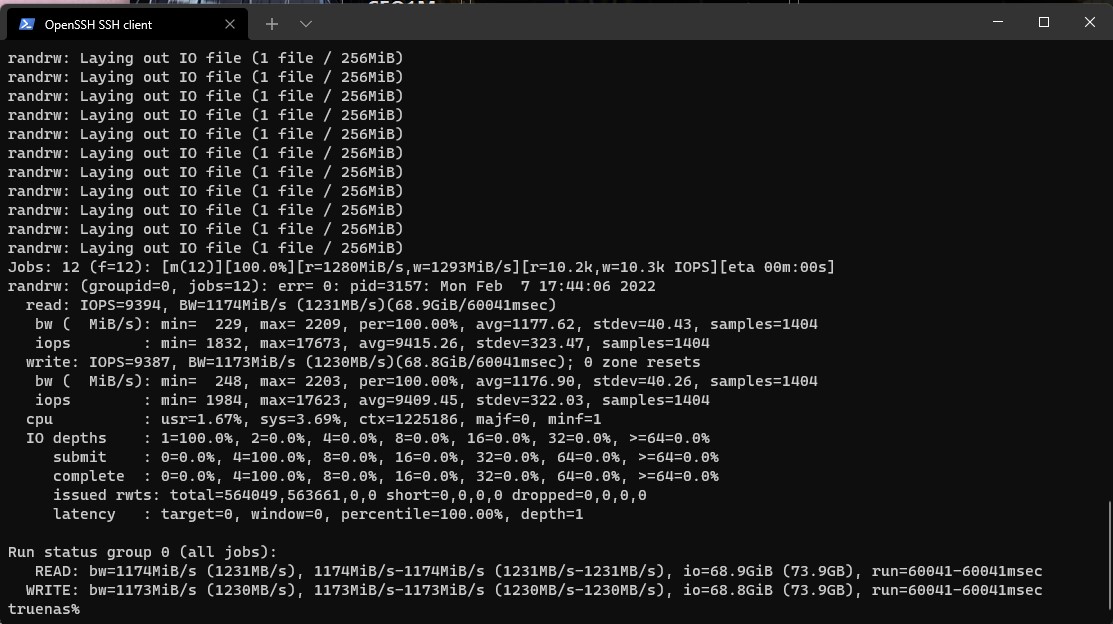

This is a home lab environment primarily hosting VM storage and game storage (largely Steam) via iSCSI, due to game mounting requirements and issues. I'll out myself right out of the gate: the zvols are on RAIDZ1 (3 drives), and I know that is not ideal for block storage, as per @jgreco and his wonderful posts and guides. While it's a less than ideal state, I haven't yet procured the drive(s) to move everything over and blow away the pool. That said, network performance between the NAS and Windows 11 over iSCSI is abysmal at the moment (with or without jumbo frames). Based on some of the guides and posts I have seen of others troubleshooting, I am posting the performance across the drives, which shows I should be able to hit 10G speeds (which I had prior to 12.0-U8).

I'm sure we would want better for block storage; however, the speed has largely been acceptable.

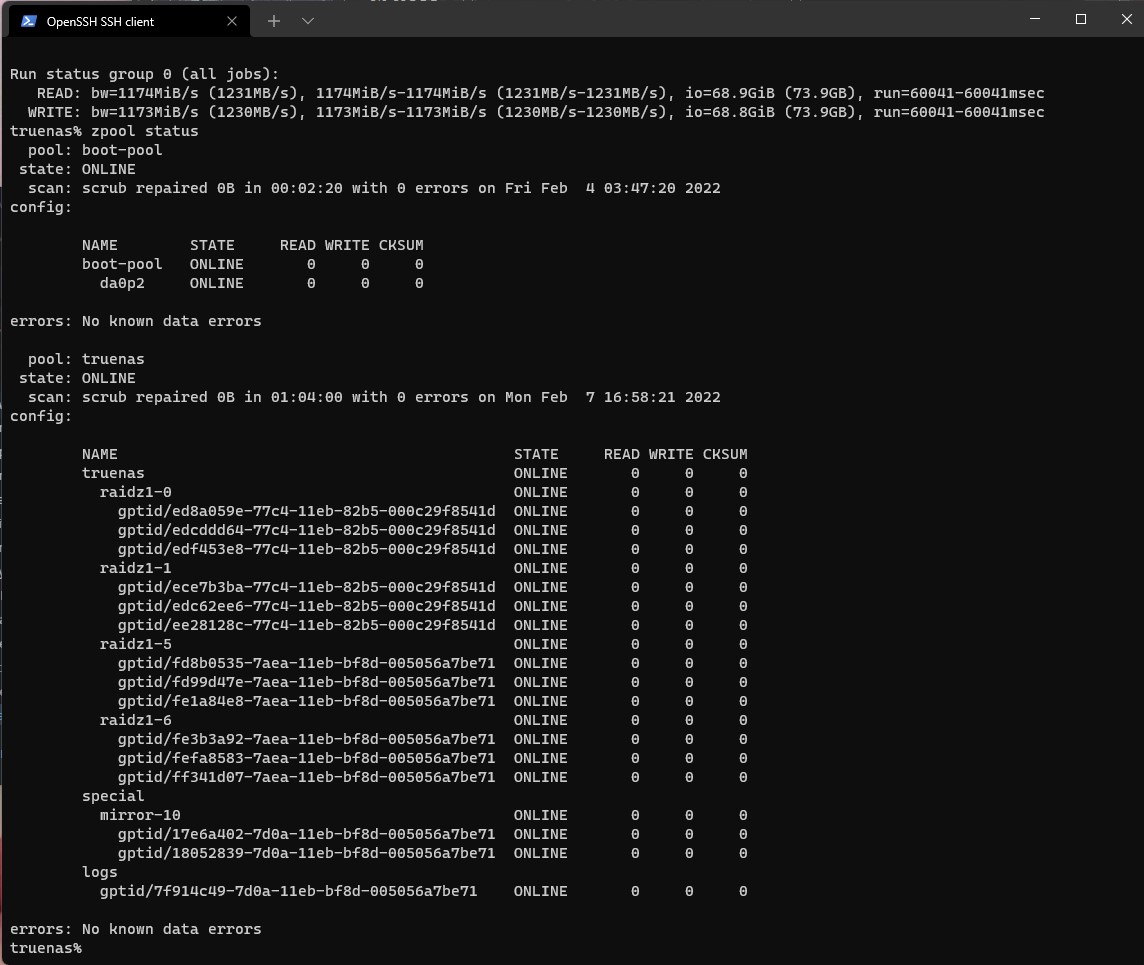

My pool configuration is as follows: 3 SSDs for SLOG and L2ARC, and spinning-rust SAS drives for the rest.

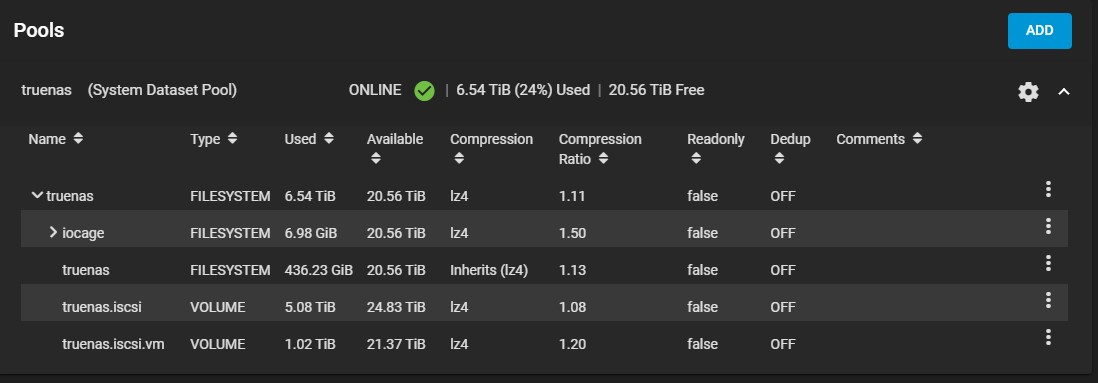

The pool overview is here. Note that it reports 24% used, which is roughly accurate given the one VM in its datastore and the 4 TB of games on the iSCSI share.

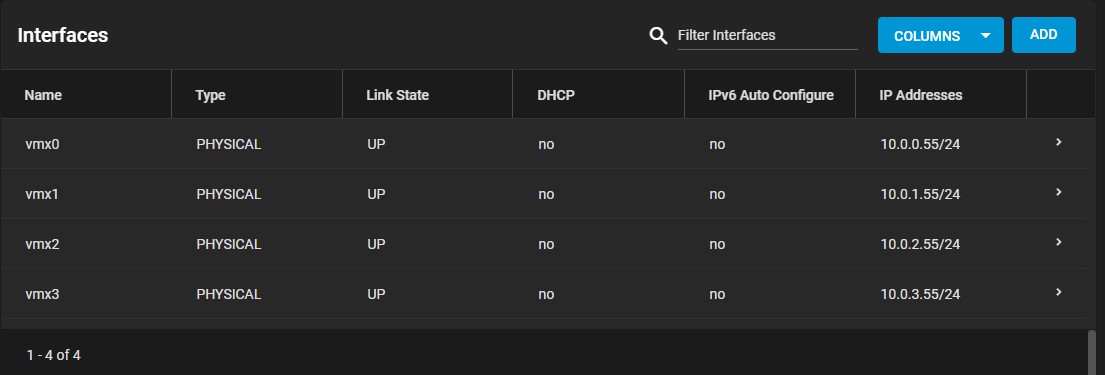

As previously mentioned, I have 10GbE with 4 virtual NICs for MPIO. However, since I'm the only one using the iSCSI share and ESXi connects only once to the VM datastore, I can (and likely will) trim that back if it isn't needed.

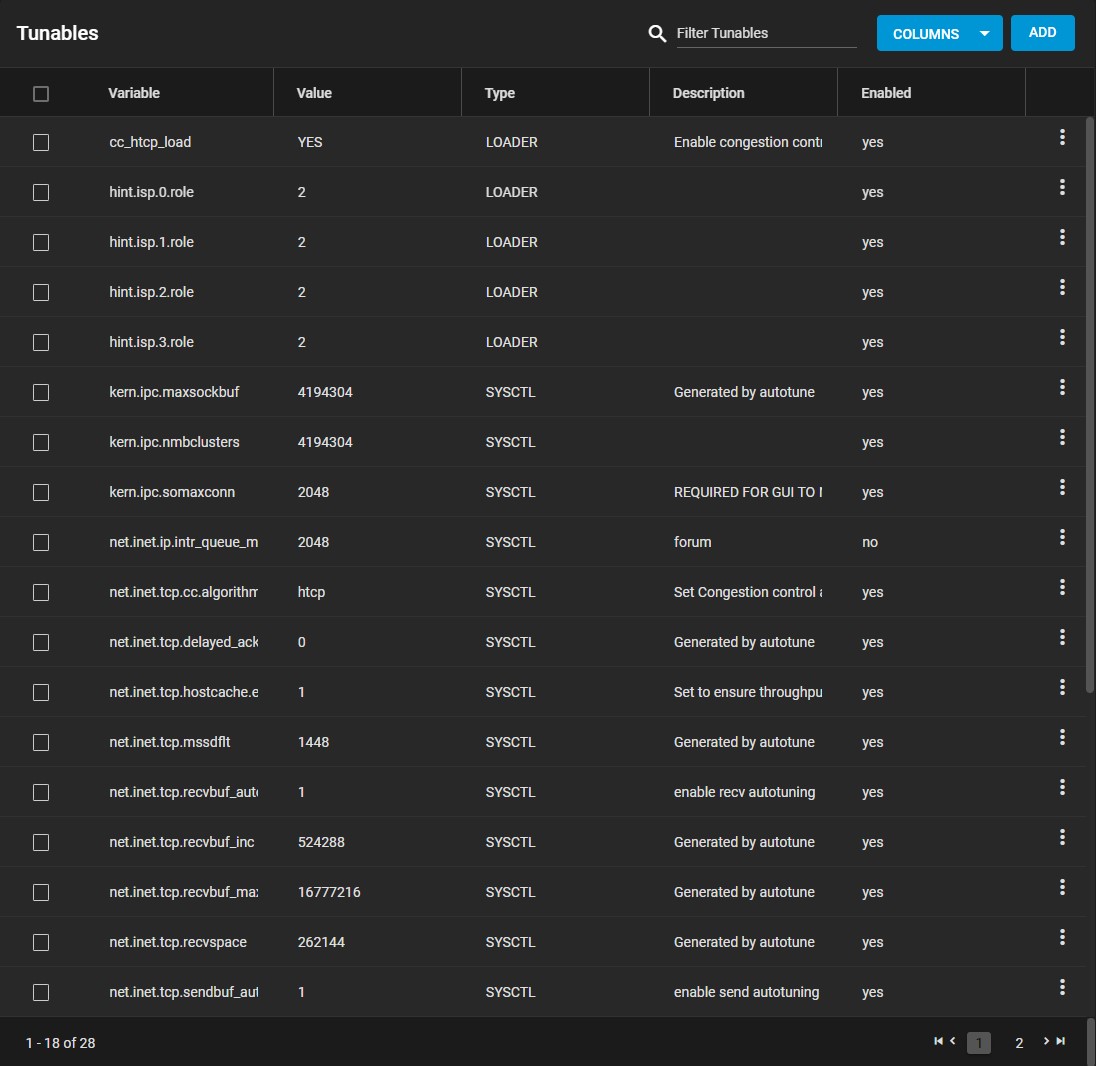

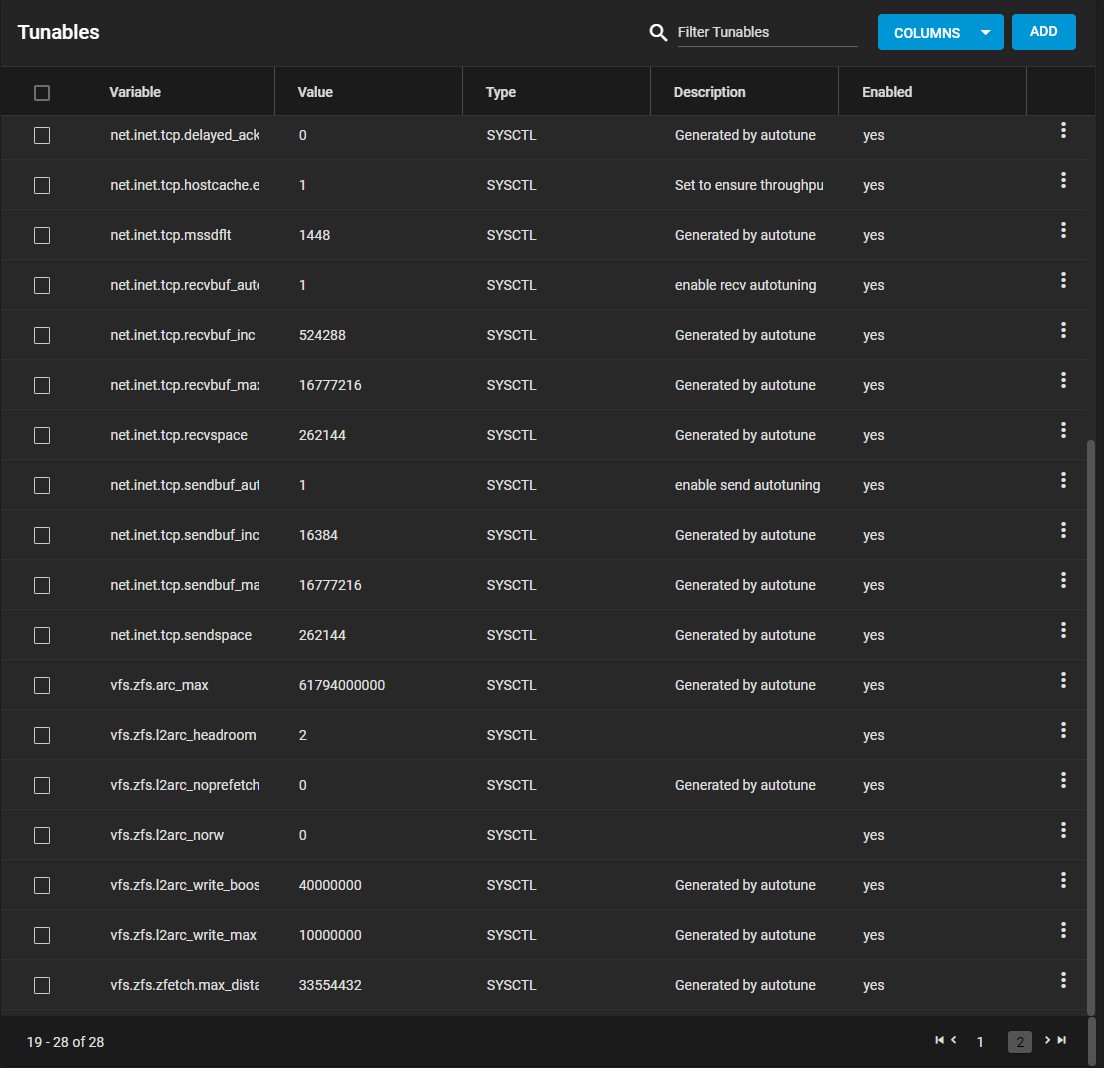

My tunables are as follows:

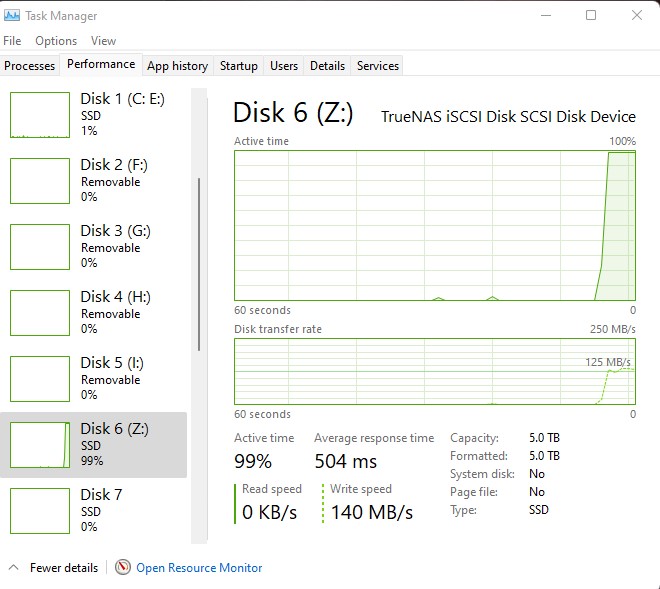

Attached are some graphs showing the performance I experience when attempting to do any work on the NAS, be it a single file move, streaming a file, a benchmark, etc. I'm at a loss as to what changed or why performance is tanking. Network latency shoots through the roof on any file access, and when the connection to the NAS hits 99-100%, latency can be anywhere from 100 to 1000 ms, bringing everything to a screeching halt. While this graph shows 104 MB/s, that is actually a luxury compared with the CrystalDiskMark results that follow, and is likely due to a larger file transfer.

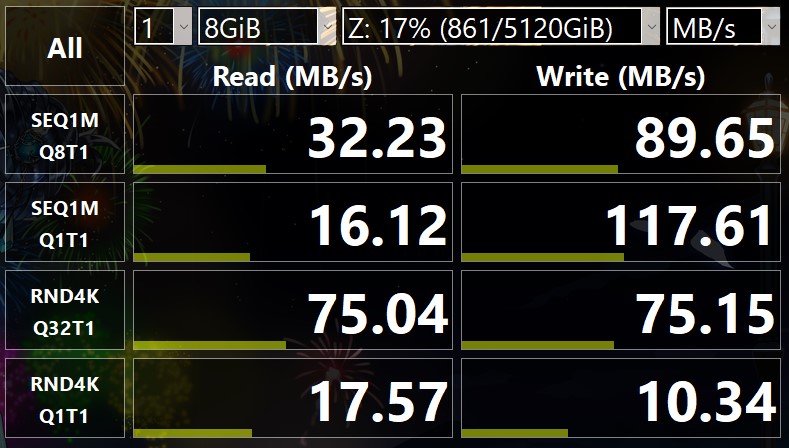

Here, in all its glory, is what I'm experiencing from a file transfer perspective. I previously saw almost 1GB/s in the SEQ1 portions, although given my setup I never expected it to be that high, nor consistently that high. That said, read performance is now terrible and is even lower than the current write performance, which is the opposite of what I have come to expect. Nothing has changed in hardware or software other than the update to 12.0-U8.
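For scale, here is a rough back-of-the-envelope conversion of the two throughput figures in this post (the 104 MB/s from the network graph and the ~1GB/s SEQ1 result I used to see) into 10GbE link utilization. It is only a sketch under stated assumptions: decimal units (1 MB = 10^6 bytes), a nominal 10 Gb/s line rate, and no accounting for Ethernet/IP/TCP/iSCSI overhead, so the real payload ceiling is somewhat lower than the nominal figure.

```python
# Back-of-the-envelope link-utilization arithmetic for the figures in this post.
# Assumptions: decimal units (1 MB = 10**6 bytes) and a nominal 10 Gb/s line rate.
# Protocol overhead (Ethernet/IP/TCP/iSCSI) is ignored, so the usable payload
# ceiling on a real link is somewhat below the nominal rate.

LINE_RATE_GBPS = 10.0  # nominal 10GbE rate, in gigabits per second


def mb_per_s_to_gbps(mb_per_s: float) -> float:
    """Convert megabytes per second (decimal) to gigabits per second."""
    return mb_per_s * 8 / 1000


def link_utilization(mb_per_s: float, line_rate_gbps: float = LINE_RATE_GBPS) -> float:
    """Return the fraction of the nominal line rate used by a given throughput."""
    return mb_per_s_to_gbps(mb_per_s) / line_rate_gbps


if __name__ == "__main__":
    figures = {
        "network graph after 12.0-U8": 104.0,      # MB/s, from the graph
        "SEQ1 before 12.0-U8 (approx.)": 1000.0,   # ~1GB/s, CrystalDiskMark
    }
    for label, mbps in figures.items():
        gbps = mb_per_s_to_gbps(mbps)
        print(f"{label}: {mbps:.0f} MB/s = {gbps:.2f} Gb/s "
              f"({link_utilization(mbps):.0%} of 10GbE)")
```

By this arithmetic, the 104 MB/s in the graph is only about 8% of the 10GbE link, whereas the ~1GB/s SEQ1 result I used to get was roughly 80% of it.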

Thanks for taking the time to look through this and assist; it is greatly appreciated. I'm by no means an expert, but I attempted to follow the best practices laid out as I migrated from Xpenology. One I know I'm currently not following is switching from RAIDZ to mirrors; however, I believe my main and current issue lies elsewhere.

My Specs

Supermicro H8DGU

2x 16-core Opteron 6378 (dual socket)

ESXi 7

96 GB DDR3

LSI 6 Gb/s SAS HBA (I believe in IT mode, but would need to confirm)

Mellanox MT27500 (ConnectX-3) 10G dual-port fiber NIC

TrueNAS 12.0-U8 VM

12 vCPUs

64 GB RAM

HBA passthrough (all 32 bays)

4 NICs (VMXNET3)

10G Brocade 24-port fiber switch for interconnects
