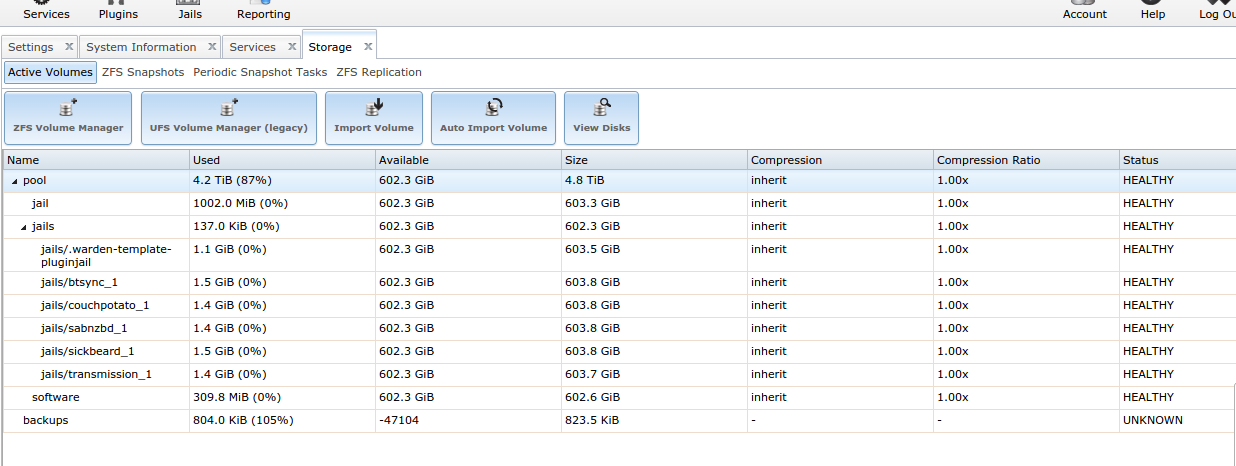

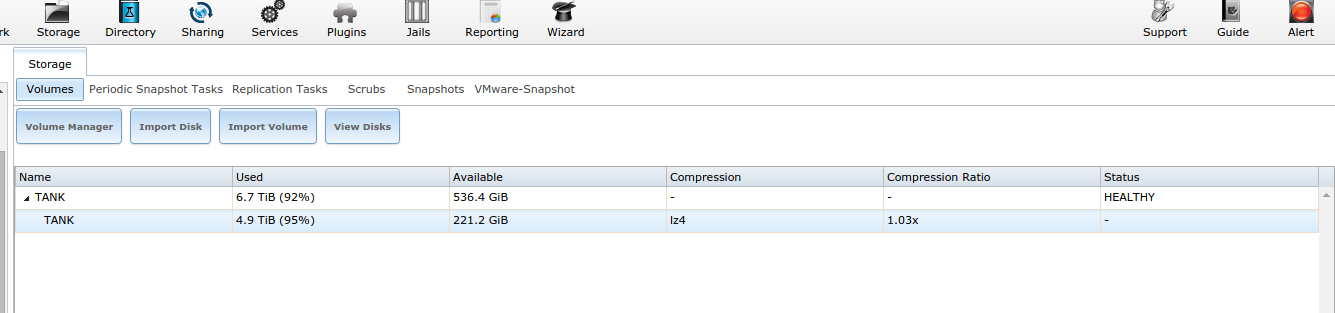

I'm kinda stumped. I have two FreeNAS servers, both running RAIDZ1 with 4x 2TB disks, and I'm trying to rsync between them to migrate to new hardware. I had to stop the process because it was obvious something was wrong; I just don't know what. I didn't have the 'delete' option enabled, but I certainly haven't added 700GB of data that isn't accounted for.

Can anyone help me figure out what to look for? Could snapshots on the sending server cause something like that?

*edit*

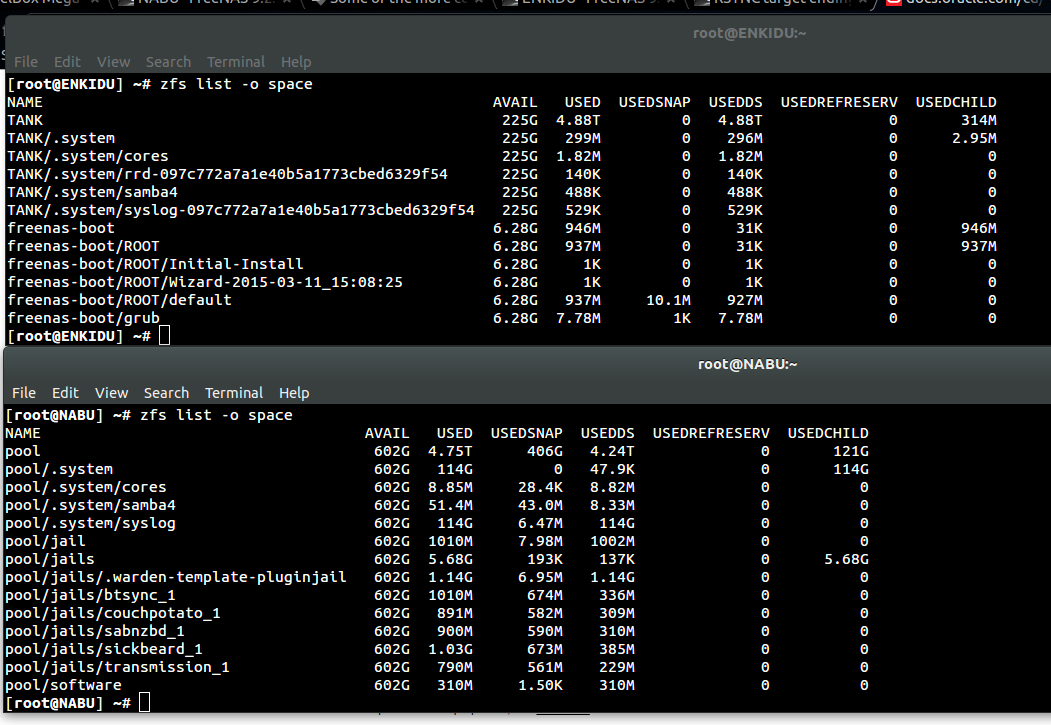

Now that I've looked at the zfs output, the two pools look closer than the GUI suggested. I realize I do need to free up some space so I'm not over the 80% threshold. However, I'm not sure what's happening with the snapshot data in this case, or why it's so effing large. I only keep snapshots for 2 weeks, and I certainly haven't added 400GB of data in the last two weeks.
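To get a rough idea of where that 80% line sits on one of these pools, here's a quick Python sketch. It assumes RAIDZ1 gives up one disk's worth of space to parity and ignores ZFS metadata, padding and reserved slop space, so the usable figure FreeNAS actually reports will be somewhat lower. The function names are just made up for illustration.

```python
TB = 10**12   # drive labels use decimal terabytes
TIB = 2**40   # ZFS/FreeNAS report sizes in binary units (TiB)


def raidz1_usable_bytes(disks: int, disk_bytes: int) -> int:
    """Rough RAIDZ1 data capacity: one disk's worth goes to parity.

    Ignores ZFS metadata, padding and slop-space reservations.
    """
    if disks < 2:
        raise ValueError("RAIDZ1 needs at least 2 disks")
    return (disks - 1) * disk_bytes


def threshold_bytes(usable: int, percent: int = 80) -> int:
    """Bytes at which the pool crosses the given fill percentage."""
    return usable * percent // 100


usable = raidz1_usable_bytes(4, 2 * TB)
limit = threshold_bytes(usable)
print(f"usable ~ {usable / TIB:.2f} TiB, 80% mark ~ {limit / TIB:.2f} TiB")
# prints: usable ~ 5.46 TiB, 80% mark ~ 4.37 TiB
```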

Ah well, if anyone can provide guidance or input, I'd greatly appreciate it.
