Thank you both for your replies!

I probably have a lot to learn about snapshots and will follow up with some more questions that came up along the way. But first, let's answer your questions in chronological order.

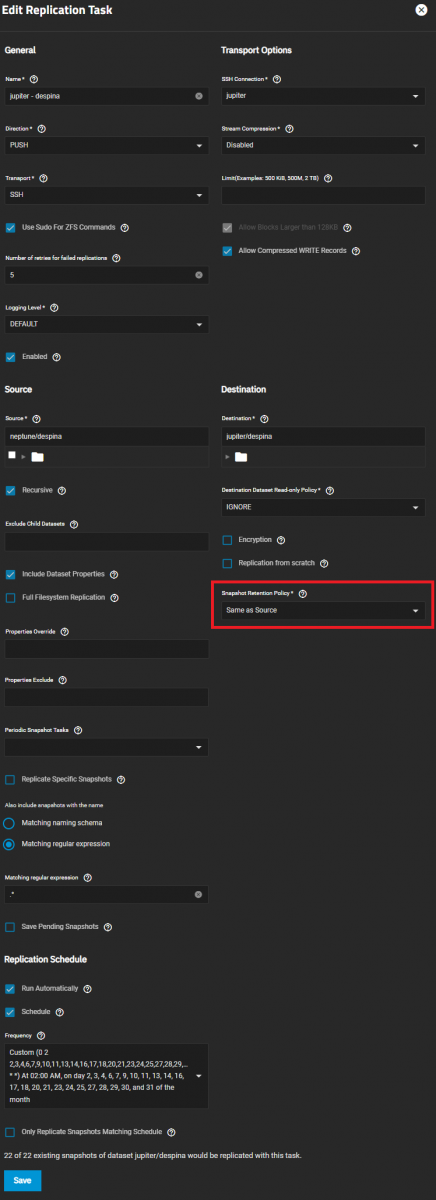

The screenshots seem to depict that @chuck32 is logged into two different servers (Source and Destination).

The Destination server wouldn't know about the expiration / pruning policy configured on the Source server. In fact, the Destination could even be a non-TrueNAS ZFS server.

Yes, as I laid out in my OP, the destination is a separate machine. That's why the screenshots have two color themes (I don't want to accidentally mix up which machine I'm working on). Both run the same version of TrueNAS, though.

So when the time comes, zettarepl (initiated on the Source side) will prune the expired snapshots over-the-wire on the Destination side.

Let's see if it actually prunes after the timer expires.

Edit: And it does - the snapshot pruning is only tracked on the machine where the job actually runs. Points to the minty user in the front row.

How did you know that before me? ;)

I set up a test dataset with hourly snapshots and a two-hour retention time. I can confirm that the snapshots were deleted on the remote machine as well. Additionally, the snapshots I had manually deleted on the source were also destroyed on the remote, which is nice.

Code:

[2024/01/20 08:00:00] INFO [MainThread] [zettarepl.zettarepl] Scheduled tasks: [<Periodic Snapshot Task 'task_17'>]

[2024/01/20 08:00:00] INFO [MainThread] [zettarepl.snapshot.create] On <Shell(<LocalTransport()>)> creating recursive snapshot ('neptune/test-dataset', 'auto-2024-01-20_08-00')

[2024/01/20 08:00:00] INFO [MainThread] [zettarepl.zettarepl] Created ('neptune/test-dataset', 'auto-2024-01-20_08-00')

[2024/01/20 08:00:00] INFO [Thread-1984] [zettarepl.paramiko.replication_task__task_17] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/20 08:00:00] INFO [Thread-1984] [zettarepl.paramiko.replication_task__task_17] Authentication (publickey) successful!

[2024/01/20 08:00:01] INFO [replication_task__task_17] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots: [('jupiter/test-dataset', 'auto-2023-12-22_22-44'), ('jupiter/test-dataset', 'auto-2023-12-23_22-44'), ('jupiter/test-dataset', 'auto-2023-12-24_22-44')]

[2024/01/20 08:00:01] INFO [replication_task__task_17] [zettarepl.snapshot.destroy] On <Shell(<SSH Transport(admin@192.168.178.143)>)> for dataset 'jupiter/test-dataset' destroying snapshots {'auto-2023-12-23_22-44', 'auto-2023-12-22_22-44', 'auto-2023-12-24_22-44'}

[2024/01/20 08:00:01] INFO [Thread-1986] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/20 08:00:01] INFO [Thread-1986] [zettarepl.paramiko.retention] Authentication (publickey) successful!

[2024/01/20 08:00:01] INFO [replication_task__task_17] [zettarepl.replication.run] For replication task 'task_17': doing push from 'neptune/test-dataset' to 'jupiter/test-dataset' of snapshot='auto-2024-01-20_08-00' incremental_base='auto-2024-01-20_04-00' include_intermediate=False receive_resume_token=None encryption=False

[2024/01/20 08:00:01] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: []

[2024/01/20 08:00:03] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: []

[2024/01/20 08:00:03] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []

[2024/01/20 08:00:04] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: [('neptune/test-dataset', 'auto-2024-01-20_04-00')]

[2024/01/20 08:00:04] INFO [retention] [zettarepl.snapshot.destroy] On <Shell(<LocalTransport()>)> for dataset 'neptune/test-dataset' destroying snapshots {'auto-2024-01-20_04-00'}

[2024/01/20 08:00:05] INFO [Thread-1989] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/20 08:00:05] INFO [Thread-1989] [zettarepl.paramiko.retention] Authentication (publickey) successful!

[2024/01/20 08:00:06] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: [('jupiter/test-dataset', 'auto-2024-01-20_04-00')]

[2024/01/20 08:00:06] INFO [retention] [zettarepl.snapshot.destroy] On <Shell(<SSH Transport(admin@192.168.178.143)>)> for dataset 'jupiter/test-dataset' destroying snapshots {'auto-2024-01-20_04-00'}

[2024/01/20 08:00:06] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []
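To double-check from the log which snapshots were destroyed, and on which side, the INFO lines ending in "destroying snapshots: [...]" can be pulled out with a few lines of Python. This is a minimal sketch that assumes the log line format shown above stays stable; the regex and the helper name destroyed_snapshots are hypothetical, not part of zettarepl.

```python
import ast
import re

# Assumed format, taken from the log above:
# "[<timestamp>] INFO [<thread>] [<logger>] <context> destroying [local ]snapshots: [(<dataset>, <snapshot>), ...]"
DESTROY_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\] INFO \[[^\]]+\] \[[^\]]+\] "
    r"(?P<context>.*?) destroying (?:local )?snapshots: (?P<snaps>\[.*\])$"
)


def destroyed_snapshots(lines):
    """Yield (timestamp, context, dataset, snapshot) for every non-empty destroy list."""
    for line in lines:
        match = DESTROY_RE.match(line.strip())
        if match is None:
            continue  # not a retention / pre-retention summary line
        # The list is a Python repr of (dataset, snapshot) tuples, so literal_eval can read it safely.
        for dataset, snapshot in ast.literal_eval(match.group("snaps")):
            yield match.group("ts"), match.group("context"), dataset, snapshot


SAMPLE_LOG = """\
[2024/01/20 08:00:01] INFO [replication_task__task_17] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots: [('jupiter/test-dataset', 'auto-2023-12-22_22-44'), ('jupiter/test-dataset', 'auto-2023-12-23_22-44'), ('jupiter/test-dataset', 'auto-2023-12-24_22-44')]
[2024/01/20 08:00:03] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: []
[2024/01/20 08:00:04] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: [('neptune/test-dataset', 'auto-2024-01-20_04-00')]
[2024/01/20 08:00:06] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: [('jupiter/test-dataset', 'auto-2024-01-20_04-00')]
"""

if __name__ == "__main__":
    for row in destroyed_snapshots(SAMPLE_LOG.splitlines()):
        print(row)
```

On this excerpt it reports the three pre-retention deletions on jupiter and the 04:00 snapshot being destroyed on both sides; lines with an empty list are skipped.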

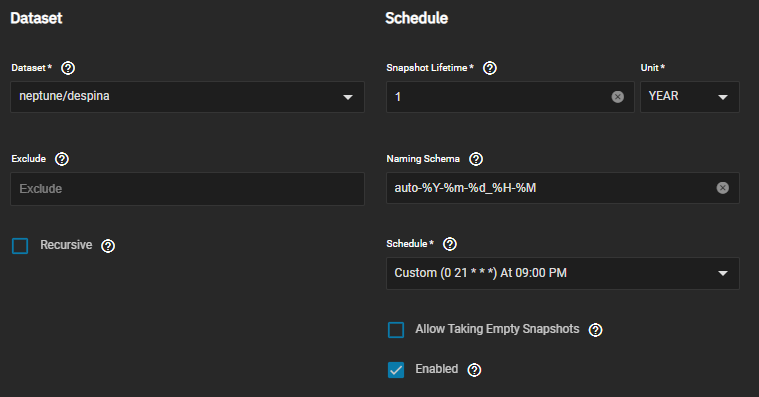

Now on to the questions that came up while I was going through the log files. Some context up front:

syncthing-data and scanned-documents are both under /mnt/neptune; neither is a child of the other.

| Dataset | Snapshot schedule | Replication schedule |
| --- | --- | --- |
| syncthing-data | 00:03 daily | 12:00 daily |
| scanned-documents | 22:47 daily | linked to snapshot |

1) The snapshot auto-2024-01-20_04-00 was correctly marked for deletion; the GUI even showed that it would be destroyed at 06:00. When I checked after 07:00, it was still there, and it was only destroyed during the 08:00 run. Even if there were some local/UTC time mix-up (I'm one hour ahead of UTC), it should have been destroyed at 07:00. The logs don't mention the snapshot at 05:00, 06:00 or 07:00.

Curious, to say the least. Any idea what causes the delay?
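The 06:00 in the GUI is simply the creation time encoded in the snapshot name plus the two-hour lifetime. A small sketch of that arithmetic, assuming the naming schema auto-%Y-%m-%d_%H-%M seen in the logs and naive local timestamps; the hourly check times are hypothetical and only show from when the snapshot is eligible for deletion, not when zettarepl actually runs its retention pass.

```python
from datetime import datetime, timedelta

# Assumptions: the naming schema matches the snapshot names in the logs,
# and all timestamps are naive local times (no UTC conversion).
NAMING_SCHEMA = "auto-%Y-%m-%d_%H-%M"
LIFETIME = timedelta(hours=2)  # two-hour retention of the test dataset


def expires_at(snapshot_name: str, lifetime: timedelta = LIFETIME) -> datetime:
    """Return the creation time parsed from the snapshot name plus the lifetime."""
    created = datetime.strptime(snapshot_name, NAMING_SCHEMA)
    return created + lifetime


expiry = expires_at("auto-2024-01-20_04-00")
# Hypothetical hourly checks from 05:00 to 08:00 on the same day.
checks = [datetime(2024, 1, 20, hour) for hour in range(5, 9)]
eligible = [check.strftime("%H:%M") for check in checks if check >= expiry]

print(expiry)    # 2024-01-20 06:00:00
print(eligible)  # ['06:00', '07:00', '08:00']
```

By this arithmetic the snapshot was already overdue at 07:00, which is exactly the discrepancy described above.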

2) Then I realized some of the tasks seemed to run at weird times.

Code:

[2024/01/18 22:47:00] INFO [MainThread] [zettarepl.zettarepl] Scheduled tasks: [<Periodic Snapshot Task 'task_16'>]

[2024/01/18 22:47:00] INFO [MainThread] [zettarepl.snapshot.create] On <Shell(<LocalTransport()>)> creating recursive snapshot ('neptune/scanned-documents', 'auto-2024-01-18_22-47')

[2024/01/18 22:47:00] INFO [MainThread] [zettarepl.zettarepl] Created ('neptune/scanned-documents', 'auto-2024-01-18_22-47')

[2024/01/18 22:47:00] INFO [Thread-1784] [zettarepl.paramiko.replication_task__task_16] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/18 22:47:00] INFO [Thread-1784] [zettarepl.paramiko.replication_task__task_16] Authentication (publickey) successful!

[2024/01/18 22:47:01] INFO [replication_task__task_16] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots: []

[2024/01/18 22:47:01] INFO [Thread-1786] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/18 22:47:01] INFO [replication_task__task_16] [zettarepl.replication.run] For replication task 'task_16': doing push from 'neptune/scanned-documents' to 'jupiter/scanned-documents' of snapshot='auto-2024-01-18_22-43' incremental_base=None include_intermediate=True receive_resume_token=None encryption=False

[2024/01/18 22:47:01] INFO [Thread-1786] [zettarepl.paramiko.retention] Authentication (publickey) successful!

[2024/01/18 22:47:01] ERROR [retention] [zettarepl.replication.task.snapshot_owner] Failed to list snapshots with <Shell(<SSH Transport(admin@192.168.178.143)>)>: ExecException(1, "cannot open 'jupiter/scanned-documents': dataset does not exist\n"). Assuming remote has no snapshots

[2024/01/18 22:47:01] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: []

[2024/01/18 22:47:03] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: []

[2024/01/18 22:47:03] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []

[2024/01/18 22:47:07] INFO [replication_task__task_16] [zettarepl.replication.run] For replication task 'task_16': doing push from 'neptune/scanned-documents' to 'jupiter/scanned-documents' of snapshot='auto-2024-01-18_22-47' incremental_base='auto-2024-01-18_22-43' include_intermediate=True receive_resume_token=None encryption=False

[2024/01/18 22:47:09] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: []

[2024/01/18 22:47:10] INFO [Thread-1789] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/18 22:47:10] INFO [Thread-1789] [zettarepl.paramiko.retention] Authentication (publickey) successful!

[2024/01/18 22:47:11] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: []

[2024/01/18 22:47:11] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []

[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] Connecting...

[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] [async_exec:239] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/scanned-documents'] with sudo=False

[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] [async_exec:239] Reading stdout

[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] [async_exec:239] Waiting for exit status

[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] [async_exec:239] Success: 'jupiter/scanned-documents@auto-2024-01-18_22-43\njupiter/scanned-documents@auto-2024-01-18_22-47\n'

[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] Connecting...

[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] [async_exec:240] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/syncthing-data'] with sudo=False

[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] [async_exec:240] Reading stdout

[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] [async_exec:240] Waiting for exit status

[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] [async_exec:240] Success: 'jupiter/syncthing-data@auto-2023-10-21_21-00\njupiter/syncthing-data@auto-2023-10-22_21-00\njupiter/syncthing-data@auto-2023-10-23_21-00\njupiter/syncthing-data@auto-2023-10-24_21-00\njupiter/syncthing-data@auto-2023-10-25_21-00\njupiter/syncthing-data@auto-2023-10-27_21-00\njupiter/syncthing-data@auto-2023-10-28_21-00\njupiter/syncthing-data@auto-2023-10-29_21-00\njupiter/syncthing-data@auto-2023-10-30_21-00\njupiter/syncthing-data@auto-2023-10-31_21-00\njupiter/syncthing-data@auto-2023-11-01_21-00\njupiter/syncthing-data@auto-2023-11-02_21-00\njupiter/syncthing-data@auto-2023-11-03_21-00\njupiter/syncthing-data@auto-2023-11-04_21-00\njupiter/syncthing-data@auto-2023-11-05_21-00\njupiter/syncthing-data@auto-2023-11-06_21-00\njupiter/syncthing-data@auto-2023-11-07_21-00\njupiter/syncthing-data@auto-2023-11-08_21-00\njupiter/syncthing-data@auto-2023-11-11_21-00\njupiter/syncthing-data@auto-2023-11-13_21-00\njupiter/syncthing-data@auto-2023-11-14_21-00\njupiter/syncthing-data@auto-2023-11-15_21-00\njupiter/syncthing-data@auto-2023-11-16_21-00\njupiter/syncthing-data@auto-2023-11-17_21-00\njupiter/syncthing-data@auto-2023-11-18_21-00\njupiter/syncthing-data@auto-2023-11-19_21-00\njupiter/syncthing-data@auto-2023-11-20_21-00\njupiter/syncthing-data@auto-2023-11-21_21-00\njupiter/syncthing-data@auto-2023-11-22_21-00\njupiter/syncthing-data@auto-2023-11-23_21-00\njupiter/syncthing-data@auto-2023-11-24_21-00\njupiter/syncthing-data@auto-2023-11-25_21-00\njupiter/syncthing-data@auto-2023-11-26_21-00\njupiter/syncthing-data@auto-2023-11-27_00-00\njupiter/syncthing-data@auto-2023-11-28_00-00\njupiter/syncthing-data@auto-2023-11-29_00-00\njupiter/syncthing-data@auto-2023-11-30_00-00\njupiter/syncthing-data@auto-2023-12-01_00-00\njupiter/syncthing-data@auto-2023-12-02_00-00\njupiter/syncthing-data@auto-2023-12-03_00-00\njupiter/syncthing-data@auto-2023-12-04_00-00\njupiter/syncthing-data@auto-2023-12-05_00-00\njupiter/syncthing-data@auto-2023-12-06_00-00\njupiter/syncthing-data@auto-2023-12-07_00-00\njupiter/syncthing-data@auto-2023-12-08_00-00\njupiter/syncthing-data@auto-2023-12-09_00-00\njupiter/syncthing-data@auto-2023-12-10_00-00\njupiter/syncthing-data@auto-2023-12-11_00-00\njupiter/syncthing-data@auto-2023-12-12_00-00\njupiter/syncthing-data@auto-2023-12-13_00-00\njupiter/syncthing-data@auto-2023-12-14_00-00\njupiter/syncthing-data@auto-2023-12-15_00-00\njupiter/syncthing-data@auto-2023-12-16_00-00\njupiter/syncthing-data@auto-2023-12-17_00-00\njupiter/syncthing-data@auto-2023-12-18_00-00\njupiter/syncthing-data@auto-2023-12-19_00-00\njupiter/syncthing-data@auto-2023-12-20_00-00\njupiter/syncthing-data@auto-2023-12-21_00-00\njupiter/syncthing-data@auto-2023-12-22_00-00\njupiter/syncthing-data@auto-2023-12-23_00-00\njupiter/syncthing-data@auto-2023-12-24_00-00\njupiter/syncthing-data@auto-2023-12-25_00-00\njupiter/syncthing-data@auto-2023-12-26_00-00\njupiter/syncthing-data@auto-2023-12-27_00-03\njupiter/syncthing-data@auto-2023-12-28_00-03\njupiter/syncthing-data@auto-2023-12-29_00-03\njupiter/syncthing-data@auto-2023-12-30_00-03\njupiter/syncthing-data@auto-2023-12-31_00-03\njupiter/syncthing-data@auto-2024-01-01_00-03\njupiter/syncthing-data@auto-2024-01-02_00-03\njupiter/syncthing-data@auto-2024-01-03_00-03\njupiter/syncthing-data@auto-2024-01-04_00-03\njupiter/syncthing-data@auto-2024-01-05_00-03\njupiter/syncthing-data@auto-2024-01-06_00-03\njupiter/syncthing-data@auto-2024-01-07_00-03\njupiter/syncthing-data@auto-2024-01-08_00-03\njupiter/syncthing-data@auto-2024-01-09_00-03\njupiter/syncthing-data@auto-2024-01-10_00-03\njupiter/syncthing-data@auto-2024-01-11_00-03\njupiter/syncthing-data@auto-2024-01-12_00-03\njupiter/syncthing-data@auto-2024-01-13_00-03\njupiter/syncthing-data@auto-2024-01-14_00-03\njupiter/syncthing-data@auto-2024-01-15_00-03\njupiter/syncthing-data@auto-2024-01-16_00-03\njupiter/syncthing-data@auto-2024-01-17_00-03\njupiter/syncthing-data@auto-2024-01-18_00-03\njupiter/syncthing-data@manual-2023-11-20_17-25_beforededup\n'

For some reason, running the replication task for scanned-documents triggered a zfs list for the unrelated dataset syncthing-data. I verified this during two more manual runs of the scanned-documents replication task. After I opened the snapshot task for scanned-documents, unset Recursive and set it again, that "connection" seemed to disappear. I read somewhere that the snapshot tasks need to be set to recursive for the remote retention policy to work.

Code:

[2024/01/19 10:29:31] DEBUG [IoThread_15] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:239] Connecting...

[2024/01/19 10:29:31] DEBUG [IoThread_15] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:239] [async_exec:241] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/syncthing-data'] with sudo=False

[2024/01/19 10:29:31] DEBUG [IoThread_15] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:239] [async_exec:241] Reading stdout

[2024/01/19 10:29:31] DEBUG [IoThread_15] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:239] [async_exec:241] Waiting for exit status

[2024/01/19 10:29:31] DEBUG [IoThread_15] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:239] [async_exec:241] Success: 'jupiter/syncthing-data@auto-2023-10-21_21-00\njupiter/syncthing-data@auto-2023-10-22_21-00\njupiter/syncthing-data@auto-2023-10-23_21-00\njupiter/syncthing-data@auto-2023-10-24_21-00\njupiter/syncthing-data@auto-2023-10-25_21-00\njupiter/syncthing-data@auto-2023-10-27_21-00\njupiter/syncthing-data@auto-2023-10-28_21-00\njupiter/syncthing-data@auto-2023-10-29_21-00\njupiter/syncthing-data@auto-2023-10-30_21-00\njupiter/syncthing-data@auto-2023-10-31_21-00\njupiter/syncthing-data@auto-2023-11-01_21-00\njupiter/syncthing-data@auto-2023-11-02_21-00\njupiter/syncthing-data@auto-2023-11-03_21-00\njupiter/syncthing-data@auto-2023-11-04_21-00\njupiter/syncthing-data@auto-2023-11-05_21-00\njupiter/syncthing-data@auto-2023-11-06_21-00\njupiter/syncthing-data@auto-2023-11-07_21-00\njupiter/syncthing-data@auto-2023-11-08_21-00\njupiter/syncthing-data@auto-2023-11-11_21-00\njupiter/syncthing-data@auto-2023-11-13_21-00\njupiter/syncthing-data@auto-2023-11-14_21-00\njupiter/syncthing-data@auto-2023-11-15_21-00\njupiter/syncthing-data@auto-2023-11-16_21-00\njupiter/syncthing-data@auto-2023-11-17_21-00\njupiter/syncthing-data@auto-2023-11-18_21-00\njupiter/syncthing-data@auto-2023-11-19_21-00\njupiter/syncthing-data@auto-2023-11-20_21-00\njupiter/syncthing-data@auto-2023-11-21_21-00\njupiter/syncthing-data@auto-2023-11-22_21-00\njupiter/syncthing-data@auto-2023-11-23_21-00\njupiter/syncthing-data@auto-2023-11-24_21-00\njupiter/syncthing-data@auto-2023-11-25_21-00\njupiter/syncthing-data@auto-2023-11-26_21-00\njupiter/syncthing-data@auto-2023-11-27_00-00\njupiter/syncthing-data@auto-2023-11-28_00-00\njupiter/syncthing-data@auto-2023-11-29_00-00\njupiter/syncthing-data@auto-2023-11-30_00-00\njupiter/syncthing-data@auto-2023-12-01_00-00\njupiter/syncthing-data@auto-2023-12-02_00-00\njupiter/syncthing-data@auto-2023-12-03_00-00\njupiter/syncthing-data@auto-2023-12-04_00-00\njupiter/syncthing-data@auto-2023-12-05_00-00\njupiter/syncthing-data@auto-2023-12-06_00-00\njupiter/syncthing-data@auto-2023-12-07_00-00\njupiter/syncthing-data@auto-2023-12-08_00-00\njupiter/syncthing-data@auto-2023-12-09_00-00\njupiter/syncthing-data@auto-2023-12-10_00-00\njupiter/syncthing-data@auto-2023-12-11_00-00\njupiter/syncthing-data@auto-2023-12-12_00-00\njupiter/syncthing-data@auto-2023-12-13_00-00\njupiter/syncthing-data@auto-2023-12-14_00-00\njupiter/syncthing-data@auto-2023-12-15_00-00\njupiter/syncthing-data@auto-2023-12-16_00-00\njupiter/syncthing-data@auto-2023-12-17_00-00\njupiter/syncthing-data@auto-2023-12-18_00-00\njupiter/syncthing-data@auto-2023-12-19_00-00\njupiter/syncthing-data@auto-2023-12-20_00-00\njupiter/syncthing-data@auto-2023-12-21_00-00\njupiter/syncthing-data@auto-2023-12-22_00-00\njupiter/syncthing-data@auto-2023-12-23_00-00\njupiter/syncthing-data@auto-2023-12-24_00-00\njupiter/syncthing-data@auto-2023-12-25_00-00\njupiter/syncthing-data@auto-2023-12-26_00-00\njupiter/syncthing-data@auto-2023-12-27_00-03\njupiter/syncthing-data@auto-2023-12-28_00-03\njupiter/syncthing-data@auto-2023-12-29_00-03\njupiter/syncthing-data@auto-2023-12-30_00-03\njupiter/syncthing-data@auto-2023-12-31_00-03\njupiter/syncthing-data@auto-2024-01-01_00-03\njupiter/syncthing-data@auto-2024-01-02_00-03\njupiter/syncthing-data@auto-2024-01-03_00-03\njupiter/syncthing-data@auto-2024-01-04_00-03\njupiter/syncthing-data@auto-2024-01-05_00-03\njupiter/syncthing-data@auto-2024-01-06_00-03\njupiter/syncthing-data@auto-2024-01-07_00-03\njupiter/syncthing-data@auto-2024-01-08_00-03\njupiter/syncthing-data@auto-2024-01-09_00-03\njupiter/syncthing-data@auto-2024-01-10_00-03\njupiter/syncthing-data@auto-2024-01-11_00-03\njupiter/syncthing-data@auto-2024-01-12_00-03\njupiter/syncthing-data@auto-2024-01-13_00-03\njupiter/syncthing-data@auto-2024-01-14_00-03\njupiter/syncthing-data@auto-2024-01-15_00-03\njupiter/syncthing-data@auto-2024-01-16_00-03\njupiter/syncthing-data@auto-2024-01-17_00-03\njupiter/syncthing-data@auto-2024-01-18_00-03\njupiter/syncthing-data@manual-2023-11-20_17-25_beforededup\n'

[2024/01/19 10:33:56] DEBUG [IoThread_12] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:240] Connecting...

[2024/01/19 10:33:56] DEBUG [IoThread_12] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:240] [async_exec:242] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/scanned-documents'] with sudo=False

[2024/01/19 10:33:56] DEBUG [IoThread_12] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:240] [async_exec:242] Reading stdout

[2024/01/19 10:33:56] DEBUG [IoThread_12] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:240] [async_exec:242] Waiting for exit status

[2024/01/19 10:33:56] DEBUG [IoThread_12] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:240] [async_exec:242] Success: 'jupiter/scanned-documents@auto-2024-01-18_22-43\njupiter/scanned-documents@auto-2024-01-18_22-47\n'

[2024/01/19 10:34:05] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:241] Connecting...

[2024/01/19 10:34:06] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:241] [async_exec:243] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/syncthing-data'] with sudo=False

[2024/01/19 10:34:06] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:241] [async_exec:243] Reading stdout

[2024/01/19 10:34:06] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:241] [async_exec:243] Waiting for exit status

[2024/01/19 10:34:06] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:241] [async_exec:243] Success: 'jupiter/syncthing-data@auto-2023-10-21_21-00\njupiter/syncthing-data@auto-2023-10-22_21-00\njupiter/syncthing-data@auto-2023-10-23_21-00\njupiter/syncthing-data@auto-2023-10-24_21-00\njupiter/syncthing-data@auto-2023-10-25_21-00\njupiter/syncthing-data@auto-2023-10-27_21-00\njupiter/syncthing-data@auto-2023-10-28_21-00\njupiter/syncthing-data@auto-2023-10-29_21-00\njupiter/syncthing-data@auto-2023-10-30_21-00\njupiter/syncthing-data@auto-2023-10-31_21-00\njupiter/syncthing-data@auto-2023-11-01_21-00\njupiter/syncthing-data@auto-2023-11-02_21-00\njupiter/syncthing-data@auto-2023-11-03_21-00\njupiter/syncthing-data@auto-2023-11-04_21-00\njupiter/syncthing-data@auto-2023-11-05_21-00\njupiter/syncthing-data@auto-2023-11-06_21-00\njupiter/syncthing-data@auto-2023-11-07_21-00\njupiter/syncthing-data@auto-2023-11-08_21-00\njupiter/syncthing-data@auto-2023-11-11_21-00\njupiter/syncthing-data@auto-2023-11-13_21-00\njupiter/syncthing-data@auto-2023-11-14_21-00\njupiter/syncthing-data@auto-2023-11-15_21-00\njupiter/syncthing-data@auto-2023-11-16_21-00\njupiter/syncthing-data@auto-2023-11-17_21-00\njupiter/syncthing-data@auto-2023-11-18_21-00\njupiter/syncthing-data@auto-2023-11-19_21-00\njupiter/syncthing-data@auto-2023-11-20_21-00\njupiter/syncthing-data@auto-2023-11-21_21-00\njupiter/syncthing-data@auto-2023-11-22_21-00\njupiter/syncthing-data@auto-2023-11-23_21-00\njupiter/syncthing-data@auto-2023-11-24_21-00\njupiter/syncthing-data@auto-2023-11-25_21-00\njupiter/syncthing-data@auto-2023-11-26_21-00\njupiter/syncthing-data@auto-2023-11-27_00-00\njupiter/syncthing-data@auto-2023-11-28_00-00\njupiter/syncthing-data@auto-2023-11-29_00-00\njupiter/syncthing-data@auto-2023-11-30_00-00\njupiter/syncthing-data@auto-2023-12-01_00-00\njupiter/syncthing-data@auto-2023-12-02_00-00\njupiter/syncthing-data@auto-2023-12-03_00-00\njupiter/syncthing-data@auto-2023-12-04_00-00\njupiter/syncthing-data@auto-2023-12-05_00-00\njupiter/syncthing-data@auto-2023-12-06_00-00\njupiter/syncthing-data@auto-2023-12-07_00-00\njupiter/syncthing-data@auto-2023-12-08_00-00\njupiter/syncthing-data@auto-2023-12-09_00-00\njupiter/syncthing-data@auto-2023-12-10_00-00\njupiter/syncthing-data@auto-2023-12-11_00-00\njupiter/syncthing-data@auto-2023-12-12_00-00\njupiter/syncthing-data@auto-2023-12-13_00-00\njupiter/syncthing-data@auto-2023-12-14_00-00\njupiter/syncthing-data@auto-2023-12-15_00-00\njupiter/syncthing-data@auto-2023-12-16_00-00\njupiter/syncthing-data@auto-2023-12-17_00-00\njupiter/syncthing-data@auto-2023-12-18_00-00\njupiter/syncthing-data@auto-2023-12-19_00-00\njupiter/syncthing-data@auto-2023-12-20_00-00\njupiter/syncthing-data@auto-2023-12-21_00-00\njupiter/syncthing-data@auto-2023-12-22_00-00\njupiter/syncthing-data@auto-2023-12-23_00-00\njupiter/syncthing-data@auto-2023-12-24_00-00\njupiter/syncthing-data@auto-2023-12-25_00-00\njupiter/syncthing-data@auto-2023-12-26_00-00\njupiter/syncthing-data@auto-2023-12-27_00-03\njupiter/syncthing-data@auto-2023-12-28_00-03\njupiter/syncthing-data@auto-2023-12-29_00-03\njupiter/syncthing-data@auto-2023-12-30_00-03\njupiter/syncthing-data@auto-2023-12-31_00-03\njupiter/syncthing-data@auto-2024-01-01_00-03\njupiter/syncthing-data@auto-2024-01-02_00-03\njupiter/syncthing-data@auto-2024-01-03_00-03\njupiter/syncthing-data@auto-2024-01-04_00-03\njupiter/syncthing-data@auto-2024-01-05_00-03\njupiter/syncthing-data@auto-2024-01-06_00-03\njupiter/syncthing-data@auto-2024-01-07_00-03\njupiter/syncthing-data@auto-2024-01-08_00-03\njupiter/syncthing-data@auto-2024-01-09_00-03\njupiter/syncthing-data@auto-2024-01-10_00-03\njupiter/syncthing-data@auto-2024-01-11_00-03\njupiter/syncthing-data@auto-2024-01-12_00-03\njupiter/syncthing-data@auto-2024-01-13_00-03\njupiter/syncthing-data@auto-2024-01-14_00-03\njupiter/syncthing-data@auto-2024-01-15_00-03\njupiter/syncthing-data@auto-2024-01-16_00-03\njupiter/syncthing-data@auto-2024-01-17_00-03\njupiter/syncthing-data@auto-2024-01-18_00-03\njupiter/syncthing-data@manual-2023-11-20_17-25_beforededup\n'
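To check across several runs which datasets zettarepl actually listed on the destination, the DEBUG "Running [...]" lines can be filtered, and the names from the "Success" output split into dataset and snapshot. A minimal sketch assuming the log format above; listed_datasets and parse_snapshot_listing are hypothetical helpers. It only shows what was listed, not why zettarepl decided to list it.

```python
import ast
import re

# Assumed format: "[<timestamp>] DEBUG ... Running ['zfs', 'list', ...] with sudo=<bool>"
RUNNING_RE = re.compile(r"^\[(?P<ts>[^\]]+)\] DEBUG .*? Running (?P<argv>\[.*\]) with sudo=")


def listed_datasets(lines):
    """Yield (timestamp, dataset) for every 'zfs list -t snapshot ...' call found in the log."""
    for line in lines:
        match = RUNNING_RE.match(line.strip())
        if match is None:
            continue
        argv = ast.literal_eval(match.group("argv"))  # argv is logged as a Python list repr
        if argv[:2] == ["zfs", "list"] and "snapshot" in argv:
            yield match.group("ts"), argv[-1]  # the dataset is the last argument


def parse_snapshot_listing(stdout: str):
    """Split 'zfs list -H -o name' output into (dataset, snapshot) pairs."""
    return [tuple(name.split("@", 1)) for name in stdout.splitlines() if name]


SAMPLE_LOG = """\
[2024/01/18 22:47:45] DEBUG [IoThread_20] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:237] [async_exec:239] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/scanned-documents'] with sudo=False
[2024/01/18 22:48:18] DEBUG [IoThread_1] [zettarepl.transport.base_ssh] [ssh:admin@192.168.178.143] [shell:238] [async_exec:240] Running ['zfs', 'list', '-t', 'snapshot', '-H', '-o', 'name', '-s', 'name', '-d', '1', 'jupiter/syncthing-data'] with sudo=False
"""

if __name__ == "__main__":
    for ts, dataset in listed_datasets(SAMPLE_LOG.splitlines()):
        print(ts, dataset)
    print(parse_snapshot_listing(
        "jupiter/scanned-documents@auto-2024-01-18_22-43\njupiter/scanned-documents@auto-2024-01-18_22-47\n"
    ))
```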

I'm also wondering whether the DEBUG entries are any reason for concern, but I doubt it.

The following was triggered automatically; I was asleep at the time ;)

Code:

[2024/01/20 03:27:10] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: [('neptune/test-dataset', 'auto-2024-01-20_01-00')]

[2024/01/20 03:27:10] INFO [retention] [zettarepl.snapshot.destroy] On <Shell(<LocalTransport()>)> for dataset 'neptune/test-dataset' destroying snapshots {'auto-2024-01-20_01-00'}

[2024/01/20 03:27:11] INFO [Thread-1945] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/01/20 03:27:11] INFO [Thread-1945] [zettarepl.paramiko.retention] Authentication (publickey) successful!

[2024/01/20 03:27:12] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(admin@192.168.178.143)> destroying snapshots: [('jupiter/test-dataset', 'auto-2024-01-20_01-00')]

[2024/01/20 03:27:12] INFO [retention] [zettarepl.snapshot.destroy] On <Shell(<SSH Transport(admin@192.168.178.143)>)> for dataset 'jupiter/test-dataset' destroying snapshots {'auto-2024-01-20_01-00'}

[2024/01/20 03:27:13] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []

I would assume none of this is reason for concern and that zettarepl just occasionally performs some tasks that are not necessarily linked to the snapshot or replication schedules.

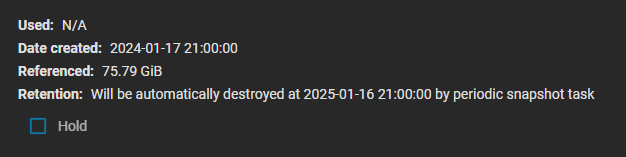

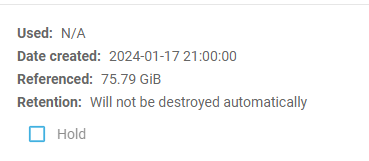

3) I also discovered many snapshots on the source that will never be deleted. That made me curious, because I was certain I had set a retention policy before. It turns out that when you delete the snapshot task, the retention of its snapshots automatically changes to "Will not be destroyed automatically."

Before, it said "Will be automatically destroyed at XXX by periodic snapshot task." Maybe I'm a stickler for wording, but "by periodic snapshot task" implied to me that there is a periodic snapshot task and that it will destroy the snapshot. Apparently you need to read it as: "Will be automatically destroyed at XXX by the specific snapshot task that created this snapshot."
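A rough model of this behaviour, to make the wording concrete. It assumes that a snapshot is attributed to a periodic snapshot task when it sits on that task's dataset and its name parses with the task's naming schema; that rule is a simplification for illustration, and zettarepl's real matching may check more (for example the schedule or recursive children). SnapshotTask, owned_by and retention_label are hypothetical names; the two label strings are the GUI texts quoted above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SnapshotTask:
    """Hypothetical stand-in for a periodic snapshot task definition."""
    dataset: str
    naming_schema: str
    lifetime: timedelta


def owned_by(task: SnapshotTask, dataset: str, name: str) -> bool:
    """Assumed ownership rule: same dataset and the name parses with the task's schema."""
    if dataset != task.dataset:
        return False
    try:
        datetime.strptime(name, task.naming_schema)
    except ValueError:
        return False  # e.g. manual snapshots with a different naming pattern
    return True


def retention_label(tasks: list[SnapshotTask], dataset: str, name: str) -> str:
    """Mimic the GUI retention text for one snapshot, given the tasks that currently exist."""
    for task in tasks:
        if owned_by(task, dataset, name):
            expiry = datetime.strptime(name, task.naming_schema) + task.lifetime
            return f"Will be automatically destroyed at {expiry:%Y-%m-%d %H:%M} by periodic snapshot task"
    return "Will not be destroyed automatically"


task = SnapshotTask("neptune/test-dataset", "auto-%Y-%m-%d_%H-%M", timedelta(hours=2))
print(retention_label([task], "neptune/test-dataset", "auto-2024-01-20_04-00"))
print(retention_label([], "neptune/test-dataset", "auto-2024-01-20_04-00"))  # task deleted
```

Under this model, deleting the task is enough to flip the label to "Will not be destroyed automatically", which matches what the GUI showed.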

I checked the documentation to see whether I had missed that, but I couldn't find any mention of it. I did discover an interesting bit, though:

| Setting | Description |
| --- | --- |
| Snapshot Lifetime | Enter the length of time to retain the snapshot on this system using a numeric value and a single lowercase letter for units. Examples: 3h is three hours, 1m is one month, and 1y is one year. Does not accept minute values. After the time expires, the snapshot is removed. Snapshots replicated to other systems are not affected. |

I would like to see a clarification here that this is not universally true: replicated snapshots will be affected when you set the replication task's retention policy to "Same as Source". But one could argue that whoever sets this knows what they are doing anyway. Also worth mentioning: even manually deleted snapshots, which never had a retention time, get synchronized, i.e. they are also deleted on the replication target.
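The "Same as Source" behaviour seen in the pre-retention log line can be modelled as a set difference: destination snapshots that follow the naming schema but no longer exist on the source get removed. This is a simplified sketch under that assumption, not zettarepl's actual code; the function name and the sample sets (loosely based on the test-dataset log, plus one hypothetical manual snapshot) are illustrative.

```python
from datetime import datetime


def same_as_source_deletions(source: set[str], destination: set[str], naming_schema: str) -> set[str]:
    """Destination snapshots a 'Same as Source' policy would remove (simplified model)."""

    def follows_schema(name: str) -> bool:
        try:
            datetime.strptime(name, naming_schema)
        except ValueError:
            return False
        return True

    # Assumption: snapshots whose names do not follow the schema are left alone.
    return {name for name in destination if follows_schema(name) and name not in source}


source = {"auto-2024-01-20_04-00", "auto-2024-01-20_08-00"}
destination = {
    "auto-2023-12-22_22-44",
    "auto-2023-12-23_22-44",
    "auto-2023-12-24_22-44",
    "auto-2024-01-20_04-00",
    "manual-2023-11-20_17-25_beforededup",  # hypothetical manual snapshot on the destination
}
print(sorted(same_as_source_deletions(source, destination, "auto-%Y-%m-%d_%H-%M")))
# ['auto-2023-12-22_22-44', 'auto-2023-12-23_22-44', 'auto-2023-12-24_22-44']
```

The result matches the three jupiter/test-dataset snapshots listed in the "Pre-retention destroying snapshots" line of the first log.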

Thank you for your time and hopefully you can enlighten me on some of the points I stumbled upon.

Have a nice weekend!