I have been seeing repeated QEMU/KVM crashes in my VM when it is under heavy I/O load.

All I see in the logs is the message:

failed to set up stack guard page: Cannot allocate memory

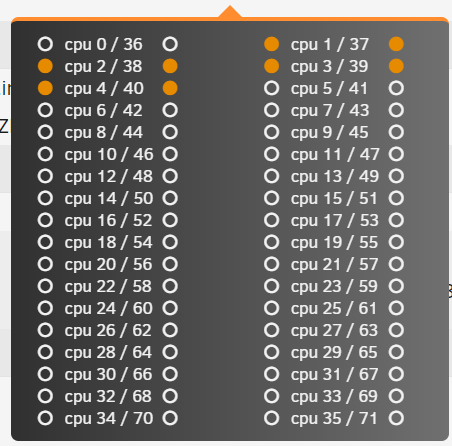

This is a large VM that maps to all of the CPU resources, and a good chunk (but nowhere near all) of the RAM.

This is on an up-to-date Cobia release.

Any way to debug what's going on?

All the logs I see are:

Code:

failed to set up stack guard page: Cannot allocate memory

2024-03-19 19:16:55.941+0000: shutting down, reason=crashed

2024-03-19 19:38:04.915+0000: starting up libvirt version: 9.0.0, package: 9.0.0-4 (Debian), qemu version: 7.2.2Debian 1:7.2+dfsg-7, kernel: 6.1.74-production+truenas, hostname: truenas.local

LC_ALL=C \

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin \

HOME=/var/lib/libvirt/qemu/domain-12-1_vm \

XDG_DATA_HOME=/var/lib/libvirt/qemu/domain-12-1_vm/.local/share \

XDG_CACHE_HOME=/var/lib/libvirt/qemu/domain-12-1_vm/.cache \

XDG_CONFIG_HOME=/var/lib/libvirt/qemu/domain-12-1_vm/.config \

/usr/bin/qemu-system-x86_64 \

-name guest=1_vm,debug-threads=on \

-S \

-object '{"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu/domain-12-1_vm/master-key.aes"}' \

-machine pc-i440fx-7.2,usb=off,dump-guest-core=off,memory-backend=pc.ram \

-accel kvm \

-cpu host,migratable=on,host-cache-info=on,l3-cache=off \

-m 131072 \

-object '{"qom-type":"memory-backend-ram","id":"pc.ram","size":137438953472}' \

-overcommit mem-lock=on \

-smp 48,sockets=2,dies=1,cores=12,threads=2 \

-uuid 5a756db6-26f8-4b43-bdf4-e2fe0ddd3bd5 \

-no-user-config \

-nodefaults \

-chardev socket,id=charmonitor,fd=34,server=on,wait=off \

-mon chardev=charmonitor,id=monitor,mode=control \

-rtc base=localtime \

-no-shutdown \

-boot strict=on \

-device '{"driver":"nec-usb-xhci","id":"usb","bus":"pci.0","addr":"0x4"}' \

-device '{"driver":"ahci","id":"sata0","bus":"pci.0","addr":"0x5"}' \

-device '{"driver":"virtio-serial-pci","id":"virtio-serial0","bus":"pci.0","addr":"0x6"}' \

-blockdev '{"driver":"host_device","filename":"/dev/zvol/primary/vm-s5trh3","aio":"threads","node-name":"libvirt-2-storage","cache":{"direct":true,"no-flush":false},"auto-read-only":true,"discard":"unmap"}' \

-blockdev '{"node-name":"libvirt-2-format","read-only":false,"cache":{"direct":true,"no-flush":false},"driver":"raw","file":"libvirt-2-storage"}' \

-device '{"driver":"ide-hd","bus":"sata0.0","drive":"libvirt-2-format","id":"sata0-0-0","bootindex":1,"write-cache":"on"}' \

-blockdev '{"driver":"host_device","filename":"/dev/zvol/primary/vm-store","aio":"threads","node-name":"libvirt-1-storage","cache":{"direct":true,"no-flush":false},"auto-read-only":true,"discard":"unmap"}' \

-blockdev '{"node-name":"libvirt-1-format","read-only":false,"cache":{"direct":true,"no-flush":false},"driver":"raw","file":"libvirt-1-storage"}' \

-device '{"driver":"virtio-blk-pci","bus":"pci.0","addr":"0x7","drive":"libvirt-1-format","id":"virtio-disk0","bootindex":2,"write-cache":"on"}' \

-netdev '{"type":"tap","fd":"35","vhost":true,"vhostfd":"37","id":"hostnet0"}' \

-device '{"driver":"virtio-net-pci","netdev":"hostnet0","id":"net0","mac":"00:a0:98:0e:eb:f8","bus":"pci.0","addr":"0x3"}' \

-chardev pty,id=charserial0 \

-device '{"driver":"isa-serial","chardev":"charserial0","id":"serial0","index":0}' \

-chardev spicevmc,id=charchannel0,name=vdagent \

-device '{"driver":"virtserialport","bus":"virtio-serial0.0","nr":1,"chardev":"charchannel0","id":"channel0","name":"com.redhat.spice.0"}' \

-chardev socket,id=charchannel1,fd=33,server=on,wait=off \

-device '{"driver":"virtserialport","bus":"virtio-serial0.0","nr":2,"chardev":"charchannel1","id":"channel1","name":"org.qemu.guest_agent.0"}' \

-device '{"driver":"usb-tablet","id":"input0","bus":"usb.0","port":"1"}' \

-audiodev '{"id":"audio1","driver":"spice"}' \

-spice port=5900,addr=0.0.0.0,disable-ticketing=on,seamless-migration=on \

-device '{"driver":"qxl-vga","id":"video0","max_outputs":1,"ram_size":67108864,"vram_size":67108864,"vram64_size_mb":0,"vgamem_mb":16,"xres":1024,"yres":768,"bus":"pci.0","addr":"0x2"}' \

-device '{"driver":"vfio-pci","host":"0000:05:00.0","id":"hostdev0","bus":"pci.0","addr":"0x8"}' \

-device '{"driver":"virtio-balloon-pci","id":"balloon0","deflate-on-oom":true,"bus":"pci.0","addr":"0x9"}' \

-sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \

-msg timestamp=on

char device redirected to /dev/pts/1 (label charserial0)

failed to set up stack guard page: Cannot allocate memory

2024-03-21 08:44:51.274+0000: shutting down, reason=crashed

As far as I can tell, the stack guard page error leads to the crash, as I don't see it in the log of a running VM instance, only after it crashes.
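
One check that might narrow this down. It assumes the "Cannot allocate memory" comes from the per-process memory-mapping limit (`vm.max_map_count`) rather than the host actually running out of RAM. That is only a guess; nothing in the log confirms it. The sketch below counts the mappings of a running process from `/proc/<pid>/maps` and compares them with the limit. The function names and the `proc_root` parameter are made up for illustration, and the PID of the qemu process has to be looked up separately.

```python
from __future__ import annotations

from pathlib import Path


def count_mappings(maps_text: str) -> int:
    """Count memory mappings: /proc/<pid>/maps has one non-empty line per mapping."""
    return sum(1 for line in maps_text.splitlines() if line.strip())


def mapping_usage(pid: int, proc_root: str = "/proc") -> tuple[int, int]:
    """Return (mappings in use by pid, vm.max_map_count limit).

    proc_root is a hypothetical parameter so the function can be pointed
    at a fake directory tree for testing; on a real host leave it as /proc.
    """
    root = Path(proc_root)
    used = count_mappings((root / str(pid) / "maps").read_text())
    limit = int((root / "sys" / "vm" / "max_map_count").read_text())
    return used, limit


def report(pid: int, proc_root: str = "/proc") -> str:
    """Format the usage as a one-line summary."""
    used, limit = mapping_usage(pid, proc_root)
    return f"pid {pid}: {used} of {limit} mappings in use ({used / limit:.1%})"
```

Running `print(report(<qemu pid>))` as root a few times while the VM is under I/O load would show whether the count climbs toward the limit before a crash. If it stays far below the limit, this theory is probably wrong and the cause is elsewhere.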