Shigure (Dabbler) · Joined: Sep 1, 2022 · Messages: 39

Applied the update yesterday, and two things popped up for one of my pools.

One is a failed SMART test at lifetime hour 11 (aborted due to a restart). From some searching, it seems this entry will eventually be flushed out by newer records as long as I keep using the drives long enough. The drives were brand new when I put them into the box.
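
The "flushed out by newer records" idea assumes the drive keeps its self-test results in a fixed-size circular log, so once the log is full each new test pushes the oldest entry out. A minimal sketch of that behavior with Python's `collections.deque`; the log capacity, hours, and status labels below are hypothetical and not read from a real drive:

```python
from collections import deque

# Hypothetical log capacity; a real drive has its own fixed size.
LOG_CAPACITY = 5

# A deque with maxlen silently drops the oldest entry when appending to a full log.
selftest_log = deque(maxlen=LOG_CAPACITY)

# The aborted test at lifetime hour 11 (status label is illustrative).
selftest_log.append((11, "aborted (restart)"))

# Later scheduled tests at hypothetical lifetime hours 100..500.
for hour in range(100, 600, 100):
    selftest_log.append((hour, "completed"))

still_present = any(status.startswith("aborted") for _, status in selftest_log)
print(still_present)  # → False
```

After enough newer tests, the aborted entry is no longer in the log, which is the behavior the post is relying on.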

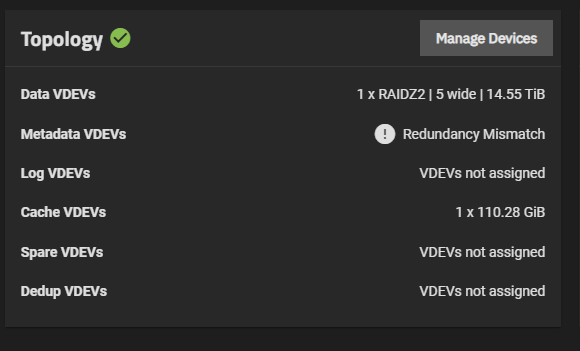

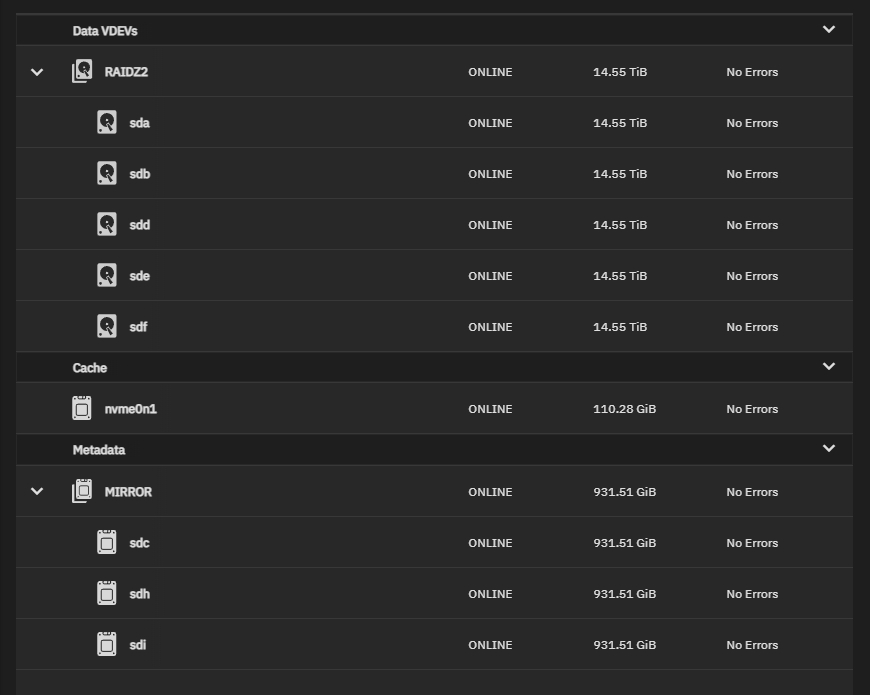

The other is the redundancy mismatch notice in the topology view. That pool has a 5-wide RAIDZ2 data vdev and a 3-way mirror metadata vdev (plus 1 spare, just in case, since I had an identical drive lying around). The RAID types differ, but I believe both vdevs can tolerate two simultaneous drive failures without losing data, and others here approved this layout when I put everything together (see screenshot).
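
The two-failure reasoning can be checked with simple arithmetic: a RAIDZ2 vdev survives the loss of any two drives regardless of its width, and an n-way mirror survives n - 1. A minimal sketch, using a hypothetical `tolerated_failures` helper (not a TrueNAS or ZFS API) and deliberately leaving the hot spare out of the count:

```python
def tolerated_failures(layout: str, width: int) -> int:
    """Hypothetical helper: drives one vdev can lose without data loss (spares not counted)."""
    if layout == "mirror":
        # An n-way mirror keeps data as long as one copy remains.
        return width - 1
    if layout.startswith("raidz"):
        # RAIDZ1/2/3 carry 1/2/3 drives' worth of parity; plain "raidz" means raidz1.
        parity = int(layout[len("raidz"):] or 1)
        if width <= parity:
            raise ValueError("RAIDZ width must exceed its parity level")
        return parity
    raise ValueError(f"unknown layout: {layout}")


# The pool described in the post: data and metadata vdevs.
pool = {
    "data": ("raidz2", 5),
    "metadata": ("mirror", 3),
}

for name, (layout, width) in pool.items():
    print(f"{name}: {layout}, {width} wide -> tolerates {tolerated_failures(layout, width)} failures")

# Losing any one vdev (including the metadata vdev) loses the pool, and in the worst case
# all failures land in the same vdev, so the guaranteed tolerance is the minimum.
print("pool tolerates", min(tolerated_failures(l, w) for l, w in pool.values()))
```

Both vdevs come out at two failures, so the differing RAID types alone do not make either vdev weaker than the other.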

Is there a way to clear or dismiss these two alerts, since neither is really an issue? If not, I can probably live with them, as long as I know my pool is safe.
