greg_905

Dabbler

- Joined: Jun 1, 2023

- Messages: 17

Hello,

I recently started a new gig and inherited a ZFS filesystem using TrueNAS CORE as the host OS. It is used as an archive / second copy of production data. The person who set it up put all the disks into a single vdev. I want to rebuild the vdev layout, but first I need to get the archive data on there onto two sets of tape before destroying the file system. To keep things short and not get into details, we write to this array all day long for days at a time, with brief interruptions.

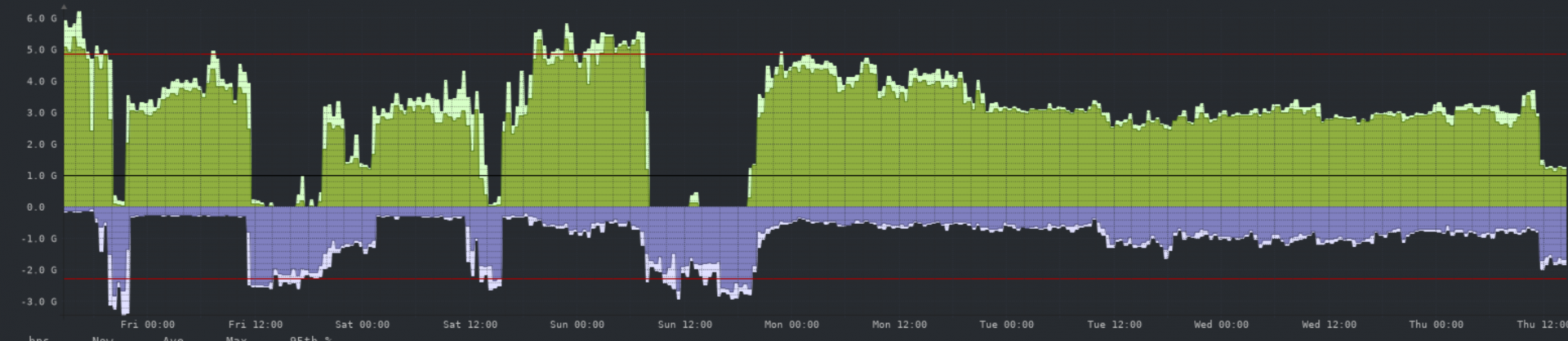

Any time we are writing to the array, read performance falls through the floor. For example, when we are not writing to the array, we see about 700 MB/s to the tape library over NFS (everything is NFS here). When we start writing to the array, that drops to 30 MB/s or lower. While the writes are heavier than the reads, I didn't expect them to hit read performance this hard. The graph shows the trend: green is traffic into the array, blue is traffic out to the backup server.
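To put the drop in perspective for the tape job, here is a rough back-of-the-envelope sketch. It assumes the figures above are decimal megabytes per second and that 1 TB is 10**12 bytes; the function name `hours_per_terabyte` is made up for illustration, and the total amount of data to move is not stated here, so the result is given per terabyte only.

```python
def hours_per_terabyte(rate_mb_per_s: float) -> float:
    """Hours needed to move 1 TB (10**12 bytes) at a rate given in MB/s (10**6 bytes/s)."""
    seconds = 1e12 / (rate_mb_per_s * 1e6)
    return seconds / 3600


# Rates taken from the post; units are assumed to be decimal MB/s.
idle_rate = 700.0  # read rate to tape while nothing is writing to the array
busy_rate = 30.0   # read rate to tape while writes are in progress

slowdown = idle_rate / busy_rate

print(f"slowdown while writing: {slowdown:.1f}x")
print(f"hours per TB, array idle: {hours_per_terabyte(idle_rate):.2f}")
print(f"hours per TB, array busy: {hours_per_terabyte(busy_rate):.2f}")
```

In other words, reads to tape are roughly 23 times slower while writes are running, which is about 0.4 hours per terabyte versus a bit over 9 hours per terabyte.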

Is this expected behaviour, or does it at least seem normal? It's been a while since I administered a ZFS file system, and I don't recall this being a thing. Our other in-house file servers (an Isilon and a Qumulo) can read and write without this drastic a hit in either direction.

mb: SSG-640SP-E1CR60 X12 Single Node 60-bay Supermicro Storage Server

cpu: Intel Xeon Gold 6326

ram: 128 GB

HDs: 58 x 18TB (in one vdev, RAIDZ3), 2 x hot spares; 2 x 1.6TB NVMe for cache, 1 x the same for logs

system drives: 2xmirror Micron 5300 PRO 240GB

NIC: Intel X710 dual 10Gbit
