Hi Guys,

Let me preface this with the fact that I am not using any L2ARC or dedicated ZIL (SLOG) device. I have a pretty well-spec'd system (IMHO) that I am not looking to replace, but I am completely open to adding hardware to what I have.

- Supermicro X10 w/ i3-4130T, 32GB ECC

- Lenovo SA120 DAS (6Gb/s SAS), w/ IT-flashed LSI 12Gb/s card

- 12x 2TB 7200RPM drives arranged as a single RAID-Z2

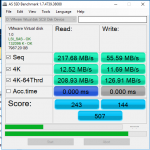

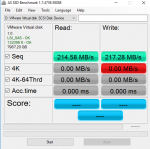

This is mostly a storage backend for media, which is why I didn't go for mirrors: I needed capacity more than performance. I only have two gigabit links dedicated to iSCSI because I know that my limiting factor will be my RAID configuration. As a single Z2, I will only get roughly the random I/O performance of a single disk. I attached a screenshot of the disk benchmark, taken after running a workload overnight, because I wanted to present a picture of a pool that is not completely fresh.

However, I have gotten into BURSTcoin mining to take advantage of some 12TB of free space, just for fun. The workload is that you generate large samples of data, and the "mining" is reading that data back, looking for matching blocks. So you are reading multiple 2TB files as fast as possible before someone else finds a matching block and lets the network know.

I seem to have great performance, but as soon as whatever buffer fills up, it turns to crud. What do I mean? Well, when I have "burstable" types of data, such as writing this random workload, I get what I would expect regarding performance (roughly 80MB/sec writes). However, when doing the reads I get maybe 10-15MB/sec tops. When doing disk benchmarks, I also max out my dual-gigabit iSCSI bandwidth, so from a configuration perspective I am at a bit of a loss: ESX looks good, and performance looks OK for a low-power workload.

When I am doing 4k benchmarks, I end up around 10MB/sec, which is also not what I would expect but seems in line with the performance I am getting in the application. Oh yeah, the iSCSI is terminated in ESX and presented to a single VM as an attached disk.
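
To put the 4k numbers in perspective, here is a rough conversion from throughput to operations per second. This is only a sketch: it assumes the benchmark reports decimal megabytes and uses 4 KiB blocks, and the function name is just something made up for illustration.

```python
def iops_from_throughput(mb_per_sec: float, block_kib: float = 4.0) -> float:
    """Convert a throughput figure into I/O operations per second.

    Assumes decimal megabytes (1 MB = 1,000,000 bytes) and a block
    size given in KiB (1 KiB = 1024 bytes).
    """
    bytes_per_sec = mb_per_sec * 1_000_000
    return bytes_per_sec / (block_kib * 1024)


# The 10-15MB/sec range seen in the 4k benchmarks
for mb in (10, 15):
    print(f"{mb} MB/s at 4 KiB is about {iops_from_throughput(mb):.0f} IOPS")
```

Under those assumptions, 10-15MB/sec of 4k I/O works out to roughly 2,400 to 3,700 operations per second.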

When I reboot everything and clear the caches in FreeNAS, I get "ideal" benchmarks of approx. 200MB/sec reads and writes, but the same 10-15MB/sec 4k performance.

Looking at some statistics, my pool is still under 80% utilized, my ARC is full at 27.5GB, and my hit ratio is at 93% under the workload. There is only one VM attached to these disks.

So my questions are:

- Is this normal for a 12-drive RAID-Z2?

- From what I am reading, adding a dedicated ZIL (SLOG) or L2ARC wouldn't really help here, true?

- My RAM seems to be spot on for a total of 24TB unformatted disk, 16TB usable. Is that right?

- What can I do to improve my 4k reads and writes? Again, am I stuck due to the inherent speed of the pool?

- I understand that latency is a killer and Ethernet has a huge chunk of overhead. Aside from going to 10G (which I suspect would have the same latency, since it too is Ethernet), can I do anything to improve my latency? In Windows, I get at most 500-600ms latency when under load.

- What hardware can I throw at this to make it better? Is there anything within reason or should I consider a new build dedicated for this?
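
For reference, here is the back-of-the-envelope capacity math for this pool, as a minimal sketch. It only subtracts parity drives and ignores ZFS metadata, padding, and the TB vs. TiB difference; the 80% figure is the commonly quoted fill guideline, used here as an assumption, and the function name is made up for illustration.

```python
def raidz_nominal_tb(drives: int, drive_tb: float, parity: int = 2) -> float:
    """Nominal data capacity of one RAID-Z vdev: (drives - parity) * size.

    Ignores metadata, allocation padding, and reserved space, so real
    usable space will be somewhat lower.
    """
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb


raw_tb = 12 * 2.0                       # 12x 2TB drives
nominal_tb = raidz_nominal_tb(12, 2.0)  # RAID-Z2: two drives' worth of parity
at_80_percent_tb = nominal_tb * 0.8     # assumed 80% fill guideline

print(f"raw: {raw_tb:.0f} TB, after parity: {nominal_tb:.0f} TB, "
      f"at 80% fill: {at_80_percent_tb:.0f} TB")
```

With these assumptions the pool is 24TB raw and 20TB after parity, and 16TB corresponds to filling that to 80%.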

Ultimately, my goal is to get another shelf and dedicate all of the disks as single-drive iSCSI LUNs back to ESX from FreeNAS.

Any advice is welcome!

Thanks!!
