DeletedUser122199

Hello. I am new to TrueNAS and to NAS in general. I believe I have set things up correctly, but I've run into a strange issue.

Hardware: repurposed Asustor Nimbustor 4 (AS5304T)

CPU: Intel Celeron J4105

RAM: 2x4GB 2400MHz

Boot drive: repurposed WD Blue 3D NAND M.2, 500 GB, in an external enclosure connected over USB-C to USB-A 3.x

Storage drives: 4x WD Red Pro 8TB (WD8003FFBX)

LAN: 2x 2.5 Gbit => 2x 2.5 Gbit switch => 10 Gbit PCIe card in PC
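For reference, the raw line rate of a single 2.5 Gbit link, before any protocol overhead, works out as:

$$
\frac{2.5\ \text{Gbit/s}}{8\ \text{bit/byte}} = 0.3125\ \text{GB/s} = 312.5\ \text{MB/s}
$$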

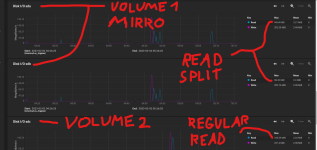

I have tried configuring the drives as RAID-Z1, RAID-Z2 and mirrors, but in every configuration the read performance is the same as from a single drive. Eventually I created one volume from two drives in a mirror and another volume from a single drive, and compared read performance by simply copying files to and from the PC. The speeds were practically the same in both cases and matched the rated read/write speed of a single WD8003FFBX, approx. 200 MB/s. The screenshot also shows that the two mirrored drives merely split the read load, each staying below single-drive speed, while the single drive ran at its full read speed and matched the combined speed of the two.
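To separate disk speed from network speed, a purely local read test on the NAS could help. Below is a minimal sketch, assuming Python 3 is available in the TrueNAS shell. The script name and file path are hypothetical. The test file should be larger than the 8 GB of RAM, so that the ZFS ARC cache cannot serve it from memory.

```python
import os
import sys
import time

CHUNK_SIZE = 1024 * 1024  # read in 1 MiB chunks


def measure_read_throughput(path: str, chunk_size: int = CHUNK_SIZE) -> tuple[int, float]:
    """Read the file sequentially; return (bytes read, throughput in MB/s, 10^6 bytes/s)."""
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids an extra Python-level buffer; the OS/ZFS cache still applies
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    rate = total / elapsed / 1e6 if elapsed > 0 else float("inf")
    return total, rate


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 readtest.py /mnt/<pool>/<dataset>/bigfile.bin
    target = sys.argv[1]
    size_gb = os.path.getsize(target) / 1e9
    nbytes, mbps = measure_read_throughput(target)
    print(f"read {size_gb:.1f} GB ({nbytes} bytes) at {mbps:.0f} MB/s")
```

If the local result on the mirror is clearly above approx. 200 MB/s while copies over the network are not, the bottleneck would be somewhere between the NAS and the PC rather than in the pool layout.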

I am not aware of changing any special settings that could cause this. When I ran the original Asustor OS for a while, I verified that the machine as a whole can deliver much higher read speeds. However, I am not an expert, so I might be wrong.

I would appreciate any advice you could give me.
