Kuyper

Dabbler

- Joined: Nov 30, 2014
- Messages: 20

2017-12-31 noon UPDATE: Solved! When rolling back to an earlier OS release (in my case from 11.1 to 11.0-U4), you CANNOT restore a config backup taken on the later release. @Ericloewe pointed this out, and after doing a factory reset (on the 11.0-U4 install) I restored my 9.3 config backup, let it do its reboot jiggery-pokery, and my pool came back online, happy and healthy, even if I'm still shaking a little :) Read through the whole mission below, if you have the stomach for it :)
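The rule from the update above (a config backup can move forward to a newer release, as the 9.3 backup did onto 11.0-U4, but not backward from 11.1 onto 11.0-U4) can be sketched as a simple version check. This is a hypothetical helper, not something FreeNAS ships; `backup_restorable` is an illustrative name, and it assumes release strings like 9.3, 11.0-U4 and 11.1 plus a `sort` that supports `-V` (version sort):

```shell
#!/bin/sh
# Hypothetical helper, NOT part of FreeNAS: decide whether a config backup
# taken on release $1 may be restored onto the running release $2.
# Assumed rule (from the post): same or older backup = OK, newer = refuse.
# Requires a sort(1) that supports -V (version sort).
backup_restorable() {
  backup_ver=$1    # release the backup was made on, e.g. 9.3
  running_ver=$2   # release currently installed, e.g. 11.0-U4
  # Version-sort both strings; restorable only if the running release
  # sorts last (or the two are identical).
  newest=$(printf '%s\n%s\n' "$backup_ver" "$running_ver" | sort -V | tail -n 1)
  [ "$newest" = "$running_ver" ]
}

backup_restorable 9.3 11.0-U4 && echo "9.3 backup onto 11.0-U4: OK"
backup_restorable 11.1 11.0-U4 || echo "11.1 backup onto 11.0-U4: refuse"
```

Version sorting is used instead of a plain string comparison because a lexical sort would put "11.0-U4" before "9.3".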

2017-12-31 morning UPDATE: the panic issue was resolved by rolling back from 11.1 to 11.0.U4 (apparently a known issue) but things are not yet rosy, as the pool imports, but is not mounting correctly, or being seen properly by the UI - scroll down for all the gory details; I'll update here again when that's been solved

Really hoping that @joeschmuck can jump in here, as he has some context in helping me earlier at

https://forums.freenas.org/index.ph...ot-volume-new-device-not-showing-gptid.59880/

I successfully upgraded to FreeNAS 11, and all was good. So I shut my system down and added the first of my new 8TB drives, fired up, and left the system running for a few days to burn in the new drive, let it run through some smarctl tests etc.

I then started the first of the

commands to start the resilvering. I was remote over ssh at the time, but within seconds of starting the zpool replace, I lost complete access to my machine. I just got home some days later, and was greeted with this message :(

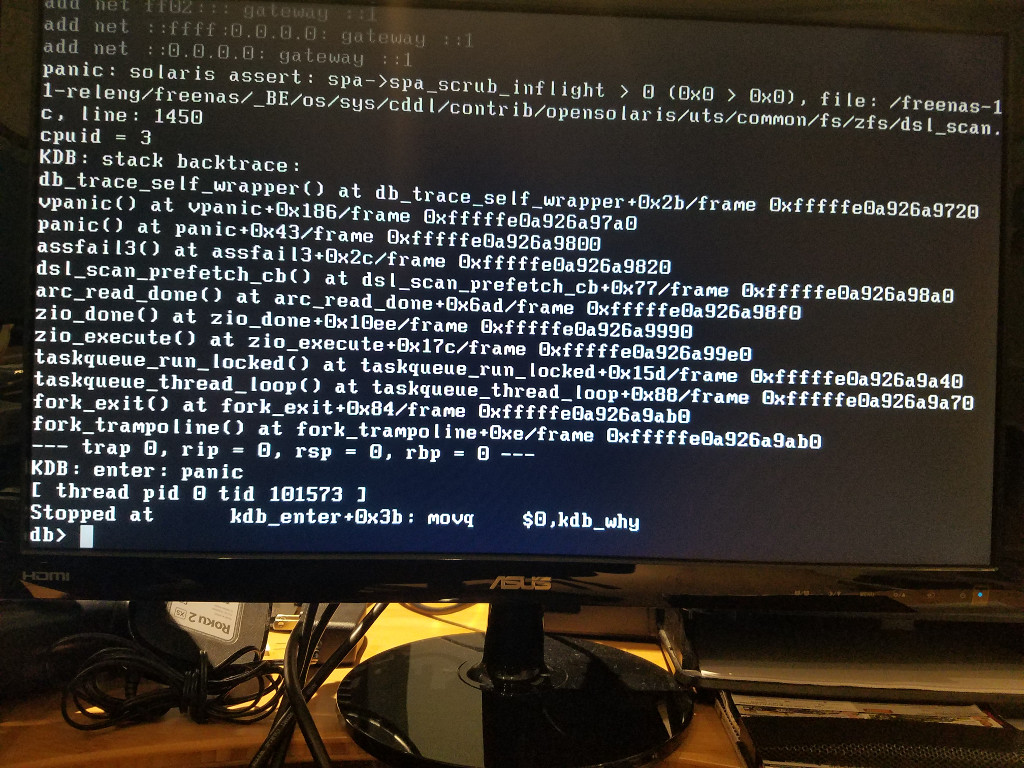

full screen shot below

I followed the steps at

https://forums.freenas.org/index.php?threads/kernel-panic-importing-a-pool.7095/

but even after booting in single and running a

it panics again.

I'm obviously pretty desperate as I have >15TB of, well, everything, just sitting there inaccessible (but hopefully still intact) so pretty much any help appreciated.

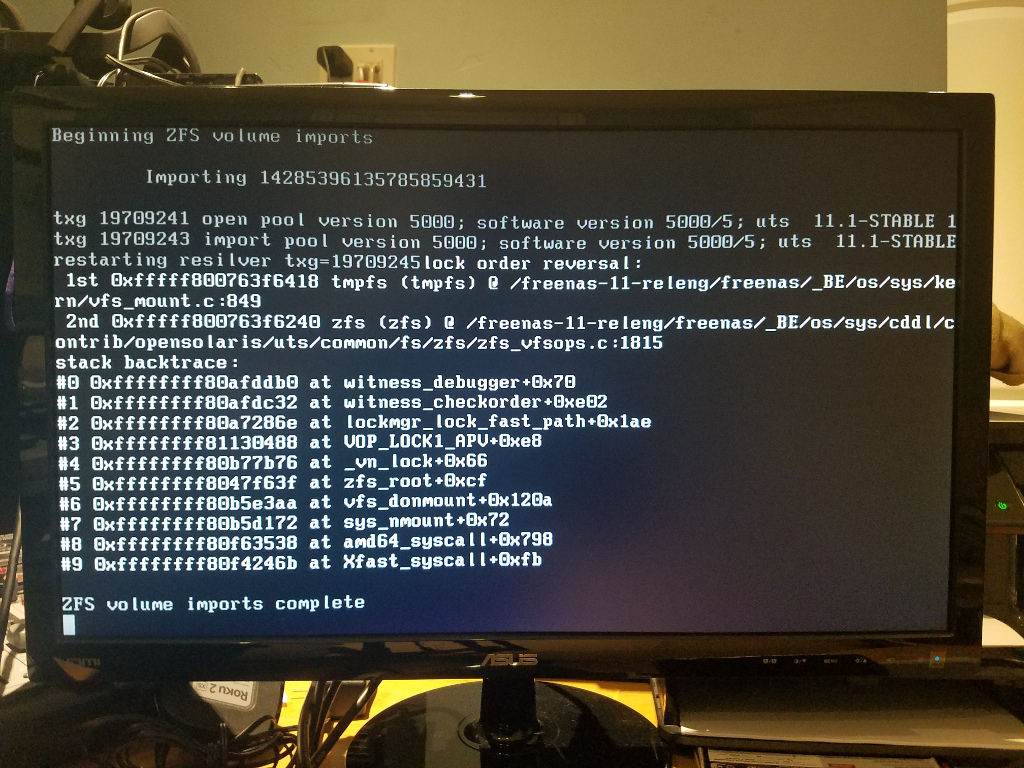

I did a reboot and grabbed some more screenshots as it's coming up, and you can see some references to a resilver starting, but then right after that it panics again. 2nd screenshot below is actually right before the panic

As for the inevitable hardware specs question (note, all was working fine; all I've added is 1 new drive):

The HUH728080AL is the new drive. One of these

https://www.hgst.com/products/hard-drives/ultrastar-he10

in 8TB trim

Screenshot:

Just before it panics, I get this:

You can see it restarting the resilver before that has a stack backtrace itself.

Is my best bet just to pull the new drive and let it monut the old (even if degraded) pool?

Thanks

Kuyper

2017-12-31 morning UPDATE: the panic was resolved by rolling back from 11.1 to 11.0-U4 (apparently a known issue), but things are not yet rosy: the pool imports, but it isn't mounting correctly or being seen properly by the UI. Scroll down for all the gory details; I'll update here again when that's been solved.

Really hoping that @joeschmuck can jump in here, as he has some context from helping me earlier at

https://forums.freenas.org/index.ph...ot-volume-new-device-not-showing-gptid.59880/

I successfully upgraded to FreeNAS 11, and all was good. So I shut the system down, added the first of my new 8TB drives, powered back up, and left the system running for a few days to burn in the new drive, putting it through some smartctl tests etc.

I then started the first of the

Code:

zpool replace

commands to start the resilvering. I was connected remotely over SSH at the time, and within seconds of starting the zpool replace I lost all access to the machine. I only got home some days later, and was greeted with this message :(

Code:

panic: solaris assert: spa->spa_scrub_inflight > 0

Full screenshot below.

I followed the steps at

https://forums.freenas.org/index.php?threads/kernel-panic-importing-a-pool.7095/

but even after booting into single-user mode and running

Code:

zpool import -f -R /mnt tank01   # -f: force the import even if the pool appears active; -R /mnt: import with altroot=/mnt

it panics again.

I'm obviously pretty desperate, as I have more than 15TB of, well, everything just sitting there inaccessible (but hopefully still intact), so pretty much any help is appreciated.

I rebooted and grabbed some more screenshots as it came up. You can see some references to a resilver starting, but right after that it panics again. The 2nd screenshot below is from right before the panic.

As for the inevitable hardware specs question (note: everything was working fine; all I've added is one new drive):

* MoBo: ASRock C216 WS ATX Server Motherboard LGA 1155

* CPU: Intel Xeon E3-1220L V2 @ 2.30GHz (derated to 2 cores / 4 threads for heat/power saving)

* RAM: 32GB ECC (Crucial 240-Pin DDR3 SDRAM ECC Unbuffered DDR3L 1600 (PC3L 12800) Server Memory)

* SAS Controller: LSI SAS9211-8i

Code:

[kuyper@knox ~]$ sudo camcontrol devlist
<ATA HGST HMS5C4040AL A580> at scbus0 target 2 lun 0 (pass0,da0)
<ATA HGST HMS5C4040AL A580> at scbus0 target 3 lun 0 (pass1,da1)
<ATA HGST HMS5C4040AL A580> at scbus0 target 4 lun 0 (pass2,da2)
<ATA HGST HMS5C4040AL A580> at scbus0 target 5 lun 0 (pass3,da3)
<ATA HGST HMS5C4040AL A580> at scbus0 target 6 lun 0 (pass4,da4)
<STEC ZeusIOPs G3 E12B> at scbus0 target 8 lun 0 (pass5,da5)
<STEC ZeusIOPs G3 E12B> at scbus0 target 9 lun 0 (pass6,da6)
<ATA HGST HUH728080AL T7JF> at scbus0 target 15 lun 0 (pass7,da7)
<Samsung Flash Drive FIT 1100> at scbus9 target 0 lun 0 (pass8,da8)

The HUH728080AL is the new drive. One of these

https://www.hgst.com/products/hard-drives/ultrastar-he10

in 8TB trim
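To pick the new drive's device node out of `camcontrol devlist` output like the listing above, something along these lines should work. It is only a sketch: `find_dev_by_model` is a hypothetical helper, and it assumes each devlist line ends with a parenthesised pair of the form `(passN,daN)` or `(daN,passN)`:

```shell
#!/bin/sh
# Hypothetical helper: read `camcontrol devlist` output on stdin and print
# the daN node of every device whose description contains MODEL
# (matched as a literal string, not a regex).
find_dev_by_model() {
  model=$1
  # Keep the matching lines, then extract the "daN" entry from the
  # trailing "(passN,daN)" or "(daN,passN)" group.
  grep -F -- "$model" | sed -n 's/.*[(,]\(da[0-9][0-9]*\)[,)].*/\1/p'
}

# Demo on two lines copied from the devlist output above:
printf '%s\n' \
  '<ATA HGST HMS5C4040AL A580> at scbus0 target 6 lun 0 (pass4,da4)' \
  '<ATA HGST HUH728080AL T7JF> at scbus0 target 15 lun 0 (pass7,da7)' |
  find_dev_by_model HUH728080AL   # prints: da7
```

On the live box the same helper would be fed directly, e.g. `sudo camcontrol devlist | find_dev_by_model HUH728080AL`, which for the listing above should give da7.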

Screenshot:

Just before it panics, I get this:

You can see it restarting the resilver before that has a stack backtrace itself.

Is my best bet just to pull the new drive and let it mount the old (even if degraded) pool?

Thanks

Kuyper
