Hey all,

Currently running TrueNAS-SCALE-23.10.2

The system is an i7-10700K with 32 GB RAM, a Mellanox CX3 and 4x 4TB NVMe drives in a RAIDZ1 config.

There's a minor mismatch in the sizing of the 4TB drives (3.73 TiB vs 3.64 TiB; 2x Crucial drives, 2x Lexar drives), so I lose a little capacity.
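For context on how much the mismatch costs: a RAIDZ1 vdev treats every member as if it were the size of its smallest disk, and roughly one disk's worth of space goes to parity. The rough Python sketch below works through that arithmetic under those assumptions. It ignores ZFS metadata, slop space and padding overhead, so real usable space will be somewhat lower, and the function names are just illustrative. It also shows why a nominal 4 TB (decimal) drive reports as about 3.64 TiB.

```python
# Rough capacity sketch for a 4-disk RAIDZ1 with mismatched drive sizes.
# Assumption: usable ~ (n - 1) x smallest member; ZFS metadata, slop space
# and padding overhead are ignored, so real numbers will be a bit lower.

TIB = 2**40  # bytes in one tebibyte


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10^12 bytes) to tebibytes (2^40 bytes)."""
    return tb * 10**12 / TIB


def raidz1_usable_tib(sizes_tib: list[float]) -> float:
    """Approximate RAIDZ1 usable space: every member is truncated to the
    smallest disk, and one disk's worth of space is used for parity."""
    return (len(sizes_tib) - 1) * min(sizes_tib)


# Two drives at each reported size (which brand is which isn't stated).
drives = [3.73, 3.73, 3.64, 3.64]

usable = raidz1_usable_tib(drives)
# Hypothetical pool where all four drives were the larger size.
if_matched = raidz1_usable_tib([max(drives)] * len(drives))

print(f"4 TB = {tb_to_tib(4):.2f} TiB")
print(f"usable ~ {usable:.2f} TiB, lost to mismatch ~ {if_matched - usable:.2f} TiB")
```

With these figures it prints roughly 3.64 TiB for a 4 TB drive, about 10.92 TiB usable, and about 0.27 TiB lost compared with four matched 3.73 TiB drives.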

A day or two ago I noticed that my server suddenly had a few issues: namely, two of the four NVMe drives had disappeared and were no longer even detected by the system. Fearing the worst (i.e., two NVMe drives failing simultaneously, though what are the chances of that, considering they're from different batches?), I started stripping the system down to investigate.

I tried several different drive configurations (e.g., standalone alternative drives, and random combinations in various M.2 slots), and was able to confirm that the drives in question weren't dead (i.e., they were detected by the BIOS and from the command line). I was still getting erratic behavior, however: sometimes the PC would POST, other times it wouldn't, drives would randomly not show up, etc.

Long story short, I'm now 99% sure it's the power supply, as switching to an alternative seems to have it working just fine now. I'll experiment more with PSU cables, but I'm pretty damn sure it's the PSU itself.

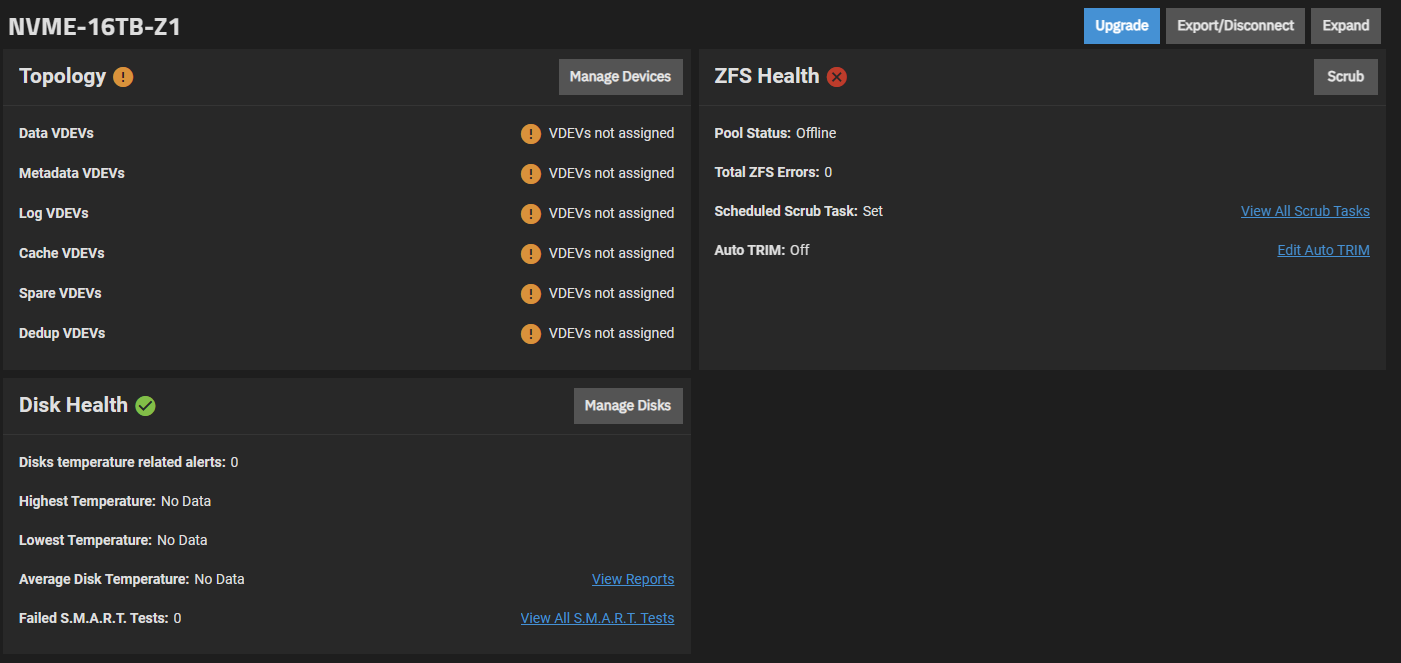

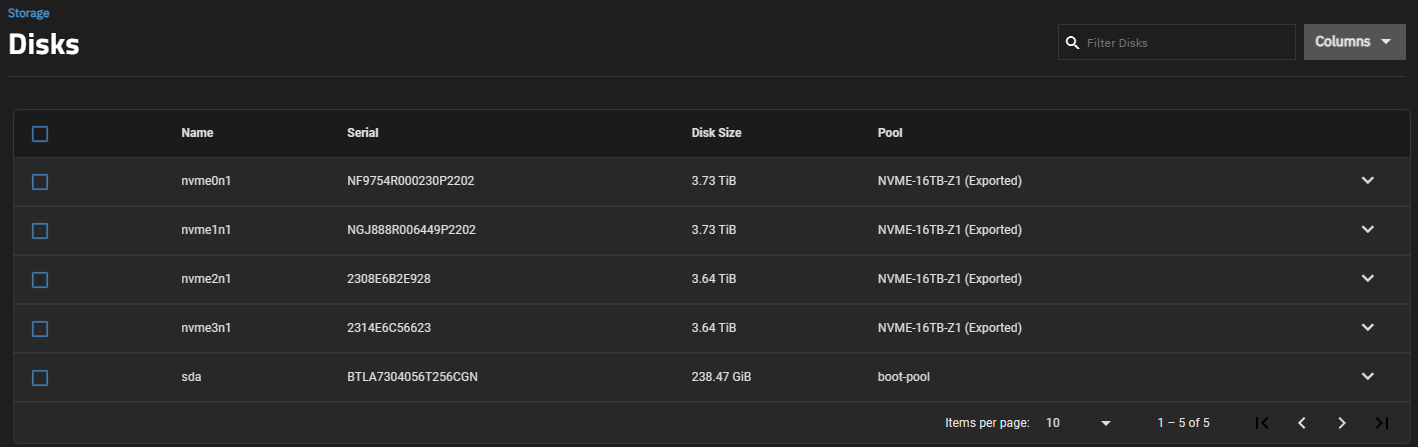

Now the issue I'm having is that all the drives in my pool are showing as exported, while the pool itself is not:

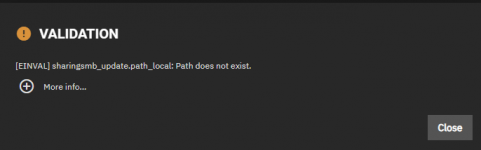

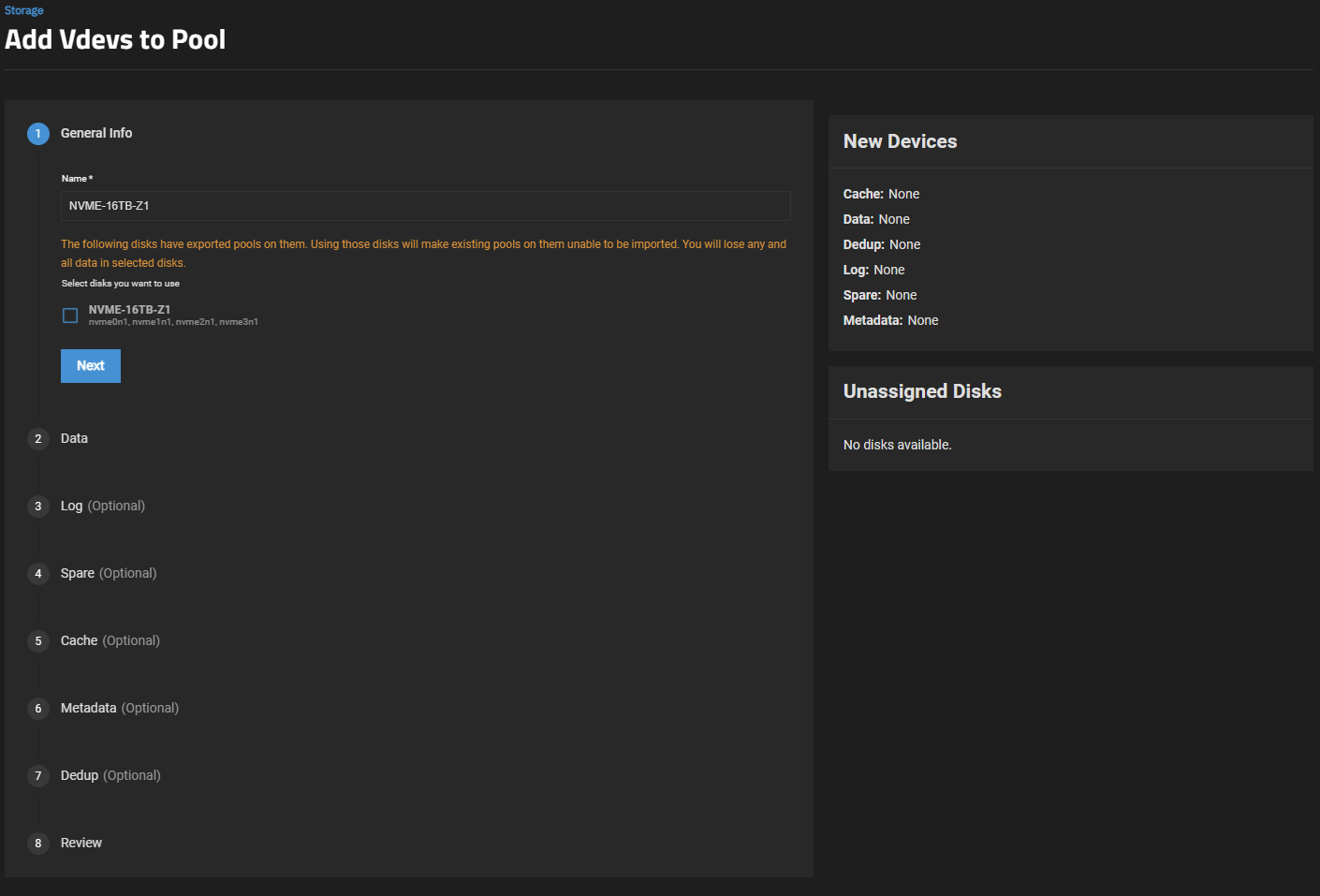

When I try to use the "Add to Pool" function under Storage (which shows 4 Unassigned Disks) to add them back to the existing/original pool (NVME-16TB-Z1), it basically tells me the disks have exported pools on them and that I'll lose the associated data. Obviously, I didn't go ahead with this.

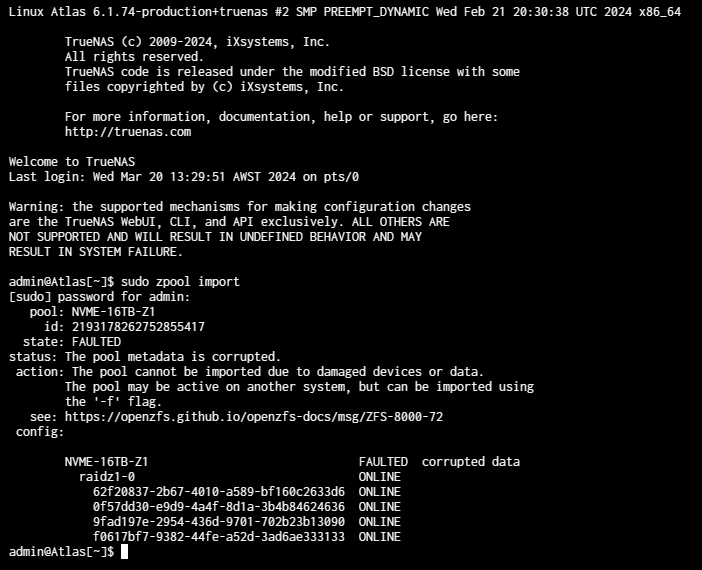

I then started looking at the shell options to import the pool, and I'm given this:

It seems I can force the pool import (using `-f`), but is this the correct thing to do? I'm fairly confident the data on the disks is intact, as the pool wouldn't have been under any reads/writes when the failure occurred. We had a power outage the other night, and all this started the following morning (despite the server being on a UPS). I suspect the outage may have caused the PSU failure as well (or vice versa).

Any help trying to re-import my disks into the pool is *greatly* appreciated!