Yves_ (Dabbler, joined Jun 4, 2020, 11 messages)

Hi fellow FreeNASers,

I am really new to this forum. So I hope I am doing everything according to the forum rules. Otherwise please tell me.

I just finished building my first FreeNAS lab storage with old parts I got for a very good price.

- Main Chassis: Supermicro SuperChassis 847E16-R1400LPB (Link) has two Backplanes (Front: 24 Drives | Back: 12 Drives)

- Mainboard: Supermicro X9DR3-F/i (Link)

- CPU: 2x Intel(R) Xeon(R) CPU E5-2643 0 @ 3.30GHz (Link)

- RAM: 16x 16GB DDR3, for a total of 256GB

- SystemDisk: Supermicro SATA-DOM 32GB

- Additional NIC: 1x Mellanox ConnectX3-Pro Dual Port 40Gbps (Link), 1x some Intel Dual Port 10Gbps SFP+

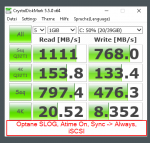

- Slog / Cache Drive: Intel Optane 900p 280GB (with 2 Partitions) 60GB for SLOG / rest for Cache

- Controller: LSI SAS9305-24i (Link) with the Supermicro Bracket AOM-SAS3-16i16e-LP adapter so I can use the two SAS Backplanes from the SuperChassis 847E16-R1400LPB and the two JBOD Extensions SuperChassis 847E16-RJBOD1

- JBOD Extensions: Supermicro SuperChassis 847E16-RJBOD1 (Link) each has also two Backplanes (Front: 24 Drives | Back: 21 Drives)

- Disks: 52x Western Digital White Label 8TB WD80EZAZ SATA (I think they are 5400 rpm drives)

Everything is up and running and works flawlessly as far as I can tell.

My idea was the following:

The goal is a smaller but faster pool for the VMs (connected through iSCSI) with SLOG and cache on the Intel Optane 900p, and a bigger pool without a dedicated SLOG or cache drive for backups (mostly large single files), mainly over SMB. So I thought I would create a pool vm-datastore01 with two vdevs (each with 4 drives in RAIDZ2) and partition the Optane with 60GB for SLOG and the rest (around 200GB) for cache. For the other pool, backup-store01, with 44 drives, I would create 4 vdevs of 11 drives each in RAIDZ1, with no cache and no SLOG. Any objections?
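To put numbers on the two layouts, here is a rough sketch of the raw data capacity each one would give. It only subtracts parity disks; it ignores ZFS metadata, RAIDZ padding, and the free space a pool should keep, so real usable space will be lower. The function name `data_capacity_tb` is just made up for this illustration.

```python
DISK_TB = 8  # WD80EZAZ capacity as marketed (decimal TB)

def data_capacity_tb(vdevs, width, parity, disk_tb=DISK_TB):
    """Raw data capacity: each RAIDZ vdev gives up `parity` disks to parity."""
    return vdevs * (width - parity) * disk_tb

# vm-datastore01: 2 vdevs, 4 disks each, RAIDZ2 (2 parity disks per vdev)
vm_tb = data_capacity_tb(vdevs=2, width=4, parity=2)

# backup-store01: 4 vdevs, 11 disks each, RAIDZ1 (1 parity disk per vdev)
backup_tb = data_capacity_tb(vdevs=4, width=11, parity=1)

print(f"vm-datastore01: {vm_tb} TB of data space from {2 * 4} disks")
print(f"backup-store01: {backup_tb} TB of data space from {4 * 11} disks")
```

So the VM pool would spend half of its 8 disks on parity, while the backup pool would have a single parity disk protecting every 10 data disks.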

I am also very unsure about the 24- and 12-drive backplane situation, and about what happens if a complete backplane fails. Should I only build data vdevs from disks on the same backplane? Does it matter? Should I keep the vdevs within one complete system and put new vdevs on the first JBOD? And how do I check whether my performance is in the range it should be?
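The backplane question can at least be framed as simple counting: a RAIDZ vdev stays available only while the number of missing disks is at most its parity level, so what matters is how many disks of each vdev sit behind the same backplane. Below is a small sketch under that assumption. The backplane labels and the disk placement are hypothetical, not my actual cabling, and it assumes a failed backplane simply makes all of its disks unavailable at once.

```python
import math
from collections import Counter

def stays_online(vdevs, parity, failed_backplane):
    """vdevs: list of vdevs, each a list with one backplane label per disk.

    The pool stays online only if every vdev loses at most `parity` disks.
    """
    return all(Counter(v)[failed_backplane] <= parity for v in vdevs)

def worst_case_per_backplane(width, backplanes):
    """Best achievable spread: the fullest backplane still holds this many disks."""
    return math.ceil(width / backplanes)

# Hypothetical placement for vm-datastore01 (2x 4-disk RAIDZ2),
# with each vdev split evenly across two backplanes.
vm_spread = [["head-front", "head-front", "head-back", "head-back"],
             ["head-front", "head-front", "head-back", "head-back"]]
print(stays_online(vm_spread, parity=2, failed_backplane="head-front"))

# Same pool, but each vdev entirely behind a single backplane.
vm_packed = [["head-front"] * 4, ["head-back"] * 4]
print(stays_online(vm_packed, parity=2, failed_backplane="head-front"))

# 11-disk RAIDZ1 vdevs spread over the 6 backplanes (head unit + two JBODs).
print(worst_case_per_backplane(width=11, backplanes=6))
```

If that counting is right, an 11-disk RAIDZ1 vdev spread over 6 backplanes always has at least 2 disks behind one of them, which is more than RAIDZ1 can lose, while a 4-disk RAIDZ2 vdev split 2 and 2 across two backplanes would stay up if either one failed.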

Thanks for your feedback,

Yves
