originalprime

Dabbler · Joined: Sep 22, 2011 · Messages: 30

Hello All,

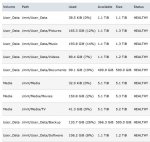

Either I'm really tired and have forgotten all of my math skills, or something isn't adding up. I have three 1.5TB drives configured in a RAIDZ1 volume called "User_Data", which should give something in the ballpark of 3TB of usable space, formatting notwithstanding. Per the attached screenshot, it is reporting only 1.1TB of total space, and also 1.1TB of available space.
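As a rough check of that "ballpark of 3TB" figure: a RAIDZ1 vdev gives up about one disk's worth of capacity to parity, so three 1.5TB disks leave about 2 × 1.5TB = 3TB, which is roughly 2.73TiB in the binary units many tools display. This is only an approximation; it ignores ZFS metadata, allocation padding, and any space the system holds back, and `raidz_usable_bytes` is a hypothetical helper, not part of ZFS.

```python
# Rough RAIDZ usable-capacity estimate (hypothetical helper, not a ZFS API).
# Ignores metadata, padding, and reserved space, so real figures come out lower.

TB = 10**12   # drive vendors label disks in decimal terabytes
TIB = 2**40   # many tools report binary tebibytes

def raidz_usable_bytes(disk_count: int, disk_bytes: int, parity: int = 1) -> int:
    """Approximate usable bytes of one RAIDZ vdev: (disks - parity) x disk size."""
    if disk_count <= parity:
        raise ValueError("need more disks than parity devices")
    return (disk_count - parity) * disk_bytes

# Three 1.5TB disks in RAIDZ1 (one parity disk)
usable = raidz_usable_bytes(disk_count=3, disk_bytes=1_500_000_000_000, parity=1)
print(f"{usable / TB:.2f} TB")    # 3.00 TB
print(f"{usable / TIB:.2f} TiB")  # 2.73 TiB
```

Either way, the expected figure is well above the 1.1TB being reported.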

I have only one reservation set: 500GB on a dataset called "Backup". The other datasets have no reservations or quotas. Something simply isn't adding up.
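Why a single 500GB reservation shouldn't explain the gap can be seen from a simplified model of ZFS reservations: while a dataset uses less than its reservation, it is accounted as if it used the full reservation, so only the unfilled remainder is withheld from other datasets, and the reduction is at most the reservation size. This sketch assumes that simplified model only (no quotas, refreservations, snapshots, or nested datasets), and `avail_to_others` and the numbers in it are hypothetical.

```python
# Simplified model of a ZFS "reservation" (hypothetical helper, not a ZFS API).
# A dataset below its reservation counts as using the whole reservation,
# so only the unfilled remainder is withheld from the other datasets.

GB = 10**9

def avail_to_others(pool_free: int, reservation: int, reserved_used: int) -> int:
    """Free bytes left for other datasets after honoring one reservation.

    pool_free:     bytes free in the pool, counting only data actually written
    reservation:   the reservation set on one dataset
    reserved_used: bytes that reserved dataset has actually written
    """
    withheld = max(0, reservation - reserved_used)
    return max(0, pool_free - withheld)

# Illustrative numbers only: an empty "Backup" dataset with a 500GB reservation
print(avail_to_others(pool_free=2_700 * GB, reservation=500 * GB, reserved_used=0) // GB)  # 2200
```

Under this model, the reservation can hide at most 500GB, nowhere near the difference between roughly 3TB and 1.1TB.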

Is this a bug worth reporting, or can someone slap me back into reality and explain what's going on?

Thanks,

-Prime