chriswiggins (Dabbler, joined Mar 4, 2018, 11 messages)

Hi all,

Quick intro: long-time supporter of FreeNAS, but only in the past year have I had the privilege of using it in a commercial setting.

We've got 3 iXsystems FreeNAS-Certified boxes, purchased last year, to store archived CCTV footage from a Milestone XProtect Corporate VMS. Every hour, the platform automatically moves CCTV footage across to these NAS boxes from the SAN it is initially written to (this is part of Milestone's Live/Archive architecture).

NAS configuration (3 identical boxes):

- 2x Intel Xeon E5-2609 v4 CPUs
- 128GB RAM
- 1x 200GB SLOG (ZIL) device (Intel DC S3710)
- 1x 240GB L2ARC device (Intel DC S3520)
- 24x HGST 8TB NL-SAS drives (H4K)
- 1x dual-port 10G NIC (DAC version)
- LSI 9300-8e SAS HBA

These came preconfigured as 4x RAIDZ2 vdevs of 6 drives each.

Recently, as we've added more and more cameras to the platform, we've seen read latency increase, and based on what I've read I'm wondering whether we'd have been better off with 12 mirrored vdevs. The read latency is making playback of footage stutter and skip ahead. I've lodged a support case with Milestone and am waiting to hear more from them, but, as expected, they've initially pointed the finger at our beloved NAS setup!
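To put rough numbers on the RAIDZ2 vs mirrors question, here is a back-of-the-envelope sketch in Python. It uses the common rule of thumb that a RAIDZ vdev delivers roughly the random IOPS of a single member disk, while reads from a mirror can be served by either side. The 150 IOPS per disk is an assumed figure for a 7200 rpm NL-SAS drive, not a measurement from these boxes, and the capacities ignore ZFS metadata, padding, and TB vs TiB.

```python
# Back-of-the-envelope comparison of the two pool layouts discussed above.
# ASSUMPTION: ~150 random IOPS per 7200 rpm NL-SAS disk (not measured here).
DRIVE_TB = 8
DISK_IOPS = 150


def raidz_layout(vdevs, width, parity, drive_tb, disk_iops):
    """Return (data capacity in TB, rough random read IOPS) for RAIDZ vdevs."""
    data_tb = vdevs * (width - parity) * drive_tb
    # Rule of thumb: each RAIDZ vdev behaves like roughly one disk for random I/O.
    read_iops = vdevs * disk_iops
    return data_tb, read_iops


def mirror_layout(vdevs, width, drive_tb, disk_iops):
    """Return (data capacity in TB, rough random read IOPS) for mirror vdevs."""
    # Each mirror vdev stores a single copy's worth of data.
    data_tb = vdevs * drive_tb
    # Reads can be served by any disk in a mirror, so every disk contributes.
    read_iops = vdevs * width * disk_iops
    return data_tb, read_iops


print("4 x 6-wide RAIDZ2:", raidz_layout(4, 6, 2, DRIVE_TB, DISK_IOPS))  # (128, 600)
print("12 x 2-way mirror:", mirror_layout(12, 2, DRIVE_TB, DISK_IOPS))   # (96, 3600)
```

Under these assumptions, mirrors would give up about a quarter of the data capacity in exchange for roughly six times the random read IOPS. How much that matters depends on how random Milestone's playback reads actually are, so treat it as a rough guide only.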

We have a 10G Juniper switched network between our servers and NAS boxes, running jumbo frames, and we see excellent throughput across it. Milestone is configured to use SMB/CIFS for the file share, so there's no iSCSI funny business going on.

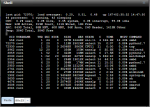

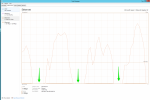

What has me looking at the ZFS setup is the alarming number of pending I/O requests on some of the disks (see attached image). There doesn't seem to be any rhyme or reason to it, so I'm at a loss as to what could be going on. We also don't see the bottleneck during periods with no write activity, which is about 50% of the time (Milestone archives once an hour, and each run takes about 30 minutes).
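One way to check whether the queued I/O really lands on random disks or follows a pattern is to sample `gstat` on the NAS and flag disks whose queue length (the `L(q)` column) sits well above the median. Below is a minimal Python sketch. The helper names and the 4x-median threshold are hypothetical choices, and the parser assumes the FreeBSD `gstat -b -p` layout, with `L(q)` as the first column and the provider name as the last.

```python
import statistics
import subprocess


def gstat_snapshot():
    """Run FreeBSD gstat once in batch mode (-b), physical providers only (-p)."""
    result = subprocess.run(
        ["gstat", "-b", "-p"], capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_gstat(text):
    """Map provider name -> L(q) queue length from gstat batch output."""
    queues = {}
    for line in text.splitlines():
        fields = line.replace("|", " ").split()
        # Skip the "dT:" summary line and the column header row.
        if len(fields) < 2 or not fields[0].isdigit():
            continue
        queues[fields[-1]] = int(fields[0])
    return queues


def flag_outliers(queues, factor=4, floor=2):
    """Return (name, queue) pairs well above the median queue, longest first."""
    if not queues:
        return []
    limit = max(floor, factor * statistics.median(queues.values()))
    busy = [(name, q) for name, q in queues.items() if q > limit]
    return sorted(busy, key=lambda item: item[1], reverse=True)
```

Running `flag_outliers(parse_gstat(gstat_snapshot()))` several times during an archive run, and again outside it, would show whether the same disks (or disks from the same vdev) keep turning up. That helps separate a single slow drive from general contention between the hourly archive writes and playback reads.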

Any ideas of places to look or things to check would be much appreciated!

Cheers
