Hello to everyone,

I have been using TrueNAS for some time and I would like your help with the following findings.

Supermicro X10SL7-F

Xeon E3-1245 v3

32GB RAM ECC

LSI 2308 (on board), IT mode (passthrough to TrueNAS)

4x2TB, ZFS mirror, VMFS6 datastore

Intel 82599 10Gb

Everything updated to the latest firmware.

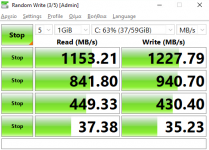

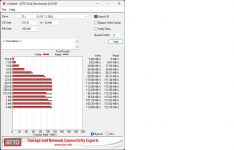

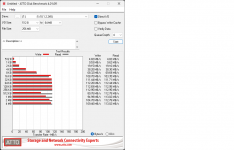

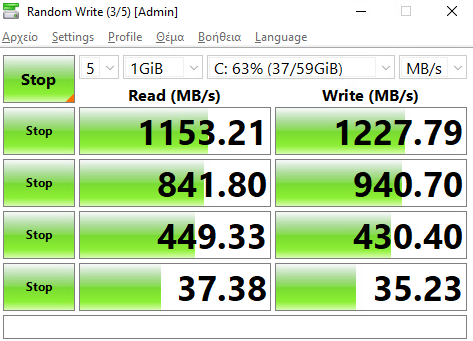

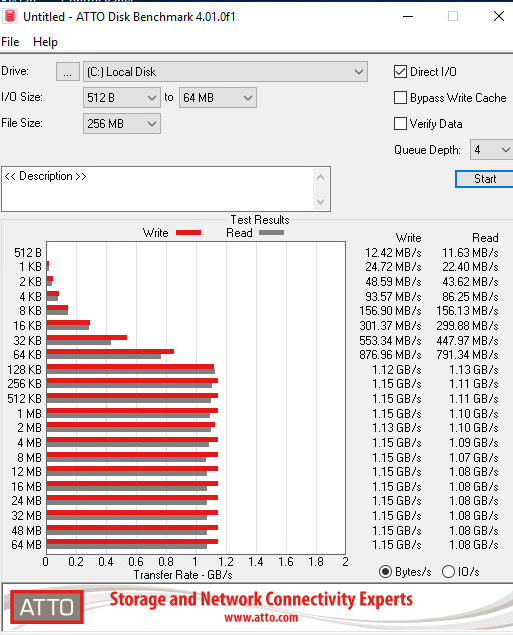

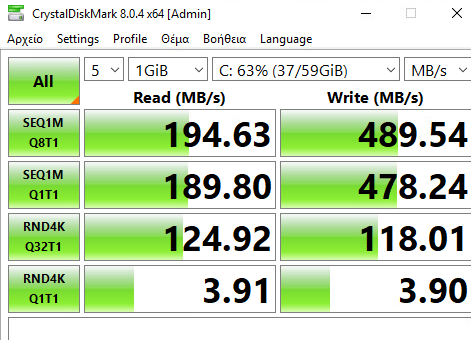

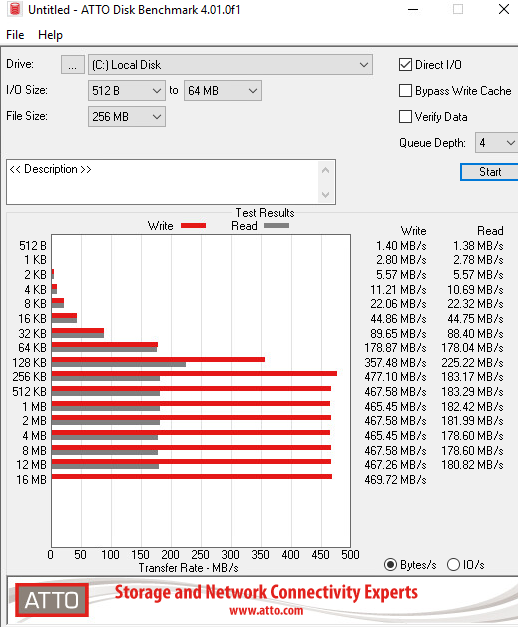

I haven't run extensive performance tests, just ATTO Benchmark and Crystal Mark.

Bare metal installation:

ESXi 7.0 U2, VM installation (LSI 2308 and Intel 82599 passthrough), no other VM:

I am getting significantly lower performance in the VM installation compared to bare metal.

I have tried TrueNAS Auto-tune, moving the network card to a different PCIe slot, and several BIOS and VM settings, with no success.

I realize that I might not get the same performance in a VM, but the gap seems too big to me.

Any thoughts or suggestions would be greatly appreciated.

Thank you in advance.
